"There are lots of discussions about using database[s] in Windows Store apps in MSDN forum[s]," reads a brand-new blog post by Microsoft's Robin Yang on MSDN.

Yes, developers are apparently still struggling with data access in the new Windows 8 ecosystem.

A quick check bears this out. In fact, just a week ago, a developer asked, "Is [it] possible to use 'LINQ to SQL' database in Windows 8 metro apps--or any other easy option is there to use local database?"

The answer was predictable: "It seems there is no official announcement of support for Linq to Sql or EF for database access in Windows 8 Metro Apps. You can try to use Web services to access the data."

Such questions have appeared on StackOverflow.com for well more than a year. A few examples:

Many reader answers point to using SQLite, which is exactly what Yang's post did (the post credits Aaron Xue as the author of its content, though Robin Yang posted it).

I earlier touched on and provided links for a few other options such as IndexedDB and Web services/the cloud.

But HTML5/JavaScript seems to be the popular programming model of choice for Windows Store apps, and Yang has also conveniently provided a three-part series on this (authored by Roy Tian), titled "Using HTML5/JavaScript in Windows Store apps: Data access and storage mechanism." You can find this series (along with other posts) on the Windows Store apps development support blog page.

So check out these latest posts to bone up on Windows Store app data access--and perhaps keep waiting for SQL Server CE support.

What do you think about data access in Windows Store apps? Please share your thoughts here or drop me a line.

Posted by David Ramel on 01/11/2013 (0 comments)

A recent report from research firm International Data Corp. (IDC) provides further proof that data is king when it comes to software development. The Application Development & Deployment (AD&D) market is expected to grow at a higher rate in 2013 after slow sales in late 2012, and some of the hottest segments of that market revolve around data-related development, IDC reported.

"Within the AD&D markets, the Relational Database Management Systems (RDBMS) market stands out with a 34% market share. It is by far the biggest individual market," IDC said. "Unlike other mature markets, RDBMS is forecast to outperform most AD&D markets with high single-digit growth in 2013 and beyond." Oracle dominates that market, IDC said, with nearly a 50 percent market share.

Also poised for revenue growth is Data Integration and Access Software, described by IDC as "a structured data management market with revenues of more than $4 billion . . . experiencing growth on par with the RDBMS market with which it has a close relationship." IBM dominates that market, the research firm said, and rules the overall AD&D market with Oracle and Microsoft.

No surprise, IDC said the highest market growth is expected in the predictable areas, "where markets are aligning with or supporting mobile, cloud, social and big data areas."

The information was released by IDC in conjunction with its Worldwide Semiannual Software Trackers project, a paid service.

What do you think about the growth prospects for data developers in the coming years compared to other app development? Please comment here or drop me a line.

Posted by David Ramel on 01/02/2013 (0 comments)

Dino Esposito isn't asking for much from Santa this year. Nothing new or bleeding-edge. In fact, he kind of wants to step back in time, in search of simplified SQL querying:

I'd love to have back a framework that was in beta testing and probably even in production around SQL Server a decade ago: making queries in plain English, like "give me all customers based in WA." The code was amazingly able to make most of them--or at least get close, anyway. I'm working on a simplified version of it--so it would really be great to have it from Santa!

Esposito is talking about English Query, a project for SQL Server 2000 that he was involved in some 13 years ago. Esposito, a well-known developer, book and article author, presenter, trainer and all-around technical expert based in Italy, shared his thoughts with me in an informal survey I took of data developers with equally sparkling credentials, asking what their data development holiday wishes were. Following are some of their thoughts.

Dr. James McCaffrey, who manages training for Microsoft software engineers in Redmond, among many other projects, had this to say:

I get the feeling that there's a lot of flux with MVVM, MVC, and MVP and so my wish (assuming that I'm right and that there is flux) is to see some stability emerge here.

McCaffrey is right about most things, to understate it, and a lot of Microsoft products do seem to be in transition, so some stability in 2013 would be nice.

Brandon Satrom is an HTML5 expert, among a lot of other things, at Telerik.

For us, the biggest wish on the list is for a FULL OData implementation for both MVC and WebAPI. The end result we're looking for is the ability to fully and dynamically query a dataset based on URL parameters. Full OData support would be an awesome start, and if we're extra lucky this year, perhaps Dynamic LINQ integration for Entity Framework as well.

Fellow Teleriker Chris Sells is vice president of the Developer Tools Division at the company.

I think what most data developers want for Xmas is an end-to-end, offline-enabled, client-side and mobile-focused data source stack. The occasionally connected story is a hard one for any of the mobile OSes (and it's no picnic for desktop OSes, either), so something simple, capable, robust and cross-platform for client-side data story is what I'm looking for in my Xmas stocking from Santa!

Noted author Peter Vogel is a principal at PH&V Information Services.

What I'd like is some reliable way to move changes from development to production that won't drive my DBA crazy. Microsoft's new SQL deployment package is great--but if deploying a package on my Web server causes changes in my database, my DBA is going to [Editor's note: just substitute "do painful things to me" here; suffice it to say that Vogel's DBA has some anger management issues], (and I'm opposed to that).

Some reliable tool to estimate "response time under load" would be great. It would (a) take a picture of how busy my database server is over the course of a day and, (b) estimate the response time for all the data access operations in my application (and tie those operations to my UI and services). I'd then specify how much each part of my UI and my SOA will be used in production, and the tool would estimate my response time for each UI component or service operation throughout the day, highlighting those that exceed some allowable limit.

Jeremy Likness, multiple book author and principal consultant for Wintellect LLC in Atlanta, thinks some of his wishes might be coming.

True asynchronous support in Entity Framework and other data providers. Not just wrapping requests in a task, but the actual asynchronous implementation that will scale correctly in highly concurrent environments.

Better/easier extensibility of OData across various producers (that is, WCF 4) and consumers.

Consistent APIs across platforms--that is, a standard data solution for Windows 8, Windows Phone 8, the server, and so on that can be accessed through a common API so it's not a completely different repository and data access layer for each implementation.

Stronger support in database projects for schema changes--that is, I know there is the compare/publish, but an explicit way to write in migrations so you can have push-button updates out of the box, for example, I iterate within a sprint and change a few items, I'm readily prompted to fill in any issues with the schema (default, move data and seed data) and I get two specific outputs: a creation script (start from scratch) and a migration script (upgrade from previous iteration)--again, as part of a build and not an interactive schema compare.

Sean Iannuzzi is a solutions architect for The Agency Inside Harte-Hanks. He took a lot of his precious time to give me an extremely detailed reply. It's great stuff, so I'm sharing it all with you.

What developers want as the perfect data improvement gift would be providing easier data integration from Entity Objects to Data Contracts, Model objects that are exportable as fixed data and including complete model data lists when working with Views in Razor (Data Model Extension).

Entity Objects to Data Contracts or Model Objects

Most of the time, when building Web sites or applications, either data contracts or models are needed to support various differences in the UI versus the data layer. As a result, a mapping exercise is needed to link the two together. I usually use AutoMapper, as it handles this mapping very well, but it would be awesome if this was included as part of the [.NET] framework.

Export Compact Data Elements

Another item related to data development that would be a great feature would be if certain fields in data contracts could be marked for different levels of return options. For example, at times, I may want to lazy load all of my data and only need the IDs and not all of the data associated to the data contracts. What would be awesome would be a way to annotate the data fields with levels that would control when it would be included with the return set. Something such as, deep contract member, medium contract member and light contract member, which could be added at the field level. Light contracts could just include the ID fields, medium would include ID fields and the parent records, and the deep contracts could return all data in the hierarchy structure. What would be really awesome is if this was figured out for you automatically, but that's just a wish and very unlikely.
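To make the light/medium/deep idea concrete, here is a minimal sketch in JavaScript. It is an illustration only, not Iannuzzi's code and not an existing framework feature: the `LEVELS` table, the `orderContract` annotations and the `project` function are all hypothetical names made up for this example.

```javascript
// Hypothetical level ordering: a request for "medium" also returns "light" fields.
const LEVELS = { light: 0, medium: 1, deep: 2 };

// Hypothetical field-level annotations: each field names the lightest
// contract level at which it should be returned.
const orderContract = {
  id: 'light',
  customerId: 'light',
  customer: 'medium',   // parent record
  lineItems: 'deep',    // full child hierarchy
};

// Return a copy of `record` holding only the fields whose annotated level
// is at or below the requested level.
function project(record, contract, level) {
  const wanted = LEVELS[level];
  if (wanted === undefined) {
    throw new Error('Unknown contract level: ' + level);
  }
  const result = {};
  for (const field of Object.keys(contract)) {
    if (LEVELS[contract[field]] <= wanted && field in record) {
      result[field] = record[field];
    }
  }
  return result;
}

const order = {
  id: 7,
  customerId: 42,
  customer: { id: 42, name: 'Contoso' },
  lineItems: [{ id: 1, sku: 'A-100' }],
};

console.log(project(order, orderContract, 'light'));
console.log(project(order, orderContract, 'medium'));
```

Here the "light" projection carries only the two ID fields, "medium" adds the parent record, and "deep" would return everything in the contract.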

Fixed Format Export Options

At times, exports are needed for data that's in a fixed field format that's used in a Web application or service. A great feature would be to allow annotations to support how the data could be exported and then, through reflection, pull in the attributes based on the model.

Something such as:

[AttributeUsage(AttributeTargets.All)]
public class FlatFileAttribute : System.Attribute
{
    public int fieldLength { get; private set; }
    public int startPosition { get; private set; }

    /// <summary>
    /// File Attribute constructor to set
    /// the start position and field length
    /// </summary>
    /// <param name="startPosition"></param>
    /// <param name="fieldLength"></param>
    public FlatFileAttribute(
        int startPosition, int fieldLength)
    {
        this.startPosition = startPosition;
        this.fieldLength = fieldLength;
    }
}
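The attribute above only records where a field starts and how long it is; something still has to read that metadata and lay out each record. A rough JavaScript sketch of that second step follows. It is not part of Iannuzzi's reply: the `customerLayout` object stands in for the attribute metadata, `toFixedWidth` is a hypothetical helper, and the 0-based start positions are an assumption.

```javascript
// Hypothetical layout metadata, playing the role of the FlatFile attribute:
// each field gets a 0-based start position and a fixed length.
const customerLayout = {
  id:    { startPosition: 0,  fieldLength: 5 },
  name:  { startPosition: 5,  fieldLength: 10 },
  state: { startPosition: 15, fieldLength: 2 },
};

// Write each field into its slot, truncating long values and
// padding short ones with spaces.
function toFixedWidth(record, layout) {
  const width = Math.max(
    ...Object.values(layout).map(f => f.startPosition + f.fieldLength));
  const chars = new Array(width).fill(' ');
  for (const [field, { startPosition, fieldLength }] of Object.entries(layout)) {
    const value = record[field];
    const raw = value === undefined || value === null ? '' : String(value);
    const text = raw.slice(0, fieldLength).padEnd(fieldLength, ' ');
    for (let i = 0; i < fieldLength; i++) {
      chars[startPosition + i] = text[i];
    }
  }
  return chars.join('');
}

const line = toFixedWidth({ id: 42, name: 'Contoso', state: 'WA' }, customerLayout);
console.log(JSON.stringify(line)); // "42   Contoso   WA"
```

In the C# version Iannuzzi describes, the layout would come from reflecting over the model's attributes rather than from a hand-written object.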

Razor Data Model Extension

The last feature that I would like automatically included is the ability to map data elements from a hierarchical model to a view without the need of an extension and for the data fields to be included as part of the model. For example, if you have a model with a list of subelements and you are creating them on the view, they will be null by default. To remedy this, I usually create an extension method so that the data is included with the model--so that all model fields are included for an object such as Parent.Children, where children is a collection beneath the Parent object. This would be a nice feature as well.

// Note: GetIdsToReuse and BeginHtmlFieldPrefixScope are helper methods
// that are not shown in this excerpt.
public static IDisposable BeginCollectionItem(
    this HtmlHelper html, string collectionName)
{
    return BeginCollectionItem(html, collectionName, "", "");
}

public static IDisposable BeginCollectionItem(
    this HtmlHelper html, string collectionName,
    string prefix, string suffix)
{
    // Take the next ID from the reuse queue if one is available;
    // otherwise generate a fresh one.
    var idsToReuse =
        GetIdsToReuse(html.ViewContext.HttpContext, collectionName);
    string itemIndex = idsToReuse.Count > 0 ? idsToReuse.Dequeue() :
        Guid.NewGuid().ToString();

    // Write a hidden ".index" field carrying this item's index.
    html.ViewContext.Writer.WriteLine(
        prefix + string.Format(
            "<input type=\"hidden\" name=\"{0}.index\" autocomplete=\"off\" value=\"{1}\" />",
            collectionName, html.Encode(itemIndex)) + suffix);

    return BeginHtmlFieldPrefixScope(
        html, string.Format("{0}[{1}]", collectionName, itemIndex));
}

I'd like to thank all of these guys for taking the time to share their thoughts with you. And I'd like to continue the conversation. What would you like to see in the coming year in terms of data development technologies? Please comment here or drop me a line.

Posted by David Ramel on 12/20/2012 (1 comment)

I've been fooling around with REST services, getting JSON data back from free online sources and displaying it in Web or Windows Store apps via a ListView or FlipView, and so on.

After experimenting with the Windows Azure Mobile Services, which simplifies the back-end data-access process and lets you easily set up your own services, I was trying out other APIs and just had to pass on my latest discovery: beer.

Yup, there's an Open Beer Database, described as "a free, public database and API for beer information." Now, that's my kind of information. Not that I'm a lush or anything, but a beer API seems appropriate for these stressful times, what with the end of the world coming--and, worse yet, a holiday stay with the in-laws and family circus if civilization survives the predicted apocalypse. Then there's climate change, earth-destroying asteroids, sovereign insolvency, the fiscal cliff and Gangnam Style (hey, if you're talking Korean madmen druthers, give me that whacky Kim Jong Un and his ballistic missile toys in the North over Psy and his garbage music in the South any day).

Anyway, note that the Open Beer API "is currently a work-in-progress and is subject to change without notice." It returns data in JSON or JSONP (to work around cross-domain calls). It provides the usual CRUD operations via HTTP verbs GET, POST, PUT and DELETE and lets you retrieve breweries or beers, both as aggregates or singly by ID number.
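If the CRUD-over-HTTP-verbs idea is new to you, the mapping can be sketched in a few lines of JavaScript. Treat everything here as an assumption for illustration: the base URL is a placeholder rather than the Open Beer Database endpoint, the resource paths are guesses at a typical REST layout, and `buildRequest` is a hypothetical helper that only describes a request without sending it.

```javascript
// Placeholder base URL: an assumption for illustration, not the real endpoint.
const BASE_URL = 'https://api.example.com/v1';

// The usual CRUD-to-HTTP-verb mapping.
const VERBS = { create: 'POST', read: 'GET', update: 'PUT', delete: 'DELETE' };

// Describe the request for an operation on a resource such as "beers"
// or "breweries". Omitting the id on a read targets the whole collection.
function buildRequest(operation, resource, id) {
  const method = VERBS[operation];
  if (!method) {
    throw new Error('Unsupported operation: ' + operation);
  }
  if ((operation === 'update' || operation === 'delete') && id === undefined) {
    throw new Error(operation + ' requires an id');
  }
  const path = operation === 'create' || id === undefined
    ? '/' + resource
    : '/' + resource + '/' + encodeURIComponent(id);
  return { method, url: BASE_URL + path };
}

console.log(buildRequest('read', 'beers', 2));
console.log(buildRequest('read', 'breweries'));
```

The first call describes fetching a single beer by ID and the second the full list of breweries; check the API's own documentation for the actual URLs and response format.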

For example, the beer with an ID of 2 is named the Bruin. Its description includes: "At once cuddly and ferocious, it combines a smooth, rich maltiness and mahogany color with a solid hop backbone and stealthy 7.6% alcohol." Hmm, cuddly and ferocious, much like that cute little Kim Jong Un himself (Psy is neither; I can't say here what Psy is).

The Open Beer Database includes an example for retrieving brewery information in JSONP format via JavaScript. It also points to client libraries for Ruby, PHP and Python.

So check it out the next time you're looking for an example REST service to toy around with, maybe over the holiday break. Me? I'll be up in Massachusetts, probably pounding Bruins (and I don't mean the local hockey team up there).

Do you know of any wild and crazy REST APIs we can play with in our coding adventures? Please share your suggestions here or drop me a line.

Posted by David Ramel on 12/13/2012 (0 comments)

Amazon Web Services Inc. yesterday announced AWS Marketplace support for Windows apps and big data solutions. AWS, of course, is the equivalent of Microsoft's Windows Azure cloud service, and the AWS Marketplace is akin to the Windows Store.

The latter last month got some buzz because a company called Distimo reported it offered more apps than the Mac store (of course, the Mac store is quite different from the iPad and iPhone stores). Only a couple months old, the Windows Store includes more than 20,000 apps. In case Windows 8 really takes off, it appears Amazon is positioning itself for a piece of the pie.

"Windows users will now be able to find, deploy, and start using popular application infrastructure, development tools and business software in minutes," Amazon said in a news release.

Among the Windows Server offerings is the popular Toad data development tool from Quest Software. "Offering customers choice for their cloud infrastructure and enabling open development is a priority for us, which is why we’re excited to provide our Toad solution for on-demand use across managed databases," said Quest executive Michael Sotnick in the news release. "We deliver value to the AWS ecosystem today through our freeware edition that enables more than 2 million developers to easily leverage Toad for Oracle, Toad for SQL Server and Toad for MySQL within the AWS cloud."

Big data is the hot topic among data devs these days, obviously, and the new Big Data software category includes offerings for popular technologies such as Hadoop, MongoDB and Couchbase.

This isn't the first time Amazon has courted the Windows camp, as evidenced by earlier announcements such as Amazon RDS for Microsoft SQL Server. And it probably won't be the last.

Are you a Windows developer with an opinion on Amazon's overtures? Please share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 12/05/2012 (0 comments)

A recent study of hacker forums shows SQL injection is gaining favor as an attack vector. The company Imperva conducted a study of hacker forum discussions and concluded "SQL injection is now tied with DDoS as the most discussed topic."

Last year, the company said, DDoS was the most discussed attack vector, at 22 percent of discussion volume, while SQL injection followed at 19 percent. This year, both came in at 19 percent, indicating a relative rise in the popularity of SQL injection.

You have to take your studies and statistics with a grain of salt, though, as cloud hosting company Firehost reported at about the same time that SQL injection attacks accounted for only 12 percent of Web attacks blocked by its servers in the third quarter of 2012, with cross-site scripting attacks coming in first at 35 percent.

Regardless, SQL injection continues to be a serious problem that should get more attention from security teams and developers. For the latter, remember that Microsoft has some good resources to help you minimize security weaknesses, including:

There's lots more information out there. Most of the SQL injection attacks result from weaknesses in user input validation, which shouldn't be that hard to do properly. Hopefully these studies will continue to raise awareness among the coders writing these validations.
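As a reminder of what "doing it properly" can look like, here is a small JavaScript sketch that combines whitelist validation with a parameterized query. It is only an illustration under stated assumptions: the `customers` table, its columns and the `buildCustomersByStateQuery` helper are hypothetical, and the `?` placeholder syntax varies from one database driver to another.

```javascript
// Hypothetical helper: validate a two-letter state code, then build a
// parameterized query description instead of concatenating SQL text.
function buildCustomersByStateQuery(input) {
  const state = String(input).trim().toUpperCase();

  // Whitelist validation: accept exactly two letters and nothing else.
  if (!/^[A-Z]{2}$/.test(state)) {
    throw new Error('Invalid state code');
  }

  // The user-supplied value travels separately from the SQL text, so the
  // database driver can bind it as data rather than parse it as SQL.
  return {
    text: 'SELECT id, name FROM customers WHERE state = ?',
    values: [state],
  };
}

console.log(buildCustomersByStateQuery('wa'));

try {
  buildCustomersByStateQuery("WA' OR '1'='1");
} catch (err) {
  console.log('Rejected: ' + err.message);
}
```

Validation rejects the classic injection string outright, and even a value that passes validation never becomes part of the SQL text itself.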

Share your thoughts on how to protect against SQL injection attacks by commenting here or dropping me a line.

Posted by David Ramel on 11/16/2012 (0 comments)

It's no accident that staid, proprietary software giant Microsoft has opened itself up and embraced open source (and even competing) technologies, a trend perfectly exemplified by the adoption of "big data" and its flagship Apache Hadoop platform.

It comes down to people like Dave Campbell, with the interesting title of "technical fellow" at Microsoft. It results in products like HDInsight, described last week by Campbell as Hadoop on the cloud (Windows Azure), laptop and server.

Campbell was speaking at the Build 2012 developer conference in a presentation titled "Data Options in Windows Azure, What's a Developer to Do?" Attendees of his presentation received some insight into the process of how a huge, monolithic, bureaucracy-laden organization transforms itself with a view toward long-range competitiveness--or even survival--in a changing landscape.

"Part of my job is to figure out what the heck's happening and then what should we be doing about it," Campbell said. "The last year for me was, OK, we've got this story down. We understand what's going on in sort of the big data space. I wanted to schedule a couple of big talks ... and then I said I want to be able to talk to the techies, the CTOs, the guys who were doing the projects .... Then I wanted to get the story through all the way through [so] I can talk to the CIOs, who aren't going to fully appreciate the technology. The folks who were the hardest nut to crack, were the enterprise data warehouse guys, who had the feeling that 'none of this stuff is worth crap unless it's in my data warehouse. I got the one version of the truth.'"

Six or eight months ago, Campbell said, he stumbled on to a way he can quickly get through to all of the skeptics. "I said, 'That's a very, very fine version of the truth, and it's still super valuable.' I said, 'But in this new world, there's a version of the truth about what people are saying about your products and what they think about your brand and how that's playing out in the online world. And that's a different version of the truth.' And I said, 'Another version of the truth is available from all your operational systems, which are just spewing out data. We've just got data coming out of everything. And there's yet a third version of the truth or perspective that you can get out of those systems.' And I look at them and say, 'And if you're looking at one version of the truth, and even if you're doing better than the other guys, but you're not looking at those other two versions of the truth, and your competitors are, how are you keeping up?'"

The response, he said, is like "'oh, yeah,' and so even those guys ... and you actually see it. There's a lot of recognition of this now. And so, it is real. There is, of course, hype, but again, I've tried to arm you here. The trick in this, there's nothing magic. The magic is about deferring the modeling and to be able to do the information production in a very quick, efficient way, and having things sort of pop out that you can subsequently refine."

And so Campbell continues on his untiring mission to convince others of the correct way to a successful future, traveling the country and even the globe, as he pointed out in a demonstration showing how easily he "mapped" and "reduced" GPS tracking data of where he'd been in the past few months.

And judging from his presentation, he keeps long hours. One piece of his presentation, he pointed out, was done at 3:38 a.m. that day.

So if you want to get clues about where Microsoft may be heading in the future, you might want to keep track of Campbell and what he's saying. Strangely, though, I couldn't find him on Twitter, but he is on LinkedIn and blogs occasionally on TechNet.

It's also worth checking out the Build 2012 video. It goes into detail about how big data is easy for developers, who only have to worry about two functions: map and reduce. And a lot more.
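For readers who haven't seen the two-function model, here is a toy, single-process sketch in JavaScript. It is not Campbell's demo and not the Hadoop or HDInsight API: the GPS records are made up, and `mapFn`, `reduceFn` and `runJob` are hypothetical names. On a real cluster the framework does the grouping step across many machines, and the developer supplies only the map and reduce functions.

```javascript
// Made-up GPS log entries, one per recorded position.
const gpsLog = [
  { city: 'Redmond', lat: 47.67, lon: -122.12 },
  { city: 'New York', lat: 40.71, lon: -74.01 },
  { city: 'Redmond', lat: 47.68, lon: -122.13 },
  { city: 'London', lat: 51.51, lon: -0.13 },
  { city: 'Redmond', lat: 47.66, lon: -122.11 },
];

// Map: turn one input record into a [key, value] pair.
function mapFn(record) {
  return [record.city, 1];
}

// Reduce: combine all the values collected for one key.
function reduceFn(key, values) {
  return values.reduce((sum, v) => sum + v, 0);
}

// Stand-in for the framework: group the mapped values by key,
// then reduce each group.
function runJob(records, map, reduce) {
  const groups = new Map();
  for (const record of records) {
    const [key, value] = map(record);
    if (!groups.has(key)) {
      groups.set(key, []);
    }
    groups.get(key).push(value);
  }
  const results = {};
  for (const [key, values] of groups) {
    results[key] = reduce(key, values);
  }
  return results;
}

console.log(runJob(gpsLog, mapFn, reduceFn));
```

The result is a count of recorded positions per city, which is the same general shape as tallying where someone has been from tracking data.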

Maybe too much. At one point, when he's summarizing how developers can choose which Windows Azure data storage option to use, he said that if attendees register what he had just said in the previous couple of minutes, "you're going to walk out of here smarter than most bloggers on this topic. Seriously."

Ouch!

What do you think of Microsoft's embrace of big data in general or HDInsight in particular? Please share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 11/07/2012 (1 comment)

Less than three months after Entity Framework 5 was released, Microsoft this week announced the availability of EF6 Alpha 1, targeting a release to manufacturing date around mid-2013 for the database object relational mapping tool.

New features in the upcoming update include task-based asynchronous programming patterns, custom conventions for Code First development, multi-tenant migrations and many more.

The EF code base is now open source, hosted on CodePlex, program manager Rowan Miller reminded attendees at Microsoft's Build 2012 conference at company headquarters in Redmond, Wash., on Tuesday.

"We're accepting contributions to the code base as well," Miller said in a presentation, which is available on video. "If you want to work out some of how EF works, go grab the code. If you want to help us fix some bugs, we'd love you to."

However, Miller noted, when it comes time for release, the Microsoft licensing, branding and support will remain the same--along with code quality, he emphasized. "If you do want to submit bug fixes for us, you're going to have to write unit tests in the same quality code that people on our team write today."

And it might not be that easy to get contributions accepted, Miller suggested. "So far we've been open source for a few months now. We've taken four contributions, most of them still quite small at this stage, but we've got a few bigger ones brewing in the community, too."

At the EF CodePlex site, you can explore in detail the planned improvements for EF6, such as "Task-based Asynchronous Pattern support in EF."

Other improvements for EF6 listed on the CodePlex site include:

- Tooling Consolidation

- Multi-tenant Migrations

- EF Dependency Resolution

- Code-based Configuration

- Migrations History Table Customization

- Custom Code First Conventions

Miller noted in his demonstration that developers had vociferously requested enum support, which was added in EF5, but only as integer types. He said Microsoft was working to add support for more types. He also noted that the DbGeography class, which he used in his demonstration, was targeted for improvement. Right now, he said, "it isn't such a great type," requiring some "strange" mapping to class structures.

Yet another improvement might well be "Stored Procedures & Functions in Code First," which was listed in the product roadmap for possible inclusion in EF 6, as noted by a reader in the comments section of the blog post announcing EF6. Microsoft's Arthur Vickers replied: "It's still planned to be done in EF6. Some of the metadata prerequisites are already being worked on and when they are done we should have someone start on it."

What do you think of the planned improvements to EF6? Please share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 11/01/2012 (3 comments)

Microsoft recently updated its All-In-One Script Framework, which features SQL Server (and other) scripts designed to address common problems reported by users in forums, support incidents and online communities.

Though primarily targeted at IT pros, the scripts are helpful for developers, too, as pointed out recently by Jialiang Ge, who works at a sister project called the All-In-One Code Framework. "Considering that many developers are writing T-SQL scripts too, we hope that the scripts could be useful to you," he wrote in an MSDN blog post.

He wrote about seven new scripts added to the All-In-One Script Framework, including:

- Enroll SQL Server instances on multi server into an existing SQL Server Utility

- Check SQL Server missing KB2277078 to prevent leak of security audit entries

- How to retrieve the top N rows for each group

- Publish report to Reporting Services in SharePoint Integrated Mode

- Bulk set the Timeout property of the reports that in one specified folder

- Add one user/group to a specified Reporting Services item

- Get properties of the objects that in multiple SQL Server instances

Meanwhile, back at the All-In-One Code Framework, targeted specifically at developers, code samples are available via a standalone sample installer or as a Visual Studio extension.

Some sample scripts of interest to data developers include:

- Import Data from Excel to SQL Server

- Entity Framework Sample Provider

- Upload Files Asynchronously Using AJAX into SQL Server Database

- Bind Image in Gridview Using C# with ASP.NET

- ASP.NET Dynamic Data Unleashed

- LinqToSqlExample

- Stored Procedures

The project also has a "request code sample" service described as "a proactive way for our developer community to obtain code samples for certain programming tasks directly from Microsoft." Project documentation said developers can vote on requests and Microsoft engineers will choose the requests with the most votes and provide appropriate code samples. The two most popular requests at the time of this writing were "Code samples for Orchard Project" and "N-tier Entity Framework 4 end to end sample app."

So take a look at the one-stop shops for code samples and scripts and share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 10/24/2012 (0 comments)

Microsoft yesterday announced enhancements to its cloud-based backend for mobile apps, including new data storage options.

Windows Azure Mobile Services (WAMS), a preview announced in late August, provides data storage and other services to developers without the time, talent or inclination to wire up the server-side code themselves. Previously, data access was available through simple management-portal-created SQL Databases, or, as I detailed earlier, an existing database.

Now, WAMS also provides support for Windows Azure Blob and Table storage, Microsoft's Scott Guthrie announced yesterday. "This is supported using the existing 'azure' module within the Windows Azure SDK for Node.js," Guthrie said. He provided an example to show how easy it is to use the new support for Tables:

The below code could be used in a Mobile Service script to obtain a reference to a Windows Azure Table (after which you could query it or insert data into it):

var azure = require('azure');
var tableService = azure.createTableService("<< account name >>", "<< access key >>");

Guthrie pointed to the Windows Azure Node.js dev center for tutorials on using the new data storage options via the "azure" module.

Other services were also announced as Microsoft continues to flesh out its preview offering, including support for iOS apps through the use of native iOS libraries. (Previously, only Windows 8 apps were officially supported, though Microsoft partner Xamarin developed an open source SDK for MonoTouch for iOS and Mono for Android, available on GitHub, which also hosts the open source WAMS SDKs and samples.)

One reader expressed surprise that iOS support was added before Windows Phone, the most obvious choice of client to use WAMS. "Holy crap," said the reader. "iOS before Windows Phone? Come on guys. The WP7/8 OS is legit. Give it first rate support with your own products."

As the iOS support reflects a nod toward the ubiquity of Apple mobile devices, other enhancements reflect the pervading need for the requisite "social" services in modern apps. These include support for Facebook, Google and Twitter authentication and the ability to send e-mails and SMS messages from WAMS apps. Also, server deployment to the West region was added (previously only the East region was available).

As WAMS continues to evolve, with Windows Phone support probably coming soon, so does the entire Windows Azure ecosystem. "We'll have even more new features and enhancements coming later this week--including .NET 4.5 support for Windows Azure Web Sites," Guthrie said. "Keep an eye out on my blog for details as new features become available."

What do you think of the new data storage options available in the do-it-yourself cloud backend for mobile apps? Comment here or drop me a line.

Posted by David Ramel on 10/17/2012 (1 comment)

Further evidence that NoSQL database technology has triumphed over its relational counterpart in Web development came earlier this week with the unveiling of a new site that aims to be a one-stop resource for Web developers using open technologies.

The World Wide Web Consortium's (W3C) Web Platform Docs features IndexedDB as the database technology of choice for client-side storage of substantial amounts of structured data.

Web Platform Docs was announced Monday by Microsoft and other major Web development players who partnered with the W3C to develop the wiki-style site, part of WebPlatform.org. The "alpha" release of Web Platform Docs includes "hot topics" such as HTML5, CSS, Video, File API, Media Queries and more in addition to IndexedDB.

IndexedDB, or the Indexed Database API, is a NoSQL database technology that uses key/value pairs as records with an index over the records for high-performance queries. It was initially proposed as a standard by Oracle in 2009, according to an entry on Wikipedia.
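To picture what "key/value pairs with an index over the records" means, here is a tiny in-memory model in JavaScript. To be clear, this is a conceptual sketch and not the IndexedDB API: `TinyStore` and its methods are hypothetical names, and the real API is asynchronous, transactional and browser-hosted.

```javascript
// A toy store: records are kept by primary key, and a secondary index
// maps one chosen field's value to the keys of the matching records.
class TinyStore {
  constructor(keyPath, indexedField) {
    this.keyPath = keyPath;
    this.indexedField = indexedField;
    this.records = new Map(); // primary key -> record
    this.index = new Map();   // indexed value -> Set of primary keys
  }

  // Insert or overwrite a record, keeping the index in step.
  put(record) {
    const key = record[this.keyPath];
    if (this.records.has(key)) {
      this.removeFromIndex(this.records.get(key));
    }
    this.records.set(key, record);
    const value = record[this.indexedField];
    if (!this.index.has(value)) {
      this.index.set(value, new Set());
    }
    this.index.get(value).add(key);
  }

  // Look up a single record by its primary key.
  get(key) {
    return this.records.get(key);
  }

  // Find records through the index instead of scanning every record.
  getAllByIndex(value) {
    const keys = this.index.get(value) || new Set();
    return [...keys].map(key => this.records.get(key));
  }

  removeFromIndex(record) {
    const value = record[this.indexedField];
    const keys = this.index.get(value);
    if (keys) {
      keys.delete(record[this.keyPath]);
      if (keys.size === 0) {
        this.index.delete(value);
      }
    }
  }
}

const beers = new TinyStore('id', 'style');
beers.put({ id: 1, name: 'Sample Porter', style: 'porter' });
beers.put({ id: 2, name: 'Sample Brown', style: 'brown ale' });
beers.put({ id: 3, name: 'Another Porter', style: 'porter' });

console.log(beers.get(2).name);
console.log(beers.getAllByIndex('porter').map(b => b.name));
```

The index is what lets a query by `style` avoid walking every record, which is the performance point the description of IndexedDB is making.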

IndexedDB competed for a while with the Web SQL API, similar to SQLite, but the W3C gave up on that in November 2010, stating, "The specification reached an impasse: all interested implementors have used the same SQL backend (Sqlite), but we need multiple independent implementations to proceed along a standardisation path."

Some two years later, browser makers still need to catch up with the winner, IndexedDB. The lack of consensus and agreement on a formal standard has led to uneven support among browsers for different competing technologies. The site caniuse.com, which reports on technology compatibilities, lists IndexedDB as having full support among only about 18 percent of browsers, according to "global usage share statistics," while Web SQL is listed as having about 45 percent. However, IndexedDB was reported to be partially supported by about 31 percent of browsers, for a total of about 48 percent. Firefox is reportedly the only browser with full support in its "current" version.

Microsoft came on board with the standard in March 2010 and supports it with the new Internet Explorer 10 browser coming with Windows 8. According to Firefox browser maker Mozilla, which backed IndexedDB early on and which provides extensive documentation on the technology, the asynchronous IndexedDB API is also supported by the latest (or upcoming) versions of Chrome and Firefox browsers, but not Opera or Safari. (IndexedDB also has a synchronous API, but it's hardly used, which probably accounts for the lack of "full support" listed by caniuse.com.) Opera and Safari support Web SQL, along with the current version of Chrome (and upcoming versions).
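Given this patchwork, a page typically has to detect what the browser offers before picking a storage back end. Here is a minimal sketch, assuming the standard `indexedDB` global, the vendor-prefixed variants that some browsers shipped, and the Web SQL `openDatabase` function. The `detectClientStorage` name is made up for illustration, and the function takes the global object as a parameter so it can be tried outside a browser.

```javascript
// Returns 'indexeddb', 'websql' or 'none' for the given global object
// (pass `window` in a browser). The function name is hypothetical.
function detectClientStorage(globalObject) {
  // Standard name first, then the vendor-prefixed variants.
  const indexedDbNames = ['indexedDB', 'mozIndexedDB', 'webkitIndexedDB', 'msIndexedDB'];
  for (const name of indexedDbNames) {
    if (globalObject[name]) return 'indexeddb';
  }
  // Web SQL exposes an openDatabase function on the global object.
  if (typeof globalObject.openDatabase === 'function') return 'websql';
  return 'none';
}
```

In a page this would be called as `detectClientStorage(window)`. The sketch prefers IndexedDB when both are present, which is a design choice rather than a requirement.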

The lack of a universally accepted standard has obviously caused problems for Web developers. "It's costly and inefficient for them to spend precious hours consulting multiple resources to understand how to employ Web technologies in a way that functions across browsers, operating systems and devices," said Microsoft's Jean Paoli and Michael Champion in their announcement of Web Platform Docs. Of course, you Web developers have known this for a while now. As one developer said on the hacks.mozilla.org site almost two years ago, commenting on Mozilla's support for IndexedDB in Firefox 4, "I think the way Mozilla are heading is correct. If we don't get this right now, before [Web apps] begin to supplant client-side apps, we're just going to be in one whole kludge of a mess that can't be undone easily."

So consider the new Web Platform Docs as an effort to further address the "kludge of a mess." The site is looking for user input and further development and is pretty bare-bones right now. The IndexedDB page just lists some 80 subpages for methods, properties, events and so on. The content borrows heavily from the MSDN Windows Internet Explorer API reference site. Still, drilling down into individual items can lead to a lot of question marks where content is supposed to go, and some are entirely empty.

So register, log on and help out on the wiki. You can start with the getting started guide about how you can contribute.

The "Web platform stewards" who helped out on the site, in addition to the W3C, Microsoft and Mozilla, include Adobe, Apple, Facebook, Google, HP, Nokia and Opera.

In addition to API documentation, the site features tutorials, forums, chat and a blog. The "Concepts" page includes articles ranging from "The history of the Web" and "How does the Internet Work" to "Mobile JavaScript best practices" and "WebSocket security."

Many Web developers expressed enthusiasm for the new initiative, writing comments on the introductory blog post such as this: "Great work to promote and develop the Web. It's one more step to move the Web forward."

Let's hope so.

What do you think about the new one-stop shop for Web development? Do you see it fleshing out into a vital resource? Do you plan to contribute? Share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 10/10/2012 (2 comments)

So everything's going mobile. We'll all hook into the cloud. Now it's touch-happy Windows 8 and an emphasis on Windows Store apps built with JavaScript and HTML5. It's inevitable, and I get that. But what's a hobbyist programmer like myself going to do, after spending a lot of time trying to learn the Microsoft .NET Framework and finally getting to the point where I can create interesting desktop applications?

Start over.

I don't like JavaScript. Never have. I don't get it--all those functions within functions and spaghetti code I can't figure out. C#/.NET seems a lot more organized and understandable.

But that's just me. More important, what about you, the professional developer making a living in the Microsoft ecosystem?

Well, the company is trying to smooth the transition. Take, for example, the new Windows Azure Mobile Services (WAMS) preview, which I've been playing around with. To recap, this is a Microsoft effort to simplify back-end development for your mobile cloud apps, targeting developers who want to focus on the client side of things and not worry about the nitty-gritty details of interacting with a database and such.

I found a couple of things interesting about this initiative. First, it's yet another indication of Microsoft's attempt to be part of the transition from a world of PC desktops to one of mobile devices hooked into the cloud, along with its effort to become a good open source citizen.

Second, it's yet another indication of Microsoft's goal to simplify programming, making it more accessible to the not-professionally-trained masses. It kind of feeds into the whole "can anyone be a programmer" debate, which recently garnered more than 760 comments on Slashdot in response to an ArsTechnica.com article.

I decided to see if WAMS delivers, because, of course, if I can do it, anyone can do it. Well, I can do it; it's that easy. I started with some beginner tutorials available at the WAMS developer site, complete with a link to a free Windows Azure trial account to get you started. You also have to enable the WAMS preview in the account management portal. And you need to download the WAMS SDK, which, though clearly targeted at the mobile arena, could only build Windows 8 apps at the time I started playing around with it--though Windows Phone, Android and iOS support was promised soon. (Update: Microsoft partner Xamarin announced an open source SDK for MonoTouch for iOS and Mono for Android, available on GitHub, which also hosts the open source WAMS SDKs and samples.)

The first tutorial, "Get started with Mobile Services," is a simple "TodoList" app, letting you view, add and check off or delete items from a list of things to be done. First you have to create a service. You need to fill in specifics such as the URL of your Windows Store app, the database to use and the region (though, curiously, the "Northwest US" region illustrated in the tutorial isn't available yet--you can only use "East US"). In this tutorial you create a new database server and table instead of using an existing one.

After setting up the service, you create a new app to use it. The management portal includes a quickstart to do this. The quickstart can also guide you through the process of connecting an existing app, which basically just requires adding some references and a few lines of code to connect to your database table, which you make via the portal.

There are also tutorials available for getting started with data, validating and modifying data using server-side scripts, adding paging to queries, adding authentication, adding push notifications and more. These include downloadable projects for C# or JavaScript apps. (Yes, C# and .NET aren't going away; I exaggerated a little bit earlier, but you know what I mean.)
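As a taste of what server-side validation can look like, here is a minimal sketch of an insert script that rejects bad items before they reach the table. It assumes the script receives `item`, `user` and `request` arguments, that `request.respond` and `request.execute` behave as in the read scripts shown later in this post, and that `statusCodes.BAD_REQUEST` is available. The `text` field, the 10-character limit and the local `statusCodes` stand-in are arbitrary choices for illustration.

```javascript
// Stand-in for the statusCodes object WAMS exposes to scripts; it is
// only needed so this sketch can run outside the service.
var statusCodes = { BAD_REQUEST: 400 };

// Reject items whose text is missing, empty or too long; otherwise run
// the default insert operation.
function insert(item, user, request) {
  var text = item.text;
  if (typeof text !== 'string' || text.length === 0 || text.length > 10) {
    request.respond(statusCodes.BAD_REQUEST,
      'Text is required and must be 10 characters or fewer');
  } else {
    request.execute();
  }
}
```

Inside WAMS only the `insert` function would go into the table's insert script; the stand-in object at the top is not needed there.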

These beginner tutorials all involved setting up a super-simple new database or using a built-in data source such as a collection for your data. I usually check out a bunch of different tutorials when investigating a new technology, mixing and matching and trying different things until I get it to work through sheer brute-force trial and error. I wondered about the use of an existing database. I couldn't find nearly as much guidance for that scenario, so I thought I'd explore it further.

I turned to the trusty AdventureWorks example database. WAMS uses ordinary Windows Azure SQL Databases, so I used the SQL Database Migration Wizard to generate a script to build the database in the cloud. You just need the info to connect to the server you set up via WAMS.

Once the wizard is done and your database is visible from the WAMS portal--you can't use it. You have to do a few different things to get it working. That process is described by Microsoft's Paul Batum in response to a Sept. 6 reader query in the WAMS forum. Basically you have to connect to the database management portal, reached from the regular Windows Azure management portal, to alter the schema so WAMS can use it. I wanted to use the AdventureWorks HumanResources.Employee table, and the WAMS service I set up was called "ram," so I ran this "query" from the database management portal:

ALTER SCHEMA ram TRANSFER HumanResources.Employee

That changed the schema of just that one table, of course, as you can see in Figure 1.

Figure 1. Changing the schema of an existing database table to match your WAMS service.

That would be quite tedious to do for every table, but there's probably some batch command or something that can change them all. I also had to change the existing primary key "EmployeeID" column to a lowercase "id," which WAMS requires for everything to work correctly, such as the browse database functionality (I'm not sure if anything else is broken). But WAMS still didn't know about the database, so I had to use the portal to "create" a table named "Employee," exactly as demonstrated in the tutorials where you set up a new database from the portal. After a few seconds, WAMS recognized the database and populated the new table with records. If your primary key column is "id," you can browse the database, as shown in Figure 2.

Figure 2. Browsing your database table via the management portal.
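One way to avoid typing a statement per table is to generate the statements. The following is a minimal, untested-against-WAMS sketch that only builds the T-SQL text, which would then be pasted into the database management portal. The `buildSchemaTransfers` and `quoteIdentifier` helpers are hypothetical, and the table list is illustrative.

```javascript
// Quote a T-SQL identifier with brackets, doubling any closing bracket.
function quoteIdentifier(name) {
  return '[' + String(name).replace(/\]/g, ']]') + ']';
}

// Build one ALTER SCHEMA ... TRANSFER statement per table name.
function buildSchemaTransfers(targetSchema, sourceSchema, tableNames) {
  return tableNames.map(function (table) {
    return 'ALTER SCHEMA ' + quoteIdentifier(targetSchema) +
      ' TRANSFER ' + quoteIdentifier(sourceSchema) + '.' +
      quoteIdentifier(table) + ';';
  });
}

// Illustrative table list; substitute the tables you actually want to move.
const statements = buildSchemaTransfers('ram', 'HumanResources',
  ['Employee', 'Department', 'Shift']);
console.log(statements.join('\n'));
```

Each table moved this way would still need the lowercase "id" primary key column and a matching table "created" in the portal, as described above.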

Having an existing database at the ready, I used the portal "Get Started" page to grab the information I needed to connect to WAMS from an existing app (it also shows you how to create a brand-new app, which involves creating a database table and downloading a prebuilt Visual Studio solution all set up to use it). This was fairly straightforward. Figure 3 shows the steps and code for C# apps, while Figure 4 shows the process for JavaScript apps. Heeding the winds of change, I took the JavaScript route.

Figure 3. The steps to build a C# app.

Figure 4. The steps to build a JavaScript app.

With the MobileServiceClient variable "client" obtained from the WAMS portal as shown in Figure 4, I simply had to grab the table, read it, wrap the results in a WinJS.Binding.List and assign that list's dataSource property to the ListView's itemDataSource:

var empTable = client.getTable('Employee');

// Read every row, then bind the results to the ListView.
empTable.read().done(function (results) {
    empItemsListView.winControl.itemDataSource =
        new WinJS.Binding.List(results).dataSource;
});

The read function uses one of four server-side scripts (JavaScript) that WAMS provides for insert, read, update and delete operations (because there's no System.Data namespace to use).
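For orientation, the four scripts share a common shape. The sketch below shows pass-through versions, assuming the preview's signatures (`insert` and `update` receive the item, `del` receives the id, `read` receives the query) and that calling `request.execute()` runs the default operation. The `fakeRequest` stub at the bottom is hypothetical and exists only so the sketch can be run outside WAMS.

```javascript
// Pass-through table scripts: each one simply runs the default operation.
function insert(item, user, request) { request.execute(); }
function read(query, user, request) { request.execute(); }
function update(item, user, request) { request.execute(); }
function del(id, user, request) { request.execute(); }

// Hypothetical stand-in for the request object WAMS passes to a script.
// It records which operation was allowed to run.
function fakeRequest(log, operation) {
  return { execute: function () { log.push(operation); } };
}

const log = [];
insert({ text: 'Buy milk' }, null, fakeRequest(log, 'insert'));
read({}, null, fakeRequest(log, 'read'));
update({ id: 1, complete: true }, null, fakeRequest(log, 'update'));
del(1, null, fakeRequest(log, 'delete'));
console.log(log.join(', '));
```

In the portal each function lives in its own script editor for the table, so only one of them would appear in any given script.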

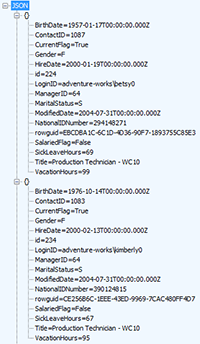

You can customize the scripts as you wish. For a contrived, impractical example, to query the AdventureWorks Employee table and return only three "Production Technician - WC10" employees who are female and single, I changed the read script to this:

function read(query, user, request) {
    // Narrow the incoming query before running it.
    query.where({
        Gender: 'F',
        MaritalStatus: 'S',
        Title: 'Production Technician - WC10'
    }).take(3);
    request.execute();
}

Note that an mssql object is also available for situations where a more complex SQL query might be needed. With that object, the read script above could be written as:

function read(query, user, request) {
    // Build the statement by concatenation; a quoted JavaScript string
    // literal can't span multiple lines.
    var sql = "select top 3 * from Employee " +
        "where Title = 'Production Technician - WC10' " +
        "and Gender = 'F' " +
        "and MaritalStatus = 'S'";

    mssql.query(sql, [], {
        success: function (results) {
            request.respond(statusCodes.OK, results);
        }
    });
}

Normally, of course, the server-side scripts would be used for validation, custom authorization and so on. Query customization like my contrived example would be handled from the client. The same query can be expressed through the table returned by the MobileServices.MobileServiceClient's getTable function, like this:

var empTable = client.getTable('Employee');

// Filter and limit on the client, then bind the results to the ListView.
empTable.where({
        Title: 'Production Technician - WC10',
        MaritalStatus: 'S',
        Gender: 'F'
    })
    .take(3)
    .read()
    .done(function (results) {
        empItemsListView.winControl.itemDataSource =
            new WinJS.Binding.List(results).dataSource;
    });

WAMS uses a REST API, so the above function call emits the following GET request to the server, as reported by Fiddler, the free Web debugging tool:

GET /tables/Employee?$filter=(((Title%20eq%20'Production%20Technician%20-%20WC10')%20and%20(MaritalStatus%20eq%20'S'))%20and%20(Gender%20eq%20'F'))&$top=3 HTTP/1.1

That returns the JSON objects shown in Figure 5, as reported (and decrypted) by Fiddler.

Figure 5. The JSON objects returned by WAMS, as seen in the Fiddler Web debugger.
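To see how the client-side filter maps onto the GET request shown above, here is a rough sketch that builds a comparable query string from a plain object. It is not the Mobile Services client's actual implementation: the `buildFilter` and `buildTableQuery` names are hypothetical, only string equality is handled, and the conditions are joined in a flat `and` chain rather than the nested parentheses seen in the Fiddler trace.

```javascript
// Turn { Column: 'value' } pairs into an OData-style $filter expression.
// Only string equality is handled; single quotes in values are doubled.
function buildFilter(conditions) {
  return Object.keys(conditions).map(function (column) {
    const value = String(conditions[column]).replace(/'/g, "''");
    return '(' + column + " eq '" + value + "')";
  }).join(' and ');
}

// Assemble the request path and query string for a table read.
function buildTableQuery(table, conditions, top) {
  return '/tables/' + encodeURIComponent(table) +
    '?$filter=' + encodeURIComponent(buildFilter(conditions)) +
    '&$top=' + top;
}

const url = buildTableQuery('Employee', {
  Title: 'Production Technician - WC10',
  MaritalStatus: 'S',
  Gender: 'F'
}, 3);
console.log(url);
```

The percent-encoding of spaces as `%20` comes from `encodeURIComponent`, which leaves parentheses, hyphens and single quotes untouched, matching the look of the captured request.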

The resulting ListView display when I hit F5 in Visual Studio is shown in Figure 6.

Figure 6. The final product: database information displayed in a ListView.

I didn't explore the tutorials much beyond the basics because I was primarily interested in the new aspect of connecting to an existing database, and this proved the concept. But for mobile apps, obviously, push notifications are important and, as mentioned, some tutorials are available through the portal to cover that functionality and user authentication. The push tutorial, however, uses C#, not JavaScript. One reader commented on the push notification tutorial, "Where is the JS version of this documentation? I am a HTML developer." There was no reply.

Not to be outdone, other readers in the .NET camp complained about the lack of C# code, specifically for server-side scripting, in the WAMS support forums. In reply to the question of whether or not C# or another .NET language would be supported for scripting, WAMS guru Josh Twist replied: "we have no firm plans right now but certainly haven't ruled this out. Again, we're listening to customer feedback and demand on this (you're not the first person to ask) so thanks for posting." Several other readers also weighed in on the subject, with one saying: "I agree. This seems kinda mismatched. The client allows script or C#. But the server side is JavaScript only. Seems the server side would be even a better fit with C#."

There were also some requests for Visual Basic tutorials. In fact, that was the post with the most total views in the support forum. Twist replied, "This is on our list of things to do." (Hmm, I wonder if that "to do" pun--all the tutorials are "to do" lists--was intended.) Microsoft's Glenn Gailey replied with links to the Visual Basic versions of the "Get started with data" tutorial and the WAMS quickstart project.

I'm sure such issues will get ironed out as the WAMS preview continues and user feedback is collected, so give it a try and let Microsoft know what you think. As a hobbyist, I'm certainly giving it the thumbs up. In fact, I was gratified that Twist mentioned the hobbyist as one of three distinct personas to whom WAMS is relevant. Twist listed these three roles in his introduction to WAMS on his The Joy of Code site. He said Microsoft research found that about two-thirds of developers were interested in the cloud but suffered from "cloudphobia," in that "they wanted backend capabilities like authentication, structured storage and push notifications for their app, but they were reluctant to add them" for lack of time, expertise and so on. In addition to the hobbyist, the other two developer types he mentioned were the "client-focused developer," targeted by WAMS, and the "backend developer," who is already experienced in writing server code.

So I'm looking forward to seeing how WAMS matures (hopefully before my 3-month trial Windows Azure subscription expires). As Twist said in a Joy of Code post, "we're working on making it even easier to build any API you like in Mobile Services. Stay tuned!"

What do you think about WAMS? Share your thoughts by commenting here or dropping me a line.

Posted by David Ramel on 10/03/2012 (0 comments)