Fresh from last week's Build developer conference in San Francisco, Microsoft executives appeared at the company's first-ever Ignite conference in Chicago and provided more details about the company's new Azure SQL Data Warehouse.

The company yesterday demoed the first "sneak peek" at the new "elastic data warehouse in the cloud," and today exec Tiffany Wissner penned a blog post to highlight specific functionalities.

Wissner explained how Azure SQL Data Warehouse expands upon the company's flagship relational database management system (RDBMS), SQL Server.

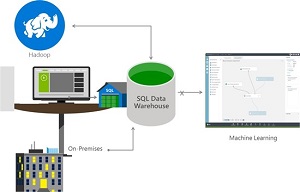

"Azure SQL Data Warehouse is a combination of enterprise-grade SQL Server augmented with the massively parallel processing architecture of the Analytics Platform System (APS), which allows the SQL Data Warehouse service to scale across very large datasets," Wissner said. "It integrates with existing Azure data tools including Power BI for data visualization, Azure Machine Learning for advanced analytics, Azure Data Factory for data orchestration and movement as well as Azure HDInsight, our 100 percent Apache Hadoop service for Big Data processing."

Working with Azure SQL Data Warehouse (source: Microsoft)

Just over a year ago, Microsoft introduced the APS as a physical appliance wedding its SQL Server Parallel Data Warehouse (PDW) with HDInsight. APS is described by Microsoft as "the evolution of the PDW product that now supports the ability to query across the traditional relational data warehouse and data stored in a Hadoop region -- either in the appliance or in a separate Hadoop cluster."

Wissner touted the pervasiveness of SQL Server as a selling point of the new solution, as enterprises can leverage developer skills and knowledge acquired from its years of everyday use.

"The SQL Data Warehouse extends the T-SQL constructs you're already familiar with to create indexes, partitions, functions and procedures which allows you to easily migrate to the cloud," Wissner said. "With native integrations to Azure Data Factory, Azure Machine Learning and Power BI, customers are able to quickly ingest data, utilize learning algorithms, and visualize data born either in the cloud or on-premises."

PolyBase in the cloud is another attractive feature, Wissner said. Introduced with PDW in 2013, PolyBase integrates data stored in the Hadoop Distributed File System (HDFS) with SQL Server, making it one of many emerging SQL-on-Hadoop solutions.

"SQL Data Warehouse can query unstructured and semi-structured data stored in Azure Storage, Hortonworks Data Platform, or Cloudera using familiar T-SQL skills making it easy to combine data sets no matter where it is stored," Wissner said. "Other vendors follow the traditional data warehouse model that requires data to be moved into the instance to be accessible."

Although Wissner didn't identify any of those "other vendors," Microsoft took pains to position Azure SQL Data Warehouse as an improvement upon the Redshift cloud database offered by Amazon Web Services Inc. (AWS), which Microsoft is challenging for public cloud supremacy.

One of the advantages Microsoft sees in its product over Redshift is the ability to save costs by pausing cloud compute instances. This was mentioned at Build and echoed today by Wissner.

"Dynamic pause enables customers to optimize the utilization of the compute infrastructure by ramping down compute while persisting the data and eliminating the need to backup and restore," Wissner said. "With other cloud vendors, customers are required to back up the data, delete the existing cluster, and, upon resume, generate a new cluster and restore data. This is both time consuming and complex for scenarios such as data marts or departmental data warehouses that need variable compute power."

Again parroting the Build message, Wissner also discussed Azure SQL Data Warehouse's ability to separate compute and storage services, scaling them independently up and down immediately as needed.

"With SQL Data Warehouse you are able to quickly move to the cloud without having to move all of your infrastructure along with it," Wissner concluded. "With the Analytics Platform System, Microsoft Azure and Azure SQL Data Warehouse, you can have the data warehouse solution you need on-premises, in the cloud or a hybrid solution."

Users interested in trying out the new offering, expected to hit general availability this summer, can sign up to be notified when that happens.

Posted by David Ramel on 05/05/2015

Forget all that techy Azure Big Data stuff -- Microsoft found a new way to put databases to work that's really interesting: guessing your age from your photo.

Threatening to upstage all the groundbreaking announcements at the Build conference is a Web site where you provide a photo and Microsoft's magical machinery consults a database of face photos to guess the age of the subjects.

Tell me you didn't (or won't) visit How-Old.net (How Old Do I Look?) and provide your own photo, hoping the Azure API would say you look 10 years younger than you are?

I certainly did. But it couldn't find my face (I was wearing a bicycle helmet in semi-profile), and then I had to get back to work. But you can bet I'll be back. So will you, right?

(Unless you're one of those fine-print privacy nuts.)

Why couldn't Ballmer come up with stuff like this? Could there be a better example of how this isn't your father's Microsoft anymore?

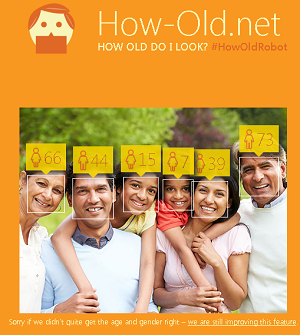

Microsoft machine learning (ML) engineers Corom Thompson and Santosh Balasubramanian explained in a Wednesday blog post how they were fooling around with the company's new face-recognition APIs. They sent out a bunch of e-mails to garner perhaps 50 testers.

How Old Do I Look? (source: Microsoft)

"We were shocked," they said. "Within a few hours, over 35,000 users had hit the page from all over the world (about 29k of them from Turkey, as it turned out -- apparently there were a bunch of tweets from Turkey mentioning this page). What a great example of people having fun thanks to the power of ML!"

They said it took just a day to wire the solution up, listing the following components:

- Extracting the gender and age of the people in the pictures.

- Obtaining real-time insights on the data extracted.

- Creating real-time dashboards to view the results.

Their blog post gives all the details about the tools used and their implementation, complete with code samples. Go read it if you're interested.

Me? It's Friday afternoon and the boss is 3,000 miles away -- I'm finding a better photo of myself and going back to How-Old.net. I'm sure I don't look a day over 29.

In fact, I'll do it now. Hold on.

OK, it says I look seven years older than I am. I won't even give you the number. Stupid damn site, anyway ...

Posted by David Ramel on 05/01/2015

A new Azure SQL Data Warehouse preview offered as a counter to Amazon's Redshift headed several data-related announcements at the opening of the Microsoft Build conference today.

Also being announced were Azure Data Lake and "elastic databases" for Azure SQL Database, further demonstrating the company's focus on helping customers implement and support a "data culture" in which analytics are used for everyday business decisions.

"The data announcements are interesting because they show an evolution of the SQL Server technology towards a cloud-first approach," IDC analyst Al Hilwa told this site. "A lot of these capabilities like elastic query are geared for cloud approaches, but Microsoft will differentiate from Amazon by also offering them for on-premises deployment. Other capabilities like Data Lake, elastic databases and Data Warehouse are focused on larger data sets that are typically born in the cloud. The volumes of data supported here builds on Microsoft's persistent investments in datacenters."

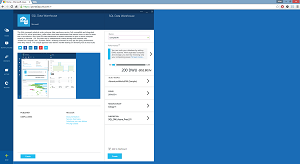

Azure SQL Data Warehouse will be available as a preview in June, Microsoft announced during the Build opening keynote. It was designed to provide petabyte-scale data warehousing as a service that can elastically scale to suit business needs. In comparison, the Amazon Web Services Inc. (AWS) Redshift -- unveiled more than two years ago -- is described as "a fast, fully managed, petabyte-scale data warehouse solution that makes it simple and cost-effective to efficiently analyze all your data using your existing business intelligence tools."

Azure SQL Data Warehouse (source: Microsoft)

Microsoft pointed out what it said are numerous advantages that Azure SQL Data Warehouse provides over AWS Redshift, such as the ability to independently adjust compute and storage, as opposed to Redshift's fixed compute/storage ratio. Concerning elasticity, Microsoft described its new service as "the industry’s first enterprise-class cloud data warehouse as a service that can grow, shrink and pause in seconds," while it could take hours or days to resize a Redshift service. Azure SQL Data Warehouse also comes with a hybrid configuration option for hosting in the Azure cloud or on-premises -- as opposed to cloud-only for Redshift -- and offers pause/resume functionality and compatibility with true SQL queries, the company said. Redshift has no support for indexes, SQL UDFs, stored procedures or constraints, Microsoft said.

Enterprises can use the new offering in conjunction with other Microsoft data tools such as Power BI, Azure Machine Learning, Azure HDInsight and Azure Data Factory.

Speaking of other data offerings, the Azure Data Lake repository for Big Data analytics project workloads provides one system for storing structured or unstructured data in native formats. It follows the trend -- disparaged by some analysts -- pioneered by companies such as Pivotal Software Inc. and its Business Data Lake. It can work with the Hadoop Distributed File System (HDFS) so it can be integrated with a range of other tools in the Hadoop/Big Data ecosystem, including Cloudera and Hortonworks Hadoop distributions and Microsoft's own Azure HDInsight and Azure Machine Learning.

For straight SQL-based analytics, Microsoft introduced the concept of elastic databases for Azure SQL Database, its cloud-based SQL Database-as-a-Service (DBaaS) offering. Azure SQL Database elastic databases reportedly provide one pool to help enterprises manage multiple databases and provision services as needed.

The elastic database pools let enterprises pay for all database usage at once and facilitate the running of centralized queries and reports across all data stores. The elastic databases support full-text search, column-level access rights and instant encryption of data. They "allow ISVs and software-as-a-service developers to pool capacity across thousands of databases, enabling them to benefit from efficient resource consumption and the best price and performance in the public cloud," Microsoft said in a news release.

Posted by David Ramel on 04/29/2015

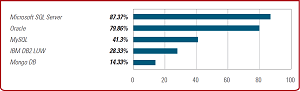

Despite all the publicity around Big Data and Apache Hadoop, a new database deployment survey indicates traditional, structured relational database management systems (RDBMS) still reign among enterprises, with SQL Server dueling Oracle for the overall lead.

Also, the new survey commissioned by Dell Software shows the use of traditional structured data is growing even faster than unstructured data, so the findings aren't just an example of a larger installed user base being eroded by upstart technologies.

"Although the growth of unstructured data has garnered most of the attention, Dell's survey shows structured data growing at an even faster rate," the company said in a statement. "While more than one-third of respondents indicated that structured data is growing at a rate of 25 percent or more annually, fewer than 30 percent of respondents said the same about their unstructured data."

Dell commissioned Unisphere Research to poll some 300 database administrators and other corporate data staffers in a report titled "The Real World of the Database Administrator."

What Database Brands Do You Have Running in Your Organization? (source: Dell Software)

"Although advancements in the ability to capture, store, retrieve and analyze new forms of unstructured data have garnered significant attention, the Dell survey indicates that most organizations continue to focus primarily on managing structured data, and will do so for the foreseeable future," the company said.

In fact, Dell said, more than two-thirds of enterprises reported that structured data constitutes 75 percent of the data being managed, while almost one-third of organizations reported not managing unstructured data at all -- yet.

There are many indications that organizations will widely adopt the new technologies, Dell said, as they need to support new analytical use cases.

But for the present, some 78 percent of respondents reported they were running mission-critical data on the Oracle RDBMS, closely followed by Microsoft SQL Server at about 72 percent. After MySQL and IBM DB2, the first NoSQL database to crack the most-used list is MongoDB.

Also, the survey stated, "Clearly, traditional RDBMSs shoulder the lion's share of data management in most organizations. And since more than 85 percent of the respondents are running Microsoft SQL Server and about 80 percent use Oracle, the evidence is clear that most companies support two or more DBMS brands."

Looking to the future, Dell highlighted two specific indicators of the growing dependence on NoSQL databases, especially in large organizations:

- Approximately 70 percent of respondents using MongoDB are running more than 100 databases, 30 percent are running more than 500 databases, and nearly 60 percent work for companies with more than 5,000 employees.

- Similarly, 60 percent of respondents currently using Hadoop are running more than 100 databases, 45 percent are running more than 500 databases, and approximately two-thirds work for companies with more than 1,000 employees.

But despite the big hype and big plans on the part of big companies, the survey indicated that Big Data isn't making quite as big an impact on enterprises as might be expected.

Instead, more familiar technologies are seen as being more disruptive.

"Most enterprises believe that more familiar 'new' technologies such as virtualization and cloud computing will have more impact on their organization over the next several years than 'newer' emerging technologies such as Hadoop," the survey states. "In fact, Hadoop and NoSQL do not factor into many companies' plans over the next few years."

Posted by David Ramel on 04/21/2015

SQL skills pay well and the technology is among the most popular as indicated by a big new developer survey from Stack Overflow, which tracked everything from caffeine consumption to indentation preferences.

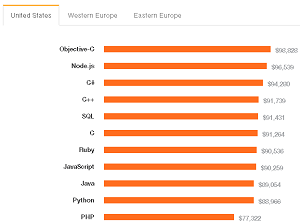

While Objective-C was reported as the most lucrative technology to learn -- garnering an average salary of $98,828 in the U.S. -- SQL wasn't far behind, coming in at No. 5 on the list with an average reported salary of $91,431 in the U.S.

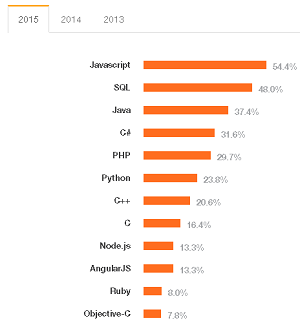

In terms of popularity, SQL was at No. 2 in the rankings, listed by 48 percent of respondents, second only to the ubiquitous JavaScript, listed by 54.4 percent of respondents.

These results are in line with similar surveys from a few years ago, showing SQL isn't losing much ground in the technology wars.

"These results are not unbiased," Stack Overflow warned about the new survey. "Like the results of any survey, they are skewed by selection bias, language bias, and probably a few other biases. So take this for what it is: the most comprehensive developer survey ever conducted. Or at least the only one that asks devs about tabs vs. spaces."

Stack Overflow, in case you didn't know, is the go-to place for coders to get help with their problems. The site reports some 32 million monthly visitors.

Using this unique status, the site polled 26,086 people from 157 countries in February, posing a list of 45 questions.

One of the key areas of inquiry concerned salaries, of course. The survey found that behind Objective-C, the most lucrative skills were Node.js, C#, C++ and SQL.

Who Makes What (source: Stack Overflow)

But if it's purchasing power you're interested in, Ukraine is tops -- at least according to the metric of how many Big Macs you can buy on your salary.

It also might help to work remotely, as coders who don't have to fight commute traffic earn about 40 percent more than those who never work from home.

On the technology front, Apple's young Swift language was the most loved, Salesforce the most dreaded, and Android the most wanted (devs who aren't developing with the tech but have indicated interest in doing so).

Most Popular Technologies (source: Stack Overflow)

Interestingly, the popular Java programming language didn't make the top 10 list of most loved languages, or most dreaded, though it was in the middle of the pack for most wanted and came in at No. 3 in overall technology popularity.

Other survey highlights include:

- JavaScript is the most popular technology, followed by SQL, Java, C# and PHP.

- Notepad++ is the most popular text editor, followed by Sublime Text, Vim, Emacs and Atom.io.

- 1,900 respondents reported being mobile developers, with 44.6 percent working with Android, 33.4 percent working with iOS and 2.3 percent working with Windows Phone (and 19.8 percent not identifying with any of those).

- The biggest age group is 25-29, where 28.5 percent of respondents lie. Only 1.9 percent of respondents were over 50.

- Only 5.8 percent of respondents reported themselves as being female.

- The largest share of developers (41.8 percent) reported being self-taught, with only 18.4 percent having earned a master's degree in computer science or a related field.

- The largest group of respondents identified themselves as full-stack developers (6,800). Two reported being farmers.

And, oh, by the way, Norwegian developers consume the most caffeinated beverages per day (3.09), and tabs were the more popular indentation technique, preferred by 45 percent of respondents. Spaces were popular with 33.6 percent of respondents.

But there's more to that story.

"Upon closer examination of the data, a trend emerges: Developers increasingly prefer spaces as they gain experience," the survey stated. "Stack Overflow reputation correlates with a preference for spaces, too: users who have 10,000 rep or more prefer spaces to tabs at a ratio of 3 to 1."

I'm a spaces guy myself. How about you?

Posted by David Ramel on 04/14/2015

Forrester Research Inc. analyst Boris Evelson said existing approaches to business intelligence (BI) need updating in the modern world, and converging them with Big Data technologies requires more than traditional DBMS systems such as SQL Server can provide.

BI is alive and well in the age of Big Data and will continue to enjoy a thriving market, Evelson said, but the world is constantly changing and more innovation is needed.

"Some of the approaches in earlier-generation BI applications and platforms started to hit a ceiling a few years ago," Evelson said in a blog post today. "For example, SQL and SQL-based database management systems (DBMS), while mature, scalable and robust, are not agile and flexible enough in the modern world where change is the only constant."

But Big Data can provide those agile and flexible alternatives in a convergence with BI. "In order to address some of the limitations of more traditional and established BI technologies, Big Data offers more agile and flexible alternatives to democratize all data, such as NoSQL, among many others," the analyst said.

While the emergence of NoSQL data stores as a necessary replacement for traditional DBMS in some Big Data scenarios is well-known, the research firm's suggested solution is somewhat less obvious.

Forrester proposes using a flexible hub-and-spoke data platform to meld the BI and Big Data worlds, Evelson said in publicizing a new research report titled, "Boost Your Business Insights By Converging Big Data And BI." The research builds on previous themes explored by Forrester, such as a 2013 report that features the hub-and-spoke pattern prominently in a discussion of Big Data patterns.

The new report describes such an architecture as featuring the following components:

- Hadoop-based data hubs/lakes to store and process majority of the enterprise data.

- Data discovery accelerators to help profile and discover definitions and meanings in data sources.

- Data governance that differentiates the processes you need to perform at the ingest, move, use and monitor stages.

- BI that becomes one of many spokes of the Hadoop-based data hub.

- A knowledge management portal to front-end multiple BI spokes.

- Integrated metadata for data lineage and impact analysis.

Evelson isn't the first Forrester analyst to hint at big changes in the datacenter as Big Data matures and gets integrated with other tools.

"Enterprises that have a more complete data platform story, as well as a vision, are more likely to succeed in the coming years and also have a competitive advantage if they get onto this bandwagon of data platform, which includes Hadoop, Big Data, NoSQL as well as traditional databases -- all integrated," Forrester analyst Noel Yuhanna told ADTMag last year. "Because that's where you see customers that are more successful, having all those data types together and managed together and provided together in a manner that will be helpful for businesses to operate."

That theme is echoed in this new research, which identifies three layers upon which the hub-and-spoke system should be based. The layers are labeled cold, warm and hot, expressing the relationship between speedy and powerful analytics and associated expenses. The cold layer holds most enterprise data in the Hadoop framework, which can be slower than databases such as SQL Server but costs less to operate. The warm layer uses DBMS for somewhat faster queries at a somewhat more expensive price. The hot layer is for speedy analysis with in-memory tools where cost might not be as important as the benefits gleaned from real-time, interactive data processing.
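To make the cold/warm/hot trade-off concrete, here is a minimal Python sketch of how a workload might be routed to one of the three layers based on how quickly it needs an answer. The `Tier` enum, the `choose_tier` function and the latency thresholds are all hypothetical illustrations, not anything taken from the Forrester report.

```python
from enum import Enum


class Tier(Enum):
    """The three layers described above, with the kind of store behind each."""
    COLD = "Hadoop-based data hub"   # cheapest to operate, slowest
    WARM = "DBMS"                    # somewhat faster, somewhat pricier
    HOT = "in-memory tools"          # fastest, cost is secondary


def choose_tier(max_latency_seconds: float) -> Tier:
    """Pick the cheapest layer that can still meet the latency target.

    The 1-second and 60-second cutoffs are assumptions chosen only to
    illustrate the idea; a real deployment would tune them.
    """
    if max_latency_seconds < 1:
        return Tier.HOT
    if max_latency_seconds < 60:
        return Tier.WARM
    return Tier.COLD


# An interactive dashboard, an ad hoc report and a nightly batch job.
for name, latency in [("dashboard", 0.2), ("report", 10), ("batch", 3600)]:
    print(name, "->", choose_tier(latency).name)
```

The point of the sketch is simply that the layers differ in speed and cost, so the cheapest layer that satisfies the requirement wins.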

"But at the end of the day, while new terms are important to emphasize the need to evolve, change and innovate, what's infinitely more imperative is that both strive to achieve the same goal: transform data into information and insight," Evelson said. "Alas, while many developers are beginning to recognize the synergies and overlaps between BI and Big Data, quite a few still consider and run both in individual silos."

Posted by David Ramel on 03/27/2015

Idera Inc. announced new suites for managing SQL Servers, providing performance monitoring, automated maintenance, security features and more.

Known for its performance monitoring solutions, the Houston-based company renamed its primary management suite from SQL Suite to SQL Management Suite and launched three new software packages for specific functionalities.

"These suites combine top Idera products and are designed to help DBAs tackle complementary tasks more effectively as part of their daily job maintaining SQL Servers," the company said in a blog post this week.

The three new offerings are SQL Performance Suite, SQL Maintenance Suite and SQL Security Suite.

The flagship SQL Management Suite includes many of the tools found in the other packages for performance monitoring, alerting and diagnostics; compliance management for monitoring, auditing and alerting of activity and changes to data; security and permissions management; backup and restoration of SQL data; and defragmentation.

Some of the other suites add tools for their specific functionality -- for example, a business intelligence (BI) manager for tracking the health of SQL Server Analysis Services (SSAS), SQL Server Reporting Services (SSRS) and SQL Server Integration Services (SSIS).

"Our customers are responsible for maintaining and managing complex SQL environments and we listen to them to ensure our products consistently meet their performance, compliance and administrative needs," said CEO Randy Jacobs. "With most customers typically purchasing more than one product, we developed the new SQL Suites to improve the buying process and increase solution value for DBAs."

Idera, which offers a 14-day trial of its suites, didn't provide pricing information.

Posted by David Ramel on 03/19/2015

Continuing the company's shift to openness and interoperability, Microsoft yesterday released an updated PHP driver for its flagship Relational Database Management System (RDBMS), SQL Server.

The new driver supports PHP 5.6 and eases working with the Open Database Connectivity (ODBC) interface, described by Wikipedia as "a standard programming language middleware API for accessing database management systems."

"This driver allows developers who use the PHP scripting language to access Microsoft SQL Server and Microsoft Azure SQL Database, and to take advantage of new features implemented in ODBC," Microsoft said in a blog post. "The new version works with Microsoft ODBC Driver 11 or higher."

In January 2013 Microsoft introduced new ODBC drivers for SQL Server in version 11. "Microsoft is adopting ODBC as the de-facto standard for native access to SQL Server and Windows Azure SQL Database," the company said at the time. "We have provided longstanding support for ODBC on Windows and, in the SQL Server 2012 time frame, released support for ODBC on Linux (Red Hat Enterprise Linux 5 and 6, and SUSE Enterprise Linux)."

Key new features in the Windows version included driver-aware connection pooling, connection resiliency and asynchronous execution (polling method).

Posted by David Ramel on 03/10/2015

One day after Big Data player Pivotal Software Inc. changed its business model by open sourcing core technologies, Microsoft today announced related product updates with a definite open source slant.

The "new and enhanced" data services include an Azure HDInsight preview that runs on Linux, and the general availability of Storm on HDInsight, Azure Machine Learning, and Informatica technology on the Microsoft Azure cloud.

"Just about every interesting innovation that's going on -- in data today, in machine learning and other areas -- has its roots in an open source ecosystem," Pivotal CEO Paul Maritz said yesterday at a live streaming event.

Perhaps an exaggeration, but the underlying meaning was grokked by Microsoft years ago, and the company is in the middle of a swing to openness and interoperability, led by new CEO Satya Nadella and top lieutenants such as T. K. "Ranga" Rengarajan, head of the data platform.

"Azure Machine Learning reflects our support for open source," stated a blog post today authored by Rengarajan and machine learning exec Joseph Sirosh. "The Python programming language is a first-class citizen in Azure Machine Learning Studio, along with R, the popular language of statisticians." Microsoft announced plans earlier this year to acquire Revolution Analytics, a commercial provider of software and services for R.

Data developers can now use the Machine Learning Marketplace to discover appropriate APIs and prebuilt services for common concerns such as recommendation engines, detecting anomalies and forecasting.

The open source story continues with Storm for Azure HDInsight. Azure HDInsight is Microsoft's cloud service based on 100 percent Apache Hadoop technology, open sourced by the Apache Software Foundation.

"Storm is an open source stream analytics platform that can process millions of data 'events' in real time as they are generated by sensors and devices," Microsoft said. "Using Storm with HDInsight, customers can deploy and manage applications for real-time analytics and Internet-of-Things (IoT) scenarios in a few minutes with just a few clicks. We are also making Storm available for both .NET and Java and the ability to develop, deploy and debug real-time Storm applications directly in Visual Studio. That helps developers to be productive in the environments they know best." Microsoft added Storm integration last fall.

Of course, there's nothing more open source than the Linux OS, and Azure HDInsight is now available as a preview project running on Ubuntu clusters. Ubuntu is a popular Linux distribution, described by Microsoft as "the leading scale-out Linux."

Adding Linux support in addition to Windows "is particularly compelling for people that already use Hadoop on Linux on-premises like on Hortonworks Data Platform, because they can use common Linux tools, documentation, and templates and extend their deployment to Azure with hybrid cloud connections," Microsoft said.

Also, to increase customer options for leveraging technology from Microsoft partners, the Redmond software giant announced that Informatica data integration technology will be available in the Azure Marketplace.

"Today, Informatica is announcing the availability of its Cloud Integration Secure Agent on Microsoft Azure and Linux Virtual Machines as well as an Informatica Cloud Connector for Microsoft Azure Storage," Informatica exec Ronen Schwartz said in a blog post today. "Users of Azure data services such as Azure HDInsight, Azure Machine Learning and Azure Data Factory can make their data work with access to the broadest set of data sources including on-premises applications, databases, cloud applications and social data."

All the Microsoft news comes during the Strata + Hadoop World conference underway in San Jose, Calif.

"These new services are part of our continued investment in a broad portfolio of solutions to unlock insights from data," Microsoft said. "They can help businesses dramatically improve their performance, enable governments to better serve their citizenry, or accelerate new advancements in science. Our goal is to make Big Data technology simpler and more accessible to the greatest number of people possible: Big Data pros, data scientists and app developers, but also everyday businesspeople and IT managers."

Posted by David Ramel on 02/18/2015

Microsoft announced a bevy of improvements to its cloud-based data products, including the Azure SQL Database Update V12 (preview), sporting new security features and bringing it closer to full SQL Server engine compatibility.

The new security mechanisms available now or on tap include row-level security, dynamic data masking and transparent data encryption. These will be added to the existing auditing feature so users can further protect cloud data and comply with corporate and industry policies, Microsoft said.

Company exec Tiffany Wissner said in a blog post yesterday that row-level security was available now as a public preview. "Coming soon, SQL Database will also preview dynamic data masking, which is a policy-based security feature that helps limit the exposure of data in a database by returning masked data to non-privileged users who run queries over designated database fields, like credit-card numbers, without changing data on the database," Wissner said. She added that transparent data encryption is coming to SQL Database V12 databases to encrypt data at rest.
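The idea behind dynamic data masking can be illustrated with a small Python sketch: a policy names the sensitive fields, and non-privileged readers get a masked copy while the stored row stays untouched. This is only a conceptual model, not how SQL Database implements the feature; the `MASKED_COLUMNS` policy, the `mask_value` and `read_row` helpers, and the keep-the-last-four-digits rule are all hypothetical.

```python
# Hypothetical policy: fields that non-privileged users see masked.
MASKED_COLUMNS = {"credit_card"}


def mask_value(value: str, visible: int = 4) -> str:
    """Replace all but the last `visible` characters with 'X'."""
    if len(value) <= visible:
        return "X" * len(value)
    return "X" * (len(value) - visible) + value[-visible:]


def read_row(row: dict, privileged: bool) -> dict:
    """Return a copy of the row as a given user would see it.

    The stored row is never modified; masking is applied only to the
    copy handed back to non-privileged callers.
    """
    if privileged:
        return dict(row)
    return {
        key: mask_value(str(val)) if key in MASKED_COLUMNS else val
        for key, val in row.items()
    }


stored = {"name": "Ada", "credit_card": "4111111111111111"}
print(read_row(stored, privileged=False))  # credit_card is masked
print(read_row(stored, privileged=True))   # full value returned
```

Because the masking happens at read time, the underlying data is unchanged, which matches the "without changing data on the database" part of Wissner's description.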

Already rolled out in Europe but coming to the United States soon, the updated SQL Database V12 has near-complete compatibility with the company's flagship SQL Server relational database management system (RDBMS) engine. The cloud service has always lacked some features of the regular SQL Server but has steadily been catching up.

The latest SQL Database will also better support larger databases -- this is the age of Big Data, after all -- and deliver improved performance on the Premium tier.

"Internal tests on over 600 million rows of data show Premium query performance improvements of around 5x in the new preview relative to today's Premium SQL Database and up to 100x when applying the in-memory columnstore technology," Wissner said.

The new SQL Database version will start rolling out in the United States on Feb. 9 and should be available to most global datacenters by the end of that month.

Microsoft also announced simplified management of SQL Server running in Microsoft Azure Virtual Machines (VMs). For example, while mission-critical applications can benefit greatly from the SQL Server AlwaysOn high availability (HA) and disaster recovery (DR) capabilities, such environments can be difficult to set up.

"Now with new auto HA setup capabilities using the AlwaysOn Portal Template added for SQL Server in Azure VMs, this really becomes a simpler task, freeing up your valuable time and resources to focus on other business priorities," Wissner said.

Backups, patching, and the monitoring and managing of SQL Server instances were also improved, Microsoft said.

"As a company committed to maintaining the highest innovation standards for our global clients, we're always eager to test the latest features," the company quoted exec John Schlesinger at customer company Temenos as saying. "So previewing the latest version of SQL Database was a no-brainer for us. After running both a benchmark and some close-of-business workloads, which are required by our regulated banking customers, we saw significant performance gains including a doubling of throughput for large blob operations, which are essential for our customers' reporting needs."

Along with pure data-related enhancements, Microsoft also announced other Azure updates affecting its enterprise mobility offerings and Azure Media Services.

"To enhance application access management, Microsoft Azure Active Directory is introducing, in public preview, conditional access policies that can enforce multi-factor authentication per application," said company exec Vibhor Kapoor in his own blog post yesterday. Also in public preview, Connect Health helps monitor and gain insights into the identity infrastructure used to extend on-premises identities to the cloud, such as Active Directory Federation Services (AD FS).

He also announced an Azure Rights Management Services (RMS) migration toolkit to help enterprises move from Active Directory RMS or Windows RMS to Azure RMS while maintaining access to existing RMS-protected content and policies.

For Azure Media Services, Kapoor said the content protection feature is now available for live and on-demand workflows, helping to address piracy concerns.

"Whether you are looking to run your SQL Server workload in an Azure VM or via the SQL Database managed service, there's no better time than now to move your enterprise workloads to the cloud or build new applications with Microsoft Azure," Kapoor said.

Current Azure subscribers can sign up to test the preview.

Posted by David Ramel on 01/30/2015

The software arm of Dell Inc. yesterday announced new versions of a bevy of products for the caretaking and development of SQL Server relational database management systems (RDBMSes).

Dell Software updated five different tools for monitoring, managing, protecting and boosting the performance of SQL Server databases, highlighted by version 11 of its Spotlight on SQL Server Enterprise.

That tool provides operational monitoring, diagnostics, administration and automatic tuning of physical on-premises, cloud-hosted or virtualized databases.

Reflecting the new "mobile-first" industry mindset, Spotlight on SQL Server Enterprise 11 features a new mobile app for remotely diagnosing issues from a smartphone. The app is available in native versions for Apple iOS, Google Android and Microsoft Windows Phone platforms.

The updated monitoring software also adds new multi-dimensional workload analysis and a new System Center Operations Manager management pack for users of the Microsoft cross-platform datacenter management software for OSes and hypervisors.

Spotlight also works with the new Toad for SQL Server 6.5, which helps developers and DBAs check system health and performance analytics.

The Dell data management Toad tool has been updated to work with the latest versions of SQL Server 2014 Enterprise and SQL Server Express running on the Amazon EC2 cloud or the Microsoft Azure cloud, as well as other Microsoft platforms such as SQL Server Analysis Services (SSAS), Azure Marketplace, Azure Table services and SharePoint.

The company also announced "the newly released Foglight for SQL Server 5.7, for real-time and historical database performance monitoring of both virtualized and non-virtualized databases." Dell said it features the new SQL Performance Investigator for workload analytics.

Another updated tool is LiteSpeed for SQL Server 8.0 for backup and recovery, now supporting the Amazon S3 storage service and SQL Server 2014. It also has a new UI and multiple-database restore functionality.

While the aforementioned products are available now, Dell said it plans to introduce later in the year version 8.6 of SharePlex for database replication and integration.

"The updated solution enables IT staff to offload reporting, migrate data and provide immediate data integration services from Oracle databases to Microsoft SQL Server for better business insight," Dell said. The tool now provides replication support for eight platforms: Oracle, SQL Server, Apache Hadoop, SAP ASE, Open Database Connectivity (ODBC), Java Message Service (JMS), SQL flat files and XML files.

Dell said developers and DBAs will be able to see the new products at next week's PASS Summit.

Posted by David Ramel on 10/29/2014

In Microsoft's new era of openness, interoperability and increased customer options, the company continues to hedge its Big Data bets with a stream of new partnerships, services and initiatives.

The company's continued expansion of data developer services in Microsoft Azure cloud was highlighted this week by a partnership with Cloudera Inc., one of the "big three" Big Data players with enterprise offerings based on Apache Hadoop.

Cloudera Enterprise this week achieved Azure Certification to offer more Big Data options for Microsoft cloud customers, and further integration of Cloudera technology with other Microsoft data services is on tap.

"As a result of this certification, organizations will be able to launch a Cloudera Enterprise cluster from the Azure Marketplace starting Oct. 28," Microsoft said in a blog post. "Initially, this will be an evaluation cluster with access to MapReduce, HDFS and Hive. At the end of this year when Cloudera 5.3 releases, customers will be able to leverage the power of the full Cloudera Enterprise distribution including HBase, Impala, Search and Spark."

Just last week, Hortonworks Inc. -- another of the top three Hadoop vendors and a principal competitor to Cloudera -- announced Azure certification for its Hortonworks Data Platform (HDP). This expands on the partnership of Microsoft and Hortonworks, which last year teamed up for the Microsoft cloud-based Hadoop service, HDInsight, and earlier developed HDP for Windows.

Also last week, Microsoft announced HDInsight integration with Apache Storm for real-time Big Data analytics.

In the latest move with Cloudera, Azure customers will have more Big Data options, especially after Cloudera 5.3 is released in December. Then, Cloudera said, customers will be able to:

- Deploy Cloudera directly from the Microsoft Azure Marketplace.

- Import data into Cloudera from SQL Server.

- Use Microsoft Power BI for Office 365 for self-service business intelligence.

- Use Azure Machine Learning for cloud-based predictive analytics.

The SQL Server functionality is a further sign of Microsoft's tremendous effort to keep its traditional flagship relational database management system (RDBMS) relevant in the new world of Big Data analytics powered by non-relational NoSQL databases. Partnerships with Big Data vendors are key to that strategy, and Microsoft has now teamed up with two of the leading enterprise offerings in major initiatives.

"Microsoft and Cloudera are collaborating to help customers realize Big Data insights with the cloud," said Microsoft exec Scott Guthrie in a statement. "Now Azure customers can deploy Cloudera Enterprise with a few clicks, visualize their data with Microsoft Power BI and gain insights to transform their business -- all within minutes."

Stay tuned for further partnership news, possibly even with the third leading Hadoop vendor, MapR Technologies Inc.

Posted by David Ramel on 10/23/2014