
Microsoft Deep Learning Alternative: On-Device Training for ONNX Runtime

Microsoft announced on-device training of machine learning models with the open source ONNX Runtime (ORT).

ORT is a cross-platform machine-learning model accelerator that provides an interface for integrating hardware-specific libraries and can be used with models from PyTorch, TensorFlow/Keras, TFLite, scikit-learn and other frameworks.

The open source project is just one part of the company's huge AI push, which started late last year with the debut of the sentient-sounding ChatGPT chatbot from Microsoft partner OpenAI and has jump-started AI and ML development in the Microsoft dev space. ORT already powers machine learning models in key Microsoft products and services across Office, Azure and Bing, as well as in dozens of community projects.

Microsoft said ORT offers AI developers a simple way to run models across multiple hardware and software platforms, going beyond accelerating server-side inference and training to support inference on mobile devices and in web browsers. The new on-device training functionality, announced last week, extends the ORT-Mobile inference offering to edge devices, making it easier for developers to take an inference model and train it locally on-device, using data present on the device, to improve the user experience.

The new on-device training capability, the company said, allows application developers to personalize experiences for users without compromising privacy, with practical applications falling into two broad categories:

- Federated learning: This technique can be used to train global models based on decentralized data without sacrificing user privacy.

- Personalized learning: This technique involves fine-tuning models on-device to create new personalized models.
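To make the personalized-learning idea concrete, here is a minimal, framework-free sketch in plain Python. It is not the ORT training API: the function names `predict` and `fine_tune` are hypothetical, and a tiny linear model trained with stochastic gradient descent stands in for a real network. What it illustrates is the general pattern: a copy of the shared base weights is adjusted using only data that stays on the device.

```python
from typing import List, Sequence, Tuple

Example = Tuple[Sequence[float], float]  # (features, target)


def predict(weights: Sequence[float], features: Sequence[float]) -> float:
    """Linear model: weights[0] is the bias, the rest multiply the features."""
    return weights[0] + sum(w * x for w, x in zip(weights[1:], features))


def fine_tune(base: Sequence[float], local_data: Sequence[Example],
              lr: float = 0.1, epochs: int = 200) -> List[float]:
    """Hypothetical on-device personalization: per-example SGD on squared error,
    starting from a copy of the shared base weights."""
    weights = list(base)  # copy, so the shared base model is not modified
    for _ in range(epochs):
        for features, target in local_data:
            error = predict(weights, features) - target
            # Gradient of 0.5 * error**2 is error for the bias and error * x for each weight.
            weights[0] -= lr * error
            for i, x in enumerate(features, start=1):
                weights[i] -= lr * error * x
    return weights


base = [0.0, 1.0]                                   # shared starting model
local = [([0.0], 1.0), ([1.0], 3.0), ([2.0], 5.0)]  # on-device data following y = 2x + 1
personal = fine_tune(base, local)
print(personal)  # close to [1.0, 2.0]
```

The personalized weights converge toward the user's own pattern (bias 1, slope 2), while `base` is left unchanged and no training example is sent anywhere.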

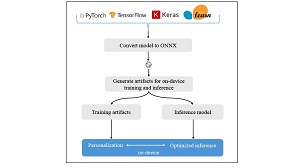

High-Level Workflow for Personalization with ONNX Runtime (source: Microsoft).

"As opposed to traditional deep learning (DL) model training, On-Device Training requires efficient use of compute and memory resources," Microsoft said in a May 31 blog post. "Additionally, edge devices vary greatly in compute and memory configurations. To support these unique needs of edge device training, we created On-Device Training capability that is framework agnostic and builds on top of the existing C++ ORT core functionality.

"With On-Device Training, application developers can now infer and train using the same binaries. At the end of a training session, the runtime produces optimized inference ready models which can then be used for a more personalized experience on the device. For scenarios like federated learning, the runtime provides model differences since the aggregation happens on the server side."
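The federated case can be sketched in the same spirit. Assuming each device uploads only the difference between its locally trained weights and the global weights it started from, a server could combine those differences with federated averaging (FedAvg), weighting each client by how many local examples it trained on. FedAvg is a swapped-in, commonly used aggregation scheme rather than something the announcement specifies, and the helper names `model_difference` and `aggregate` are hypothetical.

```python
from typing import List, Sequence


def model_difference(global_w: Sequence[float], local_w: Sequence[float]) -> List[float]:
    """What a client would upload: its trained weights minus the starting global weights."""
    if len(global_w) != len(local_w):
        raise ValueError("weight vectors must have the same length")
    return [local - glob for glob, local in zip(global_w, local_w)]


def aggregate(global_w: Sequence[float],
              deltas: Sequence[Sequence[float]],
              num_examples: Sequence[int]) -> List[float]:
    """Server-side FedAvg-style step: add the example-weighted mean of client deltas."""
    total = sum(num_examples)
    if total <= 0:
        raise ValueError("at least one client must report training examples")
    mean_delta = [0.0] * len(global_w)
    for delta, n in zip(deltas, num_examples):
        for i, d in enumerate(delta):
            mean_delta[i] += d * n / total
    return [g + m for g, m in zip(global_w, mean_delta)]


global_w = [1.0, 1.0]
deltas = [model_difference(global_w, [2.0, 1.0]),  # client A, trained on 1 example
          model_difference(global_w, [1.0, 3.0])]  # client B, trained on 3 examples
print(aggregate(global_w, deltas, [1, 3]))  # [1.25, 2.5]
```

Client B's change counts three times as much as client A's because it trained on three times as many examples, and the server only ever sees weight differences, never the raw on-device data.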

Key benefits of the approach were summarized like this:

- Memory- and performance-efficient local trainer for lower resource consumption on device (battery life, power usage, and multiple app training).

- Optimized binary size which fits strict constraints on edge devices.

- Simple APIs and multiple language bindings make it easy to scale across multiple platform targets (Now available -- C, C++, Python, C#, Java. Upcoming -- JS, Objective-C, and Swift).

- Developers can extend their existing ORT Inference solutions to enable training on the edge.

- The same ONNX model and runtime optimizations can run across desktop, edge, and mobile devices, without having to redesign the training solution for each platform.

The dev team plans to add training support for iOS and web browsers while also enabling more optimizations to make the technique more efficient. Devs can also look forward to deep dives and tutorials in the coming months, and Microsoft is inviting feedback on the GitHub repo.

About the Author

David Ramel is an editor and writer for Converge360.