Neural Network Lab

Particle Swarm Optimization Using C#

Particle swarm optimization isn't usually seen as the first-choice technique for training a neural network but, as James McCaffrey demonstrates, it's a useful alternative.

Particle swarm optimization (PSO) is a technique for finding approximate solutions to difficult or impossible numeric optimization problems. In particular, PSO can be used to train a neural network. PSO is loosely based on the behavior of groups such as flocks of birds or schools of fish. This article explains PSO and presents a complete demo program in C#.

A neural network is essentially a complex function that accepts numeric inputs and generates numeric outputs. A fully connected neural network with m inputs, h hidden nodes and n outputs has (m * h) + (h * n) + (h + n) weights and biases. Training a neural network is the process of finding values for the weights and biases so that for a given set of training inputs, the computed outputs of the network closely match (in other words, have minimal error) the known outputs associated with the training inputs. So, training a neural network is essentially a numerical optimization problem.

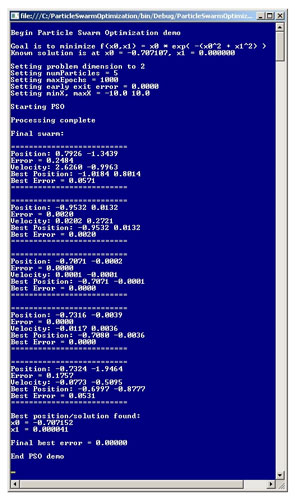

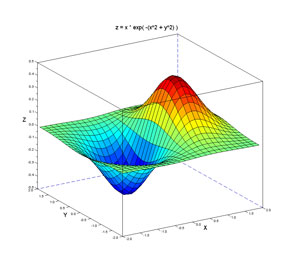

In order to use PSO to train a neural network, you must have a solid grasp of PSO. Because neural networks are fairly complicated, it's best to learn about PSO by using a simpler problem than training a neural network. Take a look at the screenshot of a demo program in Figure 1, and the graph of a function in Figure 2.

[Click on image for larger view.]

Figure 1. Particle Swarm Optimization Demo

[Click on image for larger view.]

Figure 1. Particle Swarm Optimization Demo

[Click on image for larger view.]

Figure 2. Dummy Double-Dip Function to Minimize

[Click on image for larger view.]

Figure 2. Dummy Double-Dip Function to Minimize

The image in Figure 2 is the graph of the function:

z = x * exp( -(x^2 + y^2) )

This is just a relatively simple function that can be used to test different numerical optimization techniques. The function has a known minimum value of z = -0.4288819 at x = -0.7071068 and y = 0.0. The screenshot in Figure 1 shows PSO in action, finding an approximate solution to the minimization optimization problem.

The demo program begins by setting up some PSO parameters. PSO is an iterative process and a particle represents a possible solution to the optimization problem being analyzed. The problem dimension is set to 2 because the function to minimize has two values, x and y (or, equivalently, x0 and x1). The number of particles is set to 5. More particles generally lead to a better solution at the expense of performance. Parameter maxEpochs is set to 1,000 and is the maximum number of processing iterations to be used. Again, more epochs can give a better solution but will take longer. Parameter exitError is set to 0.0. The exit error is a value that, if during PSO processing a solution has an error this low, the algorithm will exit early before reaching maxEpochs iterations. In the demo, because exitError is set to 0.0, the algorithm will not terminate early.

Parameters minX and maxX are constraints on the possible values of the components of a possible solution. In the demo, minX and maxX are set to -10.0 and +10.0, respectively. If you look at the graph in Figure 2, you can see that the minimum value of z occurs when both x and y are between -2.0 and +2.0, so those values could have been used. Values for minX and maxX will vary from problem to problem. In neural network training, if all input values are normalized, setting minX and maxX to -10.0 and +10.0 is a reasonable rule of thumb in most cases.

Once the PSO parameters are set, the demo programs call the PSO solving method. After 1,000 iterations, the demo displays the final values of the five particles in the swarm, along with the best solution found. In this example, PSO found a best solution of x0 = -0.707152, x1 = 0.000041 which is very close to, but not quite, the optimal solution of x0 = -0.707107, x1 = 0.000000. The best solution has a very low error of 0, rounded to five places.

The Double-Dip Function

When evaluating different numerical optimization techniques, I often use the function z = x * exp(-(x^2 + y^2)) shown in Figure 2. The function doesn't have an official name (at least, that I'm aware of), so I call it the double-dip function. The function is useful as a baseline benchmark problem because it has a single global minimum and a single global maximum, with easily computed known solutions.

Because the double-dip function has a known solution, there's no need to use PSO. PSO is best used for problems where there is no easy classical technique, such as using the calculus derivative, for finding a solution. Training a neural network is an example of such a problem. It's important to remember that PSO is not guaranteed to find an optimal solution to an optimization problem.

Overall Program Structure

To create the demo program, I launched Visual Studio and created a C# console application and named it ParticleSwarmOptimization. The demo has no significant .NET dependencies so any version of Visual Studio will work. After the template code loaded, in the Solution Explorer window I renamed file Program.cs to ParticleProgram.cs and Visual Studio automatically renamed class Program for me. At the top of the source code, I deleted all unneeded references to namespaces, leaving just the reference to System.

The overall program structure with some WriteLine statements removed and minor edits to save space is presented in Listing 1. Method Solve does most of the work and uses the PSO algorithm search for a good solution to minimize the double-dip function. Method Error accepts an array of double, which represents a possible solution and returns the squared difference between the computed value of the double-dip function and the known optimal value. Program-defined class Particle encapsulates a virtual particle. I removed all normal error-checking to keep the size of the demo program small and the main ideas clear.

Listing 1: Particle Swarm Demo Program Structure

using System;

namespace ParticleSwarmOptimization

{

class ParticleProgram

{

static void Main(string[] args)

{

Console.WriteLine("Particle Swarm Optimization");

int dim = 2; // problem dimensions

int numParticles = 5;

int maxEpochs = 1000;

double exitError = 0.0; // exit early

double minX = -10.0; // problem-dependent

double maxX = 10.0;

Console.WriteLine("\nStarting PSO");

double[] bestPos = Solve(dim, numParticles,

minX, maxX, maxEpochs, exitError);

double bestError = Error(bestPosition);

Console.WriteLine("Best positionfound:");

for (int i = 0; i < bestPos.Length; ++i)

{

Console.Write("x" + i + " = ");

Console.WriteLine(bestPos[i].ToString("F6") + " ");

}

Console.WriteLine("");

Console.Write("Final best error = ");

Console.WriteLine(bestError.ToString("F5"));

Console.WriteLine("\nEnd PSO demo\n");

Console.ReadLine();

} // Main

static double Error(double[] x) { . . }

static double[] Solve(int dim, int numParticles, double minX,

double maxX, int maxEpochs, double exitError) { . . }

}

public class Particle { . . }

}

The Particle Class

The Particle class defines a particle for use by the PSO algorithm. Class Particle is presented in Listing 2. For simplicity, all class members are declared using public scope. Array position represents the current position of a particle, which in turn represents a possible solution to an optimization problem. Array velocity represents the current speed and direction of a particle, presumably towards a new, better position/solution. Field error is the error associated with the particle's current position. Array bestPosition holds the position of the particle which yielded the best (lowest) error value. Field bestError is the error value associated with location bestPosition.

Listing 2: The Particle Class

public class Particle

{

public double[] position;

public double error;

public double[] velocity;

public double[] bestPosition;

public double bestError;

public Particle(double[] pos, double err, double[] vel,

double[] bestPos, double bestErr)

{

this.position = new double[pos.Length];

pos.CopyTo(this.position, 0);

this.error = err;

this.velocity = new double[vel.Length];

vel.CopyTo(this.velocity, 0);

this.bestPosition = new double[bestPos.Length];

bestPos.CopyTo(this.bestPosition, 0);

this.bestError = bestErr;

}

public override string ToString()

{

string s = "";

s += "==========================\n";

s += "Position: ";

for (int i = 0; i < this.position.Length; ++i)

s += this.position[i].ToString("F4") + " ";

s += "\n";

s += "Error = " + this.error.ToString("F4") + "\n";

s += "Velocity: ";

for (int i = 0; i < this.velocity.Length; ++i)

s += this.velocity[i].ToString("F4") + " ";

s += "\n";

s += "Best Position: ";

for (int i = 0; i < this.bestPosition.Length; ++i)

s += this.bestPosition[i].ToString("F4") + " ";

s += "\n";

s += "Best Error = " + this.bestError.ToString("F4") + "\n";

s += "==========================\n";

return s;

}

}

The Particle constructor simply accepts values for a particle and copies them in. Because all fields are public, the constructor could have been omitted but, in my opinion, using an explicit constructor is a cleaner design. The ToString method is crude but useful for WriteLine-style debugging during development.

The Error Function

Method Error is defined as:

static double Error(double[] x)

{

double trueMin = -0.42888194;

double z = x[0] *

Math.Exp( -((x[0]*x[0]) + (x[1]*x[1])) );

return (z - trueMin) * (z - trueMin)

}

The method accepts a particle's position/solution as array x, computes the value of the double-dip function at position x, and returns the square of the difference between the computed solution and the known solution. In the case of neural network training, the error function will be some measure of how closely a set of network-computed output values matches a set of training data output values.

The Solve Method

Method Solve implements the PSO algorithm. The method is presented in Listing 3, located toward the end of this article. Method Solve assumes that there is an accessible error method. An alternative is to pass the error method into Solve as a delegate. Method Solve begins by setting up a Random object:

Random rnd = new Random(0);

Object rnd is used to initialize each particle's position and velocity with random values, and to randomly kill particles. Next, a collection of Particle objects is created and an array to hold the best position/solution found is allocated:

Particle[] swarm = new Particle[numParticles];

double[] bestGlobalPosition = new double[dim];

double bestGlobalError = double.MaxValue;

Recall that each particle has a best position found; array bestGlobalPosition is the best-known position of any particle in the swarm.

Next, each particle in the swarm is initialized to a random position and the error associated with the random position is determined. The key code is:

double[] randomPosition = new double[dim];

for (int j = 0; j < randomPosition.Length; ++j)

randomPosition[j] = (maxX - minX) * rnd.NextDouble() + minX;

double error = Error(randomPosition);

The next step in initialization is to create a random velocity:

double[] randomVelocity = new double[dim];

for (int j = 0; j < randomVelocity.Length; ++j)

{

double lo = minX * 0.1;

double hi = maxX * 0.1;

randomVelocity[j] = (hi - lo) * rnd.NextDouble() + lo;

}

Each component of the initial velocity is set to a small random value. I use 0.1 times minX and maxX as limits, which are somewhat arbitrary, but which have worked well for me in practice.

With a random position, associated error and random velocity set up, the current particle can be initialized:

swarm[i] = new Particle(randomPosition, error,

randomVelocity, randomPosition, error);

The call may look at bit odd at first glance. The fourth and fifth parameters to the Particle constructor are the particle's best-known position and associated error, which initially are the same as the starting position and error.

After each particle is initialized, each is checked to see if it has the best position of any of the particles in the swarm:

if (swarm[i].error < bestGlobalError)

{

bestGlobalError = swarm[i].error;

swarm[i].position.CopyTo(bestGlobalPosition, 0);

}

After swarm initialization, method Solve prepares the main PSO processing loop:

double w = 0.729; // inertia

double c1 = 1.49445; // cognitive weight

double c2 = 1.49445; // social weight

double r1, r2; // cognitive, social randomizations

double probDeath = 0.01;

int epoch = 0;

double[] newVelocity = new double[dim];

double[] newPosition = new double[dim];

double newError;

When a particle moves in PSO, a new velocity is first computed. Then, the new velocity is used to compute the new position. A particle's new velocity depends on three factors: the particle's current velocity, the particle's best-known position and the best-known position of any particle in the swarm. Variable w is called the inertia weight and determines the influence of the current velocity. Variables c1 and c2 are called the cognitive and social weights and determine the influence of the particle's best position and the global best-known position, respectively. The values used here have been suggested by PSO research and you can think of them as magic constants.

Variables r1 and r2 are values that add a randomization effect to a particle's movement, which helps to prevent the particle from becoming stuck in a dead end. Variable probDeath is set to a small value and is used to randomly kill and then regenerate a particle. The death-birth process is optional in PSO, but I've found it to be a useful enhancement.

In pseudo-code, the main PSO processing loop is:

loop until done

for each particle

compute new velocity

use velocity to compute new position

compute new error at position

check if new error is a particle best

check if new error is a swarm best

kill particle with small probability

end each particle

end loop

return best position found

The new velocity for the current particle, currP, is computed like so:

Particle currP = swarm[i];

for (int j = 0; j < currP.velocity.Length; ++j)

{

r1 = rnd.NextDouble();

r2 = rnd.NextDouble();

newVelocity[j] = (w * currP.velocity[j]) +

(c1 * r1 * (currP.bestPosition[j] - currP.position[j])) +

(c2 * r2 * (bestGlobalPosition[j] - currP.position[j]));

}

This is the heart of the PSO algorithm. If you look closely, you'll see how each component of the current particle's velocity depends on the current velocity, the particle's best-known position and the swarm's best-known position. The net effect is that particles tend to move towards better solutions, and their velocity tends to decrease as their position improves.

The new position for the current particle, currP, is computed like this:

for (int j = 0; j < currP.position.Length; ++j)

{

newPosition[j] = currP.position[j] + newVelocity[j];

if (newPosition[j] < minX)

newPosition[j] = minX;

else if (newPosition[j] > maxX)

newPosition[j] = maxX;

}

For example, suppose a particle is at (3.0, 7.0) and the new velocity is (1.0, -2.0). The particle's new position would be (4.0, 5.0). If a component of the current particle's position goes out of range, it's brought back into range.

After a particle's new position is determined, the error associated with the new position is computed then checked to see if it's a new best error for the particle or a new best error for any particle in the swarm.

The effectiveness of PSO depends to some extent on the initial positions generated for each particle. I augment basic PSO by randomly killing then regenerating particles. In pseudo-code:

generate a random value

if random value small then

generate a new random position

compute error of new position

(leave existing velocity)

check if new swarm best (by chance)

end if

The idea of randomly killing particles is to try and prevent particles from clumping together near a non-optimal solution. If the probability of death is too high, particles will die off too quickly and not reach a good position/solution. But if the probability of death is too low, repositioning will occur so infrequently that it may not be useful. I've found that a death probability between 0.01 and 0.1 is generally most effective.

Listing 3: The PSO Solve Method

static double[] Solve(int dim, int numParticles, double minX,

double maxX, int maxEpochs, double exitError)

{

// assumes accessible Error() and Particle class

Random rnd = new Random(0);

Particle[] swarm = new Particle[numParticles];

double[] bestGlobalPosition = new double[dim];

double bestGlobalError = double.MaxValue;

// swarm initialization

for (int i = 0; i < swarm.Length; ++i)

{

double[] randomPosition = new double[dim];

for (int j = 0; j < randomPosition.Length; ++j)

randomPosition[j] = (maxX - minX) *

rnd.NextDouble() + minX;

double error = Error(randomPosition);

double[] randomVelocity = new double[dim];

for (int j = 0; j < randomVelocity.Length; ++j)

{

double lo = minX * 0.1;

double hi = maxX * 0.1;

randomVelocity[j] = (hi - lo) * rnd.NextDouble() + lo;

}

swarm[i] = new Particle(randomPosition, error,

randomVelocity, randomPosition, error);

// global best position/solution?

if (swarm[i].error < bestGlobalError)

{

bestGlobalError = swarm[i].error;

swarm[i].position.CopyTo(bestGlobalPosition, 0);

}

} // initialization

double w = 0.729; // inertia weight

double c1 = 1.49445; // cognitive weight

double c2 = 1.49445; // social weight

double r1, r2; // cognitive and social randomizations

double probDeath = 0.01;

int epoch = 0;

double[] newVelocity = new double[dim];

double[] newPosition = new double[dim];

double newError;

// main loop

while (epoch < maxEpochs)

{

for (int i = 0; i < swarm.Length; ++i) // each Particle

{

Particle currP = swarm[i];

for (int j = 0; j < currP.velocity.Length; ++j)

{

r1 = rnd.NextDouble();

r2 = rnd.NextDouble();

newVelocity[j] = (w * currP.velocity[j]) +

(c1 * r1 * (currP.bestPosition[j] - currP.position[j])) +

(c2 * r2 * (bestGlobalPosition[j] - currP.position[j]));

}

newVelocity.CopyTo(currP.velocity, 0);

for (int j = 0; j < currP.position.Length; ++j)

{

newPosition[j] = currP.position[j] + newVelocity[j];

if (newPosition[j] < minX)

newPosition[j] = minX;

else if (newPosition[j] > maxX)

newPosition[j] = maxX;

}

newPosition.CopyTo(currP.position, 0);

newError = Error(newPosition);

currP.error = newError;

if (newError < currP.bestError)

{

newPosition.CopyTo(currP.bestPosition, 0);

currP.bestError = newError;

}

if (newError < bestGlobalError)

{

newPosition.CopyTo(bestGlobalPosition, 0);

bestGlobalError = newError;

}

// death?

double die = rnd.NextDouble();

if (die < probDeath)

{

for (int j = 0; j < currP.position.Length; ++j)

currP.position[j] = (maxX - minX) *

rnd.NextDouble() + minX;

currP.error = Error(currP.position);

currP.position.CopyTo(currP.bestPosition, 0);

currP.bestError = currP.error;

if (currP.error < bestGlobalError)

{

bestGlobalError = currP.error;

currP.position.CopyTo(bestGlobalPosition, 0);

}

}

} // each Particle

++epoch;

} // while

// show final swarm

Console.WriteLine("\nProcessing complete");

Console.WriteLine("\nFinal swarm:\n");

for (int i = 0; i < swarm.Length; ++i)

Console.WriteLine(swarm[i].ToString());

double[] result = new double[dim];

bestGlobalPosition.CopyTo(result, 0);

return result;

}

An Alternative to Back-Propagation

The explanation of PSO and demo code presented in this article should get you up to speed with a thorough knowledge of PSO. By far the most common technique for training a neural network is the back-propagation algorithm. Back-propagation is mathematically elegant but has some serious drawbacks. In particular, for many problems, back-propagation tends to be extremely sensitive to the values used for the learning rate and momentum. PSO is an attractive alternative to back-propagation for training neural networks.