The Data Science Lab

Multi-Class Classification Using New PyTorch Best Practices, Part 2: Training, Accuracy, Predictions

Following new best practices, Dr. James McCaffrey of Microsoft Research revisits multi-class classification for when the variable to predict has three or more possible values.

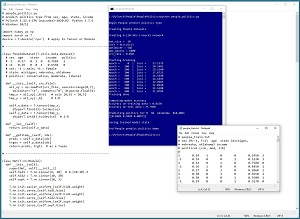

This is the second of two articles that explain how to create and use a PyTorch multi-class classifier. A good way to see where this article is headed is to examine the screenshot of a demo program in Figure 1.

The demo program predicts the political leaning (conservative, moderate, liberal) of a person. The

first article in the series explained how to prepare the training and test data, and how to define the neural network classifier. This article explains how to train the network, compute the accuracy of the trained network, use the network to make predictions, and save the network for use by other programs.

The demo begins by loading a 200-item file of training data and a 40-item set of test data. Each tab-delimited line represents a person. The fields are sex, age, state of residence (Michigan, Nebraska or Oklahoma), annual income and politics type (0 = conservative, 1 = moderate, 2 = liberal). The goal is to predict politics type from sex, age, state and income.

[Click on image for larger view.] Figure 1: Multi-Class Classification Using PyTorch Demo Run

[Click on image for larger view.] Figure 1: Multi-Class Classification Using PyTorch Demo Run

After the training data is loaded into memory, the demo creates a 6-(10-10)-3 neural network. There are six input nodes, two hidden neural layers with 10 nodes each and three output nodes.

The demo prepares to train the network by setting a batch size of 10, stochastic gradient descent (SGD) optimization with a learning rate of 0.01, and maximum training epochs of 1,000 passes through the training data. The meaning of these values and how they are determined will be explained shortly.

The demo program monitors training by computing and displaying the loss value for one epoch. The loss value slowly decreases, which indicates that training is probably succeeding. The magnitude of the loss values isn't directly interpretable; the important thing is that the loss decreases.

After 1,000 training epochs, the demo program computes the accuracy of the trained model on the training data as 81.50 percent (163 out of 200 correct). The model accuracy on the test data is 75.00 percent (30 out of 40 correct).

After evaluating the trained network, the demo predicts the politics type for a person who is male, 30 years old, from Oklahoma and who makes $50,000 annually. The prediction is [0.6905, 0.3049, 0.0047]. These values are pseudo-probabilities. The largest value (0.6905) is at index [0] so the prediction is class 0 = conservative.

The demo concludes by saving the trained model to file so that it can be used without having to retrain the network from scratch. There are two different ways to save a PyTorch model. The demo uses the save-state approach.

This article assumes you have a basic familiarity with Python and intermediate or better experience with a C-family language but does not assume you know much about PyTorch or neural networks. The complete demo program source code and data can be found in my Sept. 1 post, "Multi-Class Classification Using PyTorch 1.12.1 on Windows 10/11."

As noted, the first article in this two-part series can be found in my Visual Studio Magazine Data Science Lab.

Overall Program Structure

The overall structure of the demo program is presented in Listing 1. The demo program is named people_politics.py. The program imports the NumPy (numerical Python) library and assigns it an alias of np. The program imports PyTorch and assigns it an alias of T. Most PyTorch programs do not use the T alias, but my work colleagues and I often do so to save space. The demo program indents using two spaces rather than the more common four spaces, again to save space.

Listing 1: Overall Program Structure

# people_politics.py

# predict politics type from sex, age, state, income

# PyTorch 1.12.1-CPU Anaconda3-2020.02 Python 3.7.6

# Windows 10/11

import numpy as np

import torch as T

device = T.device('cpu')

class PeopleDataset(T.utils.data.Dataset): . . .

class Net(T.nn.Module): . . .

def accuracy(model, ds): . . .

def main():

# 0. get started

print("Begin People predict politics type ")

T.manual_seed(1)

np.random.seed(1)

# 1. create DataLoader objects

# 2. create network

# 3. train model

# 4. evaluate model accuracy

# 5. make a prediction

# 6. save model (state_dict approach)

print("End People predict politics demo")

if __name__ == "__main__":

main()

The demo program places all the control logic in a main() function. Some of my colleagues prefer to implement a program-defined train() function to handle the code that performs the training.

Preparing to Train the Network

Training a neural network is the process of finding values for the weights and biases so that the network produces output that matches the training data. Most of the demo program code is associated with training the network. The terms network and model are often used interchangeably. In some development environments, network is used to refer to a neural network before it has been trained, and model is used to refer to a network after it has been trained.

In the main() function, the training and test data are loaded into memory as Dataset objects, and then the training Dataset is passed to a DataLoader object:

# 1. create DataLoader objects

print("Creating People Datasets ")

train_file = ".\\Data\\people_train.txt"

train_ds = PeopleDataset(train_file) # 200 rows

test_file = ".\\Data\\people_test.txt"

test_ds = PeopleDataset(test_file) # 40 rows

bat_size = 10

train_ldr = T.utils.data.DataLoader(train_ds,

batch_size=bat_size, shuffle=True)

Unlike Dataset objects that must be defined for each specific multi-class problem, DataLoader objects are ready to use as-is. The batch size of 10 is a hyperparameter. The special case when batch size is set to 1 is sometimes called online training.

Although not necessary, it's generally a good idea to set a batch size that evenly divides the total number of training items so that all batches of training data have the same size. In the demo, with a batch size of 10 and 200 training items, each batch will have 20 items. When the batch size doesn't evenly divide the number of training items, the last batch will be smaller than all the others. The DataLoader class has an optional drop_last parameter with a default value of False. If set to True, the DataLoader will ignore last batches that are smaller.

It's very important to explicitly set the shuffle parameter to True. The default value is False. When shuffle is set to True, the training data will be served up in a random order which is what you want during training. If shuffle is set to False, the training data is served up sequentially. This almost always results in failed training because the updates to the network weights and biases oscillate, and no progress is made.

Creating the Network

The demo program creates the neural network like so:

# 2. create network

print("Creating 6-(10-10)-3 neural network ")

net = Net().to(device)

net.train()

The neural network is instantiated using normal Python syntax but with .to(device) appended to explicitly place storage in either "cpu" or "cuda" memory. Recall that device is a global-scope value set to "cpu" in the demo.

The network is set into training mode with the somewhat misleading statement net.train(). PyTorch neural networks can be in one of two modes, train() or eval(). The network should be in train() mode during training and eval() mode at all other times.

The train() vs. eval() mode is often confusing for people who are new to PyTorch in part because in many situations it doesn't matter what mode the network is in. Briefly, if a neural network uses dropout or batch normalization, then you get different results when computing output values depending on whether the network is in train() or eval() mode. But if a network doesn't use dropout or batch normalization, you get the same results for train() and eval() mode.

Because the demo network doesn't use dropout or batch normalization, it's not necessary to switch between train() and eval() modes. However, in my opinion it's good practice to always explicitly set a network to train() mode during training and eval() mode at all other times. By default, a network is in train() mode.

The statement net.train() is rather misleading because it suggests that some sort of training is going on. If I had been the person who implemented the train() method, I would have named it set_train_mode() instead. Also, the train() method operates by reference and so the statement net.train() modifies the net object. If you are a fan of functional programming, this will probably make you want to tear your hair out, and you can write net = net.train() instead.

Training the Network

The code that trains the network is presented in Listing 2. Training a neural network involves two nested loops. The outer loop iterates a fixed number of epochs (with a possible short-circuit exit). An epoch is one complete pass through the training data. The inner loop iterates through all training data items.

Listing 2: Training the Network

# 3. train model

max_epochs = 1000

ep_log_interval = 100

lrn_rate = 0.01

loss_func = T.nn.NLLLoss() # assumes log_softmax()

optimizer = T.optim.SGD(net.parameters(),

lr=lrn_rate)

print("bat_size = %3d " % bat_size)

print("loss = " + str(loss_func))

print("optimizer = SGD")

print("max_epochs = %3d " % max_epochs)

print("lrn_rate = %0.3f " % lrn_rate)

print("Starting training ")

for epoch in range(0, max_epochs):

# T.manual_seed(epoch+1) # reproducibility

epoch_loss = 0 # for one full epoch

for (batch_idx, batch) in enumerate(train_ldr):

X = batch[0] # inputs

Y = batch[1] # correct class/label/politics

optimizer.zero_grad()

oupt = net(X)

loss_val = loss_func(oupt, Y) # a tensor

epoch_loss += loss_val.item() # accumulate

loss_val.backward()

optimizer.step()

if epoch % ep_log_interval == 0:

print("epoch = %5d | loss = %10.4f" % \

(epoch, epoch_loss))

print("Training done ")

The five statements that prepare training are:

max_epochs = 1000

ep_log_interval = 100

lrn_rate = 0.01

loss_func = T.nn.NLLLoss()

optimizer = T.optim.SGD(net.parameters(),

lr=lrn_rate)

The number of epochs to train is a hyperparameter that must be determined by trial and error. The ep_log_interval specifies how often to display progress messages.

The loss function is set to NLLLoss(), which assumes that the output nodes have log_softmax() activation applied. There is a strong coupling between loss function and output node activation.

The demo uses stochastic gradient descent optimization (SGD) with a learning rate of 0.01, which controls how much weights and biases change on each update. PyTorch supports 13 different optimization algorithms. The two most common are SGD and Adam (adaptive moment estimation). SGD often works reasonably well for simple networks, including multi-class classifiers. Adam often works better than SGD for deep neural networks.

PyTorch beginners often fall down a technical rabbit hole trying to learn everything about every optimization algorithm. Most of my experienced colleagues use just two or three algorithms and adjust the learning rate. My recommendation is to use SGD and Adam and try other algorithms only when those two fail.

It's important to monitor training progress because training failure is the norm rather than the exception. There are several ways to monitor training progress. The demo program uses the simplest approach which is to accumulate the total loss for one epoch, and then display that accumulated loss value every so often (ep_log_interval = 100 in the demo).

The inner training loop is where all the work is done:

for (batch_idx, batch) in enumerate(train_ldr):

X = batch[0] # inputs

Y = batch[1] # correct class/label/politics

optimizer.zero_grad()

oupt = net(X)

loss_val = loss_func(oupt, Y) # a tensor

epoch_loss += loss_val.item() # accumulate

loss_val.backward()

optimizer.step()

The enumerate() function returns the current batch index (0 through 19) and a batch of input values (sex, age, state, income) with associated correct target values (0, 1 or 2). Using enumerate() is optional and you can skip getting the batch index by writing "for batch in train_ldr" instead.

The NLLLoss() loss function returns a PyTorch tensor that holds a single numeric value. That value is extracted using the item() method so it can be accumulated as an ordinary non-tensor numeric value. In early versions of PyTorch, using the item() method was required, but newer versions of PyTorch perform an implicit type-cast so the call to item() is not necessary. In my opinion, explicitly using the item() method is better coding style.

The backward() method computes gradients. Each weight and bias has an associated gradient. Gradients are numeric values that indicate how an associated weight or bias should be adjusted so that the error/loss between computed outputs and target outputs is reduced. It's important to remember to call the zero_grad() method before calling the backward() method. The step() method uses the newly computed gradients to update the network weights and biases.

Most multi-class neural classifiers can be trained in a relatively short time. In situations where training takes several hours or longer, you should periodically save the values of the weights and biases so that if your machine fails (loss of power, dropped network connection and so on) you can reload the saved checkpoint and avoid having to restart from scratch.

Saving a training checkpoint is outside the scope of this article. For an example and explanation of saving training checkpoints, see my "PyTorch Training Checkpoint Exact Recovery Reproducibility" blog post.

Computing Model Accuracy

The demo uses a program-defined function to compute model classification accuracy. The function is presented in Listing 3. Computing accuracy is relatively simple in principle. In pseudo-code:

loop each training data item

get the inputs and correct target class

compute predicted class

if predicted == target then

num_correct += 1

else

num_wrong += 1

end-if

end-loop

return num_correct / (num_correct + num_wrong)

This approach processes each data item one at a time, which allows you to add debugging print() statements to see which data items are incorrectly predicted by the model.

Listing 3: Computing Model Classification Accuracy

def accuracy(model, ds):

# assumes model.eval()

# item-by-item version

n_correct = 0; n_wrong = 0

for i in range(len(ds)):

X = ds[i][0].reshape(1,-1) # make it a batch

Y = ds[i][1].reshape(1) # 0 1 or 2, 1D

with T.no_grad():

oupt = model(X) # logits form

big_idx = T.argmax(oupt) # 0 or 1 or 2

if big_idx == Y:

n_correct += 1

else:

n_wrong += 1

acc = (n_correct * 1.0) / (n_correct + n_wrong)

return acc

Although simple in principle, there are a few tricky details to deal with when computing classification accuracy. The accuracy() function accepts two parameters: the network/model to evaluate and a Dataset object that holds training or test data. Instead of using a DataLoader to serve up data items, the accuracy() function iterates through the Dataset argument directly. An alternative approach is to instantiate a local DataLoader with shuffle set to False and batch_size set to 1.

Each data item is a Tuple where the predictor values are at position [0] and the target class label is at position [1]. But when fetched directly, the predictors and targets have the wrong shape. The predictors must be reshaped into a batch of size 1. The -1 argument in the call to reshape() means "whatever is left over," which in the case of the demo data is 6, the number of input values. The target value is reshaped to a 1-dimensional tensor. Dealing with errors related to the shapes of inputs and outputs can be very time-consuming during design and development.

Because the network applies log_softmax() to the output nodes, the predicted output is a PyTorch tensor of log_softmax() values, for example [-1.1315, -0.4618, -3.0511]. Neural network output values that do not sum to 1 are often called logits. The index of the largest logit value is the predicted class. The index of the largest value in a tensor can be found using the built-in torch.argmax() function.

The output of the neural network is called inside a torch.no_grad() block. Briefly, when computing the output of a neural network, you do not use torch.no_grad() during training (because you want gradients to be computed) but you do use torch.no_grad() when not training (because you don't need the gradients to be updated).

The demo program calls the accuracy() function after training using these statements:

# 4. evaluate model accuracy

print("Computing model accuracy ")

net.eval()

acc_train = accuracy(net, train_ds) # item-by-item

print("Accuracy on training data = %0.4f" % acc_train)

acc_test = accuracy(net, test_ds)

print("Accuracy on test data = %0.4f" % acc_test)

Notice that the network is set to eval() mode before calling the accuracy function. In this example, setting eval() mode isn't necessary, but doing so is good style in my opinion.

The demo accuracy() function iterates one data item at a time. For large datasets, this can be very slow. An alternative approach is to process all data items at once:

def accuracy_quick(model, dataset):

X = dataset[0:len(dataset)][0] # all inputs

Y = dataset[0:len(dataset)][1] # all targets

with T.no_grad():

oupt = model(X) # all logits

arg_maxs = T.argmax(oupt, dim=1) # all predicteds

num_correct = T.sum(Y==arg_maxs)

return (num_correct * 1.0 / len(dataset))

The demo accuracy() function computes an overall accuracy for all three classes. In a non-demo scenario, it's often a good idea to compute an accuracy value for each class. For an example, see my "PyTorch Multi-Class Accuracy by Class" blog post.

Using the Model

After the network classifier has been trained, the demo program uses the model to make a politics-type prediction for a new, previously unseen person:

# 5. make a prediction

print("Predicting politics for M 30 oklahoma $50,000: ")

X = np.array([[-1, 0.30, 0,0,1, 0.5000]], dtype=np.float32)

X = T.tensor(X, dtype=T.float32).to(device)

with T.no_grad():

logits = net(X) # do not sum to 1.0

probs = T.exp(logits) # sum to 1.0

probs = probs.numpy() # numpy vector prints better

np.set_printoptions(precision=4, suppress=True)

print(probs)

The input is a person who is male, 30 years old, lives in Oklahoma and makes $50,000 annually. Because the network was trained on normalized and encoded data, the input must be normalized and encoded in the same way.

Notice the double set of square brackets. A PyTorch network expects input to be in the form of a batch. The extra set of brackets creates a data item with a batch size of 1. Details like this can take a lot of time to debug.

Because the neural network has log_softmax() activation on the output nodes, the predicted output is in the form of three logit values that do not sum to 1. The demo applies the exp() function to undo the log() operation which gives three tensor values that do sum to 1 and can be loosely interpreted as pseudo-probabilities. In the demo program these values are (0.6905, 0.3049, 0.0047). The demo converts the tensor values to a NumPy vector using the numpy() method only because it's easier to format for printing.

The predicted class is the index of the largest output value, in this case, [0] = conservative. If you want to print the prediction more explicitly, you can use the NumPy or PyTorch argmax() function.

Saving the Trained Model

The demo program saves the trained model using these statements:

# 6. save model (state_dict approach)

print("Saving trained model state ")

fn = ".\\Models\\people_model.pt"

T.save(net.state_dict(), fn)

print("End People predict politics demo ")

if __name__ == "__main__":

main()

The code assumes there is a directory named Models. There are two ways to save PyTorch model. You can save just the weights and biases that define the network, or you can save the entire network definition including weights and biases. The demo uses the first approach.

The model weights and biases, along with some other information, is saved in the state_dict() Dictionary object. The save() method accepts the Dictionary and a file name that indicates where to save. You can use any file name extension you wish, but .pt and .pth are two common choices.

To use the saved model from a different program, that program would have to contain the network class definition. Then the weights and biases could be loaded like so:

model = Net() # requires class definition

model.eval()

model.load_state_dict(T.load(fn))

# use model to make prediction(s)

When saving or loading a trained model, the model should be in eval() mode rather than train() mode. An alternative approach for saving a PyTorch model is to use ONNX (Open Neural Network Exchange). This allows cross-platform usage.

Wrapping Up

Multi-class classification is used when the variable to predict has three or more possible values. When the variable to predict has just two possible values, the problem is called binary classification. It is possible to use the multi-class classification techniques presented here for binary classification. However, it is more common to use techniques that are specific to binary classification. For example, sigmoid() activation rather than log_softmxc() is used for output nodes, and BCELoss() rather than NLLLoss() is used as the loss function.