The Data Science Lab

Matrix Inverse from Scratch Using QR Decomposition with C#

Dr. James McCaffrey of Microsoft Research guides you through a full-code, step-by-step tutorial on "one of the most important operations in machine learning."

Computing the inverse of a matrix is one of the most important operations in machine learning. If some matrix A has shape n-by-n, then its inverse matrix Ai is n-by-n and the matrix product of Ai * A is the n-by-n Identity matrix (1s on the upper-left to lower-right main diagonal, 0s off the diagonal).

An analogy in ordinary arithmetic is that for a number n, its inverse ni is a number such that n * ni = 1. For example, the inverse of 5 is 0.20 because 5 * 0.20 = 1.

There are several algorithms to compute a matrix inverse, and each algorithm has several variations. Three common algorithms are LUP ("lower upper permutation"), SVD ("singular value decomposition") and QR (not an acronym). This article presents a from-scratch C# language implementation of matrix inverse using the Householder version of the QR algorithm.

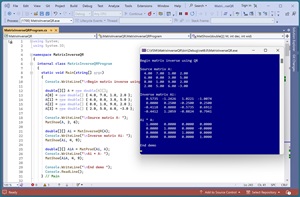

[Click on image for larger view.] Figure 1: Matrix Inverse Via QR Decomposition in Action

[Click on image for larger view.] Figure 1: Matrix Inverse Via QR Decomposition in Action

There are pros and cons of each matrix inverse algorithm. In the early days of programming, when machines had limited memory and slow processors, the practical differences between matrix inverse algorithms were far more important than today. In my subjective opinion, QR-Householder matrix inversion has medium complexity, medium stability and medium efficiency.

A good way to see where this article is headed is to take a look at the screenshot of a demo program in Figure 1. For simplicity, the demo sets up a small 4-by-4 matrix A:

4.00 7.00 1.00 2.00

6.00 0.00 3.00 5.00

8.00 1.00 9.00 2.00

2.00 5.00 6.00 -3.00

The demo computes the inverse Ai:

0.5735 -1.2426 1.0221 -1.0074

0.0000 0.2500 -0.2500 0.2500

-0.4118 0.8088 -0.5735 0.6912

-0.4412 1.2059 -0.8824 0.7941

The demo concludes by computing Ai * A to verify the result is the Identity matrix:

1.0000 0.0000 0.0000 0.0000

0.0000 1.0000 0.0000 -0.0000

0.0000 0.0000 1.0000 0.0000

0.0000 0.0000 0.0000 1.0000

The -0.0000 value at cell [1][3] indicates that the value there is a very small negative number (truncated for display), not a special negative-zero value of some sort.

This article assumes you have intermediate or better programming skill but doesn't assume you know anything about the QR-Householder matrix inverse algorithm. The demo is implemented using C#, but you should be able to refactor the demo code to another C-family language if you wish.

The source code for the demo program is long, but is presented in this article. The complete code is also available in the accompanying file download, and is available online. The demo code has all normal error checking removed to keep the main ideas clear.

The Demo Program

I used Visual Studio 2022 (Community Free Edition) for the demo program. I created a new C# console application and checked the "Place solution and project in the same directory" option. I specified .NET version 8.0. I named the project MatrixInverseQR. I checked the "Do not use top-level statements" option to avoid the program entry point shortcut syntax.

The demo has no significant .NET dependencies, and any relatively recent version of Visual Studio with .NET (Core) or the older .NET Framework will work fine. You can also use the Visual Studio Code program if you like.

After the template code loaded into the editor, I right-clicked on file Program.cs in the Solution Explorer window and renamed the file to the slightly more descriptive MatrixInverseQRProgram.cs. I allowed Visual Studio to automatically rename class Program.

The Main() method starts by loading and displaying a 4-by-4 matrix into memory as an array-of-arrays style matrix:

double[][] A = new double[4][];

A[0] = new double[] { 4.0, 7.0, 1.0, 2.0 };

A[1] = new double[] { 6.0, 0.0, 3.0, 5.0 };

A[2] = new double[] { 8.0, 1.0, 9.0, 2.0 };

A[3] = new double[] { 2.0, 5.0, 6.0, -3.0 };

Console.WriteLine("Source matrix A: ");

MatShow(A, 2, 6);

The MatShow() function is a program-defined helper. The two numeric arguments are the number of decimals to display (2) and the field width (6) for each element. For simplicity, the data is hard-coded. In a non-demo scenario, the source matrix may come from some other part of a software system, or you might load the data from a text file using a program-defined helper function:

string fn = "..\\..\\..\\Data\\source_data.txt";

double[][] X = MatLoad(fn, new int[] { 0, 1, 2, 3 },

',', "#");

The arguments to MatLoad() mean fetch columns 0 through 3 of the comma-separated data, where a "#" character indicates a comment line.

The inverse is computed and displayed like so:

double[][] Ai = MatInverseQR(A);

Console.WriteLine("Inverse matrix Ai: ");

MatShow(Ai, 4, 9);

Because the inverse function is implemented from scratch, you'll be able to modify it in many ways to meet your own needs. For example, you might want to also return the absolute value of the determinant of the matrix argument as an out parameter:

double absDet;

double[][] Ai = MatInverseQR(A, out absDet);

The demo program concludes by verifying Ai * A equals the Identity matrix:

double[][] AiA = MatProd(Ai, A);

Console.WriteLine("Ai * A: ");

MatShow(AiA, 4, 9);

A more robust verification would also compute A * Ai, and programmatically check that the product has 1s on the diagonal and 0s off-diagonal, subject to a small error.

Understanding QR Decomposition

The MatInverseQR() function is based on QR decomposition. If you have an n-by-n matrix A and apply QR decomposition, the result is an n-by-n matrix Q and an n-by-n matrix R such that Q * R = A. The Q matrix is special because its inverse equals the transpose of Q (rows and columns exchanged). The R matrix is special because it is upper triangular -- the values below the diagonal are 0s -- and its inverse is easy to compute.

To compute the inverse of a matrix A = Q * R, the math derivation is:

A = Q * R

inv(A) = inv(Q * R)

= inv(R) * inv(Q)

= inv(R) * trans(Q)

The implementation of the MatInverseQR() function is deceptively simple looking:

static double[][] MatInverseQR(double[][] M)

{

double[][] Q; double[][] R;

MatDecomposeQR(M, out Q, out R);

double[][] Rinv = MatInverseUpperTri(R); // std algo

double[][] Qtrans = MatTranspose(Q); // is inv(Q)

return MatProduct(Rinv, Qtrans);

// 10 nested helper functions go here

}

Nine of the 10 helper functions are relatively simple, but the MatDecomposeQR() function is very complicated.

Not all matrices have an inverse. A common customization is to check if the inverse of the source matrix does not exist. This happens when the determinant of the source matrix is 0. The determinant is 0 when any element on the diagonal of the R matrix is 0, or equivalently, if the product of the diagonal elements of R is 0.

For example, you can add code similar to this:

double absDet = 1.0;

for (int i = 0; i < M.Length; ++i)

absDet *= R[i][i];

if (absDet == 0.0)

Console.WriteLine("Inverse does not exist ");

The product of the diagonal elements of R is the absolute value of the determinant of the source matrix, and so QR decomposition cannot be used to compute the determinant. But you can check if the determinant is 0 or not.

The QR-Householder algorithm is not at all obvious. The Wikipedia entry on "QR Decomposition" has a good explanation of the algorithm, with a worked example.

The Complete Demo Program

The structure of the demo program is presented in Listing 1. The MatInverseQR() contains 10 nested helper functions. The MatProd() function is used to compute Ai * A to verify the inverse result is correct. The MatLoad() function isn't called by the demo but is useful in non-demo scenarios.

Listing 1: Structure of the Demo Program

using System;

using System.IO;

namespace MatrixInverseQR

{

internal class MatrixInverseQRProgram

{

static void Main(string[] args)

{

Console.WriteLine("Begin matrix inverse using QR ");

// set up and display a matrix A

// compute and display the inverse Ai

// compute and display Ai * A

Console.WriteLine("End demo ");

Console.ReadLine();

} // Main

static double[][] MatProd(double[][] matA,

double[][] matB) { . . }

static double[][] MatInverseQR(double[][] M) { . . }

static void MatShow(double[][] M, int dec,

int wid) { . . }

static double[][] MatLoad(string fn, int[] usecols,

char sep, string comment) { . . }

} // Program

} // ns

The code for the MatInverseQR() function is presented in Listing 2. The code for the MatDecomposeQR() helper function assumes that the source matrix is square because the helper is being used to compute a matrix inverse and inverse only applies to square matrices. There are other versions of QR decomposition functions that work for non-square matrices.

Listing 2: The MatInverseQR Function

static double[][] MatInverseQR(double[][] M)

{

// inverse using QR decomp (Householder algorithm)

double[][] Q;

double[][] R;

MatDecomposeQR(M, out Q, out R);

// TODO: check if determinant is zero (no inverse)

double[][] Rinv = MatInverseUpperTri(R); // std algo

double[][] Qtrans = MatTranspose(Q); // is inv(Q)

return MatProduct(Rinv, Qtrans);

// ----------------------------------------------------

// 10 helpers: MatDecomposeQR, MatMake, MatTranspose,

// MatInverseUpperTri, MatIdentity, MatCopy, VecNorm,

// VecDot, VecToMat, MatProduct

// ----------------------------------------------------

static void MatDecomposeQR(double[][] mat,

out double[][] q, out double[][] r)

{

// QR decomposition, Householder algorithm.

int m = mat.Length; // assumes mat is nxn

int n = mat[0].Length; // check m == n

double[][] Q = MatIdentity(m);

double[][] R = MatCopy(mat);

int end = n-1;

// if (m == n) end = n - 1; else end = n;

for (int i = 0; i < end; ++i)

{

double[][] H = MatIdentity(m);

double[] a = new double[n - i];

int k = 0;

for (int ii = i; ii < n; ++ii)

a[k++] = R[ii][i];

double normA = VecNorm(a);

if (a[0] < 0.0) { normA = -normA; }

double[] v = new double[a.Length];

for (int j = 0; j < v.Length; ++j)

v[j] = a[j] / (a[0] + normA);

v[0] = 1.0;

double[][] h = MatIdentity(a.Length);

double vvDot = VecDot(v, v);

double[][] alpha = VecToMat(v, v.Length, 1);

double[][] beta = VecToMat(v, 1, v.Length);

double[][] aMultB = MatProduct(alpha, beta);

for (int ii = 0; ii < h.Length; ++ii)

for (int jj = 0; jj < h[0].Length; ++jj)

h[ii][jj] -= (2.0 / vvDot) * aMultB[ii][jj];

// copy h into lower right of H

int d = n - h.Length;

for (int ii = 0; ii < h.Length; ++ii)

for (int jj = 0; jj < h[0].Length; ++jj)

H[ii + d][jj + d] = h[ii][jj];

Q = MatProduct(Q, H);

R = MatProduct(H, R);

} // i

q = Q;

r = R;

} // QR decomposition

static double[][] MatInverseUpperTri(double[][] U)

{

int n = U.Length; // must be square matrix

double[][] result = MatIdentity(n);

for (int k = 0; k < n; ++k)

{

for (int j = 0; j < n; ++j)

{

for (int i = 0; i < k; ++i)

{

result[j][k] -= result[j][i] * U[i][k];

}

result[j][k] /= U[k][k];

}

}

return result;

}

static double[][] MatTranspose(double[][] m)

{

int nr = m.Length;

int nc = m[0].Length;

double[][] result = MatMake(nc, nr); // note

for (int i = 0; i < nr; ++i)

for (int j = 0; j < nc; ++j)

result[j][i] = m[i][j];

return result;

}

static double[][] MatMake(int nRows, int nCols)

{

double[][] result = new double[nRows][];

for (int i = 0; i < nRows; ++i)

result[i] = new double[nCols];

return result;

}

static double[][] MatIdentity(int n)

{

double[][] result = MatMake(n, n);

for (int i = 0; i < n; ++i)

result[i][i] = 1.0;

return result;

}

static double[][] MatCopy(double[][] m)

{

int nRows = m.Length; int nCols = m[0].Length;

double[][] result = MatMake(nRows, nCols);

for (int i = 0; i < nRows; ++i)

for (int j = 0; j < nCols; ++j)

result[i][j] = m[i][j];

return result;

}

static double[][] MatProduct(double[][] matA,

double[][] matB)

{

int aRows = matA.Length;

int aCols = matA[0].Length;

int bRows = matB.Length;

int bCols = matB[0].Length;

if (aCols != bRows)

throw new Exception("Non-conformable matrices");

double[][] result = MatMake(aRows, bCols);

for (int i = 0; i < aRows; ++i) // each row of A

for (int j = 0; j < bCols; ++j) // each col of B

for (int k = 0; k < aCols; ++k)

result[i][j] += matA[i][k] * matB[k][j];

return result;

}

static double VecDot(double[] v1, double[] v2)

{

double result = 0.0;

int n = v1.Length;

for (int i = 0; i < n; ++i)

result += v1[i] * v2[i];

return result;

}

static double VecNorm(double[] vec)

{

int n = vec.Length;

double sum = 0.0;

for (int i = 0; i < n; ++i)

sum += vec[i] * vec[i];

return Math.Sqrt(sum);

}

static double[][] VecToMat(double[] vec,

int nRows, int nCols)

{

double[][] result = MatMake(nRows, nCols);

int k = 0;

for (int i = 0; i < nRows; ++i)

for (int j = 0; j < nCols; ++j)

result[i][j] = vec[k++];

return result;

}

} // MatInverseQR

The Helper Functions

The purpose of most of the 10 helper functions should be clear from their names or by examining the source code.

Helper function MatInverseUpperTri() is used to compute the inverse of the R matrix that results from QR decomposition. The technique used to compute the inverse of an upper triangular or lower triangular matrix is called back-substitution.

The VecNorm(v) function computes the norm() of a vector, which is the square root of the sum of the squared elements of vector v. The VecDot(v1, v2) function computes the dot product of vectors v1 and v2, which is the sum of the products of corresponding elements of v1 and v2.

Wrapping Up

Inverting a matrix using QR decomposition is a good, general-purpose approach. For special types of matrices, there are more efficient algorithms. If the source matrix is 3-by-3 or smaller, then it's possible to compute the inverse using brute force. If you need the determinant of the source matrix, then using LUP decomposition (Crout or Doolittle algorithm) is useful. If the source matrix is a covariance matrix, then using Cholesky decomposition is efficient.

The implementation of QR-Householder matrix inverse presented in this article emphasizes simplicity and ease-of-modification over robustness and performance. Two other versions of the QR algorithm are Gram-Schmidt and Givens. Computing the inverse of a matrix, regardless of the algorithm used, can fail in many ways, and so in a production system you must add lots of error checking.

It's not feasible to list all the machine learning algorithms that use matrix inverses -- there are just too many. That said however, examples of ML algorithms that use a matrix inverse are linear regression, logistic regression, Gaussian mixture model clustering, Gaussian process regression and kernel ridge regression.