In-Depth

Exploit Multi-Core Processors with .NET 4 and Visual Studio 2010

If you want to take advantage of the power of multi-core machines, you need to start creating applications with parallel processing using PLINQ, the Task Parallel Library and the new features of Visual Studio 2010.

The old rule was, if you wanted to create a program with bugs that you could never hope to track down, you wrote it as a multithreaded application. All that has changed, thanks to the introduction of Microsoft Parallel Extensions for .NET, which provides a layer of abstraction on top of the Microsoft .NET Framework threading model.

Parallel Extensions follows the model Microsoft established with the transaction manager in COM applications and with the Entity Framework and LINQ in the area of data access. Parallel Extensions attempts to bring a sophisticated technology to the masses by building high-level support for a complex process into the .NET Framework. With multi-core processors becoming the norm, developers crave the ability to distribute their applications over all the cores on a computer.

You can access the power of Parallel Extensions either through Parallel LINQ (PLINQ) or through the Task Parallel Library (TPL). Both allow you to write one set of code for single- and multi-core computers and count on the .NET Framework to take maximum advantage of whatever platform your code eventually executes on, while protecting you from many of the usual pitfalls of creating multithreaded applications.

PLINQ extends LINQ queries to decompose a single query into multiple subqueries that are run in parallel rather than sequentially. TPL allows you to create loops with iterations running in parallel rather than one after another. While the declarative syntax of PLINQ makes it easier to create parallel processes, TPL-oriented operations will be, in general, more lightweight than PLINQ queries.

In many ways, though, choosing between TPL and PLINQ is a lifestyle choice. If you think in terms of parallel loops rather than parallel queries, it can be easier for you to design a TPL solution than a PLINQ solution.

Introducing PLINQ

For business applications, PLINQ will shine anytime you have a LINQ query that involves multiple subqueries. If you're joining rows from a table on a local database with rows from a table in another remote database, PLINQ can be very useful. In those situations, LINQ must run subqueries on each data source separately and then reconcile the results. PLINQ will distribute those subqueries over multiple processors -- if any are available -- so that they run simultaneously.

You won't use fewer processor cycles to get your result -- in fact, you'll use more -- but you'll get your result earlier. (See "Parallel Processing Won't Make Your Apps Faster," for more on the behavior of multithreaded applications.)

Even on a multi-core machine, PLINQ won't always "parallelize" a query, for two reasons. One is that your application won't always run faster when parallelized. The second reason is that, even with another layer of abstraction managing your threads, it's still possible to shoot yourself in the foot -- or someplace higher -- with parallel processing. PLINQ checks for some unsafe conditions and won't parallelize a query if it detects those conditions.

I'll be pointing out some of the problems and conditions that PLINQ won't detect but, in the end, it's your responsibility to only use PLINQ where it won't generate those untraceable bugs.

Processing PLINQ

Invoking PLINQ is easy: just call the AsParallel extension method on your data source. This example comes from an application that joins a local version of the Northwind database to the remote version to retrieve Orders based on customer information:

```vb
' le is the local Northwind context, re is the remote one
Dim ords As System.Linq.ParallelQuery(Of ParallelExtensions.Order)

ords = From c In le.Customers.AsParallel Join o In re.Orders.AsParallel
       On c.CustomerID Equals o.CustomerID
       Where c.CustomerID = "ALFKI"
       Select o
```

Because both data sources are marked AsParallel (and, in Join, if one data source is AsParallel, both must be) PLINQ will be used.
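You can try the same pattern without the two Northwind databases. The following minimal sketch joins two in-memory lists. SampleCustomer, SampleOrder, PlinqJoinDemo and OrdersFor are hypothetical names used only for illustration. As in the query above, both sides of the Join call AsParallel, so PLINQ handles the join.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Linq

' Hypothetical stand-ins for the Northwind Customer and Order entities
Public Class SampleCustomer
    Public Property CustomerID As String
End Class

Public Class SampleOrder
    Public Property OrderID As Integer
    Public Property CustomerID As String
End Class

Public Module PlinqJoinDemo
    ' Both sources are marked AsParallel, so PLINQ performs the Join
    Public Function OrdersFor(customers As List(Of SampleCustomer),
                              orders As List(Of SampleOrder),
                              customerId As String) As List(Of SampleOrder)
        Dim query As ParallelQuery(Of SampleOrder) =
            From c In customers.AsParallel()
            Join o In orders.AsParallel()
            On c.CustomerID Equals o.CustomerID
            Where c.CustomerID = customerId
            Select o
        ' Enumerating the query (here, through ToList) is what actually runs it
        Return query.ToList()
    End Function
End Module
```

The order of the returned Orders isn't guaranteed. If you need a particular order, sort the results.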

As with ordinary LINQ queries, PLINQ queries use deferred execution: Data isn't retrieved until you actually enumerate the results. That means that although the LINQ query has been declared as parallel, parallel processing doesn't occur until you process the results. So parallel execution doesn't actually occur until the following block of code, which extends the required date on each of the retrieved Order objects:

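```vb
' Enumerating ords is what actually runs the parallel query
For Each ord As Order In ords
    ' Date values are immutable, so assign the result of AddDays back
    ord.RequiredDate = ord.RequiredDate.Value.AddDays(2)
Next
```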

Under the hood, PLINQ will use one thread to execute the code in the For...Each loop, while other threads may be used to run the components of the query on as many processors as are available, up to a maximum of 64. (See "Controlling Parallelization," for more on this behavior.)
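Deferred execution is easy to observe. In this sketch, DeferredDemo, BuildQuery and ProjectionCalls are hypothetical names. The projection increments a counter each time it runs, and the counter stays at zero until the query is enumerated. Interlocked.Increment is used because PLINQ may call the lambda from several threads at once.

```vb
Imports System
Imports System.Linq
Imports System.Threading

Public Module DeferredDemo
    ' Hypothetical counter: how many times the projection has run
    Public ProjectionCalls As Integer

    ' Builds, but does not run, a parallel query over the source array
    Public Function BuildQuery(source As Integer()) As ParallelQuery(Of Integer)
        Return source.AsParallel().Select(
            Function(n As Integer)
                ' PLINQ may run this on several threads, so update atomically
                Interlocked.Increment(ProjectionCalls)
                Return n * 2
            End Function)
    End Function
End Module
```

Calling BuildQuery leaves ProjectionCalls at zero. The count rises only inside a For Each over the query, or after a call such as ToList.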

If the processing that I want to perform on each Order doesn't share state with the processing on other Orders, I can further improve responsiveness by using ForAll. ForAll is a method of the ParallelQuery returned by a PLINQ query, and it accepts a lambda expression. A For...Each loop executes on the thread that enumerates the query (typically the application's main thread). The operation passed to ForAll, by contrast, executes on the individual query threads generated by the PLINQ query:

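```vb
' The lambda runs on PLINQ's worker threads, not on the calling thread
ords.ForAll(Sub(ord)
                ord.RequiredDate = ord.RequiredDate.Value.AddDays(2)
            End Sub)
```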

Unlike my For...Each loop, which executes sequentially on a thread of its own, the code in my ForAll processing executes in parallel on the threads that are retrieving the Orders.
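ForAll runs on the query's worker threads, so anything the lambda shares must be thread-safe. This sketch uses the hypothetical names ForAllDemo and SquaresViaForAll. It collects results in a ConcurrentBag from System.Collections.Concurrent instead of a List(Of T), because List(Of T) isn't safe for concurrent Add calls.

```vb
Imports System
Imports System.Collections.Concurrent
Imports System.Linq

Public Module ForAllDemo
    ' ForAll invokes the action on PLINQ's worker threads, so the shared
    ' target must be thread-safe; ConcurrentBag supports concurrent Add calls
    Public Function SquaresViaForAll(values As Integer()) As ConcurrentBag(Of Integer)
        Dim results As New ConcurrentBag(Of Integer)
        values.AsParallel().Select(Function(n As Integer) n * n).
            ForAll(Sub(sq As Integer) results.Add(sq))
        Return results
    End Function
End Module
```

A ConcurrentBag makes no promise about the order of its items. That matches ForAll, which doesn't process items in any particular order either.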

Managing Order

As with SQL -- though everyone forgets it -- order is not guaranteed in PLINQ. The order in which PLINQ subqueries return their results depends on the unpredictable response times of the various threads. This query, for instance, is intended to retrieve the next five Orders to be shipped:

```vb
ords = From o In re.Orders.AsParallel
       Where o.RequiredDate > Now
       Select o
       Take 5
```

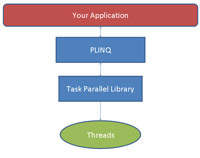

[Click on image for larger view.] |

| Figure 1. PLINQ adds query analysis and standard query operators to the functionality in the Task Parallel Library (TPL). TPL provides the basic constructs, structures and scheduling required to manage the underlying threads of the OS. |

If I don't guarantee order, I'm going to get a random collection of Orders with required dates later than the current time -- I may or may not get the first five Orders. To ensure that I'll get the first five for both SQL and PLINQ, I need to add an Order By clause to the query that sorts the dates in ascending order. And, yes, that will throw away some of the benefits of PLINQ.
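Here's a sketch of that fix against an in-memory list. ShipOrder, NextToShipDemo and NextFive are hypothetical names. The cutoff is passed in as asOf instead of reading Now, so the result is repeatable. Order By sorts the Orders before Take picks five, so the same five Orders come back on every run.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Linq

' Hypothetical stand-in for the Northwind Order entity
Public Class ShipOrder
    Public Property OrderID As Integer
    Public Property RequiredDate As Date
End Class

Public Module NextToShipDemo
    ' Returns the five orders with the earliest RequiredDate after asOf;
    ' sorting before Take makes "the first five" well defined
    Public Function NextFive(orders As IEnumerable(Of ShipOrder),
                             asOf As Date) As List(Of ShipOrder)
        Dim query As ParallelQuery(Of ShipOrder) =
            From o In orders.AsParallel()
            Where o.RequiredDate > asOf
            Order By o.RequiredDate Ascending
            Select o
            Take 5
        Return query.ToList()
    End Function
End Module
```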

Because results returned from multiple threads will turn up unexpectedly, PLINQ doesn't really understand the concept of "previous item" and "next item." If, in your loop, you use the values of one item to process the next item in the loop, you may be introducing an error into your processing. To have items processed in the order that they appeared in the original data source, you'll need to add the AsOrdered extension to the query.

For instance, if I wanted to "batch" my Orders into groups that were below a certain freight charge, I might write a loop like this:

```vb
For Each ord As Order In ords
    totFreight += ord.Freight
    If totFreight > FreightChargeLimit Then
        Exit For
    End If
    shipOrders.Add(ord)
Next
```

Because of the unpredictable order that items will be returned from parallel processes, I can't guarantee that I'm putting anything but random Orders in each batch. To guarantee that items are processed in the order they appeared in my original data source, I have to add the AsOrdered extension to my data source:

```vb
ords = From o In re.Orders.AsParallel.AsOrdered
       Where o.RequiredDate > Now
       Select o
```
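Putting the two pieces together, here's a minimal in-memory sketch of the freight batch. FreightOrder, FreightBatchDemo and FirstBatch are hypothetical names. The Freight > 0 filter stands in for whatever Where clause your real query uses. Because of AsOrdered, the loop sees the Orders in source order, so the batch always starts with the first qualifying Orders.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Linq

' Hypothetical stand-in for the Northwind Order entity
Public Class FreightOrder
    Public Property OrderID As Integer
    Public Property Freight As Decimal
End Class

Public Module FreightBatchDemo
    ' Adds orders, in source order, until the freight total would exceed the limit
    Public Function FirstBatch(orders As IEnumerable(Of FreightOrder),
                               freightChargeLimit As Decimal) As List(Of FreightOrder)
        ' AsOrdered makes PLINQ return results in the order of the source
        Dim ords As ParallelQuery(Of FreightOrder) =
            From o In orders.AsParallel().AsOrdered()
            Where o.Freight > 0D
            Select o

        Dim shipOrders As New List(Of FreightOrder)
        Dim totFreight As Decimal = 0D
        For Each ord As FreightOrder In ords
            totFreight += ord.Freight
            If totFreight > freightChargeLimit Then
                Exit For
            End If
            shipOrders.Add(ord)
        Next
        Return shipOrders
    End Function
End Module
```

AsOrdered costs some extra buffering, so use it only when the loop really depends on source order.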

Introducing TPL

If your processing isn't driven by a LINQ query, you can still use the technology that PLINQ draws on: TPL. Fundamentally, TPL lets you create loops where, instead of processing each pass through the loop after the previous pass completes, passes through the loop are executed in parallel. On a quad-core computer, for instance, a loop can potentially be completed in as little as one-quarter of the time, because four passes through the loop can run simultaneously, one on each core.

Without TPL you would write this code to process all the elements of the Orders collection:

```vb
For Each o As Order In le.Orders
    ' Date values are immutable, so assign the result of AddDays back
    o.RequiredDate = o.RequiredDate.Value.AddDays(2)
Next
```

With TPL, you call the ForEach method of the Parallel class, passing the collection and a lambda expression to process the items in the collection:

```vb
System.Threading.Tasks.Parallel.ForEach(
    le.Orders, Sub(o)
                   o.RequiredDate = o.RequiredDate.Value.AddDays(2)
               End Sub)
```

With Parallel.ForEach, the lambda can be invoked for several Orders simultaneously, each on a separate processor. In the ideal case, if each operation takes 1 millisecond and enough processors exist, all of the Orders can be processed in about 1 millisecond instead of 1 millisecond multiplied by the number of Orders. In practice, the overhead of partitioning and scheduling the work adds some time.
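Here's a self-contained version of that loop. DueOrder, ParallelForEachDemo and ExtendAll are hypothetical names. Two details matter. First, each iteration touches only its own Order, so no locking is needed. Second, Date values are immutable, so the result of AddDays has to be assigned back to RequiredDate. The sketch also skips Orders whose RequiredDate is Nothing, since reading .Value on an empty nullable throws.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Threading.Tasks

' Hypothetical stand-in for the Northwind Order entity
Public Class DueOrder
    Public Property OrderID As Integer
    Public Property RequiredDate As Date?
End Class

Public Module ParallelForEachDemo
    ' Pushes every order's RequiredDate out by the given number of days
    Public Sub ExtendAll(orders As IEnumerable(Of DueOrder), days As Integer)
        Parallel.ForEach(orders,
            Sub(o As DueOrder)
                ' Each iteration touches only its own order, so no locking is needed
                If o.RequiredDate.HasValue Then
                    ' Date is immutable: assign the new value back
                    o.RequiredDate = o.RequiredDate.Value.AddDays(days)
                End If
            End Sub)
    End Sub
End Module
```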

Any complex processing placed in a lambda expression will quickly get very difficult to read, so you'll often want to call some method from your lambda expression as this example does:

```vb
System.Threading.Tasks.Parallel.ForEach(
    le.Orders, Sub(o)
                   ExtendOrders(o)
               End Sub)

' ...

Sub ExtendOrders(ByVal o As Order)
    ' Date values are immutable, so assign the result of AddDays back
    o.RequiredDate = o.RequiredDate.Value.AddDays(2)
End Sub
```

Under the hood, TPL takes the members of the collection and partitions them into a set of separate tasks that it distributes over the cores on your computer, queuing them up for execution. As each task finishes, freeing up its core, the TPL scheduler pulls another task from the queue to keep the core busy. You can also use the Parallel.For method to create a loop based on an indexed value.
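A minimal Parallel.For sketch follows, with the hypothetical names ParallelForDemo and Squares. The loop runs its body once for each index from the first argument up to, but not including, the second. Each iteration writes only to its own array slot, so the iterations don't interfere with each other.

```vb
Imports System
Imports System.Threading.Tasks

Public Module ParallelForDemo
    ' Fills results(i) with i squared for every index from 0 to count - 1
    Public Function Squares(count As Integer) As Long()
        Dim results(count - 1) As Long
        ' The upper bound is exclusive, like a sequential For i = 0 To count - 1
        Parallel.For(0, count,
            Sub(i As Integer)
                ' Each iteration writes only its own slot, so no locking is needed
                results(i) = CLng(i) * i
            End Sub)
        Return results
    End Function
End Module
```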

However, the real power in TPL comes when you create a custom Task. You can create a Task and then launch it with its Start method. It's easier, though, to use the Task class's shared (static) Factory property, whose StartNew method both creates the Task and launches it. To use the StartNew method, you only need to pass a lambda expression. If your function returns a value, you can use the generic Task(Of TResult) class to specify the return type.

This example creates and starts a Task for each Order Detail object that calculates the Order Detail extended price. The Tasks are added to a List as they're created and launched. The code then loops through the List retrieving the results. If I ask for a result before it's calculated, the second loop will pause until that Task completes:

```vb
Dim CalcTask As System.Threading.Tasks.Task(Of Decimal)
Dim CalcTasks As New List(Of System.Threading.Tasks.Task(Of Decimal))

For Each ord As Order_Detail In le.Order_Details
    ' Copy the loop variable so each lambda captures its own Order_Detail
    Dim od As Order_Detail = ord
    CalcTask = System.Threading.Tasks.Task(Of Decimal).Factory.StartNew(Function() CalcValue(od))
    CalcTasks.Add(CalcTask)
Next

Dim totResult As Decimal

For Each ct As System.Threading.Tasks.Task(Of Decimal) In CalcTasks
    ' Result blocks until this Task has finished
    totResult += ct.Result
Next
```

If I'm lucky, every Task will complete before I ask for its result. But even if I'm unlucky, I'll still finish earlier than if I ran each Task sequentially.
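Here's a self-contained sketch of the same pattern. OrderLine, TaskListDemo and TotalOfLines are hypothetical names. CalcValue here is a stand-in that multiplies UnitPrice by Quantity, because the article doesn't show the real CalcValue. As in the original loop, the loop variable is copied into a local before the lambda captures it.

```vb
Imports System
Imports System.Collections.Generic
Imports System.Threading.Tasks

' Hypothetical stand-in for the Northwind Order_Detail entity
Public Class OrderLine
    Public Property UnitPrice As Decimal
    Public Property Quantity As Integer
End Class

Public Module TaskListDemo
    ' Hypothetical stand-in for the article's CalcValue
    Public Function CalcValue(line As OrderLine) As Decimal
        Return line.UnitPrice * line.Quantity
    End Function

    Public Function TotalOfLines(lines As IEnumerable(Of OrderLine)) As Decimal
        Dim calcTasks As New List(Of Task(Of Decimal))
        For Each item As OrderLine In lines
            ' Copy the loop variable so each lambda captures its own line
            Dim current As OrderLine = item
            calcTasks.Add(Task(Of Decimal).Factory.StartNew(Function() CalcValue(current)))
        Next

        Dim totResult As Decimal = 0D
        For Each ct As Task(Of Decimal) In calcTasks
            ' Result blocks until this task has finished
            totResult += ct.Result
        Next
        Return totResult
    End Function
End Module
```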

Where the output of a Task depends on another Task completing, you can create dependencies between Tasks or groups of Tasks. The easiest technique is to use the WaitAll method, which blocks the calling thread until all of the Tasks in an array complete:

```vb
Dim tsks() As System.Threading.Tasks.Task = {
    Task(Of Decimal).Factory.StartNew(Function() CalcValue(le.Order_Details(0))),
    Task(Of Decimal).Factory.StartNew(Function() CalcValue(le.Order_Details(1)))
}

System.Threading.Tasks.Task.WaitAll(tsks)
```
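This sketch shows the dependency in miniature, using the hypothetical names WaitAllDemo and SumAfterWaitAll. Two Tasks run independently. Task.WaitAll blocks until both have completed, and only then is their combined result computed. Once WaitAll returns, reading Result no longer blocks.

```vb
Imports System
Imports System.Threading.Tasks

Public Module WaitAllDemo
    ' Two independent Tasks; the combined result depends on both
    Public Function SumAfterWaitAll(a As Decimal, b As Decimal) As Decimal
        Dim first As Task(Of Decimal) = Task(Of Decimal).Factory.StartNew(Function() a * 2D)
        Dim second As Task(Of Decimal) = Task(Of Decimal).Factory.StartNew(Function() b * 2D)

        Dim tsks() As Task = {first, second}
        ' Blocks the calling thread until every Task in the array has completed
        Task.WaitAll(tsks)

        ' Both Tasks have finished, so reading Result returns immediately
        Return first.Result + second.Result
    End Function
End Module
```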

A more sophisticated approach is to use the ContinueWith method of a Task object. ContinueWith schedules a new Task that starts only after the original Task completes, though not necessarily on the same thread. For each Order Detail, this example creates one Task that adjusts the discount and a continuation that calculates the Order Detail's value only after the adjustment has been completed:

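```vb
For Each ordd As Order_Detail In le.Order_Details
    ' Copy the loop variable so each lambda captures its own Order_Detail
    Dim od As Order_Detail = ordd
    Dim adjustedDiscount As New Task(Sub() AdjustDiscount(od))
    ' The continuation is scheduled only after adjustedDiscount completes
    Dim calcedValue As Task(Of Decimal) =
        adjustedDiscount.ContinueWith(Of Decimal)(Function(antecedent) CalcValue(od))
    adjustedDiscount.Start()
Next
```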
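Here's a self-contained sketch of the same chain for a single line item. DetailLine, ContinueWithDemo, AdjustDiscount, CalcValue and ValueAfterAdjustment are hypothetical stand-ins, because the article doesn't show the real methods. The continuation receives the antecedent Task as its parameter and isn't scheduled until that Task completes, so CalcValue always sees the adjusted discount.

```vb
Imports System
Imports System.Threading.Tasks

' Hypothetical stand-in for the Northwind Order_Detail entity
Public Class DetailLine
    Public Property UnitPrice As Decimal
    Public Property Quantity As Integer
    Public Property Discount As Decimal
End Class

Public Module ContinueWithDemo
    ' Hypothetical stand-in: applies a fixed 10 percent discount
    Public Sub AdjustDiscount(d As DetailLine)
        d.Discount = 0.1D
    End Sub

    ' Hypothetical stand-in: extended price after the discount
    Public Function CalcValue(d As DetailLine) As Decimal
        Return d.UnitPrice * d.Quantity * (1D - d.Discount)
    End Function

    Public Function ValueAfterAdjustment(d As DetailLine) As Decimal
        Dim adjustedDiscount As New Task(Sub() AdjustDiscount(d))
        ' The continuation is scheduled only after adjustedDiscount completes;
        ' it receives the antecedent Task as its parameter
        Dim calcedValue As Task(Of Decimal) =
            adjustedDiscount.ContinueWith(Of Decimal)(Function(antecedent As Task) CalcValue(d))
        adjustedDiscount.Start()
        ' Result blocks until the continuation has produced its value
        Return calcedValue.Result
    End Function
End Module
```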

Figure 2. The Parallel Stacks window provides a graphical view of the threads that are currently executing with additional information about each one.

When Things Go Wrong

Exceptions can be raised on one or more of the threads that are executing simultaneously on multiple processors. Once an exception occurs on any thread, the parallel operation will eventually be halted. But it's that "eventually" that's the problem: During that time, exceptions may occur on other threads. Errors raised during parallel processing are collected into an AggregateException object. This object's InnerExceptions property allows you to see the exceptions thrown by each thread:

```vb
Dim Messages As New System.Text.StringBuilder

Try
    'PLINQ or TPL processing
Catch aex As AggregateException
    For Each ex As Exception In aex.InnerExceptions
        Messages.Append(ex.Message & "; ")
    Next
End Try
```

Instead of relying on a separate Catch block for each exception type, you'll need to check the type of each exception in InnerExceptions to determine what kind of error was thrown by each thread.
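This sketch uses the hypothetical names AggregateDemo and CountArgumentErrors. Some iterations of a Parallel.For loop throw, and the Catch block then checks the type of each inner exception, rethrowing anything it doesn't recognize. Flatten is called in case any inner exception is itself an AggregateException. The number of exceptions collected varies from run to run, because the loop stops as soon as it can after one iteration fails.

```vb
Imports System
Imports System.Threading.Tasks

Public Module AggregateDemo
    ' Every tenth iteration throws; returns how many ArgumentExceptions were collected
    Public Function CountArgumentErrors(count As Integer) As Integer
        Dim argErrors As Integer = 0
        Try
            Parallel.For(0, count,
                Sub(i As Integer)
                    If i Mod 10 = 0 Then
                        Throw New ArgumentException("Bad item " & i.ToString())
                    End If
                End Sub)
        Catch aex As AggregateException
            ' Flatten unwraps any nested AggregateExceptions
            For Each ex As Exception In aex.Flatten().InnerExceptions
                If TypeOf ex Is ArgumentException Then
                    argErrors += 1
                Else
                    ' Not an error this code knows how to handle
                    Throw
                End If
            Next
        End Try
        Return argErrors
    End Function
End Module
```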

Debugging concurrent threads is made more interesting because, while the exception may appear in a loop following a PLINQ query, solving the problem may require restructuring the PLINQ query. Fortunately, Visual Studio 2010 includes additional tools for debugging parallel errors.

The Parallel Stacks window goes beyond the old Threads window to provide a single view that shows all of the threads that are currently executing. For instance, it allows you by default to see the Call Stacks for several threads at the same time. You can zoom this display and filter which threads are being displayed. More importantly, if you're using TPL, you can switch to a task-based view that corresponds to the Task objects in your code or a Method View to show the Tasks calling a method.

If you're using TPL, though, the Parallel Tasks window may be more useful to you because it organizes information around Tasks. This window not only shows currently running Tasks, but also tasks that are scheduled and waiting to run (shown in the Status column). You can check to see if you've created the correct dependencies between Tasks by checking whether the currently running Task has waited until the appropriate tasks have completed.

In previous versions of Visual Studio, stepping through a multithreaded program was a nightmare as the debugger leapt from the current statement in one thread to the current statement in another thread. Parallel Tasks lets you freeze or thaw the threads associated with Tasks when debugging to control which one will run to completion.

Using these two windows together simplifies the diagnosis of parallel processing problems. For instance, Visual Studio will now "break in" when it detects a deadlock. When the debugger detects that two or more Tasks aren't proceeding because each is waiting on an object locked by another, Visual Studio freezes processing just as if you'd hit a breakpoint. The Parallel Tasks window will display what object each Task is waiting on, and which thread holds it. The Method View of the Parallel Stacks window graphically shows which Tasks were calling which methods when the deadlock occurred.

Other Debugging Features

In addition to these tools, Visual Studio includes several other features for debugging parallel processing. When stepping through your code, hovering your mouse over a Task object brings up a tooltip with details on the task's Id, the method associated with it and its current status (for example, "Waiting to Execute"). That tooltip can be further expanded to see the current value for the Task's properties, including its Result. Examining the Task's InternalCurrent property in a Watch Window lets you get to information about the currently executing Task. A TaskScheduler's tooltip can be expanded to show all of the Tasks that it's managing.

Before putting any of these tools to work, you'll also need to get up to speed on the best practices for creating applications that take advantage of parallel processing. What you've seen here, though, are your primary tools: PLINQ, TPL and Visual Studio. Together they allow you to access the power of all the processors of whatever computer your application runs on.