In-Depth

Breathe New Life for Your ASP.NET Apps with Docker, Part 2

Last time, we used Docker to modernize an ASP.NET WebForms app. In this second part, we take a feature-driven approach to extending it and improving performance.

- By Elton Stoneman

- 05/31/2017

Last time, I showed how to take a monolithic ASP.NET WebForms app that connects to a SQL Server database and modernize it by taking advantage of the Docker platform. I moved the whole app to Docker as is, without any code changes, and ran the Web site and database in lightweight containers.

This time, we'll take a feature-driven approach to extending the app, improving performance and giving users self-service analytics. With the Docker platform you'll see how to iterate with new versions of the app, upgrade the components quickly and safely, and deploy the complete solution to Microsoft Azure.

[Editor's Note: The Code Download link in part 2 is provided as a convenience; it's the same as the link in part 1.]

Splitting Features from Monolithic Apps

Now that the application is running on a modern platform, I can start to modernize the application itself. Breaking a monolithic application down into smaller services can be a significant amount of work, but you can take a more targeted approach by working on key features. Features that change regularly are good candidates, because you can deploy updates to a changed feature without regression testing the whole application. Features with non-functional requirements that would benefit from a different design, without needing a full re-architecture of the app, can also be a good choice.

I'm going to start here by fixing a performance issue. In the existing code, the application makes a synchronous connection to the database to save the user's data. That approach doesn't scale well: with lots of concurrent users, SQL Server becomes a bottleneck. Asynchronous communication through a message queue is a much more scalable design. For this feature, I can publish an event from the Web app to a message queue and move the data-persistence code into a new component that handles that event message.

This design does scale well. If I have a spike of traffic to the Web site I can run more containers on more hosts to cope with the incoming requests. Event messages will be held in the queue until the message handler consumes them. For features that don't have a specific SLA, you can have one message handler running in a single container and rely on the guarantees of the message queue that all the events will get handled eventually. For SLA-driven features you can scale out the persistence layer by running more message-handler containers.
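To make the scale-out point concrete, here's a minimal C# sketch. It assumes an in-memory `Queue<string>` as a stand-in for the real message queue and a loop standing in for handler containers; none of these names come from the sample code. It shows competing consumers: a burst of events waits in the queue, and each handler takes the next event, so adding handlers drains the backlog faster without any event being saved twice. With NATS, this load-sharing behavior is what you get when handler instances subscribe as members of the same queue group.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory stand-in for the message queue. The Web tier only
// enqueues events, so a spike in sign-ups just makes the backlog longer.
var queue = new Queue<string>();
for (var i = 1; i <= 7; i++)
{
    queue.Enqueue($"prospect-{i}");
}

// Each entry represents one message-handler container.
const int handlerCount = 3;
var handled = new Dictionary<int, List<string>>();
for (var h = 0; h < handlerCount; h++)
{
    handled[h] = new List<string>();
}

// Competing consumers: in each round, every handler takes the next event off
// the shared queue, so each event is handled by exactly one handler.
var rounds = 0;
while (queue.Count > 0)
{
    rounds++;
    for (var h = 0; h < handlerCount && queue.Count > 0; h++)
    {
        // A real handler would save the prospect to SQL Server here.
        handled[h].Add(queue.Dequeue());
    }
}

var total = handled.Values.Sum(events => events.Count);
Console.WriteLine($"{total} events handled by {handlerCount} handlers in {rounds} rounds");
```

With seven queued events and three handlers the backlog clears in three rounds; a single handler would need seven. That is the trade-off between features with an SLA and features that can simply wait for a lone handler to catch up.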

The source code that accompanies this article has folders for version 1, version 2 and version 3 of the application. In version 2, the SignUp.aspx page publishes an event when the user submits the details form:

```csharp
var eventMessage = new ProspectSignedUpEvent
{
    Prospect = prospect,
    SignedUpAt = DateTime.UtcNow
};

MessageQueue.Publish(eventMessage);
```

Also in version 2 there's a shared messaging project that abstracts the details of the message queue, and a console application that listens for the event published by the Web app and saves the user's data to the database. The persistence code in the console app is directly lifted from the version 1 code in the Web app, so the implementation is the same but the design of the feature has been modernized.
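The shared messaging project isn't reproduced here, so the following is only a hypothetical sketch of the kind of abstraction it provides. It assumes an in-memory dictionary of subscribers in place of a NATS connection, and the subject name `events.prospect.signedup` and the `Email` field on the prospect are illustrative. What it shows is that the Web app and the handler share nothing but a subject and a serialized message: the Web side publishes JSON bytes, and the console app's handler deserializes them and does the work the version 1 code did inline.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical in-memory stand-in for the shared messaging project.
// A real implementation would hand the bytes to a NATS client instead.
var subscribers = new Dictionary<string, List<Action<byte[]>>>();

void Subscribe(string subject, Action<byte[]> handler)
{
    if (!subscribers.TryGetValue(subject, out var handlers))
    {
        handlers = new List<Action<byte[]>>();
        subscribers[subject] = handlers;
    }
    handlers.Add(handler);
}

void Publish(string subject, object message)
{
    // Messages cross process boundaries as bytes, so serialize to JSON.
    var payload = JsonSerializer.SerializeToUtf8Bytes(message, message.GetType());
    if (subscribers.TryGetValue(subject, out var handlers))
    {
        foreach (var handler in handlers)
        {
            handler(payload);
        }
    }
}

// Console app side: deserialize the event and persist it.
var saved = new List<string>();
Subscribe("events.prospect.signedup", payload =>
{
    using var doc = JsonDocument.Parse(payload);
    var email = doc.RootElement.GetProperty("Prospect").GetProperty("Email").GetString();
    saved.Add(email!); // stand-in for the version 1 SQL Server code
});

// Web app side, mirroring the SignUp.aspx snippet (hypothetical Email field).
Publish("events.prospect.signedup", new
{
    Prospect = new { Email = "jane@example.com" },
    SignedUpAt = DateTime.UtcNow
});

Console.WriteLine(string.Join(", ", saved));
```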

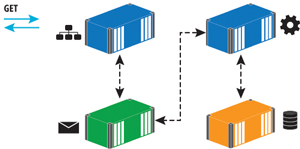

The new version of the application is a distributed solution with many working parts, as shown in Figure 1.

Figure 1: The Modernized Application Has Many Working Parts

There are dependencies between the components, and they need to be started in the correct order for the solution to work properly. This is one of the problems of orchestrating an application running across many containers, but the Docker platform deals with that by treating distributed applications as first-class citizens.
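To illustrate what "the correct order" means, here's a small C# sketch that derives a start order from `depends_on`-style declarations with a depth-first topological sort. It illustrates the idea and is not Docker Compose's implementation. The `save-prospect-handler` service name is hypothetical, because the handler's service isn't named here, and its dependencies are assumed from the components it talks to.

```csharp
using System;
using System.Collections.Generic;

// Version 2 dependencies, as they would be declared with depends_on.
// "save-prospect-handler" is a hypothetical service name.
var dependsOn = new Dictionary<string, string[]>
{
    ["product-launch-web"] = new[] { "sql-server", "message-queue" },
    ["save-prospect-handler"] = new[] { "sql-server", "message-queue" },
    ["sql-server"] = Array.Empty<string>(),
    ["message-queue"] = Array.Empty<string>(),
};

var startOrder = new List<string>();
var visiting = new HashSet<string>();
var started = new HashSet<string>();

// Depth-first topological sort: start every dependency before the service itself.
void Start(string service)
{
    if (started.Contains(service)) return;
    if (!visiting.Add(service))
        throw new InvalidOperationException($"Dependency cycle at {service}");

    foreach (var dependency in dependsOn[service])
        Start(dependency);

    visiting.Remove(service);
    started.Add(service);
    startOrder.Add(service);
}

foreach (var service in dependsOn.Keys)
    Start(service);

Console.WriteLine(string.Join(" -> ", startOrder));
```

Keep in mind that `depends_on` controls the order in which containers start, not whether SQL Server inside its container is ready to accept connections, so components may still need to retry their connections at startup.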

Orchestrating Applications with Docker Compose

Docker Compose is the part of the Docker platform that focuses on distributed applications. You define all the parts of your application as services in a simple text file, including the dependencies between them and any configuration values they need. This is part of the Docker Compose file for version 2, showing just the configuration for the Web app:

```yaml
product-launch-web:
  image: sixeyed/msdn-web-app:v2
  ports:
    - "80:80"
  depends_on:
    - sql-server
    - message-queue
  networks:
    - app-net
```

Here, I'm specifying the version of the image to use for my Web application. I publish port 80 and then I explicitly state that the Web app depends on the SQL Server and message queue containers. To reach these containers, the Web container needs to be in the same virtual Docker network, so all the containers in the Docker Compose file are joined to the same virtual network, called app-net.

Elsewhere in the Docker Compose file I define a service for SQL Server, using the Microsoft image on Docker Hub, and a message queue service using NATS, a high-performance open source messaging system that's available as an official image on Docker Hub. The final service is for the message handler, which is a .NET console application packaged as a Docker image with a simple Dockerfile.

Now I can run the application using the Docker Compose command line:

```
docker-compose up -d
```

Docker Compose then starts containers for each of the components in the right order, giving me a working solution from a single command. Anyone with access to the Docker images and the Docker Compose file can run the application and it will behave in the same way, whether that's on a Windows 10 laptop or on a Windows Server 2016 machine in the datacenter or on Azure.

For version 2, I made a small change to the application code to move a feature implementation from one component to another. The end-user behavior is the same, but now the solution is easily scalable, because the Web tier is decoupled from the data tier and the message queue absorbs any spikes in traffic. The new design is also easy to extend: I've introduced an event-driven architecture, so I can trigger new behavior by plugging in to the existing event messages.

Adding Self-Service Analytics

For my sample app, I'm going to make one more change to show how much you can do with the Docker platform, with very little effort. The app currently uses SQL Server as a transactional database, and I'm going to add a second data store as a reporting database. This lets me keep reporting concerns separate from transactional concerns, and it gives me a free choice of technology stack for reporting.

In version 3 of the sample code, I've added a new .NET console app that listens for the same event messages published by the Web application. When both console apps are running, the NATS message queue will ensure they both get a copy of all events. The new console app receives the events and saves the user data in Elasticsearch, an open source document store you can run in a Windows Docker container. Elasticsearch is a good choice here because it scales well, so I can cluster it across multiple containers for redundancy, and because it has an excellent user-facing front end available called Kibana.
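Here's a minimal in-memory sketch of that fan-out, assuming a plain list of subscriber callbacks as a stand-in for NATS subscriptions. Version 3 adds a second subscriber, and the publishing code doesn't change at all. The handler bodies only record what they receive, in place of writing to SQL Server and Elasticsearch. The two handlers need independent subscriptions: if they joined the same NATS queue group, each event would go to only one of them.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for plain publish/subscribe: every subscriber
// on the subject receives its own copy of each message.
var handlers = new List<Action<string>>();

void Publish(string prospectEmail)
{
    foreach (var handler in handlers)
        handler(prospectEmail);
}

var sqlRows = new List<string>();   // version 2 handler: SQL Server
var indexDocs = new List<string>(); // version 3 handler: Elasticsearch

handlers.Add(email => sqlRows.Add(email));
// Version 3 only adds this subscription; Publish is untouched.
handlers.Add(email => indexDocs.Add(email));

Publish("jane@example.com");
Publish("raj@example.com");

Console.WriteLine($"SQL rows: {sqlRows.Count}, index documents: {indexDocs.Count}");
```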

I haven't made any changes to the Web application or the SQL Server message handler from version 2, so in my Docker Compose file I just add new services for Elasticsearch and Kibana, and for the new message handler that writes documents to the Elasticsearch index:

```yaml
index-prospect-handler:
  image: sixeyed/msdn-index-handler:v3
  depends_on:
    - elasticsearch
    - message-queue
  networks:
    - app-net
```

Docker Compose can make incremental upgrades to an application: it won't replace running containers if their definition still matches the service in the Docker Compose file. In version 3 of the sample application there are new services but no changes to the existing ones, so when I run docker-compose up -d, Docker starts new containers for Elasticsearch, Kibana and the index message handler and leaves the others running as is. That makes for a very safe upgrade process, where you can add features without taking the application offline.
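The following simplified sketch shows the reconciliation idea behind that upgrade: compare the running services with the desired ones, create what's missing, recreate what changed and leave the rest alone. Compose itself tracks a hash of each service's full configuration; here each definition is reduced to an image name. Only the two `sixeyed/msdn-*` tags come from the Compose files shown above; the other values and the `save-prospect-handler` name are placeholders.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Services running from the version 2 Compose file, reduced to image names.
// Values other than the sixeyed/msdn-* tags are placeholders.
var running = new Dictionary<string, string>
{
    ["sql-server"] = "sql-server-image",
    ["message-queue"] = "nats-image",
    ["product-launch-web"] = "sixeyed/msdn-web-app:v2",
    ["save-prospect-handler"] = "save-handler-image",
};

// Version 3 keeps every existing definition and adds three services.
var desired = new Dictionary<string, string>(running)
{
    ["elasticsearch"] = "elasticsearch-image",
    ["kibana"] = "kibana-image",
    ["index-prospect-handler"] = "sixeyed/msdn-index-handler:v3",
};

// Create what's missing, recreate what changed, leave identical definitions alone.
var toCreate = desired.Keys.Where(s => !running.ContainsKey(s)).ToList();
var toRecreate = desired.Keys
    .Where(s => running.TryGetValue(s, out var current) && current != desired[s])
    .ToList();
var unchanged = desired.Keys
    .Where(s => running.TryGetValue(s, out var current) && current == desired[s])
    .ToList();

Console.WriteLine($"create: {string.Join(", ", toCreate)}");
Console.WriteLine($"recreate: {string.Join(", ", toRecreate)}");
Console.WriteLine($"leave running: {string.Join(", ", unchanged)}");
```

Setting `desired["product-launch-web"]` to a new tag would move that service into the recreate list, which is how a new version of a single component would be rolled out while everything else keeps running.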

This application prefers convention over configuration, so the host names for dependencies like Elasticsearch are set as defaults in the app, and I just need to make sure the service names in the Docker Compose file match them.
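A minimal sketch of that convention, assuming the app falls back to the Compose service names `elasticsearch` and `message-queue` as host names, with the default ports for Elasticsearch (9200) and NATS (4222). The environment variable names are hypothetical overrides, not settings from the sample code; the point is that nothing needs configuring when the service names match.

```csharp
using System;

// Defaults follow the Compose service names, so containers on the same
// network resolve them without extra configuration.
// ELASTICSEARCH_URL and MESSAGE_QUEUE_URL are hypothetical override variables.
string ElasticsearchUrl() =>
    Environment.GetEnvironmentVariable("ELASTICSEARCH_URL") ?? "http://elasticsearch:9200";

string MessageQueueUrl() =>
    Environment.GetEnvironmentVariable("MESSAGE_QUEUE_URL") ?? "nats://message-queue:4222";

Console.WriteLine(ElasticsearchUrl());
Console.WriteLine(MessageQueueUrl());
```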

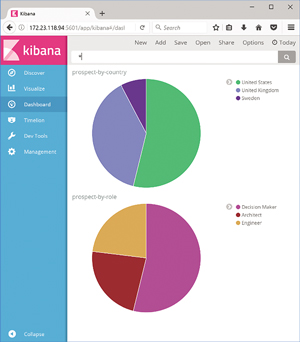

When the new containers have started, I can use "docker inspect" to get the IP address of the Kibana container and browse to port 5601 on that address. Kibana has a very simple interface, and in a few minutes I can build a dashboard that shows the key metrics for people signing up with their details, as shown in Figure 2.

Figure 2: A Kibana Dashboard

Power users will quickly find their way around Kibana, and they'll be able to make their own visualizations and dashboards without needing to involve IT. Without any downtime I've added self-service analytics to the application. The core of that feature comes from enterprise-grade open source software I've pulled from Docker Hub into my solution. The custom component to feed data into the document store is a simple .NET console application, with around 100 lines of code. The Docker platform takes care of plugging the components together.
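As a rough idea of the core work in that console app, here's a sketch of mapping an incoming event to a flat document ready to index. The event shape and the `Country` and `Role` fields are assumptions for illustration, and the HTTP call that actually writes to Elasticsearch is left out. Producing one document per sign-up with simple top-level fields keeps things easy for power users picking fields to aggregate on in Kibana.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical event payload; the prospect fields are illustrative only.
var eventJson =
    "{\"Prospect\":{\"Email\":\"jane@example.com\",\"Country\":\"Denmark\",\"Role\":\"Developer\"}," +
    "\"SignedUpAt\":\"2017-05-31T10:15:00Z\"}";

using var doc = JsonDocument.Parse(eventJson);
var prospect = doc.RootElement.GetProperty("Prospect");

// One flat document per sign-up, with top-level fields to aggregate on.
var indexDocument = new Dictionary<string, object?>
{
    ["email"] = prospect.GetProperty("Email").GetString(),
    ["country"] = prospect.GetProperty("Country").GetString(),
    ["role"] = prospect.GetProperty("Role").GetString(),
    ["signedUpAt"] = doc.RootElement.GetProperty("SignedUpAt").GetDateTime(),
};

// This JSON body is what the handler would send to the Elasticsearch index.
var body = JsonSerializer.Serialize(indexDocument);
Console.WriteLine(body);
```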

Running Dockerized Solutions on Azure

Another great benefit of Docker is portability. Applications packaged into Docker images will run the exact same way on any host. The final application for this article uses the Windows Server and SQL Server images owned by Microsoft; the NATS image curated by Docker; and my own custom images. All those images are published on the Docker Hub, so any Windows 10 or Windows Server 2016 machine can pull the images and run containers from them.

Now my app is ready for testing, and deploying it to a shared environment on Azure is simple. I've created a virtual machine (VM) in Azure using the Windows Server 2016 Datacenter with Containers option. That VM image comes with Docker installed and configured, and the base Docker images for Windows Server Core and Nano Server already downloaded. One item not included in the VM is Docker Compose, which I downloaded from the GitHub release page.

The images used in my Docker Compose file are all in public repositories on Docker Hub. For a private software stack, you won't want all your images publicly available. You can still use Docker Hub and keep images in private repositories, or you could use an alternative hosted registry like Azure Container Registry. Inside your own datacenter you can use an on-premises option, such as Docker Trusted Registry.

Because all my images are public, I just need to copy the Docker Compose file onto the Azure VM and run docker-compose up -d. Docker will pull all the images from the Hub, and run containers from them in the correct order. Each component uses conventions to access the other components, and those conventions are baked into the Docker Compose file, so even on a completely fresh environment, the solution will just start and run as expected.

If you've worked on enterprise software releases, where setting up a new environment is a manual, risky and slow process, you'll see how much benefit is to be had from Windows Server 2016 and the Docker platform. The key artifacts in a Docker solution (the Dockerfile and the Docker Compose file) are simple, unambiguous replacements for manual deployment documents. They encourage automation and they make it straightforward to build, ship and run a solution in a consistent way on any machine.

Next Steps

If you're keen to try Docker for yourself, the Image2Docker PowerShell module is a great place to start; it can build a Dockerfile for you and jump-start the learning process. There are some great, free, self-paced courses on training.docker.com, which provisions an environment for you. Then, when you're ready to move on, check out the Docker Labs on GitHub, which has plenty of Windows container walk-throughs.

There are also Docker Meetups all over the world where you can hear practitioners and experts talk about all aspects of Docker. The big Docker conference is DockerCon, which is always a sell-out; this year it was in Texas in April, but another one is taking place in Copenhagen in October.

Last, check out the Docker Captains: they're the Docker equivalent of Microsoft MVPs. They're constantly blogging, tweeting and speaking about all the cool things they're doing with Docker, and following them is a great way to keep a pulse on the technology.

Note: Thanks to Mark Heath, who reviewed this article.

Mark Heath is a .NET developer specializing in Azure, creator of NAudio, and an author for Pluralsight. He blogs at markheath.net and you can follow him on Twitter @mark_heath.

About the Author

Elton Stoneman is a seven-time Microsoft MVP and a Pluralsight author who works as a developer advocate at Docker. He has been architecting and delivering successful solutions with Microsoft technologies since 2000, most recently API and Big Data projects in Azure, and distributed applications with Docker.