VS Live! Keynote: Optimization and Deployment After Coding an Angular Web App

After coding an Angular JavaScript app, there's still a lot of work to be done to move it to the cloud, and Microsoft's John Papa explained how to use various tools to ease that process at last week's Visual Studio Live! conference in Orlando.

Papa, principal developer advocate at Microsoft, demonstrated various tools and techniques to optimize, build and deploy an Angular app to Azure, "Because it's not real until it gets to production."

"We like to write our code, but our managers like to see it shipped," Papa said in a conference keynote presentation. "Our business users like to see it happen. Our security folks like to make sure that it's all tight and secure when it gets deployed to the Web. And one of the most important things is that when we have changes to make, we like to make sure that those changes that work on my machine work on every machine."

He explained in hands-on detail exactly how to prepare an app for the cloud, using tools like Node.js, Visual Studio Code, Docker containers, Continuous Integration/Continuous Deployment (CI/CD) techniques and more for the optimize -> build -> deploy workflow.

Papa questioned if developers always think about optimizing their code after a project is complete in order to eke out the best performance possible and avoid a slow-loading site and other problems.

"People get scared about that word optimization because what is it exactly that you're going to do?" he said. "Does it just mean that you're going to bundle your code and minify it? There's actually a lot more that we can do."

John Papa at Visual Studio Live! Keynote

To do those things, a command-line interface (CLI) comes with leading JavaScript libraries and frameworks like Angular, React and Vue.js, because there's a lot more to finalizing JavaScript apps than inserting script tags like in the old days, Papa said.

"Now there's so much more going on, they're trying to wrap up, to make it easier for everybody to run, create, build, test, deploy their applications."

For Angular, he explained how the judicious use of CLI "ng build" commands with different flags can optimize code, combine and shrink files and much more. Getting into the nitty-gritty details, he demoed an optimization by setting different flags on the CLI to work with various files, with one about 40 KB in size and the largest clocking in at about 2.7 MB. The CLI "prod" flag combined and optimized those files.

"So the 2.7 meg and the 40k from our old code actually gets trimmed down to about 400k total, so we go from about 2.7 megs down to 500k, an 80 percent reduction, simply by running a prod flag inside of a library."

Even better was a brand-new "build-optimizer" flag that does "tree shaking," or removing "dead" code that isn't used, much like dead leaves fall off a shaken tree. "So now we're going from about 3 megs down to about 300k, almost a 90 percent reduction of our code simply by running flags on our CLI."
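The reductions quoted above can be checked with a little arithmetic. The following is a minimal sketch, not anything shown in the keynote: the function name `percentReduction` is made up for illustration, the sizes are the approximate figures Papa cited, and 1 MB is assumed to be 1,000 KB.

```typescript
// Percentage reduction from an original bundle size to an optimized one.
// Sizes are in kilobytes. The figures below are the approximate ones quoted
// in the keynote, with 1 MB assumed to be 1,000 KB.
function percentReduction(beforeKb: number, afterKb: number): number {
  if (beforeKb <= 0) {
    throw new Error("beforeKb must be positive");
  }
  return ((beforeKb - afterKb) / beforeKb) * 100;
}

// "prod" flag: about 2.7 MB down to about 500 KB.
const prodFlagReduction = percentReduction(2700, 500);

// "build-optimizer" flag: about 3 MB down to about 300 KB.
const buildOptimizerReduction = percentReduction(3000, 300);

console.log(prodFlagReduction.toFixed(1));       // 81.5
console.log(buildOptimizerReduction.toFixed(1)); // 90.0
```

Those results line up with the "80 percent" and "almost a 90 percent" figures in the quotes.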

Other useful tools demoed by Papa included source-map-explorer and webpack-bundle-analyzer, both available via the npm JavaScript package manager. They're especially handy for further investigating your code after using automated optimization tools and discovering more ways to speed and shrink your apps.

So what about after your code is finally as small, tight and fast as you can get it? How do you make sure it runs everywhere?

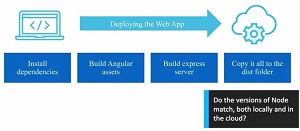

In deploying a Web app to the cloud, he said, think about what needs to be done to reproduce your same environment so it works in all scenarios:

- Install dependencies

- Build your assets for Angular, or whatever platform you're using (.NET, React and so on)

- Build an Express Web server

- Copy it all to the distribution folder, likely on another server

But things can break for multiple reasons, such as mismatched Node.js versions locally and in the cloud. And after you hand off your code and it's managed by someone else in the cloud, you can't "physically touch it."
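A version mismatch of that kind is easy to detect in code. The sketch below is only an illustration under stated assumptions: the helper names `majorVersion` and `sameMajorVersion` are hypothetical, the version strings are invented examples, and comparing only the major version is one possible policy among several.

```typescript
// Extract the major version from a Node.js version string
// such as "v8.9.1" or "8.9.1".
function majorVersion(version: string): number {
  const match = /^v?(\d+)\./.exec(version.trim());
  if (match === null) {
    throw new Error("Unrecognized version string: " + version);
  }
  return Number(match[1]);
}

// True when both environments run the same major version of Node.js.
function sameMajorVersion(local: string, remote: string): boolean {
  return majorVersion(local) === majorVersion(remote);
}

// Example version strings, made up for illustration.
console.log(sameMajorVersion("v8.9.1", "v8.4.0"));  // true
console.log(sameMajorVersion("v8.9.1", "v6.11.5")); // false
```

In a real Node.js process the local value could come from `process.version`. How the cloud-side version is obtained depends on the hosting setup, which is part of the problem containers are meant to remove.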

Deploying a Web App to the Azure Cloud

So the goal is to automate the build/deploy steps with consistency and confidence to make sure your app just works, which is where Docker containers come into play. Even when projects are bundled into containers, developers can do the same things they're accustomed to doing locally, such as debugging Node.js with Visual Studio Code, accessing the file system, checking the logs while the server is running and more, Papa said.

Docker containers use Dockerfiles, which are self-contained scripts that provide a recipe for all the things developers would do locally to create and run an app, such as get the correct version of Node, get npm, install scripts, build a project, put it in the correct folder and automatically adapt to different environments.

"I want to make sure that what runs locally runs the same as it does on someone else's machine in staging or production with different environment variables, so I have to build my application with that in mind."
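One common way to build with that in mind is to read every environment-specific setting from environment variables and fall back to local defaults. The following is a minimal sketch of that idea rather than code from the keynote: the variable names `PORT` and `DATABASE_URL`, the default values and the `loadConfig` helper are all assumptions made for illustration.

```typescript
// Settings that differ between a local machine, staging and production.
interface AppConfig {
  port: number;
  databaseUrl: string;
}

// A plain record of environment variables. In Node.js, process.env has this shape.
type Env = { [key: string]: string | undefined };

// Build the configuration from the environment, using local defaults
// when a variable is not set. PORT and DATABASE_URL are hypothetical names.
function loadConfig(env: Env): AppConfig {
  const port = Number(env["PORT"] ?? "3000");
  if (isNaN(port) || port <= 0 || port % 1 !== 0) {
    throw new Error("PORT must be a positive integer");
  }
  return {
    port: port,
    databaseUrl: env["DATABASE_URL"] ?? "mongodb://localhost:27017/app",
  };
}

// Locally nothing is set, so the defaults apply.
const localConfig = loadConfig({});
console.log(localConfig.port); // 3000

// In another environment the same code picks up different values.
const cloudConfig = loadConfig({
  PORT: "8080",
  DATABASE_URL: "mongodb://db.example.com:27017/app",
});
console.log(cloudConfig.port); // 8080
```

Because the function takes the environment as a parameter, the same build can be exercised with local, staging or production values without changing the code.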

Papa demonstrated "this cool Docker extension" that lets him explore and work with containers and images with various functionalities, such as examining logs, getting server/port information, seeing files contained in an image, doing multi-stage builds in both production and development environments and more. The tool was especially useful in working with databases, including MongoDB instances running locally and in the cloud, where Cosmos DB was also leveraged. (Editor's note: This paragraph previously included an incorrect URL for the Docker extension.)

Finally, Papa explained how one more issue was addressed: "How do you take these images that we're building and make sure anybody can build them?" That's where CI/CD comes into play. "The workflow we want here, CI/CD, is we want to take our code and we simply just want to push it to GitHub. I'll make changes, I want to push it to GitHub and then I want GitHub to take that code and let it know -- let the CI/CD servers I have know -- that the code has changed," whether using Jenkins or Visual Studio Team Services (VSTS) or some other CI/CD tool.

A build process then builds out a container with the same "Docker compose" command used in VS Code earlier. It also automates the steps of putting it in the container registry and deploying it to a Web app.

"What this does is, effectively everything it did manually, it's now going to do locally by pushing," Papa said. "We just write our code, push to GitHub and then we back away from the keyboard" so GitHub can ping a CI server (VSTS in this case) and perform any testing, push the container to Azure, launch it and so on. (Editor's note: This paragraph previously incorrectly stated that GitHub performed unit testing.)
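The flow described above, in which each stage runs only if the previous one succeeded, can be modeled in a few lines. This is a conceptual sketch only, not how VSTS or any other CI/CD server is actually configured: the `Step` type, the `runPipeline` function and the step names are hypothetical, and each step is a stand-in that simply reports success or failure.

```typescript
// One stage of a pipeline: a name plus a function that reports success or failure.
interface Step {
  name: string;
  run: () => boolean;
}

// Run the steps in order and stop at the first failure.
// Returns the names of the steps that completed successfully.
function runPipeline(steps: Step[]): string[] {
  const completed: string[] = [];
  for (const step of steps) {
    if (!step.run()) {
      break;
    }
    completed.push(step.name);
  }
  return completed;
}

// Stand-in steps mirroring the stages mentioned in the article.
const healthyRun = runPipeline([
  { name: "build container", run: () => true },
  { name: "run tests", run: () => true },
  { name: "push to registry", run: () => true },
  { name: "deploy to web app", run: () => true },
]);
console.log(healthyRun.length); // 4

// If testing fails, nothing is pushed or deployed.
const failedRun = runPipeline([
  { name: "build container", run: () => true },
  { name: "run tests", run: () => false },
  { name: "push to registry", run: () => true },
  { name: "deploy to web app", run: () => true },
]);
console.log(failedRun.join(", ")); // build container
```

The point of the model is the ordering guarantee: a failed test run keeps a broken container from ever reaching the registry or the Web app.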

To recap, the deployment of JavaScript to the cloud involved these steps:

- Build, run and debug locally with Docker

- Continuously build and deploy with VSTS

- Host in the cloud with Azure App Service on Linux, or Windows, whatever's in your container

JavaScript CLIs optimize the build, Docker makes it "run everywhere," a CI/CD server automates building and deployment, and VS Code tooling makes it all easier.

In summarizing his keynote, Papa said: "I'm a developer at heart, and I like writing code. That's what I want to do, so what I really want is I want to make sure that I can spend the most time doing the things I love, which is writing code, and to do that I have to minimize the things that I don't enjoy as much, which is deploying, quite frankly.

"I like to see my code run in production but I don't like watching it get up there and do all that. You probably don't either, right, like figuring out how to get there? Maybe you do, but what's nice about it is, why have all the ceremony of what we used to do in the past when now we can have these really cool tools that make it just work everywhere? We could use Docker to script out what's happening, give it a good recipe; we can then push it up into the cloud using these automated tools.

"We can use VS Code so we never have to leave our editor. As a developer, that makes me feel comfortable. It makes me feel safe, it makes me productive and efficient and fast at doing everything right inside the environment that I know I can use. Docker just makes it work everywhere, which makes everybody at your company and all your users happy."

The Live! 360 conference hosted several individual shows for developer, IT and administrator communities, including the Visual Studio Live! event. The next Visual Studio Live! show will be in Las Vegas March 11-16.

In the meantime, Papa's presentation and other videos from Orlando are available here.

About the Author

David Ramel is an editor and writer at Converge 360.