New 'Project Teleport' Speeds Azure Container Creation

- By Scott Bekker

- 11/21/2019

While containers can be generated almost instantly when a cloud application needs more capacity, the design of Docker containers can slow things down in serverless environments on platforms like Azure, where such dynamic flexibility is a prime benefit.

Microsoft is trying to address this disconnect with the new "Project Teleport."

This project -- a registry transport protocol that enables container layers to be teleported from the registry directly to a container host -- was detailed in a keynote presentation at the Live! 360 conference in Orlando by Steve Lasker, program manager for Azure Container Registries at Microsoft.

"We want to be able to teleport the containers to the machine instantaneously so you don't have to pay that pain," Lasker said of the time it takes to start a new container in Azure.

One part of the problem stems from the differences in expectations between virtual machines (VMs) and containers.

"VMs were built to run forever. They weren't worried about the amount of time it takes to start up when the technology was invented. In the .NET Framework, we optimized for being able to change code on the fly, dynamic compilation, because the VM had to stay running. When we think about containers, it's actually the opposite. Containers, we think about start-up time. I want it to be subsecond. If it fails, I don't care. Start another one," Lasker said.

A number of layers need to be loaded before the container can start. Organizations running containers on servers they own, using Kubernetes or other methods, can pre-cache some layers on a VM so additional containers can be started quickly. That doesn't work in a serverless environment.

"In the serverless world, you don't have the machine. Every time we start the environment, it is fresh. While we can pre-cache some layers, we can take Ubuntu and Windows and so forth and put them on there, the reality is since they're changing so often, there's no way we're going to keep up with every base layer that you guys need for every one of those environments. So we need to be able to optimize bringing that first container to the machine every time," Lasker explained.
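The caching difference Lasker describes can be illustrated with a minimal Python sketch. The function name `layers_to_pull` and the digest strings are hypothetical placeholders, not part of any Docker or Azure API. The sketch only shows that a long-lived host pulls just the layers it is missing, while a fresh serverless host has to pull every layer.

```python
def layers_to_pull(image_layers, host_cache):
    """Return the layers (in order) that are not already cached on the host."""
    return [digest for digest in image_layers if digest not in host_cache]


# Hypothetical layer digests for one image, base layer first.
image = ["sha256:base-os", "sha256:runtime", "sha256:app"]

warm_host = {"sha256:base-os", "sha256:runtime"}  # long-lived VM with pre-cached layers
fresh_host = set()  # serverless host: nothing is cached at start

print(layers_to_pull(image, warm_host))   # only the application layer is missing
print(layers_to_pull(image, fresh_host))  # every layer must be fetched
```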

The Project Teleport approach involves relying on the fact that Azure datacenters have blazing fast internal networks. Docker containers were designed with the public Internet in mind, and the awareness that each layer would need to be compressed to arrive in a reasonable amount of time. Then the system relies on local memory and CPU to decompress the layer. In Azure, the decompression on the CPU is one of the main limiting factors for starting up new containers.
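To get a feel for the decompression step, the following Python sketch times how long the standard-library `gzip` module takes to expand a compressed payload. The payload is a synthetic stand-in for a layer, not a real container layer, and the timing depends entirely on the machine running it, so the numbers it prints say nothing about Azure itself.

```python
import gzip
import time

# Synthetic, highly compressible stand-in for a layer tarball (about 13 MB).
layer = b"example file contents for a container layer\n" * 300_000

# What a registry would conventionally serve: the compressed form.
compressed = gzip.compress(layer)

# What the container host has to do before the layer is usable.
start = time.perf_counter()
expanded = gzip.decompress(compressed)
elapsed = time.perf_counter() - start

print(f"compressed: {len(compressed)} bytes, expanded: {len(expanded)} bytes")
print(f"decompression took {elapsed:.4f} s on this machine")
```

Compression shrinks the bytes sent over the wire, which matters on the public Internet. On a fast internal network the transfer saving matters less, and the time spent in `gzip.decompress` is work the host still has to do before the container can start.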

"This Teleport-enabled client is going to go, 'Hey, I'm in the same region but Teleport-enabled. Can you give me layer URLs?' What we'll do is we'll hand back expanded layers. So I don't have to download the layers and I don't have to expand them. I'm just going to mount them real-time with SMB," Lasker said. Orca is the codename for the Teleport-enabled client.
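The exchange Lasker describes could be sketched roughly as follows. This is not the Teleport protocol or the Orca client: the `Client` class, the `plan_layer_fetch` function, the action labels and the region names are all hypothetical, and the fallback to a conventional pull when the conditions are not met is an assumption rather than something stated in the talk.

```python
from dataclasses import dataclass


@dataclass
class Client:
    region: str
    teleport_enabled: bool


def plan_layer_fetch(client, registry_region, layers):
    """Decide, per layer, how a client would obtain it (illustrative only)."""
    same_region = client.region == registry_region
    if client.teleport_enabled and same_region:
        # The registry hands back already-expanded layers; the client mounts
        # them over the network instead of downloading and decompressing.
        return [("mount_expanded", layer) for layer in layers]
    # Assumed fallback: the conventional pull path.
    return [("download_and_decompress", layer) for layer in layers]


layers = ["base-os", "runtime", "app"]  # hypothetical layer names

teleport_client = Client(region="region-a", teleport_enabled=True)
plain_client = Client(region="region-a", teleport_enabled=False)

print(plan_layer_fetch(teleport_client, "region-a", layers))
print(plan_layer_fetch(teleport_client, "region-b", layers))
print(plan_layer_fetch(plain_client, "region-a", layers))
```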

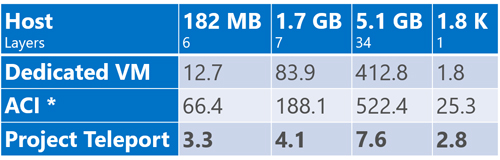

Initial performance metrics across different image sizes. (Source: Microsoft)

Lasker posted a chart showing internal test results for comparative startup times of various-sized containers using three methods -- dedicated VMs, Azure Container Instances (ACI) and Project Teleport. The Project Teleport approach was faster than a dedicated VM for all but the tiniest containers, and orders of magnitude faster than ACI in all cases.

In keeping with the Star Trek origin of the codename, Lasker made a Star Trek joke in urging conference attendees to sign up for the preview.

"We're taking redshirt volunteers that want to try Teleporters," Lasker said in a reference to the stock characters in the 1960s science fiction TV show who would often die shortly after being introduced in an episode. "Our goal is 90 percent performance in a pre-cached image, because obviously if it's there we can't beat that. I don't know if I want to beam humans at 90 percent -- that doesn't really scale very well, but for containers, that's where we're going."

Microsoft has posted a sign-up page for a limited preview.

About the Author

Scott Bekker is editor in chief of Redmond Channel Partner magazine.