How the Harvard Business Review Can Help Reduce Testing

Here’s a way of thinking about your test plans that will help you decide how you can reduce your testing costs.

Stick with me here: I'm going to start out very abstract, but by the end of this post, you'll have what I hope you'll consider a practical way of thinking about how to reduce testing costs -- including why you can skip running some tests altogether.

Testing (finding and removing defects) is not a value-added activity in the eyes of your user. At best, testing removes "dissatisfiers." You can prove this: If you go to your users and tell them that you've got a way for them to get their applications both without defects and at the same cost, but without you doing any testing, not one of them will say "But, hold it -- I'll miss the testing so much!!!"

Testing is necessary but not value-added. You should, then, be thinking about how to reduce your testing costs so you can spend time on what your users actually value (mostly, delivering functionality). Picking up some tools from the Harvard Business Review (HBR) will help you here.

Categorizing Your Tests

First: There are lots of ways to slice up your tests into different categories. You may think about your tests as the ones that test for functional/non-functional features, that test the "-ilities" (scalability, reliability), that test units vs. whole applications and so on. Here's an article that outlines all the tests I could think of and how they fit into the software development process.

But none of those schemes will help you think about reducing testing.

When you make up your tests, you're allocating time and money -- costs, in other words. Your tests must cost less than what you get from them: the measurable benefits. With testing, the benefit is reduced risk, and the measure of that risk is the cost of the defects not found by the testing process: the cost to recover from a failure caused by a defect, the cost of lost productivity among users due to defects, the cost of losing customers/business due to defects, the cost of eliminating the defect and so on. Even defects that are found by a test are expensive if they're found late in the process. Testing reduces risk by finding and eliminating defects as early as possible.

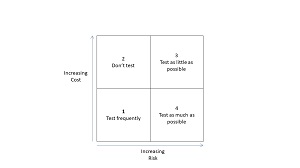

The most popular tool for any HBR article is the four-square model. In that spirit, here's a model that you can slot all your tests into, based on cost and risk with advice on what to do with the tests in each category:

[Click on image for larger view.] You Can Put Your Tests in One of Four Categories

[Click on image for larger view.] You Can Put Your Tests in One of Four Categories

You'll notice that there is no place for a low-cost, high-risk test. A low-cost test can be run frequently, which automatically reduces the risk of the test by finding defects earlier. Defects that are discovered earlier provide better feedback to the developer, preventing defects from propagating. Defects that are found earlier are cheaper to fix than defects discovered later and "baked into" the application. Since we measure risk by the "cost of the defects," a low-cost test always reduces risk.
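To see why a cheap test ends up with low risk, here's a rough sketch in Python. It rests on two simplifying assumptions that are mine, not measurements: a defect is introduced at a random point between test runs (so the average delay before it's caught is half the interval between runs), and the cost of fixing a defect grows linearly with that delay. All names and numbers are hypothetical.

```python
def expected_fix_cost(interval_days: float, base_fix_cost: float,
                      growth_per_day: float) -> float:
    """Average cost to fix one defect when the test runs every interval_days."""
    average_delay = interval_days / 2  # assumed: defects appear at random times
    return base_fix_cost + growth_per_day * average_delay


def total_cost(period_days: float, interval_days: float, cost_per_run: float,
               defects_in_period: int, base_fix_cost: float,
               growth_per_day: float) -> float:
    """Cost of running the test plus cost of fixing the defects it catches."""
    runs = period_days / interval_days
    fixing = defects_in_period * expected_fix_cost(
        interval_days, base_fix_cost, growth_per_day)
    return runs * cost_per_run + fixing


# Made-up scenario: 30 days, 3 defects, fix cost starts at 100 and grows 50/day.
scenario = dict(period_days=30, defects_in_period=3,
                base_fix_cost=100, growth_per_day=50)

cheap_daily = total_cost(interval_days=1, cost_per_run=1, **scenario)
cheap_monthly = total_cost(interval_days=30, cost_per_run=1, **scenario)
costly_daily = total_cost(interval_days=1, cost_per_run=500, **scenario)
costly_monthly = total_cost(interval_days=30, cost_per_run=500, **scenario)

print(cheap_daily, cheap_monthly)
print(costly_daily, costly_monthly)
```

Under these assumptions, the cheap test is least expensive overall when it runs every day, while the costly test is least expensive when it runs once a month. That's the mechanism that pushes expensive tests toward infrequent runs and, as a result, toward higher risk.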

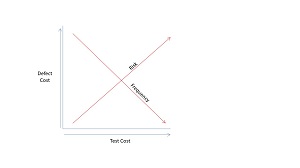

That relationship is shown in this diagram:

[Click on image for larger view.] As the Cost of Running a Test Increases, So Does its Risk

[Click on image for larger view.] As the Cost of Running a Test Increases, So Does its Risk

Alternatives to Testing

Second: You can always test less ... though, the less frequently you test, the greater your risk. But, in the real world of testing, you're always making trade-offs, just as you do every day when you make any buying decision.

As Michael Porter pointed out in an HBR article discussing why different industries have different levels of competition, any producer must deal with five forces, one of which is the available substitutes ... and there are always available substitutes. If the costs of owning a car and driving to work get too high, over time, you will substitute carpooling, public transit, cycling or moving closer to work (and there are probably more). There are costs associated with those substitutes, but if those costs are lower than the cost of the product, you will take advantage of the substitute.

The substitutes for testing are: not testing (or not doing it as often), changing development/implementation practices to reduce defects/risks, or not making a change to an application. The costs vary depending on the substitute: not testing increases risk; changing development practices brings the costs of training, replacing staff, lost productivity and new toolsets; and not making a change means living with a substandard application.

You see examples of organizations picking the "not testing" substitute all the time. Minor bugs in applications that are fixed in later versions are examples of an organization deciding to live with the risk in that area. In more serious scenarios, the risk turns out to be paying more to fix a defect that would have been cheaper to address if it had been discovered earlier (been there, got the t-shirt).

You've seen the result of picking the other alternatives, also. Many of the changes you've seen in your development tools are there to reduce the risk of creating applications with defects. Implementing applications by moving them to the cloud can eliminate scalability problems. One of the benefits of adopting a microservice architecture is to isolate defects and reduce the costs of fixing them.

You've even seen the "no change" substitute. I bet your organization has at least one application that sees no changes because the risk of any change is too high.

Leveraging the Four Categories

Here's the practical part I promised you: You're already making decisions about your testing process based on this four-square model, but you can get better at it. You already look at changing development practices to reduce risk (i.e. moving tests towards the bottom of the model) and at reducing testing costs so you can run tests more often (i.e. move tests to the left).

When it comes to reducing test costs, like most ways for reducing cost in the modern world, you look at automation. You're probably already automating your unit tests or considering it. If you've automated your unit tests, you're now looking at automating integration tests, end-to-end testing and user acceptance tests.

Getting all your tests into category 1 is, obviously, the ideal. Unit tests fall into this category. Assuming you've successfully automated your unit testing, you've made running these tests so cheap that you're probably running them far more frequently than you actually need to.

For example, after I add functionality to an application and get the corresponding unit test to run, I re-run all my unit tests even though I know that most of those tests are unaffected by the change. While scheduled test runs may try to select only the parts of an application affected by a change, those selection processes always err on the side of caution and run more tests than are needed.
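Here's a minimal sketch in Python of what "erring on the side of caution" can look like in a test selection process. It isn't how any particular tool does it: the `select_tests` function, the coverage map and the file and test names are all hypothetical, and it assumes you have some record of which source files each test exercises.

```python
def select_tests(changed_files, coverage_map):
    """Pick the tests to run for a change.

    coverage_map maps a test name to the set of source files it exercises
    (a hypothetical record; building it is the hard part in practice).
    """
    changed = set(changed_files)
    known_files = set().union(*coverage_map.values())

    # If a changed file isn't in the map, we can't tell what it affects,
    # so err on the side of caution and run everything.
    if not changed <= known_files:
        return sorted(coverage_map)

    # Otherwise run every test that touches at least one changed file.
    return sorted(test for test, files in coverage_map.items() if files & changed)


coverage_map = {
    "test_cart": {"cart.py", "pricing.py"},
    "test_pricing": {"pricing.py"},
    "test_login": {"auth.py"},
}

print(select_tests(["pricing.py"], coverage_map))
print(select_tests(["new_module.py"], coverage_map))
```

The first call selects only the two tests that touch `pricing.py`; the second falls back to running all three because the changed file is unknown. Even the targeted run may include tests that the change didn't really affect, which is acceptable as long as the tests are cheap.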

You'd like category 2 to be empty, but where the risk is sufficiently low, skipping the tests in this area is an acceptable substitute. You have, for example, applications that you don't do scalability tests on because the risk of scaling problems is too low and the cost of setting up a good test too high.

Still, you'd be happier if those tests were in category 1, so you consider ways of reducing the costs of these tests through automation (e.g. predefined, easy-to-load test environments). If that's not possible, you consider how you can further reduce risk (e.g. running the application in the cloud).

Reducing Risk and Cost in Categories 3 and 4

Category 3 is the very scary category. When tests are run infrequently, they are typically run long after the problems they uncover are created. This both reduces the value of the test's feedback to the developer and increases the cost of fixing the problem. Category 4 is less scary than category 3 but only because the tests are run sooner after the defect is created, improving feedback to the developer and lowering the costs of fixing the defect.

Moving tests out of category 3 or category 4 leverages similar strategies. The most obvious approach is to reduce the costs of these tests so they can be run more frequently. Category 4 tests could, then, fall into category 1. Since tests that run more frequently automatically reduce risk, increasing the frequency of the category 3 tests reduces their risk and moves them into category 4 (and, perhaps, on to category 1).

An alternative is to reduce risk for the tests in categories 3 and 4 by extending category 1 tests to discover more of the defects that are currently caught by tests in categories 3 and 4 (without increasing the costs of the category 1 tests). That may reduce the cost of the category 3 and 4 tests, and it also reduces the risk associated with those tests. If the risk of the category 3 and 4 tests is reduced, you may be able to move them into category 2, where you stop running the tests altogether.

Failing that, it may be possible to segment category 3 and 4 tests to create new tests that are cheap enough to fall into category 1.

Finally, you can change development or implementation practices. You're looking for two payoffs here: to sufficiently reduce risk that more tests move into category 2 or to reduce the costs of tests so they can be run more frequently and move into category 1.

Visual Studio gives you your choice of testing frameworks to start automating your tests, lowering the costs of your testing. With these tools in hand, what makes the most sense to you, for your application and your team, to reduce the cost of testing?

About the Author

Peter Vogel is a system architect and principal in PH&V Information Services. PH&V provides full-stack consulting from UX design through object modeling to database design. Peter tweets about his VSM columns with the hashtag #vogelarticles. His blog posts on user experience design can be found at http://blog.learningtree.com/tag/ui/.