Microsoft Researchers Tackle Low-Code LLMs

Microsoft researchers published a paper on low-code large language models (LLMs) that could be used for machine learning projects such as ChatGPT, the sentient-sounding chatbot from OpenAI.

Recent advancements in generative AI have proven a natural fit for the low-code/no-code software development approach. Low-code development typically involves drag-and-drop GUI composition, wizard-driven workflows and other techniques that replace the traditional type-all-your-code approach.

As just one example of that natural fit, Microsoft has recently infused AI tech throughout its low-code Power Platform, including the Power Apps software development component (see the Visual Studio Magazine article, "AI Is Taking Over the 'Low-Code/No-Code' Dev Space, Including Microsoft Power Apps").

Now, low-code LLMs are in the works, as explained in the paper "Low-code LLM: Visual Programming over LLMs," authored by a dozen Microsoft researchers.

Submitted last week, the paper's abstract reads in part:

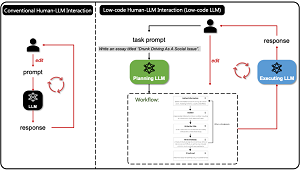

Effectively utilizing LLMs for complex tasks is challenging, often involving a time-consuming and uncontrollable prompt engineering process. This paper introduces a novel human-LLM interaction framework, Low-code LLM. It incorporates six types of simple low-code visual programming interactions, all supported by clicking, dragging, or text editing, to achieve more controllable and stable responses. Through visual interaction with a graphical user interface, users can incorporate their ideas into the workflow without writing trivial prompts.

The project, called TaskMatrix.AI, integrates two LLMs into one framework. A Planning LLM plans a workflow for executing a complex task, while an Executing LLM generates responses by following that workflow.

System Overview (source: Microsoft).

So, as an example of a low-code technique, the separate workflow steps, which appear as generated text in a string of boxes in the design stage, can be edited and graphically reordered by dragging and dropping the boxes.

In a scenario where an LLM is prompted to write an essay, for example, the generated workflow flowchart could include boxes to research, organize, write a title, write a body and so on. Individual steps, or boxes, can also be deleted or added. Developers can also add or remove "jump logic," which determines when one step jumps to another.
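As a rough illustration of the editing operations described above, the workflow can be thought of as an ordered list of steps, each optionally carrying jump logic. The following is a minimal sketch, not Microsoft's implementation: the `Step` and `Workflow` classes, their method names and the essay steps are all hypothetical, chosen only to mirror the edit, reorder, add, delete and jump operations the article mentions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    """One box in the workflow flowchart (hypothetical structure)."""
    name: str
    description: str
    # Jump logic: maps a plain-text condition to the name of the target step.
    jumps: dict = field(default_factory=dict)


@dataclass
class Workflow:
    steps: list = field(default_factory=list)

    def _index(self, name: str) -> int:
        for i, step in enumerate(self.steps):
            if step.name == name:
                return i
        raise KeyError(f"no step named {name!r}")

    def add_step(self, step: Step, position: Optional[int] = None) -> None:
        if position is None:
            self.steps.append(step)
        else:
            self.steps.insert(position, step)

    def delete_step(self, name: str) -> None:
        del self.steps[self._index(name)]
        # Drop any jump that pointed at the deleted step.
        for step in self.steps:
            step.jumps = {c: t for c, t in step.jumps.items() if t != name}

    def move_step(self, name: str, new_position: int) -> None:
        # Equivalent of dragging a box to a new place in the sequence.
        step = self.steps.pop(self._index(name))
        self.steps.insert(new_position, step)

    def edit_step(self, name: str, description: str) -> None:
        self.steps[self._index(name)].description = description

    def add_jump(self, source: str, condition: str, target: str) -> None:
        self._index(target)  # raises KeyError if the target does not exist
        self.steps[self._index(source)].jumps[condition] = target

    def remove_jump(self, source: str, condition: str) -> None:
        self.steps[self._index(source)].jumps.pop(condition, None)

    def names(self) -> list:
        return [s.name for s in self.steps]


# Usage: reshape a generated essay workflow.
flow = Workflow([
    Step("research", "Gather sources on the topic"),
    Step("organize", "Group the findings into an outline"),
    Step("title", "Write a title"),
    Step("body", "Write the body"),
])
flow.move_step("title", 3)  # write the title after the body
flow.add_step(Step("proofread", "Check grammar and facts"))
flow.add_jump("proofread", "facts are missing", "research")
flow.delete_step("organize")
print(flow.names())  # ['research', 'body', 'title', 'proofread']
```

Keeping the jump logic as plain-text conditions reflects the idea that an LLM, not a conventional interpreter, decides when a condition is met; a real system may represent it differently.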

Example Task Flowchart (source: Microsoft).

That eases the burden of chaining together the multiple typed prompts often needed to get an LLM to respond appropriately to a complex task. Crafting such prompts is called "prompt engineering," an emerging discipline that can pay north of $300,000 per year.

Low-code LLMs also inject more human interactivity into the machine learning process instead of relying solely on tech.

"Through this approach you can easily control large language models to work according to your ideas rather than through complex and difficult-to-control prompt design forms," Microsoft explained in a video published a few days ago. "We believe that no matter how powerful large language models are, humans always need to be involved in the creative process for complex tasks."

The project's GitHub repo boils down the human/machine system components and workflow to four parts:

- A Planning LLM that generates a highly structured workflow for complex tasks.

- Users editing the workflow with predefined low-code operations, which are all supported by clicking, dragging, or text editing.

- An Executing LLM that generates responses with the reviewed workflow.

- Users continuing to refine the workflow until satisfactory results are obtained.
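The four parts listed above can be sketched as a simple plan, edit, execute and refine loop. This is a minimal sketch under stated assumptions: each "LLM" is stood in for by an ordinary Python function from prompt text to response text, and the function names, prompt wording and `max_rounds` limit are all hypothetical rather than taken from the project's code.

```python
from typing import Callable, List

# Assumption: an "LLM" here is any function from prompt text to response text.
LLM = Callable[[str], str]


def plan(planning_llm: LLM, task: str) -> List[str]:
    """Ask the Planning LLM for a workflow, one step per line."""
    raw = planning_llm(f"Break this task into steps, one per line:\n{task}")
    return [line.strip() for line in raw.splitlines() if line.strip()]


def execute(executing_llm: LLM, task: str, steps: List[str]) -> str:
    """Ask the Executing LLM to respond by following the reviewed workflow."""
    workflow = "\n".join(f"{i}. {s}" for i, s in enumerate(steps, start=1))
    return executing_llm(f"Task: {task}\nFollow this workflow:\n{workflow}")


def low_code_loop(
    task: str,
    planning_llm: LLM,
    executing_llm: LLM,
    edit: Callable[[List[str]], List[str]],
    is_satisfied: Callable[[str], bool],
    max_rounds: int = 3,
) -> str:
    """Plan once, then let the user edit and re-run until satisfied."""
    steps = plan(planning_llm, task)
    response = ""
    for _ in range(max_rounds):
        steps = edit(steps)  # stands in for clicking, dragging or text editing
        response = execute(executing_llm, task, steps)
        if is_satisfied(response):
            break
    return response


# Stand-ins for real models and a real user, for demonstration only.
def fake_planner(prompt: str) -> str:
    return "Research the topic\nWrite a title\nWrite the body"


def fake_executor(prompt: str) -> str:
    return "Draft produced from:\n" + prompt


def drop_title(steps: List[str]) -> List[str]:
    return [s for s in steps if s != "Write a title"]


result = low_code_loop(
    "Write an essay on low-code tools",
    fake_planner,
    fake_executor,
    edit=drop_title,
    is_satisfied=lambda r: "Write the body" in r,
)
print(result)
```

In a real deployment the `edit` callback would be driven by the graphical interface and the two stand-in functions would call actual models; the loop structure is the only part this sketch is meant to show.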

Advantages of the system, according to Microsoft, include:

- Controllable Generation. Complicated tasks are decomposed into structured conducting plans and presented to users as workflows. Users can control the LLMs' execution through low-code operations to achieve more controllable responses. Responses generated by following the customized workflow will be more aligned with the user's requirements.

- Friendly Interaction. The intuitive workflow enables users to swiftly comprehend the LLMs' execution logic, and the low-code operation through a graphical user interface empowers users to conveniently modify the workflow in a user-friendly manner. In this way, time-consuming prompt engineering is mitigated, allowing users to efficiently implement their ideas into detailed instructions to achieve high-quality results.

- Wide Applicability. The proposed framework can be applied to a wide range of complex tasks across various domains, especially in situations where human intelligence or preference is indispensable.

The paper said the new system will soon be available at LowCodeLLM.

Incidentally, Microsoft noted that writing the paper was itself aided by GPT-4, the latest and most advanced LLM from Microsoft partner OpenAI.

"Part of this paper has been collaboratively crafted through interactions with the proposed Low-code LLM," the GitHub repo states. "The process began with GPT-4 outlining the framework, followed by the authors supplementing it with innovative ideas and refining the structure of the workflow. Ultimately, GPT-4 took charge of generating cohesive and compelling text."

But it still needed human help.

About the Author

David Ramel is an editor and writer at Converge 360.