
Semantic Kernel: Microsoft Answers Your Questions, Updates Docs for AI Integration SDK

Large language models (LLMs) for generative AI might be the sexy new "it" thing for machine learning right now, but all that advanced magic wouldn't be possible without under-the-hood integration and orchestration services provided by tooling like Microsoft's Semantic Kernel.

So what is the open source Semantic Kernel? Microsoft handily provides the answer in its "What is Semantic Kernel?" documentation: "Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds."

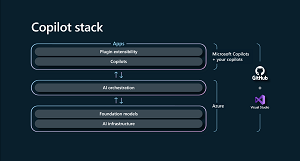

Semantic Kernel provides that integration for developers to use in their apps by acting as an AI orchestration layer in Microsoft's stack of AI models and "copilot" assistants, providing interaction services for those constructs to work with underlying foundation models and AI infrastructure.

"Semantic Kernel supports and encapsulates several design patterns from the latest in AI research, such that developers can infuse their applications with plugins like prompt chaining, recursive reasoning, summarization, zero/few-shot learning, contextual memory, long-term memory, embeddings, semantic indexing, planning, retrieval-augmented generation and accessing external knowledge stores as well as your own data," Microsoft said.

Semantic Kernel as the Orchestration Layer of the Copilot Stack (source: Microsoft).

Because Semantic Kernel is a free, key tool for creating advanced AI-infused apps, Microsoft recently held a Q&A session to answer developer questions about the offering, while also updating its documentation. Here's a look.

Semantic Kernel Q&A

Microsoft unveiled its latest AI solutions at the recent Microsoft Build 2023 developer conference, where it also held a Q&A session for Semantic Kernel, titled "Building an AI Copilot with Semantic Kernel in the GPT-4 era, Q&A."

While the presentation isn't available for on-demand viewing, Microsoft helpfully provided this summary in a post last week:

Creating New Documents Based on Historical Examples

Question: "I have a use case to fill in sections of new drafts of documents based on historical documents for our business. Can I use AI for this?"

Answer: This is a common use case that we hear from many customers.

To get started with this you will need to:

- Select a vector memory storage solution -- this allows the AI to find your documents and leverage those

- If they are large documents, you will likely need to select a chunking strategy -- this is how the documents will be broken apart before they are sent to the vector memory storage solution

- Think about what UI you want to use for your end users

Microsoft's answer goes on to list several supported vector memory providers and information about the Copilot chat starter app, which can be used to see the solution in practice.
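
To make the chunking step a bit more concrete, here is a minimal Python sketch of one possible strategy: fixed-size word windows with a small overlap, so that text cut at a boundary still appears whole in one chunk. It does not use the Semantic Kernel API, and the function name `chunk_text` and its default sizes are assumptions chosen for illustration, not anything Microsoft recommends.

```python
def chunk_text(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks that overlap by `overlap` words.

    Illustrative only: real systems often chunk by tokens, sentences,
    or document sections instead of raw word counts.
    """
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("need max_words > 0 and 0 <= overlap < max_words")

    words = text.split()
    step = max_words - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    document = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_text(document, max_words=4, overlap=1):
        print(chunk)
```

Each chunk would then be embedded and written to whichever vector memory store the app uses. The overlap trades some extra storage for better recall near chunk boundaries.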

Allowing Employees to Talk to Their Enterprise Data

Question: "How do I securely allow my employees to talk to their data which is in SQL and do it in a trusted manner so the users can't do prompt injection?"

Answer: This is the other top use case that we hear from many customers.

You will want to start by having your users auth into your app, so you know who they are. Use that authorization to pass over to your SQL database or other enterprise database. This will ensure the user has access to only the data that you gave them in the past, so you do not get data leakage.

Using views and stored procedures is a great way to increase your security posture with users. Rather than having the LLMs create SQL statements to execute you can keep them on track using these methods.
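
As a rough illustration of that advice, the Python sketch below uses the standard-library `sqlite3` module. The model is only allowed to pick a query name and supply parameter values, while the SQL text itself is fixed and reads from a view that hides sensitive columns. SQLite has no stored procedures, so the fixed parameterized statement stands in for one here. The table, the view, and the names `ALLOWED_QUERIES` and `run_allowed_query` are all hypothetical.

```python
import sqlite3

# Hypothetical allow-list: the only SQL the app will ever run on a user's behalf.
ALLOWED_QUERIES = {
    "orders_for_customer": (
        "SELECT id, total FROM customer_orders "
        "WHERE owner = ? AND customer_id = ? ORDER BY id"
    ),
}


def setup_demo_db() -> sqlite3.Connection:
    """Build an in-memory demo database with a view that hides internal columns."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (
            id INTEGER PRIMARY KEY, customer_id INTEGER,
            owner TEXT, total REAL, internal_note TEXT
        );
        INSERT INTO orders VALUES
            (1, 10, 'alice', 25.0, 'secret'),
            (2, 10, 'bob',   40.0, 'secret'),
            (3, 11, 'alice',  5.0, 'secret');
        CREATE VIEW customer_orders AS
            SELECT id, customer_id, owner, total FROM orders;
        """
    )
    return conn


def run_allowed_query(conn, authenticated_user, query_name, params):
    """Run a pre-approved query; the user identity comes from app auth, not the model."""
    sql = ALLOWED_QUERIES.get(query_name)
    if sql is None:
        raise PermissionError(f"query {query_name!r} is not on the allow-list")
    # Values are bound as parameters, so they are never parsed as SQL.
    return conn.execute(sql, (authenticated_user, *params)).fetchall()


if __name__ == "__main__":
    db = setup_demo_db()
    print(run_allowed_query(db, "alice", "orders_for_customer", (10,)))
```

Because the model's output is treated as data rather than as SQL, an injected string such as `10 OR 1=1` is just a parameter value that matches no rows.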

Adding Consistency with AI LLMs

Question: "Are there any best practices for creating these new AI solutions so they are consistent?"

Answer: One way to add consistency for your end users is to create static plans. You can create plans in our VS Code Extension and then use those static plans to run the same steps each time users ask for the same thing.
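
The idea behind a static plan can be sketched in a few lines of Python: the list of steps is stored once and replayed for every matching request, rather than regenerated by the model each time. This is not the plan format used by Semantic Kernel or its VS Code extension. The JSON layout, the `STEPS` registry, and `run_plan` are assumptions made up for this example.

```python
import json
from typing import Callable

# Hypothetical step registry; in a real app these would wrap AI or native functions.
STEPS: dict[str, Callable[[str], str]] = {
    "strip": str.strip,
    "lowercase": str.lower,
    "first_sentence": lambda text: text.split(".")[0] + ".",
}

# A plan saved ahead of time (for example, checked into source control).
STATIC_PLAN = json.dumps(
    {"goal": "normalize a support request",
     "steps": ["strip", "lowercase", "first_sentence"]}
)


def run_plan(plan_json: str, user_input: str) -> str:
    """Replay the stored steps in order, feeding each output into the next step."""
    plan = json.loads(plan_json)
    result = user_input
    for name in plan["steps"]:
        result = STEPS[name](result)
    return result


if __name__ == "__main__":
    print(run_plan(STATIC_PLAN, "  Reset My Password. Thanks!  "))
```

Since the step order is fixed in the stored plan, two users who ask for the same thing go through the same sequence, which is where the consistency comes from.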

Multi-Tenant Solutions with LLM

Question: "How should I think about multi-tenant solutions using AI?"

Answer: With multi-tenant solutions, the same rules apply as for keeping SQL secure. You will want to segment out the users by tenant by having them auth into your solution. LLMs don't hold onto or cache any information on their own. Any data cross-talk that happens in a multi-tenant AI solution will be based on permissions and/or data systems not being configured correctly.
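
One way to picture that segmentation is a store that requires a tenant ID on every read and write, so only the caller's own records can ever reach the prompt. The Python sketch below is a toy in-memory version with keyword matching in place of vector search. The class `TenantScopedStore` and the function `build_prompt` are hypothetical names, and the tenant ID is assumed to come from the app's authentication layer.

```python
from dataclasses import dataclass, field


@dataclass
class TenantScopedStore:
    """Toy document store in which every operation is keyed by tenant."""

    _records: dict[str, list[str]] = field(default_factory=dict)

    def add(self, tenant_id: str, text: str) -> None:
        self._records.setdefault(tenant_id, []).append(text)

    def search(self, tenant_id: str, keyword: str) -> list[str]:
        # Only this tenant's records are ever considered.
        return [
            text for text in self._records.get(tenant_id, [])
            if keyword.lower() in text.lower()
        ]


def build_prompt(store: TenantScopedStore, tenant_id: str,
                 question: str, keyword: str) -> str:
    """Assemble the text sent to the model from the caller's own tenant data."""
    context = store.search(tenant_id, keyword)
    return "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"


if __name__ == "__main__":
    store = TenantScopedStore()
    store.add("contoso", "Contoso pricing is 10 per seat.")
    store.add("fabrikam", "Fabrikam pricing is 20 per seat.")
    print(build_prompt(store, "contoso", "What is our pricing?", "pricing"))
```

The model only sees what the prompt contains, so keeping the retrieval step tenant-scoped is what prevents cross-talk in this sketch.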

Multi-User Chat Solutions

Question: "How can I allow users to invite other employees into a chat and how would data sharing work in that use case?"

Answer: Our Copilot chat starter app is a good reference app to see how this can work. It allows you to invite others into a chat with a user and the LLM bot. Just like a Microsoft Word doc, when you share the document with another user, they can see what is in the document. The chat would work the same way.

Updated Semantic Kernel Documentation

Microsoft also recently updated its documentation for the SDK. "We have a lot of new content for you to explore that we believe will make it easier for you to get started with Semantic Kernel, whether you use C# or Python," the company said in a June 21 post. "Our updated content also does a better job describing how Semantic Kernel fits in with the rest of the Microsoft ecosystem and other applications like ChatGPT." Here are the highlights:

Get to know plugins: "We've added a new section to our docs that explains how plugins work, how you can use them with Semantic Kernel, and our plans to converge with the ChatGPT plugin model."

Python samples everywhere: "We've added Python samples to nearly every tutorial and sample in the docs," said Microsoft about its effort to make using the Python SDK easier as the team brings that section up to par with the .NET flavor of the SDK.

New tutorials and samples: "As part of this update, we also wanted to provide tutorials that were more relevant to what the community was building, so in the Orchestrating AI plugins section of the docs, we walk you through how to build an AI app with plugins from beginning to end.

- Start by learning about semantic functions to derive intent.

- Give your AI the power of computation with native functions.

- Chain functions together to get the best of AI and native code.

- And finally use planner to automatically generate a plan with AI."
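
The first three steps in that list can be illustrated without the SDK. In the Python sketch below, a "semantic function" is modeled as a prompt template plus a model call, a "native function" is ordinary code, and the two are chained so the detected intent decides which native function runs. The model is replaced by a keyword-matching stand-in named `fake_llm`. Every name here is hypothetical and none of this is the Semantic Kernel API.

```python
import math
import re
from typing import Callable

INTENT_TEMPLATE = "Classify the intent of this request in one word.\nRequest: {{$input}}"


def make_semantic_function(template: str, llm: Callable[[str], str]) -> Callable[[str], str]:
    """Wrap a prompt template and a model call into a plain callable."""
    def run(user_input: str) -> str:
        return llm(template.replace("{{$input}}", user_input)).strip()
    return run


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: a keyword rule, not actual AI.
    return "SquareRoot" if "square root" in prompt.lower() else "Unknown"


def square_root(text: str) -> str:
    """Native function: exact computation that a language model may get wrong."""
    match = re.search(r"\d+(\.\d+)?", text)
    if match is None:
        return "no number found"
    return str(math.sqrt(float(match.group())))


def answer(request: str) -> str:
    """Chain: the semantic function derives intent, then a native function computes."""
    get_intent = make_semantic_function(INTENT_TEMPLATE, fake_llm)
    if get_intent(request) == "SquareRoot":
        return square_root(request)
    return "Sorry, I can't help with that yet."


if __name__ == "__main__":
    print(answer("What is the square root of 144?"))
```

A planner, the last step in Microsoft's list, would replace the hard-coded `if` in `answer` by asking the model to choose and order the functions itself.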

Create issues on the docs: "Finally, we've moved our entire doc site to a public GitHub repo," the team said. "This means that you can now create issues on the docs themselves. If you see something that's confusing or incorrect, please let us know by creating an issue on the docs repo. We'll also accept PRs if you want to make the change yourself."

And More

Microsoft also noted:

- It created a kit of hackathon materials that developers can use to host their own hackathon, which the company said is a good way to learn about Semantic Kernel. The kit includes a sample agenda, prerequisites, and a presentation.

- It created a page that highlights some of the best in-depth tutorials the team has seen, which help developers walk through how to build a full app from beginning to end.

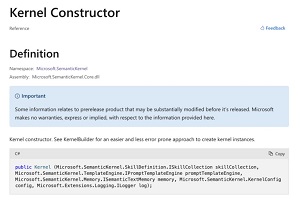

- It's working on creating reference docs for the .NET and Python SDKs so developers can easily see what each class and method does on the Learn site. For example, here's a preview of a reference doc item:

Kernel Constructor Reference Doc Preview (source: Microsoft).

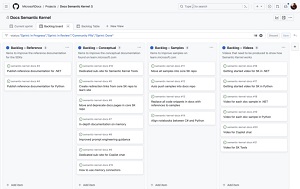

- It's working on creating a project in GitHub that will serve as a publicly available backlog that lets developers see what the team is actively working on in the docs. Here's a preview of what the backlog will look like:

Publicly Available Backlog Preview (source: Microsoft).

"And last, but not least, we're working on creating more great content for the docs!" Microsoft said. "We want to create additional explainers with code samples on memory, authentication, Copilot chat, and more. But most importantly, we want to hear from you. What do you want to see next? Let us know by creating an issue or letting us know in our Discord server."

About the Author

David Ramel is an editor and writer at Converge 360.