News

Microsoft's Semantic Kernel AI SDK Adds Java, Integrates with Azure Cognitive Search

Microsoft has been busy updating its Semantic Kernel open source SDK for creating AI-infused applications, recently adding Java support and integration with Azure Cognitive Search.

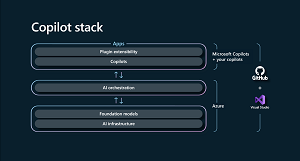

The SDK helps developers use programming languages like C# and Python to leverage AI services. It serves as the foundation of the company's "Copilot" stack of AI assistants and also helps developers build their own Copilot experiences on top of AI plugins. It does so with out-of-the-box functionality for prompting large language models (LLMs), including templating, chaining and planning capabilities.

Semantic Kernel as the Orchestration Layer of the Copilot Stack (source: Microsoft).
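To make the templating and chaining ideas concrete, here is a minimal sketch in plain Java 8. It does not use the Semantic Kernel API; the class `PromptSketch`, its methods and the stand-in `fakeModel` function are all hypothetical names invented for illustration, and the `{{$input}}` placeholder style is only loosely modeled on prompt templates. A real application would call an LLM where the stub sits.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

public class PromptSketch {

    // Templating: replace each {{$name}} placeholder with its value from the map.
    public static String render(String template, Map<String, String> variables) {
        String result = template;
        for (Map.Entry<String, String> entry : variables.entrySet()) {
            result = result.replace("{{$" + entry.getKey() + "}}", entry.getValue());
        }
        return result;
    }

    // Wrap a template and a model call into one step that maps input text to output text.
    public static Function<String, String> promptStep(String template,
                                                      Function<String, String> model) {
        return input -> {
            Map<String, String> variables = new HashMap<>();
            variables.put("input", input);
            return model.apply(render(template, variables));
        };
    }

    // Chaining: the output of each step becomes the input of the next.
    public static String chain(String input, List<Function<String, String>> steps) {
        String current = input;
        for (Function<String, String> step : steps) {
            current = step.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        // Hypothetical stand-in for an LLM call: it just echoes the prompt in brackets.
        Function<String, String> fakeModel = prompt -> "[" + prompt + "]";

        List<Function<String, String>> steps = Arrays.asList(
                promptStep("Summarize: {{$input}}", fakeModel),
                promptStep("Translate to French: {{$input}}", fakeModel));

        System.out.println(chain("Semantic Kernel adds Java support", steps));
    }
}
```

Because the model is passed in as a `Function<String, String>`, the same chain can be exercised with a stub during testing and with a real service call later.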

After a series of updates, Microsoft this week introduced Semantic Kernel for Java, housed in a GitHub repo.

"Semantic Kernel for Java is an open source library that empowers developers to harness the power of AI while coding in Java," the July 19 announcement says. "It is compatible with Java 8 and above, ensuring flexibility and accessibility to a wide range of Java developers. By integrating AI services into your Java applications, you can unlock the full potential of artificial intelligence and large language models without sacrificing the familiar Java development environment."

The post features a sample, and plenty more are available for exploration at github.com/microsoft/semantic-kernel/tree/experimental-java/java/samples/sample-code.

On the same day -- speaking to the pace of Semantic Kernel development -- Microsoft announced the integration with Azure Cognitive Search, specifically that service's Vector Search, described by the company as a method for searching for information within data types including image, audio, text, video and more. A public preview of Vector Search was just announced the day before.

Benefits of the Cognitive Search integration, Microsoft said, include:

- Access to Vector Search: Utilize the capabilities of Azure Cognitive Services Vector Search to index datastores including Cosmos DB, Azure SQL Server and blob storage to perform vector searches across various data types including image, audio, text and video. Vector search compares the vector representation of the query and content to find relevant results for users with high efficiency and accuracy.

- Managed Service on Azure: Say goodbye to spinning up VMs and storing your data outside Azure. With Azure Cognitive Search, everything is managed within the platform on multiple Azure regions with high availability and low latency. This simplifies your search implementation and reduces overall complexity.

- Bring Your Own Embedding Vectors: Developers can now integrate their own embedding vectors, e.g. generated by OpenAI, for an even more precise similarity search using cosine similarity. This allows for more accurate and efficient search experiences within the Semantic Kernel framework, and it's particularly useful for grounding AI responses with relevant information, enhancing the overall value of AI-generated content.

- Integration with Azure Active Directory: Enhance the security of your authentication process by integrating with Azure Active Directory, ensuring a secure and seamless user experience.

- Simplified Memory Management: With the integration of Azure Cognitive Search into Semantic Kernel, developers can easily add memory to prompts and plugins, following the existing Semantic Memory architecture. This simplifies the process of managing memory in your applications and enhances overall efficiency.
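The cosine-similarity comparison mentioned in the list above can be sketched in a few lines of plain Java 8. This is an in-memory illustration of the idea only, not how Azure Cognitive Search or Semantic Kernel implements it; the class `VectorSearchSketch`, the document IDs and the tiny three-dimensional vectors are hypothetical. Real embeddings have hundreds or thousands of dimensions, and a managed service would use an index rather than a full scan.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class VectorSearchSketch {

    // Cosine similarity: dot product divided by the product of the vector lengths.
    public static double cosineSimilarity(double[] a, double[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("Vectors must have the same length");
        }
        double dot = 0.0, normA = 0.0, normB = 0.0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0.0 || normB == 0.0) {
            return 0.0; // Treat a zero vector as similar to nothing.
        }
        return dot / (Math.sqrt(normA) * Math.sqrt(normB));
    }

    // Brute-force search: rank every stored vector against the query, keep the best k IDs.
    public static List<String> topK(double[] query, Map<String, double[]> index, int k) {
        List<Map.Entry<String, double[]>> entries = new ArrayList<>(index.entrySet());
        entries.sort(Comparator.comparingDouble(
                (Map.Entry<String, double[]> e) -> cosineSimilarity(query, e.getValue()))
                .reversed());
        List<String> ids = new ArrayList<>();
        for (int i = 0; i < Math.min(k, entries.size()); i++) {
            ids.add(entries.get(i).getKey());
        }
        return ids;
    }

    public static void main(String[] args) {
        // Hypothetical embeddings; in practice these would come from an embedding model.
        Map<String, double[]> index = new LinkedHashMap<>();
        index.put("doc-java", new double[] {0.9, 0.1, 0.0});
        index.put("doc-search", new double[] {0.1, 0.9, 0.2});
        index.put("doc-cooking", new double[] {0.0, 0.1, 0.9});

        double[] query = {0.8, 0.3, 0.0};
        System.out.println(topK(query, index, 2)); // prints [doc-java, doc-search]
    }
}
```

Cosine similarity depends only on the angle between two vectors, not their magnitudes, which is one reason it is a common choice for comparing embeddings.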

"By leveraging the power of Azure Cognitive Search with Semantic Kernel, you can now enjoy advanced search capabilities, seamless integration with other Azure services, and simplified memory management, taking your search experience to the next level," Microsoft said.

Topping off a busy Semantic Kernel announcement week for Microsoft were two other blog posts.

Also, earlier in the month, Microsoft published "Semantic Kernel Roadmap: Fall Release Preview." It shows that upcoming work involves:

- Plugin testing

- Dynamic planners

- End-to-end telemetry

- Streaming support

- Enhanced VS Code extension

It also indicates that the dev team is focused on delivering key developments and enhancements across three pillars: open source and trustworthiness, reliability and performance, and integration of the latest AI innovations. The roadmap fleshes those out as follows:

- To ensure openness and trust, we are adopting the OpenAI Plugin open standard, allowing users to create plugins that seamlessly work across OpenAI, Semantic Kernel, and the Microsoft platform. Our team is dedicated to scalability and reliability in the core kernel and planner.

- We are committed to enhancing reliability and performance by improving Planners, making them more efficient and capable of handling global-scale deployments effortlessly. Expect features like cold storage plans for consistency and dynamic planners that automatically discover Plugins.

- When it comes to the latest AI innovations, we are excited to integrate popular vector databases such as Pinecone, Redis, Weaviate, Chroma, along with Azure Cognitive Search and Services. Additionally, we are developing a document chunking service and enhancing the VS Code extension for Semantic Kernel.

About the Author

David Ramel is an editor and writer at Converge 360.