News

'Azure AI Content Safety' Service Targets Developer Online Environments

Microsoft shipped an Azure AI Content Safety service to help AI developers build safer online environments.

In this case, "safety" doesn't refer to cybersecurity concerns, but rather unsafe images and text that AI developers in the Azure cloud might run across in various content categories and languages.

The service uses advanced machine learning models to help organizations identify various types of undesirable content and then assign it a severity score that humans can use to prioritize and review the content, all with the aim of helping users feel safe and enjoy online experiences.
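
To illustrate how a severity score could drive human review, here is a minimal sketch that sorts flagged items so the most severe come first. The `FlaggedItem` type, its fields, and the sample values are hypothetical and are not part of the Azure service; the sketch only assumes that a higher score means more severe content.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class FlaggedItem:
    """Hypothetical record for a piece of content flagged by a moderation service."""
    item_id: str
    severity: int  # assumption: a higher number means more severe content


def review_order(items: List[FlaggedItem]) -> List[FlaggedItem]:
    """Return items sorted so reviewers see the most severe content first."""
    return sorted(items, key=lambda item: item.severity, reverse=True)


if __name__ == "__main__":
    queue = [FlaggedItem("a", 2), FlaggedItem("b", 6), FlaggedItem("c", 4)]
    for item in review_order(queue):
        print(item.item_id, item.severity)
```

Because Python's sort is stable, items with equal severity stay in their original order.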

"These capabilities empower businesses to effectively prioritize and streamline the review of both human and AI-generated content, enabling the responsible development of next-generation AI applications," Microsoft said of Azure AI Content Safety earlier this month.

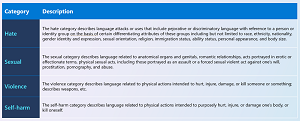

The service leverages text- and image-detection capabilities to find content that is offensive, risky or undesirable, Microsoft said in an Oct. 17 post announcing general availability of the cloud service. Examples of such dangerous content include profanity, adult content, gore, violence, hate speech and more.

Harmful Content (source: Microsoft).

"The impact of harmful content on platforms extends far beyond user dissatisfaction," Microsoft said. "It has the potential to damage a brand's image, erode user trust, undermine long-term financial stability, and expose the platform to potential legal liabilities."

That impact can be more significant with the recent proliferation of AI-created content enabled by advanced AI systems such as the ChatGPT chatbot from Microsoft partner OpenAI, which last year unleashed brand-new generative AI capabilities on the public.

"It is crucial to consider more than just human-generated content, especially as AI-generated content becomes prevalent," Microsoft said. "Ensuring the accuracy, reliability, and absence of harmful or inappropriate materials in AI-generated outputs is essential. Content safety not only protects users from misinformation and potential harm but also upholds ethical standards and builds trust in AI technologies. By focusing on content safety, we can create a safer digital environment that promotes responsible use of AI and safeguards the well-being of individuals and society as a whole."

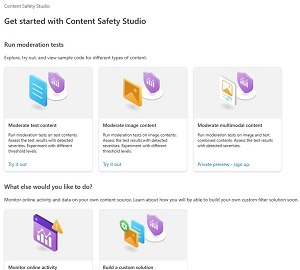

The company pointed to the interactive Azure AI Content Safety Studio as a tool developers can use to view, explore, and try out sample code for detecting harmful content across different modalities. At this point, it can moderate text and image content, while the capability to moderate multimodal (combined image/text) content is in preview.

Azure AI Content Safety Studio

The studio includes a dashboard to help developers keep track of images and text, a task that can also be handled through an API used to analyze text content.

This week, the company published another post discussing the new service in the context of its overall Responsible AI and content safety efforts.

"The new Azure Content Safety service can be a part of your AI toolkit to ensure your users have high-quality interactions with your app," this week's post said. "It is a content moderation platform that can detect offensive or inappropriate content in user interactions, and provide your app with a content risk assessment based on different types of problem (eg. violence, hate, sexual, and self-harm) and severity. This high level of granularity provides your app with the tools to block interactions, trigger moderation, add warnings, or otherwise handle each safety incident appropriately to provide the best experience for your users."

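As a rough illustration of how an app might act on a per-category risk assessment, the sketch below maps the highest severity found to one of the responses the post mentions (block, trigger moderation, add a warning). The function name, the dictionary shape, and the threshold values are all assumptions chosen for illustration; they are not taken from the Azure API or from Microsoft's guidance.

```python
from typing import Mapping


def choose_action(
    severities: Mapping[str, int],
    block_at: int = 6,   # hypothetical threshold
    review_at: int = 4,  # hypothetical threshold
    warn_at: int = 2,    # hypothetical threshold
) -> str:
    """Pick a response from the worst severity across all categories.

    `severities` maps a category name (for example "violence" or "hate")
    to a severity number, where a higher number is assumed to be worse.
    """
    worst = max(severities.values(), default=0)
    if worst >= block_at:
        return "block"
    if worst >= review_at:
        return "review"
    if worst >= warn_at:
        return "warn"
    return "allow"


if __name__ == "__main__":
    print(choose_action({"violence": 6, "hate": 0, "sexual": 0, "self_harm": 0}))
```

A real application would likely tune thresholds per category rather than share one set across all of them.
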
More information is available in the "What is Azure AI Content Safety?" documentation, along with the Azure AI Content Safety Sample Repo on GitHub and an introductory video.

The service uses a pay-as-you-go pricing plan.

About the Author

David Ramel is an editor and writer at Converge 360.