News

Microsoft's Semantic Kernel SDK Ships with AI Agents, Plugins, Planners and Personas

Semantic Kernel, Microsoft's open source AI SDK, has shipped as v1.0.1.

The release comes with new documentation explaining how the SDK can be used to create AI agents that interact with users, answer questions, call existing code, automate processes and perform various other tasks.

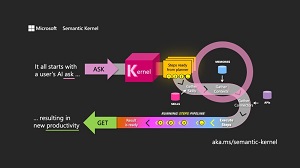

The SDK acts as an AI orchestration layer for Microsoft's stack of AI models and Copilot AI assistants, providing interaction services to work with underlying machine learning foundation models and AI infrastructure. The company explains more in its documentation: "Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds."

Semantic Kernel (source: Microsoft).

Shortly after shipping a release candidate, Microsoft today (Dec. 19) announced Semantic Kernel V1.0.1, describing it as a solid foundation with which to build those AI agents for AI-powered applications.

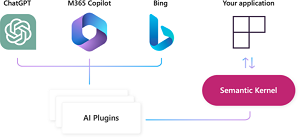

Semantic Kernel in the Microsoft Ecosystem (source: Microsoft).

The announcement post details how to build your first agent with the help of new packages and docs.

Updated documentation on learn.microsoft.com helps users get familiar with the new UI. The docs still explain core Semantic Kernel concepts like prompts and the kernel, and they also cover the core components necessary to build AI agents, including plugins, planners and personas.

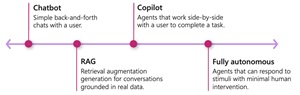

Semantic Kernel Core Components (source: Microsoft).

"We are extremely excited about our updated docs because it's never been easier to learn how to build agents for your AI applications," said Matthew Bolanos of the dev team. "With Semantic Kernel, we make it incredibly easy to build an agent with a personalized persona that can automatically call plugins using planners or with automated function calling. Once you've gotten the basics down, you can then build anything from a simple chatbot, to a fully autonomous agent."

Semantic Kernel Agents (source: Microsoft).
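
To make the agent idea concrete, here is a minimal toy sketch in plain Python of how a persona, plugins and a planner might fit together. It does not use the Semantic Kernel packages or their API: `ToyAgent`, `add_plugin`, `plan` and `run` are hypothetical names invented for illustration, and the keyword-matching "planner" is only a stand-in for the model-driven planning and automated function calling the SDK provides.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class ToyAgent:
    """Illustrative stand-in for an agent. NOT the Semantic Kernel API."""

    persona: str  # short description of how the agent presents itself
    plugins: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def add_plugin(self, name: str, func: Callable[[str], str]) -> None:
        """Register existing code under a name the planner can select."""
        self.plugins[name] = func

    def plan(self, request: str) -> List[str]:
        """Hypothetical planner: pick plugins whose name appears in the request.

        A real planner would ask a language model which functions to call.
        """
        words = request.lower().split()
        return [name for name in self.plugins if name in words]

    def run(self, request: str) -> str:
        """Plan, call the selected plugins in order, and answer in persona."""
        steps = self.plan(request)
        if not steps:
            return f"[{self.persona}] No plugin matched."
        results = [self.plugins[name](request) for name in steps]
        return f"[{self.persona}] " + " ".join(results)


# Two trivial plugins wrapping ordinary Python code.
agent = ToyAgent(persona="Friendly helper")
agent.add_plugin("count", lambda text: f"{len(text.split())} words.")
agent.add_plugin("shout", lambda text: text.upper())

print(agent.run("count these words please"))  # [Friendly helper] 4 words.
```

In this sketch, the plugins are ordinary functions, the planner only decides which of them to call, and the persona only shapes the reply. That is a deliberately simplified reading of the three components the documentation describes.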

While small bits of newer functionality were detailed in the release candidate announcement and other posts, today's post provides an overview, focuses on the docs, lists various available packages (including preview and alpha) and points to Discord as the best place to get help in building a first agent.

Going forward, in January 2024 the team will focus on three core themes:

- AI connectors -- for example, Phi, Llama, Mistral, Gemini, and the long tail of Hugging Face and local models.

- Memory connectors -- updating the current memory connectors to better leverage the features of each service.

- Additional agent abstractions -- e.g., abstractions that allow developers to build non-OpenAI-based assistants.

"Since the core team still needs to iron out the abstractions and interfaces for #2 and #3, we recommend that the community help us focus on #1," Bolanos said. "Our hope is to have AI connectors for all of the top models as quickly as we can in the new year."

About the Author

David Ramel is an editor and writer at Converge 360.