News

Semantic Kernel AI SDK Gets Autonomous Agents (Experimental)

As AI matures, agents have become one of its most significant areas of development, and Microsoft's Semantic Kernel AI development tooling is getting them, for now as an experimental feature.

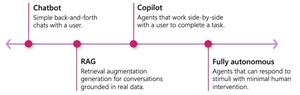

Agents go beyond the initial model of AI, which was more about reacting to user inputs with data and algorithms, and provide a more autonomous, self-directed approach. They can perform tasks, interact with their environment and make decisions based on predefined objectives or learned behavior. They can operate in various domains, including natural language processing (NLP), robotics, gaming and simulations.

Other Microsoft efforts to develop agents include the AutoGen Framework (see the article, "Researchers Take AI Agents to the Next Level with the AutoGen Framework").

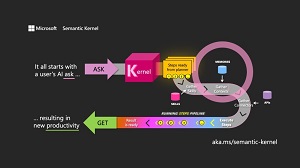

Now, agents have been unveiled in yet another framework -- Agent Framework -- as an experimental feature in Semantic Kernel, Microsoft's SDK designed to help developers create and manage AI-driven applications that use natural language understanding and generation. The SDK focuses on integrating advanced AI models, such as those for NLP, into various applications, enabling more intelligent and context-aware interactions.

Semantic Kernel (source: Microsoft).

Agents actually appeared in Semantic Kernel v1.0.1 late last year, but that was mostly in the form of documentation and core components necessary to build AI agents (see the article, "Microsoft's Semantic Kernel SDK Ships with AI Agents, Plugins, Planners and Personas").

Semantic Kernel Agents (source: Microsoft).

The new Agent Framework is hosted on GitHub as an open-source project, like Semantic Kernel (SK) itself.

"We are excited to announce support for agents as part of the Semantic Kernel ecosystem: Agent Framework (Semantic Kernel GitHub Repo)," said Microsoft's Chris Rickman in an Aug. 1 blog post. "An agent may be thought of as an AI entity that is focused on a performing a particular role. Multiple agents may collaborate to accomplish a complex task. In addition to their role definition, each agent may be defined with its own tool-set. In Semantic Kernel, this means each agent may be associated with plug-ins and functions that support its role."

Multiple Agents Interacting (source: Microsoft).
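Rickman's description of an agent as a role plus its own tool-set can be illustrated with a minimal sketch. This is plain Python, not the Semantic Kernel API: the `Agent` class, the `use_tool` method and the two toy tools are all hypothetical names invented for illustration, and ordinary functions stand in for plug-ins.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Agent:
    """Hypothetical agent: a named role plus its own tool set (not SK's API)."""
    name: str
    role: str  # short description of what the agent is responsible for
    tools: Dict[str, Callable[[str], str]] = field(default_factory=dict)

    def use_tool(self, tool_name: str, argument: str) -> str:
        # An agent can only call tools that were registered for its role.
        if tool_name not in self.tools:
            raise KeyError(f"{self.name} has no tool named {tool_name!r}")
        return self.tools[tool_name](argument)


def shout(text: str) -> str:
    """Toy tool: upper-case the text."""
    return text.upper()


def word_count(text: str) -> str:
    """Toy tool: count whitespace-separated words."""
    return str(len(text.split()))


writer = Agent("Writer", "Drafts copy", tools={"shout": shout})
reviewer = Agent("Reviewer", "Checks drafts", tools={"word_count": word_count})

# Two agents collaborate: the writer produces a draft, the reviewer measures it.
draft = writer.use_tool("shout", "agents are here")
report = reviewer.use_tool("word_count", draft)
print(draft, report)
```

Keeping the tool table on each agent, rather than in one global registry, mirrors the idea that a tool-set belongs to a role: here the writer cannot call `word_count` and the reviewer cannot call `shout`.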

As for the experimental nature of the project, Microsoft said:

"Note: The Agent Framework is currently marked as experimental along with other certain Semantic Kernel features. Even though we strive to maintain stable development patterns, there may be updates that break compatibility until we are able to graduate the Agent Framework."

Initial working agents include the ChatCompletionAgent, designed to, yes, complete chats, and the OpenAIAssistantAgent, based on the OpenAI Assistant API.

Rickman explained that the Agent Framework offers two modes for interacting with an agent: directly (no-chat) or via an AgentChat. His post goes on to provide more details.
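The difference between the two modes can be sketched in a few lines. The code below is an illustrative assumption, not the Semantic Kernel API: `SimpleAgent`, `SimpleChat`, `invoke`, `run` and the round-robin turn order are hypothetical, and a plain function replaces the call to a real model.

```python
from typing import Callable, List, Tuple

Message = Tuple[str, str]  # (speaker, text)


class SimpleAgent:
    """Hypothetical stand-in for an agent; reply_fn replaces a real model call."""

    def __init__(self, name: str, reply_fn: Callable[[List[Message]], str]):
        self.name = name
        self.reply_fn = reply_fn

    def invoke(self, history: List[Message]) -> str:
        # Direct mode: the caller owns the history and passes it in.
        return self.reply_fn(history)


class SimpleChat:
    """Hypothetical chat: owns the shared history and picks who speaks next."""

    def __init__(self, agents: List[SimpleAgent]):
        self.agents = agents
        self.history: List[Message] = []

    def add_user_message(self, text: str) -> None:
        self.history.append(("user", text))

    def run(self, turns: int) -> List[Message]:
        # Round-robin turn-taking; each reply is appended to the shared history.
        for turn in range(turns):
            agent = self.agents[turn % len(self.agents)]
            self.history.append((agent.name, agent.invoke(self.history)))
        return self.history


echo = SimpleAgent("Echo", lambda h: f"You said: {h[-1][1]}")
counter = SimpleAgent("Counter", lambda h: f"{len(h)} messages so far")

# Mode 1: direct invocation, no chat object involved.
direct_reply = echo.invoke([("user", "hello")])

# Mode 2: the same agents take turns inside a chat.
chat = SimpleChat([echo, counter])
chat.add_user_message("hello")
transcript = chat.run(turns=2)
```

In the direct call the caller manages the conversation state, while in the chat the history and the choice of next speaker live in one place, which is what lets several agents respond to one another.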

He also foreshadowed future development, with features in the works including:

- Semantic Kernel Python Agent Framework (in progress)

- Support for OpenAI Assistant V2 features (soon!)

- Serializing and restoring AgentChat (soon!)

- Streaming for all agents and AgentChat

- History truncation strategy for ChatCompletionAgent

- Improved chat patterns

Rickman invited feedback on the Semantic Kernel GitHub Discussion Channel.

About the Author

David Ramel is an editor and writer at Converge 360.