It's 1:30 a.m., you're sweating bullets and your head is pounding. You can feel the stress wracking your body as you try to concentrate. The code-complete deadline for your part of the module is coming up, and the bean-counters are on everyone's back. Product has to ship on time -- period.

You've almost got that last, thorny problem licked, but testing all the different security scenarios with this new cloud-based database-conversion tool takes soooo much time. A potential "connection string pollution" vulnerability has popped up in the back of your mind, but you're unsure how dangerous it is -- or even if it's possible. It's a brand-new threat. It will take forever to investigate.

What the heck. No one will ever find it. You can't be the one holding things up -- again. There are thousands of talented coders out there who would jump at the chance to slide into your job at half your salary. You've got mouths to feed. Let QA worry about it.

Could this happen? Has it? Who's to blame for the sorry state of application security these days?

Here's a chilling quote:

"Security is not something app developers have prioritized in the past. Their focus has been getting a product that has a competitive edge in terms of features and functionality to market as quickly as possible. That's not a criticism, it's just a factor of commercial priority."

That just happens to have come from the world's biggest app dev shop, shipping more "product" than anyone else: Microsoft. David Ladd, principal security program manager at Microsoft, said it in a news release earlier this week announcing that the company is "helping the developer community by giving away elements of its Security Development Lifecycle process."

It couldn't come at a better time. "Today, in the middle of the worst economic downturn in thirty years, information security has an enormously important role to play," reads the 2010 Global State of Information Security Survey from PricewaterhouseCoopers.

"Not surprisingly, security spending is under pressure," the report states. "Most executives are eyeing strategies to cancel, defer or downsize security-related initiatives."

But the report is generally optimistic, finding that "Global leaders appear to be 'protecting' the information function from budget cuts -- but also placing it under intensive pressure to 'perform.' "

That makes sense to me. It would have to be a short-sighted executive indeed who would risk all the associated costs of a data breach just to meet a deadline. How bad can those costs be? Just ask TJX.

Then again, that executive has mouths to feed, and "commercial priority" and "intense pressure to perform" could cloud judgment.

What about your shop? Where do the priorities lie? Know any juicy stories about security breaches caused by economic pressures? I'd love to hear about them. Comment here or send me an e-mail. But for goodness' sake, don't put any sensitive information in it. There are bad guys out there.

Posted by David Ramel on 02/04/2010 | 0 comments

I previously wrote about an interesting demonstration that involved a 44-million-record data set imported into PowerPivot, Microsoft's coming "self-service business intelligence" add-in for Excel 2010 and SharePoint 2010.

Connecting to a cloud data service, importing a feed into Excel and culling BI info from the data is remarkably easy. You can try it for free with the Microsoft Office 2010 Beta (requires registration) and PowerPivot for Excel 2010.

Here's how.

Click on the PowerPivot tab in the main Excel menu, then click on the PowerPivot window, Launch button. It will take a few moments to load, displaying the message: "Preparing PowerPivot environment, please wait."

Now in the PowerPivot window, click on From Data Feeds under the Home menu item. You are presented with two options: From Reporting Services and From Other Feeds. Click on the latter. This brings up the Table Import Wizard (Fig. 1), where you input the data feed URL.

Figure 1. Table Import Wizard.

You can find online data feeds with a Web search. I used the Open Government Data Initiative, specifically the Crime Incidents data set under the District of Columbia "Container" (Fig. 2).

Figure 2. Crime Incidents data is found in the District of Columbia container.

Interestingly, clicking on Advanced brings up a window with "Provider: Microsoft Data Feed Providers for Gemini" as the only option. This is one of several leftover references to the former code-name of the project, Gemini. The Advanced button brings up settings for Context, Initialization, Security and Source that adjust the connection string, along with a Test Connection button.

If you're not into Advanced settings, just paste in the source data set URL, click the Test Connection button, and a window comes up with "Test connection successful" (in a window titled "Gemini Client"; see Fig. 3).

Figure 3. Testing the connection with the cloud data.

Click on Next. The Table Import Wizard shows a window where you can "Select Tables and Views" if you just want to download parts of the feed (Fig. 4). There is also a Preview & Filter button that lets you see the data as it will appear and deselect different columns to tailor what you want to download.

Figure 4. Selecting Tables and Views you want to work with.

Click Finish. It takes a few seconds to import, then displays "Success"; under Status it shows you how many rows transferred (368 in this case).
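To make the import step a little less magical, here is a minimal sketch of what turning a data feed into rows can look like. It assumes the feed is Atom with OData-style `m:properties` payloads; the sample feed and its field names (`offense`, `shift`, `ward`) are hypothetical stand-ins, not the actual OGDI schema, and a real feed would be downloaded from its URL rather than embedded as a string.

```python
import xml.etree.ElementTree as ET

# Namespaces for Atom and OData-style payloads (assumed format for this sketch).
NS = {
    "atom": "http://www.w3.org/2005/Atom",
    "m": "http://schemas.microsoft.com/ado/2007/08/dataservices/metadata",
    "d": "http://schemas.microsoft.com/ado/2007/08/dataservices",
}

# Hypothetical two-row sample feed; field names are illustrative only.
SAMPLE_FEED = """<feed xmlns="http://www.w3.org/2005/Atom"
      xmlns:m="http://schemas.microsoft.com/ado/2007/08/dataservices/metadata"
      xmlns:d="http://schemas.microsoft.com/ado/2007/08/dataservices">
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:offense>THEFT</d:offense>
        <d:shift>EVN</d:shift>
        <d:ward>2</d:ward>
      </m:properties>
    </content>
  </entry>
  <entry>
    <content type="application/xml">
      <m:properties>
        <d:offense>BURGLARY</d:offense>
        <d:shift>DAY</d:shift>
        <d:ward>6</d:ward>
      </m:properties>
    </content>
  </entry>
</feed>"""


def feed_to_rows(feed_xml):
    """Convert each Atom entry into a dict of column name -> text value."""
    root = ET.fromstring(feed_xml)
    rows = []
    for props in root.iterfind("atom:entry/atom:content/m:properties", NS):
        row = {}
        for child in props:
            # ElementTree tags look like "{namespace}name"; keep only the name.
            row[child.tag.split("}", 1)[1]] = child.text
        rows.append(row)
    return rows


rows = feed_to_rows(SAMPLE_FEED)
print(len(rows), "rows imported")
```

Each entry becomes one row, which is roughly the shape of the table the wizard reports on when it says how many rows were transferred.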

Click Close, and a PowerPivot window comes up with all the data in tabular format (Fig. 5).

Figure 5. Time for some PowerPivot magic.

Click on PivotTable, and you are presented with various options for the number/type of tables to display, with or without charts, vertical or horizontal, and so on. I chose two vertical charts. You are asked whether you want to "Insert Pivot into New Worksheet or Existing Worksheet." Placeholders for charts come up, with a "Gemini Task Pane" (there's that code-name again) that lets you choose which fields to add to your charts, along with options for vertical and horizontal slicers, report filter, legend fields, axis fields and values.

I wanted to see which parts of D.C. are the most dangerous and when, so I chose Offense, Shift and Ward fields from the Crime Incidents data feed (Fig. 6).

Figure 6. Selecting Offense, Shift and Ward fields in the Crime Incidents table.

I then set up tables and charts to show crimes by Ward and by Shift, and with a simple formula constructed a pie chart displaying what percentage of crimes were committed during each shift. My resulting "BI" informs me that most crimes (38 percent) are committed during the Evening shift and most crimes occur in Ward 2 (Fig. 7).

Figure 7. Crimes by Ward and Shift, with a handy pie chart.
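For readers who want to see the arithmetic behind the pie chart outside of Excel, the same "percentage of crimes per shift" and "busiest ward" questions can be sketched in a few lines. The rows below are made up for illustration (they are not the D.C. data), and the helper names are hypothetical.

```python
from collections import Counter

# Hypothetical sample rows; the real feed had 368 rows.
ROWS = [
    {"shift": "EVN", "ward": "2"},
    {"shift": "EVN", "ward": "2"},
    {"shift": "DAY", "ward": "1"},
    {"shift": "MID", "ward": "2"},
    {"shift": "EVN", "ward": "6"},
]


def share_by_field(rows, field):
    """Return each distinct value's share of all rows, as a percentage."""
    counts = Counter(row[field] for row in rows)
    total = sum(counts.values())
    return {value: round(100 * n / total, 1) for value, n in counts.items()}


def top_value(rows, field):
    """Return the most frequent value in a column."""
    return Counter(row[field] for row in rows).most_common(1)[0][0]


print(share_by_field(ROWS, "shift"))
print("Busiest ward:", top_value(ROWS, "ward"))
```

A pivot table does essentially this grouping and counting; the pie chart is just the percentages drawn as slices.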

There you go: practical, hands-on advice from the cloud, presented here free for your personal safety. Don't mess around in D.C.'s Ward 2 during the Evening police shift.

What danger areas are you avoiding these days? Comment below or e-mail me.

Posted by David Ramel on 02/01/20100 comments

At the front of a crowded room, Carmen Taglienti stood over his laptop, hovered the cursor over the "Sort" button and clicked. Instantly, some 44 million records in his Excel app were sorted, newest to oldest, top to bottom. That's right: 44 million records. Welcome to the world of "self-service BI."

Taglienti, a Microsoft technology architect, was at the monthly meeting of the New England SQL Server User Group to show off the PowerPivot for Excel 2010 add-in (formerly code-named "Gemini"). He said Microsoft developed PowerPivot to rein in the growing enterprise problem of "spreadmarts," basically tech-savvy users developing their own sophisticated spreadsheets to cull business intelligence out of various data sets and shipping the resulting -- sometimes gigantic -- files all over the company via e-mail, with no organization, security or management.

With PowerPivot, he said, users can create much more useful BI and share it throughout the company via SharePoint 2010, for example (PowerPivot is also a SharePoint add-in).

His presentation elicited some "oohs" and "aahs" from the 40-plus developers at the meeting, with the 44-million-record example generating special interest. Previously, Excel users were limited to using 1 million records.

"It's amazing," said Taglienti, as several people inquired about the demonstration. It did seem hard to believe that 44 million records could be instantly sorted on a laptop.

Granted, he was using a 64-bit machine with 4GB of RAM, but he said the remarkable speed of his demonstration was primarily attributable to compression technology that shrinks huge data sets so that they can be manipulated entirely in-memory. In fact, Taglienti said, the 44 million records were squeezed into an XLSX file of less than 5MB. That also got everybody's attention: 44 million records in a 5MB file. PowerPivot's data engine technology (called VertiPaq) has achieved up to about 20-to-1 compression, he said.
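Why can tabular data shrink that much? Forty-four million records in under 5MB works out to roughly one bit per record, which only makes sense if the columns are highly repetitive. The presentation did not describe VertiPaq's internals, so the following is not its algorithm; it is a generic sketch of two common column-store tricks, dictionary encoding and run-length encoding, using made-up data.

```python
def dictionary_encode(column):
    """Replace each value with a small integer ID into a list of distinct values."""
    ids = {}
    encoded = []
    for value in column:
        # A new value gets the next unused ID; a repeated value reuses its ID.
        encoded.append(ids.setdefault(value, len(ids)))
    return list(ids), encoded


def run_length_encode(ids):
    """Collapse consecutive repeats into [id, count] pairs."""
    runs = []
    for i in ids:
        if runs and runs[-1][0] == i:
            runs[-1][1] += 1
        else:
            runs.append([i, 1])
    return runs


def decode(dictionary, runs):
    """Rebuild the original column, showing the encoding is lossless."""
    return [dictionary[i] for i, count in runs for _ in range(count)]


# Hypothetical sorted-ish column with few distinct values.
column = ["EVN"] * 4 + ["DAY"] * 3 + ["EVN"] * 2
dictionary, ids = dictionary_encode(column)
runs = run_length_encode(ids)
print(dictionary, runs)
```

Nine strings collapse into a two-entry dictionary and three short runs; on millions of rows with few distinct values per column, the savings compound.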

Surprisingly, it turns out that 44 million records is nothing special. There has actually been a 100-million-record PowerPivot experiment. Taglienti also demonstrated how PowerPivot can access data from the cloud. He showed how easy it was to connect, in just a few clicks, to an Open Government Data Initiative data feed and download it into PowerPivot. The OGDI data is hosted -- of course -- in Windows Azure, Microsoft's fledgling cloud platform.

Other usable data sources include several different kinds of databases, files and Microsoft Reporting Services feeds. The strategy behind PowerPivot is "to empower the end user" to do their own data analysis and intuitively build their own custom BI solutions, Taglienti said. This lets IT concentrate more on infrastructure and data management instead of numerous custom BI projects.

He stressed that it's all beta technology at the moment, and Microsoft isn't entirely certain how it will be leveraged in real-world, day-to-day use. He posed the question of whether -- if a company has some 10,000 PowerPivot cubes floating around in SharePoint, for example -- PowerPivot should have its own server. While questions such as that get sorted out, Microsoft is out on the front lines trying to convince users that, as Taglienti says, "it's a game-changer."

The mood at the meeting seemed to be wait-and-see.

Teresa DeLuca, a Web developer at International Data Group, which is headquartered in Boston, said she didn't anticipate using PowerPivot. But she said, "I do work with pivot tables a lot," so there might be some benefit to checking the product out.

Dean Serrentino, a software developer/consultant at Paradigm Information Systems in Wilmington, Mass., said before the meeting, "I'm totally unfamiliar with it" and that he was at the meeting to learn more about it. "I usually don't go bleeding edge," he said. After the meeting, he said in an e-mail, "I don't anticipate using it for two reasons. First, I think it is too complicated for use by the audience that would most benefit from it. Second, it is an SQL Server Enterprise version feature. Most of my clients are small to medium sized businesses and don't need the features in SS Enterprise, or have the resources to afford it."

Gary Chin, an independent developer in Newton, Mass., said, "I don't see using it immediately, but it's going to come in handy for BI and SQL Server applications ... the whole idea of storing the live data from SQL Server and downloading it down to financial analysts or other users that need to manipulate the data to see what happens within the data."

Some people are more skeptical. Read this entertaining, semi-contentious exchange between one of PowerPivot's founding engineers and a user arguing about what capabilities PowerPivot provides that plain old Excel doesn't.

What do you think? Marketing hype or must-have technology? Have you tried it? Are you going to? Comment here or send me an e-mail.

Posted by David Ramel on 01/22/2010 | 2 comments

Some interesting salary data shows that there's still a strong need for software and Web developers stateside.

Jobs: Software engineer rated No. 2 on CareerCast.com's recent listing of the top 200 jobs for 2010, ranked on factors such as physical demands, work environment, income, etc.

Web developer was No. 15, and computer programmer was No. 34. (On a sad personal note, the jobs I have held rank No. 65 (publication editor); 79 (public relations executive, though I was a mere "PR specialist" -- didn't even make the list); 184 (newspaper reporter); and 199 (lumberjack -- only a "roustabout" was ranked lower).)

CareerCast.com listed these salaries for the above positions:

- Software engineer: $85,139

- Web developer: $60,090

- Computer programmer: $70,178

That got me wondering about the IT job market, especially related to developers. It wasn't that long ago that everybody was bemoaning the impending death of the profession -- at least in the U.S. -- because the jobs were going to offshore outsourcers who could pay coders much less than what they were making here.

So I poked around the Web to answer some questions. Just what is the consensus on developer salaries (especially database-related, of course)? What are the hot technologies? Where is the action? What issues are database coders dealing with on the front lines?

Salaries: The Redmond 2009 Salary Survey, in a survey of Microsoft IT compensation, reported salaries of:

- Programming project lead (Non-Supervisory): $100,635

- Database administrator/developer: $80,894

- Programmer/analyst: $78,818

- Average base salary of respondents: $83,113

The 2009 IOUG Salary Survey (International Oracle User Group) reported an average base salary of about $96,000 per year for "Oracle technology professionals."

Dice.com reports current average salaries of:

- Software engineer: $90,031

- Database administrator: $89,742

- Database developer: $84,176

CareerBuilder's salary site reports current average salaries of:

- Software engineer: $90,530

- Computer programmer: $72,661

- Database developer: $95,951

(On a sad personal note, it says "features editors," of which I am one now, earn an average of $49,686.)

Clouds in the news: It appears I'm not the only one infatuated with the cloud lately (see blogs below). A quick scan of some major IT publications' home pages last week revealed the term "cloud" was quite popular:

Insights & Trends: Google Insights reveals how many searches have been done for some common database technologies, relative to the total number of U.S. searches in the "Computers & Electronics" category worldwide in 2009:

- SQL Server: 90

- MySQL: 78

- DB2: 18

- Oracle 10g+Oracle 11g: 10

Oracle/Sun acquisition thing.

Issues: So what are database developers struggling with? Here are the top forum topics in terms of views on various DBForums.com categories in 2009:

Microsoft SQL Server

DB2

MySQL

Oracle

Let's get some more info from the front lines. What are you struggling with? What do you like about your job, or dislike? What are the pros and cons of the different technologies and products? Where is the industry heading? Is the cloud where it's at, or just a passing fad? How much do you think features editors should make? Comment below or send me an e-mail.

P.S.: Don't ever call a logger a "lumberjack," at least in Montana. Nobody uses that term.

Posted by David Ramel on 01/15/2010 | 0 comments

Imagine the world's information at your fingertips. Imagine being able to slice and dice it as you choose. Imagine you're a sales exec who, with just a few clicks, can pull up reports on consumer spending and demographics, mapped to your sales areas -- or your competitors' sales areas -- just about anywhere.

And the best part is that you don't have to invest in your own data warehouse, with all the accompanying costs and hassles. You can just pull the data out of the cloud.

This will soon be a reality as Microsoft's Windows Azure platform goes commercial, and vast repositories of public and private data are made available via subscription through Microsoft's Dallas project. These data feeds will consist of everything from AP news headlines to business analysis services to topographic maps to global U.N. statistics on just about anything you can think of.

I've been fascinated with Dallas since it was announced at the recent PDC 09 with much fanfare. I keep thinking of all that information out there and what people will be able to do with it. Maybe the yottabytes of government statistics we've been paying for will actually be put to good use.

If you haven't checked out Dallas, you should. It's still free through the end of the month.

Just to show how easy it is, I signed up, subscribed to some feeds and displayed them in a Windows Forms app. I then went further and fed U.S. crime data into a live ASP.NET site that lets me massage and cull information not readily apparent, such as cities with the highest crime rate in each state. (By the way, I'm using MaximumASP's service to host the site; it offers free beta accounts for developers to play around with ASP.NET 4 and Visual Studio 2010.)

I even analyzed the data with PowerPivot and Excel. BI for the masses is here.

Here's how to get started. First, check out the Dallas Quick Start. From there go to the Dallas Developer Portal to sign in with a Live ID, create an account and request an invitation token.

After you get the token you log in and you're in business. At the home page, you will see tabs for the catalog of available subscriptions, your subscriptions, your account keys and an access report that shows you how many times your subscriptions have been tapped.

Clicking on Catalog brings up dozens of feeds to which you can subscribe. Most of them are still in the "coming soon" stage, but available feeds include infoUSA business stats, U.S. crime statistics, AP headlines, NASA Mars images and U.N. data. Here's a sampling of some coming feeds:


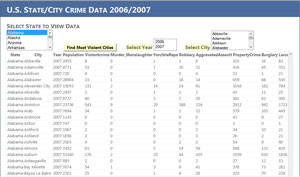

I subscribed to "2006 and 2007 Crime in the United States" from DATA.gov. Clicking on that link from your subscription page brings you to a page where you can preview the data and invoke the service, selecting state, city and year, with your choice of a table display or Atom 1.0 and Raw XML formats. Here is how the crime statistics look for Alabama cities for the year 2007, with the preview function:


Of special interest to developers are the capability to copy the URL for the service to your clipboard, the request header used to call the service, and downloadable C# "service classes" that you can plug into your Visual Studio projects. You can use the request header to invoke the service with REST APIs in just about any programming environment. For .NET developers, all you need to do is plug your account number and a unique user ID (GUID) into the downloaded proxy class code and it will take care of all the nuts and bolts of accessing the service.
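Outside .NET, calling such a service over REST mostly comes down to building a URL and attaching the credentials as request headers. The sketch below shows the idea with Python's standard library only. The service URL, the query parameter names and the header names are all hypothetical placeholders; the real values are the ones the portal lets you copy. Nothing is sent over the network here.

```python
import urllib.parse
import urllib.request


def build_feed_request(service_url, query, account_key, user_id):
    """Build (but do not send) an HTTP GET request for a data feed."""
    url = service_url + "?" + urllib.parse.urlencode(query)
    request = urllib.request.Request(url)
    # Hypothetical header names: substitute the ones shown in the portal's
    # request header for your subscription.
    request.add_header("X-Account-Key", account_key)
    request.add_header("X-Unique-User-Id", user_id)
    return request


request = build_feed_request(
    "https://example.invalid/crime/v1",         # placeholder service URL
    {"state": "Alabama", "year": "2007"},       # placeholder parameter names
    account_key="YOUR-ACCOUNT-KEY",
    user_id="00000000-0000-0000-0000-000000000000",
)
print(request.full_url)
# To actually call a real service: urllib.request.urlopen(request).read()
```

Keeping request construction separate from sending makes it easy to inspect exactly what will go over the wire before any credentials leave the machine.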

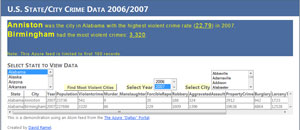

I built an ASP.NET Web page and used the crime stats proxy class to display the feed data in a GridView. It looks much like the preview of the data on the portal, with options provided through ListBoxes to display data by state, city and year:


To demonstrate rudimentary data analysis, I added a "Find Most Violent Cities" button to display the cities in a particular state that had the highest crime rate (using a standard formula) and highest number of violent crimes in 2006 or 2007. Here's the result:


As mentioned, I published the project so you can check it out yourself to get an idea of what can be done with minimal effort.
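The "most violent cities" analysis described above can be sketched in a few lines. The post does not say which formula was used, so this assumes the common convention of crimes per 100,000 residents; the city names, field names and figures are invented for illustration.

```python
def crime_rate(violent_crimes, population, per=100_000):
    """Crimes per `per` residents (per-100,000 is an assumed convention)."""
    return violent_crimes * per / population


def most_violent_city(rows, state):
    """Return the row with the highest crime rate in a state, or None."""
    in_state = [r for r in rows if r["state"] == state and r["population"] > 0]
    return max(
        in_state,
        key=lambda r: crime_rate(r["violent_crimes"], r["population"]),
        default=None,
    )


# Hypothetical rows, not real statistics.
ROWS = [
    {"state": "XX", "city": "Alpha", "population": 200_000, "violent_crimes": 1_000},
    {"state": "XX", "city": "Beta", "population": 50_000, "violent_crimes": 400},
    {"state": "YY", "city": "Gamma", "population": 80_000, "violent_crimes": 900},
]

worst = most_violent_city(ROWS, "XX")
print(worst["city"], crime_rate(worst["violent_crimes"], worst["population"]))
```

Note that Alpha has more crimes in absolute terms but Beta has the higher rate, which is exactly the kind of information that is "not readily apparent" from the raw table.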

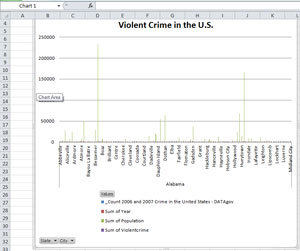

For more serious data analysis and business intelligence, you might want to use Microsoft's PowerPivot. To test it, I downloaded the Office 2010 beta and PowerPivot for Excel 2010 (it's also available for SharePoint 2010).

I'm not a numbers guy, so I was lost from there on, but by clicking on the "Analyze" button on my Dallas crime statistics page, I downloaded the feed's data directly into PowerPivot/Excel:


I only managed to put some data into a useless, cheesy looking chart, but you get the idea of what could be done.

From here on, the possibilities are endless, especially when all the promised feeds come online. I wish my free trial subscriptions could go on forever, but Microsoft will soon start commercializing Dallas and the other Azure offerings. I haven't found any subscription pricing info.

In the meantime, I would love to see other ways that developers are using Dallas. As Microsoft's Dave Campbell said at PDC 09, Dallas could be the "killer app" for the cloud. There must be some cool experimentation going on out there.

Please share your project via the comments section here or shoot me an e-mail.

Posted by David Ramel on 01/06/2010 | 0 comments

Got Azure? I do!

Here's a ringing endorsement for the simplicity of the Windows Azure platform: I was able to migrate a database into a SQL Azure project and display its data in an ASP.NET Web page. What's more, I actually developed a Windows Forms application that displayed some of the vast store of public data accessible via Microsoft's Dallas project.

And believe me, if I can do it, anybody who knows what a connection string is can do it. It's wicked easy, as they say here in the Boston area.

I've long been fascinated by the cloud. In fact, almost exactly three years ago I commissioned an article on the nascent technology, having identified it as the future of... well, just about everything. For me, a coding dilettante, there's just something cool about the novelty of being in the cloud. For IT pros, it must be exciting having no worries about hardware, the nitty gritty of administration minutiae, and so on.

So if you haven't yet, you should check out the cloud. Microsoft's Windows Azure platform services are free until Feb. 1.

Here's how I did it. I used a laptop running Windows 7, with Visual Studio 2010 Beta 2, SQL Server Management Studio, SQL Server Express, the Windows Azure SDK, the Windows Azure Toolkit for Visual Studio 2010, and the Windows Azure Platform Training Kit.

To start out, I asked our friends in Redmond for a SQL Azure October CTP invitation through Microsoft Connect. This just involved signing in with a Windows Live ID and answering a few questions. The invitation code e-mail arrived in a few days. I went to the SQL Azure management portal, entered my invitation code, provided an administrator user name and password, created an AppFabric, a new Service Namespace and validated the name, created an account and started my first project.


Clicking on the Firewall Settings tab lets you specify a range of IP addresses that can access your project. And there's a checkbox to allow Microsoft services to access the box.
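A firewall rule of this kind is just a start address and an end address. A quick way to sanity-check whether your own machine falls inside a range before entering it is sketched below; the addresses come from a block reserved for documentation, and the function name is hypothetical.

```python
import ipaddress


def in_range(address, start, end):
    """Return True if `address` lies within the inclusive range start..end."""
    ip = ipaddress.ip_address(address)
    return ipaddress.ip_address(start) <= ip <= ipaddress.ip_address(end)


# Placeholder range using documentation-only addresses.
START, END = "203.0.113.10", "203.0.113.50"

print(in_range("203.0.113.25", START, END))
print(in_range("203.0.113.9", START, END))
```

Parsing the addresses matters: compared as plain strings, "203.0.113.9" would sort after "203.0.113.10" and the check would give the wrong answer.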

After passing a Database Connectivity Test, I was off to SQL Server Management Studio (SSMS) to start playing around in the cloud. You can create a database schema from the portal, but to populate it I used SSMS. It was easy to create a simple database and run queries against it using Transact-SQL, after I learned you had to provide a clustered index.


The only catch to remember is that when starting up SSMS, you need to close out of the initial Connect to Server box that pops up, for some reason. Then you need to click on New Query and enter your SQL Azure server name in the same exact dialog box. The server name is handily provided via the portal, where you can also get your connection strings by selecting the database you want to work with and clicking on "Connection Strings" and choosing ADO.NET or ODBC. Here's a snapshot of a database list and the accompanying functionality provided by the portal:

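If you ever assemble a connection string in code instead of copying it from the portal, it helps to see how one is put together. The sketch below builds an ODBC-style string from its parts. The server, database and login names are placeholders, the driver name is an assumption about what is installed locally, and the string the portal generates for you should be treated as the authoritative one. The builder also refuses values containing semicolons or braces, since a value that smuggles in a semicolon could add or override keys.

```python
def build_odbc_connection_string(server, database, user, password,
                                 driver="SQL Server Native Client 10.0"):
    """Assemble an ODBC-style connection string from validated parts."""
    fields = {
        "Server": f"tcp:{server}",
        "Database": database,
        "Uid": user,
        "Pwd": password,
    }
    for name, value in fields.items():
        # Reject characters that could start a new key=value pair or
        # interfere with ODBC brace quoting.
        if any(ch in value for ch in ";{}"):
            raise ValueError(f"illegal character in {name}")
    body = "".join(f"{name}={value};" for name, value in fields.items())
    return f"Driver={{{driver}}};{body}Encrypt=yes;"


conn = build_odbc_connection_string(
    server="myserver.database.windows.net",   # placeholder server name
    database="School",
    user="admin@myserver",                    # placeholder login
    password="secret",
)
print(conn)
```

Rejecting suspicious values outright is the simplest defense; a fuller implementation might quote them according to the driver's escaping rules instead.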

After creating a simple database and populating it, I moved on to the more complicated project of migrating an existing database to SQL Azure, which presently doesn't handle some common data types and functions.

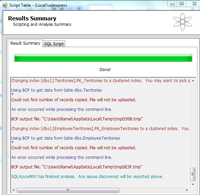

Luckily, Microsoft provides a handy SQL Azure Migration Wizard, which I got to work after a few hiccups. You can see all the different conversion options it offers here:


I also created the sample database ("School.mdf") from this tutorial explaining how to migrate databases via Transact-SQL scripts.

Here's what a failed script looks like:


Here's what a successful script looks like:


From there I tackled the task of using Visual Studio to build some apps to access the SQL Azure databases. I created a console app, a Windows Forms app, and an ASP.NET page with data displayed via GridView and more. I then secured a Dallas account and started displaying public information like news feeds, crime statistics and more from a vast repository of public information. As a rank amateur, I took much longer than I care to admit. I'll give you some of the gory details and maybe a few helpful tips on what not to do later.

In the meantime, if you've dabbled in Dallas and have something cool to share, tell us about it -- and provide a link if possible -- in the comments section below, or drop me a line at [email protected]. I would love to find out what's going on out there in this new playground in the sky.

Posted by David Ramel on 12/16/2009 | 1 comments

Database-related programming has to be right up there on the boring scale. But if you want to improve your coding skills, why not do something interesting while you learn?

Microsoft's Clint Rutkas has the right idea. One of the hits at the recent Professional Developers Conference was his automated bartender robot, a contraption with tubes, valves and a compressed CO2 tank that serves up cocktails.

"It pours drinks," he told Scott Hanselman during a Channel 9 video feed from the conference. "I basically built a machine to pour mixed drinks."

Who says Microsoft isn't cool?

Rutkas and two friends from the Coding4Fun department were on hand to demonstrate the lighter side of application development at Redmond. "We get to literally figure out cool, crazy items that people want us to build, or we want to build, and we basically blog about it," Rutkas said.

Tim Higgins showed off his Wi-Fi Warthog Nerf weapon, and Brian Peek revealed a video game controlled by brainwaves.

I was more interested in the bartender.

Rutkas said he came up with the idea because "I was always getting yelled at for mixing drinks too strong." So he said "I'm building a robot" to serve drinks and "be the McDonald's of the bar world."

The robot bartender is powered by a touch-screen laptop running Windows 7 and SQL Server. "The front end is WPF; it uses LINQ to SQL to poll data," Rutkas said. "Basically the entire app was my excuse to learn WPF and LINQ to SQL, and improve my knowledge of threads."

He said all the parts were readily available off the shelf and no soldering was required. A relay board, which Rutkas described as "a glorified light switch," opens up the correct valves, and then the pressurized system pours the liquid. He said the relay board mounts as a COM port, or serial port, and "I created a wrapper to talk to it."
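Rutkas' wrapper was written for .NET and its details aren't given here, but the general shape of "a wrapper to talk to a relay board over a serial port" is easy to sketch. Everything specific below is an assumption: the two-byte command format is invented, and the port is any object with a `write(bytes)` method (an in-memory buffer stands in for real hardware).

```python
import io
import time


class RelayBoard:
    """Thin wrapper that hides the byte-level protocol behind simple methods."""

    def __init__(self, port):
        # `port` is any object with write(bytes), e.g. an opened serial port.
        self.port = port

    def set_relay(self, index, on):
        # Hypothetical 2-byte command: relay index, then 1 (on) or 0 (off).
        self.port.write(bytes([index, 1 if on else 0]))

    def pour(self, index, seconds, sleep=time.sleep):
        """Open one valve for a fixed time, always closing it afterward."""
        self.set_relay(index, True)
        try:
            sleep(seconds)
        finally:
            self.set_relay(index, False)


# Stand-in for hardware: record the bytes that would be sent.
fake_port = io.BytesIO()
board = RelayBoard(fake_port)
board.pour(3, 1.5, sleep=lambda s: None)  # skip the real delay in this demo
print(list(fake_port.getvalue()))
```

The `try`/`finally` is the important design choice: if anything goes wrong mid-pour, the valve still gets the "off" command, which matters when the thing being controlled is a pressurized drink line.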

"The entire system actually has one moving part," Rutkas said. The relay board is operated by Bluetooth. Rutkas said he had to go wireless because he "blew up a laptop" in his first try and had to enact "a harsh separation of church and state" to keep the liquids away from the electronics.

He said he would be posting all the details of how he built the bartender at the Coding4Fun blog page.

You can see more Coding4Fun videos from PDC on MSDN.

The "Iron Bartender" really got my attention, so I did a quick search and found other fun, database-related tutorials featuring dogs, galaxies, Facebook apps, memory games and more. I'll write about them later. When I do, I would love to include your examples, too.

Tell me about your favorite database-related tutorials that inject some levity into the learning process, or are just more interesting than the average "display Customer data from the Northwind database" variety.

List them in the comments section below or drop me a line at [email protected]. I'm sure you can come up with some fun stuff -- even without alcohol.

Posted by David Ramel on 12/09/2009 | 0 comments

Think the "NoSQL" movement isn't prominent on Microsoft's radar screen?

Think again. Not only is the company tracking it, some people inside Microsoft have actually jumped on the anti-SQL bandwagon. This came to light when Microsoft Technical Fellow Dave Campbell took some pot-shots at the latest threat to the company's bread-and-butter database strategy during the recent Professional Developers Conference.

"The relational database model has stood the test of time," the database guru said in an interview with Charles Torre on Microsoft's Channel 9 video feed from PDC. "And it's interesting in that there's this anti-SQL movement, if you will," he continued. "You know some people follow that. The challenge is that you're throwing the baby out with the bathwater."

Some people? How about your people? Here's an exact transcription of his subsequent remarks:

"I've been doing this database stuff for over 20 years and I remember hearing that the object databases were going to wipe out the SQL databases. And then a little less than 10 years ago the XML databases were going to wipe out.... We actually ... you know... people inside Microsoft, [have said] 'let's stop working on SQL Server, let's go build a native XML store because in five years it's all going....'"

Campbell, and others during the show, pointed to the cloud as Microsoft's answer to the future of database technology.

He said, "I think a lot of people in this anti-SQL movement, they're really looking for the cloud benefits, and the thing that we're trying to achieve with SQL Azure is to give you the cloud benefits, but not throw out the model that has worked for so long and people are so familiar with. So [we're] trying to retain the best of both worlds."

The "NoSQLers" weren't his only target. He also took some shots at a certain unnamed company trying to get with the "database service" program.

"Another company made some noise ... tried to make some noise in front of our noise, a week or two ago, by announcing what they're calling a relational database service," Campbell said. "All they did is they took a relational database server and they stuck it in a VM, okay. So you still have to buy the VM. So if you've got a very small database, and you want to run it for a month, you're paying like 11 cents an hour or so. The smallest database you can get up there, is approaching $100 a month, just to have it, because you need to rent the whole infrastructure to go off and do that."

I don't know what that unnamed company is, but I found this announcement interesting: "Introducing Amazon RDS - The Amazon Relational Database Service." This came in an Oct. 26 blog posting on the Amazon Web Services Blog page.

Campbell went on to compare the pricing structure of that unnamed service with Microsoft's database service: "Our cost of SQL Azure in a 1GB database is about one-tenth of what you could do in the other thing that was recently announced ... from some other company."
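Campbell's numbers are easy to check on the back of an envelope. The sketch below uses only the figures he quoted (11 cents an hour, and "about one-tenth"); the 30-day month is an assumption, and working in whole cents avoids floating-point rounding.

```python
HOURS_PER_DAY = 24


def monthly_cost_cents(cents_per_hour, days=30):
    """Cost of running something around the clock for one month, in cents."""
    return cents_per_hour * HOURS_PER_DAY * days


vm_cents = monthly_cost_cents(11)      # the "11 cents an hour" figure
one_tenth_cents = vm_cents // 10       # the "about one-tenth" claim

print(f"VM-hosted database: ${vm_cents / 100:.2f} per month")
print(f"One-tenth of that:  ${one_tenth_cents / 100:.2f} per month")
```

At 11 cents an hour the always-on VM comes to roughly $80 a month, in the same ballpark as the "approaching $100" Campbell cited, and one-tenth of that is under $10.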

And truth be told, there were some very cool presentations around the cloud and new ways of using data at PDC. I found the Dallas project particularly interesting.

What about you? What do you think of the whole thing? Comment below or drop me a line by e-mail. Let's talk.

Posted by David Ramel on 12/01/2009 | 23 comments

I'm as cynical as the next guy, but this note I just got really made me stop and think about things like the meaning of Thanksgiving, and the fact that there are real people behind that 10.2% unemployment rate statistic.

Here it is, presented raw and unedited -- kinda like life:

dear mr.ramel

forgive my lack of proper punctuation and caps and spelling.i never took typing and dont know how to use word. I was happy to see someone who taght themselves C languages. I have been doing that for about three years. up until recently i had been working 60 and 70 hours aweek at a hotel dining room bussing tables for about 15 years. most of the guests in this melourne florida hotel work for NASA,Grumman,Harris,Rockwell etc. over time i made friends with some of the IT people who work for these places. i decided to try to improve myself and asked some of them what it is they do and what should i learn to aim myself in their direction. one guy said"if you learn c you can go anywhere". so I started with c++.its been like trying to learn how to fly a martian spacecraft. being computer illiterate five years ago didnt help.stumbling around barnes and noble i have managed to pick up quite a few tiles on the subject of programming.At someone elses urging i have dipped my toe into sql but it seems to be quite a frigid subject.Another of my guests got me interested in cisco so i now have several books on telecommunications and internetworking.VB (or studio whatever) and java are a little in the mix.Ruby and php too. I'm far along enough now thatthe rules and sytax of these languages are starting to resemble each other. Now my goal is certification or a degree from whatever accredited institution I can get myself onboard with. I've been unemployed for several months now so I'm trying to take advantage of offerings the state of florida hasas far as scholarships and grants and whathaveya.Nothing definite yet.Not ruling anything out elseware as far as that is concerned. I can tell you one thing though. It's that I have been bitten by the IT bug and I'm gonna persue it and hopefully make it my career. I'll be happy to get involved with your blog or just contribut in some way as an IT neophyte. 
I like to just surf around and glean whatever flavor of IT is sittiing out there for free. It's guilty pleasure to register for some free webinar and type ":student" as my proffession just to get in. I'm just looking forward to getting paid for having so much fun. vty josh

Posted by David Ramel on 11/19/2009 | 8 comments

Speaking of good books, Microsoft blogger Dan Jones last week passed on some info about a brand-new SQL Server book that benefits what looks like a worthy organization, www.WarChild.org, which is described as "a network of independent organisations, working across the world to help children affected by war."

The book, SQL Server MVP Deep Dives, was written by 53 SQL Server MVPs who volunteered their efforts and are donating all royalties to WarChild.org.

Posted by David Ramel on 11/18/2009 | 0 comments

Hi folks. I'm David Ramel, and I'll be posting to Data Driver from here on, taking over for Jeffrey Schwartz, who has moved on to other duties at Redmond magazine and Redmond Channel Partner.

I've been an IT journalist for more than 10 years, and I do love programming. Now, I'm not an accomplished coder by any means. I think that left-brain, right-brain thing gets in the way of any true talent (which is why I'm a writer). But, as a former colleague once wrote, I never get tired of making all the bells and whistles work.

Long ago I taught myself C++. That actually resulted in a real, totally original, working executable, but it almost killed me.

I found Java and Visual J++ (remember that?) more my speed. Lately I've been experimenting with C# and Visual Studio.

Since I was recently named the database guy, I've been fooling around with LINQ and ADO.NET and such in my spare time -- which ain't much.

So it was quite satisfying to figure out Data Connections, DataSets, ConnectionStrings and the like and hook up to the good old Northwind database and run some SQL Server queries to populate a DataGridView.

I'm eager to learn more, even if to get just the slightest inkling of what you readers are dealing with out there.

So hey, readers, drop me a line. That's the key message here.

Suggest some good books or Web sites I could use to further my education. Maybe some good tools or add-ons for VS. Let me know what problems you're having, what topics you'd like to see covered, what complaints you have -- heck, even what music you like to listen to while you code. Anything at all (well, you know, almost anything).

Let's make this space an exchange of information and ideas. Let you be the ones to dictate what we cover and talk about.

See, that way, I won't have to work, and I can finally finish that cool C# BlackJack game I've been working on. JK!

Really, just kidding.

Posted by David Ramel on 11/18/2009 | 4 comments

You may have read about Microsoft's far-reaching Oslo project -- launched in 2007 with great ambitions -- transitioning to a group of technologies to be incorporated into SQL Server called SQL Server Modeling.

Kathleen Richards at Redmond Developer News explained the change last week in her RDN Express blog.

The move generated a strong reaction from developers. Douglas Purdy's blog post announcing the shift in strategy had garnered more than 25 mostly negative comments -- with terms like "disappointment" and "wrong direction" being thrown around -- when Purdy responded.

Here's one choice tidbit from his comment: "The great irony to all these comments is that all we did was change the name from 'Oslo' to SQL Server Modeling and now we get the #fail tag."

The next day Purdy followed up with a posting titled "On DSLs and a few other things...." Two days after that a second follow-up post came out titled "On M." (That's the new programming language that constitutes one of the three main components of SQL Server Modeling, if you didn't know.)

So I'm looking forward to news coming out of the Professional Developers Conference taking place right now in Los Angeles. Especially the Thursday presentation: "Oslo Modeling and DSL."

Should be interesting.

Posted by David Ramel on 11/18/2009 | 1 comment