Mobile Corner

Cortana, How Do I Add You To My Windows Apps?

With the Universal Windows Platform, you can now build apps that truly interact with the new digital assistant for Windows. In this article, Nick Randolph walks through using Cortana to launch and interact with your application.

- By Nick Randolph

- 12/01/2015

In the post-PC era of computing, one of the most challenging frontiers is improving the usability of technology. While there had been earlier players in the touchscreen arena, the iPhone brought with it a realization that touch interfaces were the way of the future. However, despite voice being the foundation of human communication, voice integration has always been a niche area, with most devices offering only basic support for launching applications or taking dictation.

Recently, the three mobile platforms, iOS, Android and Windows, have all been racing to bring improvements to their digital assistants to market. Siri on iOS, "Ok, Google" on Android and Cortana on Windows all offer a simple interface to search device- and cloud-based information. With deep integration into Bing, Office 365 and local data sources, Cortana offers a wide range of features to help you organize and manage your life. Cortana also exposes an interface to developers that permits applications to integrate into the Cortana search experience. In this article I'll walk through adding Cortana support to an application for a fictitious bank called MyBank.

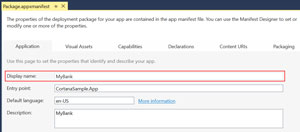

Before I start adding any explicit Cortana integration, it's worth noting that Cortana already knows how to launch any installed application. Press and hold the search button on the phone to direct Cortana to start listening; then simply say "Launch MyBank" and the MyBank application will be launched. The name of the application that Cortana listens for is derived from the Display name, set on the Application tab of the Package.appxmanifest designer, as shown in Figure 1.

Figure 1. The Application Name Is Taken from the Display Name

Cortana also recognizes some variations of this phrase, such as "Open MyBank," which will also launch the application. Interestingly, it appears that just saying the application name, "MyBank," doesn't work.

Previously, the only way you could integrate with Cortana on Windows Phone was to provide a voice command definition (VCD) file, which controlled how Cortana invoked the application. (The same file also drove the voice capability within search that pre-dated Cortana and is still available in markets where Cortana isn't.) In a Universal Windows Platform (UWP) app, it's now possible to go further and interact with Cortana directly, making the experience more personal and functional.

In addition to launching an application into the foreground, Cortana can interact with a background task that processes user input and responds via the Cortana interface. Whilst the Cortana interface is currently quite limited, it is possible to keep the entire interaction within Cortana, without an app needing to be launched.

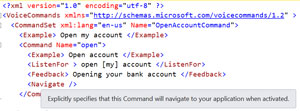

I'm going to start with the example of a VCD file (shown in Listing 1), which will be used to launch the application in order to show my bank account.

Listing 1: VCD File Launching the MyBank App

<?xml version="1.0" encoding="utf-8" ?>
<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.2">
  <CommandSet xml:lang="en-us" Name="OpenAccountCommand">
    <Example> Open my account </Example>
    <Command Name="open">
      <Example> Open account </Example>
      <ListenFor> open [my] account </ListenFor>
      <Feedback> Opening your bank account </Feedback>
      <Navigate />
    </Command>
  </CommandSet>
</VoiceCommands>

I'll come back and discuss the format of this file, and some of the options that are available.

First, I need to include this file in my application and then install the voice commands it defines when the application starts. A VCD file is just an XML file and can be included in the application the same way as any other content file. After adding the file to the application, I select it in Solution Explorer and, from the Properties window, set Build Action to Content and Copy to Output Directory to either Copy Always or Copy If Newer. If you don't set Copy to Output Directory, you may find that during development your application isn't installing the latest version of your VCD. This can be frustrating to diagnose because your changes might work sometimes, whereas other times they won't appear to make any difference.

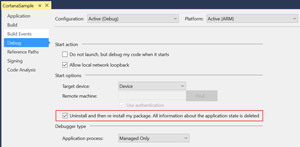

While iterating over your VCD and experimenting with the best voice commands for Cortana, I would suggest checking the "Uninstall and then reinstall …" option on the Debug tab of your application Properties (shown in Figure 2). This will ensure there are no existing voice commands that might interfere with the commands being installed from your VCD.

Figure 2. Debug Properties

Now that I've included the VCD file in the application, the next step is to install the voice commands when the application starts up. For example, the following code can be included at the end of the OnLaunched method in App.xaml.cs (the method needs to be marked async because the code awaits two calls):

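try
{
    // Load the VCD file that was packaged as content and register its commands with Cortana
    var vcd = await Package.Current.InstalledLocation.GetFileAsync(@"VoiceDefinition.xml");
    await VoiceCommandDefinitionManager.InstallCommandDefinitionsFromStorageFileAsync(vcd);
}
catch (Exception ex)
{
    // Include the reason so a malformed VCD is easier to diagnose
    Debug.WriteLine($"Error installing voice commands: {ex.Message}");
}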

Be aware that the process of installing the voice commands (that is, the call to InstallCommandDefinitionsFromStorageFileAsync) can take more than 30 seconds to execute, so make sure your application doesn't block, waiting for the commands to be installed. If your application is reliant on the successful installation of the voice commands (not recommended, but necessary for some applications), it's worth providing some user experience indicating the installation of voice commands is in progress and that the user should wait.

At this point I'll jump back to take a look at the structure of the VCD file, which has a single element, VoiceCommands, that follows the XML declaration. The VoiceCommands element specifies the default namespace, http://schemas.microsoft.com/voicecommands/1.2, which references version 1.2 of the VCD schema (previous versions of Windows Phone used version 1.1 of this schema). This schema, which contains quite useful descriptions of each element, can be found installed with Visual Studio at C:\Program Files (x86)\Microsoft Visual Studio 14.0\xml\Schemas\VoiceCommandDefinition_v12.xsd. Luckily, you don't need to open the schema because Visual Studio will automatically provide the descriptions as IntelliSense when you're typing in the VCD file, having picked up the schema from the default namespace. Figure 3 shows a helpful hint via IntelliSense for the Navigate element.

Figure 3. Voice Command Intellisense

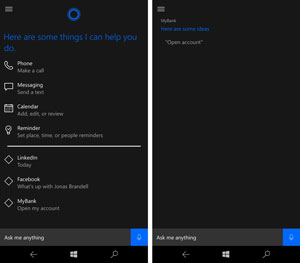

The next element in the VCD is a CommandSet, which is a language-specific set of commands for which Cortana will listen. In this case the VCD only has a single CommandSet that targets U.S. English; I could subsequently define an additional CommandSet for each locale that I want to support. Each CommandSet starts with an example that should give the user a hint as to how to invoke the application using a voice command. A CommandSet then contains a number of Command elements, each of which also requires an Example that should be specific to that Command. The examples, for both CommandSet and Command elements, appear within Cortana if the user says, "Help," or, "What can I say?" as shown in Figure 4. The example under MyBank in the left image is the CommandSet example, whilst the right image shows the examples for each Command within the CommandSet (note that Cortana selects the appropriate CommandSet based on the region information set on the device).

Figure 4. What Can I Say?
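As an illustration of supporting more than one locale, a VCD along the following lines would carry one CommandSet per language. This is only a sketch: the en-gb locale and the OpenAccountCommand_en-gb name are assumptions chosen for the example, and the commands themselves are copied from Listing 1.

```xml
<?xml version="1.0" encoding="utf-8" ?>
<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.2">
  <!-- The U.S. English set from Listing 1 -->
  <CommandSet xml:lang="en-us" Name="OpenAccountCommand">
    <Example> Open my account </Example>
    <Command Name="open">
      <Example> Open account </Example>
      <ListenFor> open [my] account </ListenFor>
      <Feedback> Opening your bank account </Feedback>
      <Navigate />
    </Command>
  </CommandSet>
  <!-- Hypothetical second set for U.K. English; the Name value is illustrative -->
  <CommandSet xml:lang="en-gb" Name="OpenAccountCommand_en-gb">
    <Example> Open my account </Example>
    <Command Name="open">
      <Example> Open account </Example>
      <ListenFor> open [my] account </ListenFor>
      <Feedback> Opening your bank account </Feedback>
      <Navigate />
    </Command>
  </CommandSet>
</VoiceCommands>
```

Each CommandSet needs its own xml:lang value, and giving each a distinct Name makes it possible to tell the sets apart from code later on.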

In this case the open command can be invoked by the user saying, "MyBank, Open Account." The Command element contains one or more ListenFor elements that determine which phrases Cortana will listen for. In this case, the ListenFor element has square brackets around the word My, which means it will match both Open My Account and Open Account; in other words, My is optional.

By default, Cortana requires that the application name, MyBank, is said as a prefix to the command. In some cases, this doesn't make sense, or the phrase would make more sense with the application name placed at the end of, or midway through, the sentence. This can be controlled by specifying the RequireAppName attribute in the ListenFor element. For example, the following would allow the application name to be said before or after the phrase:

<ListenFor RequireAppName="BeforeOrAfterPhrase">open [my] account</ListenFor>

If the application name needs to appear within the phrase, the key phrase {builtin:AppName} can be used to identify where it should appear, such as in the following phrase:

<ListenFor RequireAppName="ExplicitlySpecified">open my {builtin:AppName} account</ListenFor>

Sometimes the application name defined in the package.appxmanifest isn't easy for Cortana to match against, for example if it's similar to a voice command of another application. In this case the CommandSet can also contain either a CommandPrefix element or an AppName element (AppName supersedes CommandPrefix), which will override the application name.
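As a rough sketch of that override, the CommandSet from Listing 1 could declare an AppName element ahead of its Example. The spoken name "My Bank" is a hypothetical value chosen for illustration; everything else is unchanged from Listing 1.

```xml
<CommandSet xml:lang="en-us" Name="OpenAccountCommand">
  <!-- Hypothetical spoken name that Cortana listens for instead of the Display name -->
  <AppName> My Bank </AppName>
  <Example> Open my account </Example>
  <Command Name="open">
    <Example> Open account </Example>
    <ListenFor> open [my] account </ListenFor>
    <Feedback> Opening your bank account </Feedback>
    <Navigate />
  </Command>
</CommandSet>
```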

Once Cortana has determined a match with a Command, the Feedback element will be used to confirm the match to the user. Finally, the Navigate element indicates that the application should be launched in the foreground. Adding a Target attribute to the Navigate element will provide a way for the application to determine which Command was matched:

<Navigate Target="openbankaccount" />

When Cortana matches a Command and then launches the application, the OnActivated method of the Application instance will be invoked. The following code prints out the properties passed in from Cortana, which in this case includes a CommandMode, with value Voice, and NavigationTarget, with value openbankaccount:

protected override void OnActivated(IActivatedEventArgs args)
{
    base.OnActivated(args);

    // OnActivated is called for other activation kinds too, so check the cast
    var voiceArgs = args as VoiceCommandActivatedEventArgs;
    if (voiceArgs == null)
    {
        return;
    }

    var props = voiceArgs.Result.SemanticInterpretation.Properties;
    foreach (var kvp in props)
    {
        Debug.WriteLine($"{kvp.Key} - {string.Join(",", kvp.Value)}");
    }
}

It's important to remember that the OnActivated method, not the OnLaunched method, will be invoked, even if the application isn't already running. Often developers forget this and don't initialize the frame, navigate to the appropriate page and activate the window.
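A minimal sketch of those three steps might look like the following. It assumes the default project template's App class and a page named MainPage, neither of which is shown in this article, and it passes the matched command name to the page as a navigation parameter purely for illustration.

```csharp
using Windows.ApplicationModel.Activation;
using Windows.UI.Xaml;
using Windows.UI.Xaml.Controls;

sealed partial class App : Application
{
    protected override void OnActivated(IActivatedEventArgs args)
    {
        base.OnActivated(args);

        // Step 1: create the root frame if the app wasn't already running
        var rootFrame = Window.Current.Content as Frame;
        if (rootFrame == null)
        {
            rootFrame = new Frame();
            Window.Current.Content = rootFrame;
        }

        // Step 2: navigate to the appropriate page
        if (args.Kind == ActivationKind.VoiceCommand)
        {
            var voiceArgs = (VoiceCommandActivatedEventArgs)args;
            // The first entry in RulePath is the name of the matched Command, e.g. "open"
            var commandName = voiceArgs.Result.RulePath[0];
            // MainPage is an assumed page type from the default template
            rootFrame.Navigate(typeof(MainPage), commandName);
        }
        else if (rootFrame.Content == null)
        {
            rootFrame.Navigate(typeof(MainPage));
        }

        // Step 3: make the window visible
        Window.Current.Activate();
    }
}
```

Sharing the frame-creation logic between OnLaunched and OnActivated is a common way to keep the two entry points from drifting apart.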

The ListenFor element can also contain one or more of the following:

- { * } : This represents a wildcard for words that the user might say, but aren't relevant to the command match.

- {phraseList}: This is a label for a PhraseList that defines a list of words that Cortana should match against. This list of words can be updated dynamically by the application without having to reload the entire set of voice commands.

- {phraseTopic}: This is a label for a PhraseTopic that defines how Cortana should match that part of the phrase. This is useful for allowing free text entry via voice. For example, if the user needs to supply a phone number or an address, a PhraseTopic can be inserted into the ListenFor phrase.

Adding these items into the VCD file produces the code in Listing 2.

Listing 2: Adding Labels to the ListenFor Element

<?xml version="1.0" encoding="utf-8" ?>
<VoiceCommands xmlns="http://schemas.microsoft.com/voicecommands/1.2">
  <CommandSet xml:lang="en-us" Name="OpenAccountCommand">
    <Example> Open my account </Example>
    <Command Name="open">
      <Example> Open account </Example>
      <ListenFor RequireAppName="BeforeOrAfterPhrase"> open [my] {accounttype} account </ListenFor>
      <ListenFor RequireAppName="ExplicitlySpecified"> open my {builtin:AppName} {anyaccounttype} account </ListenFor>
      <Feedback> Opening your bank account </Feedback>
      <Navigate Target="openbankaccount" />
    </Command>
    <PhraseList Label="accounttype">
      <Item>Savings</Item>
      <Item>Credit</Item>
      <Item>Cheque</Item>
    </PhraseList>
    <PhraseTopic Label="anyaccounttype" Scenario="Natural Language" />
  </CommandSet>
</VoiceCommands>
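The dynamic update mentioned for PhraseList could be sketched as follows. The VoiceCommandPhrases class and UpdateAccountTypesAsync method are hypothetical names introduced for this example; the lookup key is the Name of the CommandSet and the label is the PhraseList Label, both taken from Listing 2. The sketch assumes the VCD has already been installed.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Windows.ApplicationModel.VoiceCommands;

// Hypothetical helper, not part of the MyBank sample
public static class VoiceCommandPhrases
{
    public static async Task UpdateAccountTypesAsync(IEnumerable<string> accountTypes)
    {
        VoiceCommandDefinition commandSet;

        // Installed command sets are keyed by the Name attribute of the CommandSet
        if (VoiceCommandDefinitionManager.InstalledCommandDefinitions
                .TryGetValue("OpenAccountCommand", out commandSet))
        {
            // Replace the items of the PhraseList labeled "accounttype"
            await commandSet.SetPhraseListAsync("accounttype", accountTypes);
        }
    }
}
```

A call such as `await VoiceCommandPhrases.UpdateAccountTypesAsync(new[] { "Savings", "Credit", "Cheque", "Loan" });` would then let Cortana match the extra account type without the VCD file being reinstalled.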

As an alternative to launching an application, Cortana can invoke a background task. The background task can provide feedback to the user via the Cortana interface. I'll create a background task by first adding a new project, CortanaService, based on the Windows Runtime Component project template, and adding a reference to the new project into the application. In the new project, I'll add a new class, MyBankVoiceCommandService, that implements the IBackgroundTask interface. This interface defines a Run method that will be called when the background task is invoked.

There are two additional steps to wiring up the background task. First, an Extensions element needs to be defined in the package.appxmanifest file. I need to add the following Extensions element to the Application element, either by editing the XML directly or via the Declarations pane on the designer:

<Extensions>
  <uap:Extension Category="windows.appService"
                 EntryPoint="CortanaService.MyBankVoiceCommandService">
    <uap:AppService Name="MyBankVoiceCommandService"/>
  </uap:Extension>
  <uap:Extension Category="windows.personalAssistantLaunch"/>
</Extensions>

The first Extension defines the app services entry point, which will be invoked by the voice command matched by Cortana. The second Extension wires up the ability for the background task to launch the application in the foreground. Note that there is an issue with the RTM release of Visual Studio 2015 where the personalAssistantLaunch extension doesn't appear in the Declarations tab of the package.appxmanifest designer, and making any changes in this designer (or creating a package with auto-increment version set to true) will result in this extension being removed. If you're experiencing issues with Cortana where your application doesn't launch or seems to crash on startup, check to make sure this extension is in the package.appxmanifest file.

The second step to wire up the background task is to replace the Navigate element of the Command in the VCD file with a VoiceCommandService element:

<VoiceCommandService Target="MyBankVoiceCommandService"/>

The Target attribute of the VoiceCommandService element needs to match the Name attribute of the AppService element of the Extension added to the package.appxmanifest file.

Listing 3 provides an implementation of a background task that presents a number of account options from which the user can select. After being launched, the background task retrieves the trigger details and determines the CommandName that was matched by Cortana. For the open command, the ShowAccountOptions method first displays a progress message while loading the list of accounts (in this case simulated by a two-second delay), then passes the accounts back to Cortana for the user to select from.

Listing 3: MyBankVoiceCommandService Class

using System;
using System.Collections.Generic;
using System.Diagnostics;
using System.Threading.Tasks;
using Windows.ApplicationModel.AppService;
using Windows.ApplicationModel.Background;
using Windows.ApplicationModel.VoiceCommands;

public sealed class MyBankVoiceCommandService : IBackgroundTask
{
    VoiceCommandServiceConnection voiceServiceConnection;
    BackgroundTaskDeferral serviceDeferral;

    public async void Run(IBackgroundTaskInstance taskInstance)
    {
        // Keep the task alive until the voice command completes or is canceled
        serviceDeferral = taskInstance.GetDeferral();
        taskInstance.Canceled += OnTaskCanceled;

        var triggerDetails = taskInstance.TriggerDetails as AppServiceTriggerDetails;
        try
        {
            voiceServiceConnection =
                VoiceCommandServiceConnection.FromAppServiceTriggerDetails(triggerDetails);
            voiceServiceConnection.VoiceCommandCompleted += OnVoiceCommandCompleted;

            var voiceCommand = await voiceServiceConnection.GetVoiceCommandAsync();

            // Find the command name which should match the VCD
            if (voiceCommand.CommandName == "open")
            {
                await ShowAccountOptions();
            }
            else
            {
                // If not a command match, launch the app
                await LaunchAppInForeground();
            }
        }
        catch
        {
            Debug.WriteLine("Unable to process voice command");
        }
    }

    private async Task ShowAccountOptions()
    {
        await ShowProgressScreen();
        await Task.Delay(2000); // Simulate looking up accounts

        var accounts = new[] { "Savings", "Chequing", "Offset", "Loan" };
        var userMessage = new VoiceCommandUserMessage();
        var destinationsContentTiles = new List<VoiceCommandContentTile>();

        userMessage.DisplayMessage = $"You have {accounts.Length} accounts";
        // A regular interpolated string can't span lines, so keep the literal on one line
        userMessage.SpokenMessage =
            $"You have {accounts.Length} accounts, {string.Join(",", accounts)}";

        // One tile per account; AppLaunchArgument becomes the LaunchContext on selection
        foreach (var acc in accounts)
        {
            var destinationTile = new VoiceCommandContentTile
            {
                ContentTileType = VoiceCommandContentTileType.TitleOnly,
                AppLaunchArgument = acc,
                Title = $"{acc} Account"
            };
            destinationsContentTiles.Add(destinationTile);
        }

        var response = VoiceCommandResponse.CreateResponse(userMessage,
            destinationsContentTiles);
        await voiceServiceConnection.ReportSuccessAsync(response);
    }

    private async Task ShowProgressScreen()
    {
        var userProgressMessage = new VoiceCommandUserMessage();
        userProgressMessage.DisplayMessage = userProgressMessage.SpokenMessage =
            "Loading accounts....";

        var response = VoiceCommandResponse.CreateResponse(userProgressMessage);
        await voiceServiceConnection.ReportProgressAsync(response);
    }

    // Returns Task rather than void so that Run can await it and catch any failure
    private async Task LaunchAppInForeground()
    {
        var userMessage = new VoiceCommandUserMessage { SpokenMessage = "Launching My Bank" };
        var response = VoiceCommandResponse.CreateResponse(userMessage);
        response.AppLaunchArgument = "";
        await voiceServiceConnection.RequestAppLaunchAsync(response);
    }

    private void OnVoiceCommandCompleted(
        VoiceCommandServiceConnection sender, VoiceCommandCompletedEventArgs args)
    {
        if (this.serviceDeferral != null)
        {
            this.serviceDeferral.Complete();
        }
    }

    private void OnTaskCanceled(IBackgroundTaskInstance sender,
        BackgroundTaskCancellationReason reason)
    {
        Debug.WriteLine("Task cancelled, clean up");
        if (this.serviceDeferral != null)
        {
            // Complete the service deferral
            this.serviceDeferral.Complete();
        }
    }
}

When the user selects an account to open, the application is launched in the same way as if the application had been launched from a custom protocol. In the OnActivated method, the IActivatedEventArgs can be cast to a ProtocolActivatedEventArgs and the Uri property can be examined to retrieve the LaunchContext. For example, selecting the Loan account will launch the application with the following Uri:

windows.personalAssistantLaunch:?LaunchContext=Loan
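One way to pull the LaunchContext value out of that Uri is sketched below. The LaunchContextParser class is a hypothetical helper written for this example; it relies only on System.Uri and a simple split of the query string, so it is an illustration rather than the only way to read the value.

```csharp
using System;

// Hypothetical helper, not part of the MyBank sample
public static class LaunchContextParser
{
    // Returns the LaunchContext value from the launch Uri, or null if it isn't present
    public static string GetLaunchContext(Uri uri)
    {
        // Query includes the leading '?', so strip it before splitting into pairs
        var query = uri.Query.TrimStart('?');
        foreach (var pair in query.Split(new[] { '&' }, StringSplitOptions.RemoveEmptyEntries))
        {
            var parts = pair.Split(new[] { '=' }, 2);
            if (parts.Length == 2 && parts[0] == "LaunchContext")
            {
                // Undo percent-encoding, e.g. for values that contain spaces
                return Uri.UnescapeDataString(parts[1]);
            }
        }
        return null;
    }
}
```

In OnActivated, the Uri property of the ProtocolActivatedEventArgs would be passed to GetLaunchContext and the result used to choose which account page to show. Within a UWP app, the Windows.Foundation.WwwFormUrlDecoder class and its GetFirstValueByName method offer a built-in alternative to the manual split.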

In this example I've shown how a series of accounts can be returned to the user after they request that the MyBank app open their account. The Cortana experience can be customized to limit the need for users to continually open and close applications, thereby delivering a faster and more efficient interface for the user. In conjunction with other UWP features, such as live tiles and interactive notifications, Cortana allows you to drive richer engagement with your apps.