The Data Science Lab

Neural Network Back-Propagation Using Python

You don't have to resort to writing C++ to work with popular machine learning libraries such as Microsoft's CNTK and Google's TensorFlow. Instead, we'll use some Python and NumPy to tackle the task of training neural networks.

Over the past year or so, among my colleagues, the use of sophisticated machine learning (ML) libraries, such as Microsoft's CNTK and Google's TensorFlow, has increased greatly. Most of the popular ML libraries are written in C++ for performance reasons, but have a Python API interface. This means that if you want to work with ML, it's becoming increasingly important to have a familiarity with the Python language and with basic neural network concepts.

In this article, I'll explain how to implement the back-propagation (sometimes spelled as one word without the hyphen) neural network training algorithm from scratch, using just Python 3.x and the NumPy (numerical Python) package. After reading this article you should have a solid grasp of back-propagation, as well as knowledge of Python and NumPy techniques that will be useful when working with libraries such as CNTK and TensorFlow.

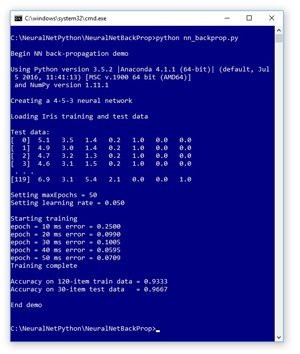

A good way to see where this article is headed is to take a look at the screenshot of a demo program in Figure 1. The demo Python program uses back-propagation to create a simple neural network model that can predict the species of an iris flower using the famous Iris Dataset. The demo begins by displaying the versions of Python (3.5.2) and NumPy (1.11.1) used. Although it is possible to install Python and NumPy separately, it’s becoming increasingly common to use an Anaconda distribution (4.1.1) as I did.

[Click on image for larger view.]

Figure 1. Python Neural Network Back-Propagation Demo

[Click on image for larger view.]

Figure 1. Python Neural Network Back-Propagation Demo

The Iris Dataset has 150 items. Each item has four numeric predictor variables (often called features): sepal length and width, and petal length and width, followed by the species ("setosa," "versicolor" or "virginica"). The demo program uses 1-of-N label encoding, so setosa = (1,0,0) and versicolor = (0,1,0) and virginica = (0,0,1). The goal is to predict species from sepal and petal length and width.

The 150-item dataset has 50 setosa items, followed by 50 versicolor, followed by 50 virginica. Before writing the demo program, I created a 120-item file of training data (using the first 30 of each species) and a 30-item file of test data (the leftover 10 of each species).

The demo program creates a simple neural network with four input nodes (one for each feature), five hidden processing nodes (the number of hidden nodes is a free parameter and must be determined by trial and error), and three output nodes (corresponding to encoded species). The demo loaded the training and test data into two matrices.

The back-propagation algorithm is iterative and you must supply a maximum number of iterations (50 in the demo) and a learning rate (0.050) that controls how much each weight and bias value changes in each iteration. Small learning rate values lead to slow but steady training. Large learning rates lead to quicker training at the risk of overshooting good weight and bias values. The max-iteration and leaning rate are free parameters.

The demo displays the value of the mean squared error, every 10 iterations during training. As you'll see shortly, there are two error types that are commonly used with back-propagation, and the choice of error type affects the back-propagation implementation. After training completed, the demo computed the classification accuracy of the resulting model on the training data (0.9333 = 112 out of 120 correct) and on the test data (0.9667 = 29 out of 30 correct). The classification accuracy on a set of test data is a very rough approximation of the accuracy you'd expect to see on new, previously unseen data.

This article assumes you have a solid knowledge of the neural network input-output mechanism, and intermediate or better programming skill with a C-family language (C#, Python, Java), but doesn’t assume you know much about the back-propagation algorithm. The demo program is too long to present in its entirety in this article, but the complete source code is available in the accompanying file download.

Understanding Back-Propagation

Back-propagation is arguably the single most important algorithm in machine learning. A complete understanding of back-propagation takes a lot of effort. But from a developer's perspective, there are only a few key concepts that are needed to implement back-propagation. In the discussion that follows, for simplicity I leave out many important details, and take many liberties with the underlying mathematics.

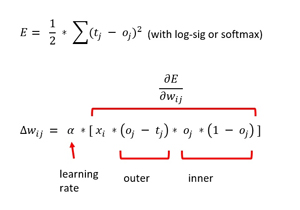

Take a look at the two math equations for back-propagation in Figure 2. The top equation defines a sum of squares error metric and is the starting point for back-propagation. The tj stands for a target value and the oj stands for a computed output value. Suppose a target value is (1, 0, 0) corresponding to setosa. And suppose that for a given set of weight and bias values, and a set of four input values, the computed output values are (0.70, 0.10, 0.20). The squared error is 1/2 * [ (1 - 0.70)^2 + (0 - 0.10)^2 + (0 - 0.20)^2 ] = 1/2 * (0.09 + 0.01 + 0.04) = 0.07. Notice the seemingly arbitrary 1/2 term.

[Click on image for larger view.]

Figure 2. Back-Propagation Update for Hidden-to-Output Weights

[Click on image for larger view.]

Figure 2. Back-Propagation Update for Hidden-to-Output Weights

The goal of back-propagation training is to minimize the squared error. To do that, the gradient of the error function must be calculated. The gradient is a calculus derivative with a value like +1.23 or -0.33. The sign of the gradient tells you whether to increase or decrease the weights and biases in order to reduce error. The magnitude of the gradient is used, along with the learning rate, to determine how much to increase or decrease the weights and biases.

Using some very clever mathematics, you can compute the gradient. The bottom equation in Figure 2 is the weight update rule for a single output node. The amount to change a particular weight is the learning rate (alpha) times the gradient. The gradient has four terms. The xi is the input associated with the weight that’s being examined. The (oj - tj) is the derivative of the outside part of the error function: the 2 exponent drops to the front, canceling the 1/2 (which is the only reason the 1/2 term is there), then you multiply by the derivative of the inside, which is -1 times the derivative of the function used to compute the output node.

The third and fourth terms of the gradient come from the activation function used for the output nodes. For classification, this is the softmax function. As it turns out, the derivative of an output node oj is, somewhat surprisingly, oj * (1 - oj). To summarize, the back-propagation weight update rule depends on the derivative of the error function and the derivative of the activation function.

There are some important additional details. The squared error term can be defined using (target -output)^2 instead of (output - target)^2 and give the same error because of the squaring operation. But reversing the order will change the sign of the resulting (target - output) term in the gradient. This in turn affects whether you should add the delta-w term or subtract it when you update weights and biases.

OK, so updating the weights and biases for hidden-to-output weights isn't too difficult. But what about the weight update rule for input-to-hidden weights? That equation is more complicated and in my opinion is best understood using code rather than a math equation, as I'll present shortly. The Wikipedia article on back-propagation has a very good derivation of the weight update rule for both output and hidden nodes.

Overall Demo Program Structure

The overall demo program structure is presented in Listing 1. To edit the demo program, I used the simple Notepad++ program. Most of my colleagues prefer using one of the many nice Python editors that are available.

I commented the name of the program and indicated the Python version used. I added four import statements to gain access to the NumPy package's array and matrix data structures, and the math and random modules. The sys module is used only to programmatically display the Python version, and can be omitted in most scenarios.

Listing 1: Overall Program Structure

# nn_backprop.py

# Python 3.x

import numpy as np

import random

import math

import sys

# ------------------------------------

def loadFile(df): . . .

def showVector(v, dec): . . .

def showMatrix(m, dec): . . .

def showMatrixPartial(m, numRows, dec, indices): . . .

# ------------------------------------

class NeuralNetwork: . . .

# ------------------------------------

def main():

print("\nBegin NN back-propagation demo \n")

pv = sys.version

npv = np.version.version

print("Using Python version " + str(pv) +

"\n and NumPy version " + str(npv))

numInput = 4

numHidden = 5

numOutput = 3

print("\nCreating a %d-%d-%d neural network " %

(numInput, numHidden, numOutput) )

nn = NeuralNetwork(numInput, numHidden, numOutput, seed=3)

print("\nLoading Iris training and test data ")

trainDataPath = "irisTrainData.txt"

trainDataMatrix = loadFile(trainDataPath)

print("\nTest data: ")

showMatrixPartial(trainDataMatrix, 4, 1, True)

testDataPath = "irisTestData.txt"

testDataMatrix = loadFile(testDataPath)

maxEpochs = 50

learnRate = 0.05

print("\nSetting maxEpochs = " + str(maxEpochs))

print("Setting learning rate = %0.3f " % learnRate)

print("\nStarting training")

nn.train(trainDataMatrix, maxEpochs, learnRate)

print("Training complete")

accTrain = nn.accuracy(trainDataMatrix)

accTest = nn.accuracy(testDataMatrix)

print("\nAccuracy on 120-item train data = %0.4f " % accTrain)

print("Accuracy on 30-item test data = %0.4f " % accTest)

print("\nEnd demo \n")

if __name__ == "__main__":

main()

# end script

----------------------------------------------------------------------------------

The demo program consists mostly of a program-defined NeuralNetwork class. I created a main function to hold all program control logic. I started by displaying some version information:

def main():

print("\nBegin NN back-propagation demo \n")

pv = sys.version

npv = np.version.version

print("Using Python version " + str(pv) +

"\n and NumPy version " + str(npv))

...

Next, I created the demo neural network, like so:

numInput = 4

numHidden = 5

numOutput = 3

print("\nCreating a %d-%d-%d neural network " %

(numInput, numHidden, numOutput) )

nn = NeuralNetwork(numInput, numHidden, numOutput, seed=3)

The NeuralNetwork constructor accepts a seed value to initialize a class-scope random number generator object. The RNG object is used to initialize all weights and bias values to small random numbers between -0.01 and +0.01 using class method initializeWeights. The RNG object is also used during training to scramble the order in which training items are processed. The seed value of 3 is arbitrary.

The constructor assumes that the tanh function is used for hidden node activation. As you'll see shortly, if you use a different activation function such as logistic sigmoid or rectified linear unit (ReLU), the back-propagation code for updating the hidden node weights and bias values will be affected.

The demo loads training and test data using these statements:

print("\nLoading Iris training and test data ")

trainDataPath = "irisTrainData.txt"

trainDataMatrix = loadFile(trainDataPath)

print("\nTest data: ")

showMatrixPartial(trainDataMatrix, 4, 1, True)

testDataPath = "irisTestData.txt"

testDataMatrix = loadFile(testDataPath)

Helper function loadFile does all the work. The function is hardcoded to assume that the source data is comma-delimited, is ordered with features followed by encoded species, and does not have a header line. Writing code from scratch allows you to be very concise, as opposed to writing general-purpose library code, which requires you to take into account all kinds of scenarios and add huge amounts of error-checking code.

The back-propagation training is invoked like so:

maxEpochs = 50

learnRate = 0.05

print("\nSetting maxEpochs = " + str(maxEpochs))

print("Setting learning rate = %0.3f " % learnRate)

print("\nStarting training")

nn.train(trainDataMatrix, maxEpochs, learnRate)

print("Training complete")

Behind the scenes, method train uses the back-propagation algorithm and displays a progress message with the current mean squared error, every 10 iterations. It's important to monitor progress during neural network training because it's not uncommon for training to stall out completely, and if that happens you don't want to wait for an entire training run to complete.

In non-demo scenarios, the maximum number of training iterations/epochs can be in the thousands, so printing errors every 10 iterations might be too often. You might want to consider passing a parameter to the train method that controls when to print progress messages.

The demo program concludes with these statements:

...

accTrain = nn.accuracy(trainDataMatrix)

accTest = nn.accuracy(testDataMatrix)

print("\nAccuracy on 120-item train data = %0.4f " % accTrain)

print("Accuracy on 30-item test data = %0.4f " % accTest)

print("\nEnd demo \n")

if __name__ == "__main__":

main()

# end script

Notice that during training you’re primarily interested in error, but after training you’re primarily interested in classification accuracy.

The Neural Network Class

The structure of the Python neural network class is presented in Listing 2. Python function and method definitions begin with the def keyword. All class methods and data members have essentially public scope as opposed to languages like Java and C#, which can impose private scope. The built-in __init__ method (with two leading and two trailing underscore characters) can be loosely thought of as a constructor. All class method definitions must include the "self" keyword as the first parameter, except for methods that are decorated with the @staticmethod attribute.

Listing 2: NeuralNetwork Class Structure

class NeuralNetwork:

def __init__(self, numInput, numHidden, numOutput, seed): ...

def setWeights(self, weights): ...

def getWeights(self): ...

def initializeWeights(self): ...

def computeOutputs(self, xValues): ...

def train(self, trainData, maxEpochs, learnRate): ...

def accuracy(self, tdata): ...

def meanSquaredError(self, tdata): ...

@staticmethod

def hypertan(x): ...

@staticmethod

def softmax(oSums): ...

@staticmethod

def totalWeights(nInput, nHidden, nOutput): ...

# end class NeuralNetwork

----------------------------------------------------------------------------------

The NeuralNetwork.train method implements the back-propagation algorithm. The definition begins:

def train(self, trainData, maxEpochs, learnRate):

hoGrads = np.zeros(shape=[self.nh, self.no], dtype=np.float32)

obGrads = np.zeros(shape=[self.no], dtype=np.float32)

ihGrads = np.zeros(shape=[self.ni, self.nh], dtype=np.float32)

hbGrads = np.zeros(shape=[self.nh], dtype=np.float32)

...

Each weight and bias has an associated gradient. The prefix "ho" stands for "hidden-to-output." Similarly, "ob" means "output bias," "ih" means "input-to-hidden" and "hb" means "hidden bias." Class members ni, nh, and no are the number of input, hidden, and output nodes, respectively. When working with neural networks, it's common, but not required, to work with the float32 rather than float64 data type.

Next, two scratch arrays are instantiated:

oSignals = np.zeros(shape=[self.no], dtype=np.float32)

hSignals = np.zeros(shape=[self.nh], dtype=np.float32)

Each hidden and output node has an associated signal that’s essentially a gradient without its input term. These arrays are mostly for coding convenience.

The main training loop is prepared like so:

epoch = 0

x_values = np.zeros(shape=[self.ni], dtype=np.float32)

t_values = np.zeros(shape=[self.no], dtype=np.float32)

numTrainItems = len(trainData)

indices = np.arange(numTrainItems)

The x_values and t_values arrays hold a set of feature values (sepal length and width, and petal length and width) and target values (such as 1, 0, 0), respectively. The indices array holds integers 0 through 119 and is used to shuffle the order in which training items are processed. The training loop begins with:

while epoch < maxEpochs:

self.rnd.shuffle(indices)

for ii in range(numTrainItems):

idx = indices[ii]

...

The built-in shuffle function uses the Fisher-Yates mini-algorithm to scramble the order of the training indices. Therefore, variable idx points to the current training item being processed. Inside the main loop, the input and target values are peeled off the current training item, and then the output node values are computed using the input values and the current weights and bias values:

for j in range(self.ni):

x_values[j] = trainData[idx, j]

for j in range(self.no):

t_values[j] = trainData[idx, j+self.ni]

self.computeOutputs(x_values)

Note that instead of copying the input values from the matrix of training items into an intermediate x_values array and then transferring those values to the input nodes, you could copy the input values directly. The computeOutputs method stores and returns the output values, but the explicit rerun is ignored here.

The first step in back-propagation is to compute the output node signals:

# 1. compute output node signals

for k in range(self.no):

derivative = (1 - self.oNodes[k]) * self.oNodes[k]

oSignals[k] = derivative * (self.oNodes[k] - t_values[k])

Recall that the derivative variable holds the derivative of the softmax activation function. The oSignals variable includes that derivative and the output minus target value. Next, the hidden-to-output weight gradients are computed:

# 2. compute hidden-to-output weight gradients using output signals

for j in range(self.nh):

for k in range(self.no):

hoGrads[j, k] = oSignals[k] * self.hNodes[j]

The output node signal is combined with the associated input from the associated hidden node to give the gradient. As I mentioned earlier, the oSignals array is mostly for convenience and you can compute the values into the hoGrads matrix directly if you wish. Next, the gradients for the output node biases are computed:

# 3. compute output node bias gradients using output signals

for k in range(self.no):

obGrads[k] = oSignals[k] * 1.0

You can think of a bias as a special kind of weight that has a constant, dummy 1.0 associated input. Here, I use an explicit 1.0 value only to emphasize that idea, so you can omit it if you wish. Next, the hidden node signals are computed:

# 4. compute hidden node signals

for j in range(self.nh):

sum = 0.0

for k in range(self.no):

sum += oSignals[k] * self.hoWeights[j,k]

derivative = (1 - self.hNodes[j]) * (1 + self.hNodes[j])

hSignals[j] = derivative * sum

This is the trickiest part of back-propagation. The sum variable accumulates the product of output node signals and hidden-to-output weights. This isn't at all obvious. You can find a good explanation of how this works by reading the Wikipedia article on back-propagation. Recall that the NeuralNetwork class has a hardcoded tanh hidden node activation function. The derivative variable holds the calculus derivative of the tanh function. So, if you change the hidden node activation function to logistic sigmoid or ReLU, you'd have to change the calculation of this derivative variable.

Next, the input-to-hidden weight gradients, and the hidden node bias gradients are calculated:

# 5. compute input-to-hidden weight gradients using hidden signals

for i in range(self.ni):

for j in range(self.nh):

ihGrads[i, j] = hSignals[j] * self.iNodes[i]

# 6. compute hidden node bias gradients using hidden signals

for j in range(self.nh):

hbGrads[j] = hSignals[j] * 1.0

As before, a gradient is composed of a signal and an associated input term, and the dummy 1.0 input value for the hidden biases can be dropped.

If you imagine the input-to-output mechanism as going from left to right (input to output to hidden), the gradients must be computed from right to left (hidden-to-output gradients, then input-to-hidden gradients). After all the gradients have been computed, you can update the weights in either order. The demo program starts by updating the input-to-hidden weights:

# 1. update input-to-hidden weights

for i in range(self.ni):

for j in range(self.nh):

delta = -1.0 * learnRate * ihGrads[i,j]

self.ihWeights[i, j] += delta

The weight delta is the learning rate times the gradient. Here, I multiply by -1 and then add the delta because error is assumed to use (target - output)^2 and so the gradient has an (output - target) term. I used this somewhat awkward approach to follow the Wikipedia entry on back-propagation. Of course you can drop the multiply by -1 and just subtract the delta if you wish.

Next, the hidden node biases are updated:

# 2. update hidden node biases

for j in range(self.nh):

delta = -1.0 * learnRate * hbGrads[j]

self.hBiases[j] += delta

If you look at the loop structure s carefully, you'll notice that you can combine updating the input-to-hidden weights and updating the hidden biases if you wish. Next, the hidden-to-output weights and the output node biases are updated using these statements:

# 3. update hidden-to-output weights

for j in range(self.nh):

for k in range(self.no):

delta = -1.0 * learnRate * hoGrads[j,k]

self.hoWeights[j, k] += delta

# 4. update output node biases

for k in range(self.no):

delta = -1.0 * learnRate * obGrads[k]

self.oBiases[k] += delta

Notice that all updates use the same learning rate. An advanced version of back-propagation called Adam ("adaptive moment estimation") was developed in 2015. Adam uses different learning rates and a few other tricks, and is considered state-of-the-art.

The main training loop finishes by updating the iteration counter and printing a progress message, and then method NeuralNetwork.train wraps up, like so:

...

epoch += 1

if epoch % 10 == 0:

mse = self.meanSquaredError(trainData)

print("epoch = " + str(epoch) + " ms error = %0.4f " % mse)

# end while

result = self.getWeights()

return result

# end train

Here, a progress message will be displayed every 10 iterations. You might want to parameterize the interval. You can also print additional diagnostic information here. The final values of the weights and biases are fetched by class method getWeights and returned by method train as a convenience.

Wrapping Up

The Python language is too slow to create serious neural networks from scratch. But implementing a neural network in Python gives you a complete understanding of what goes on behind the scenes when you use a sophisticated machine learning library like CNTK or TensorFlow. And the ability to implement a neural network from scratch gives you the ability to experiment with custom algorithms.

The version of back-propagation presented in this article is basic. In future articles I'll show you how to implement momentum and mini-batch training -- two important techniques that increase training speed. Another important variation is to use a different measure of error called cross entropy error.