Microsoft's AI-Driven Dev Tool Turns Whiteboard Sketches into Code

Microsoft introduced a Web tool driven by AI technologies and Azure cloud services that turns whiteboard sketches into HTML code for text boxes, check boxes, buttons and so on.

Aptly named Sketch2Code, the tool was introduced on the Azure dev site by Tara Jana, a company AI program manager, who said, "We hope this post helps you get started with AI and motivates you to become an AI developer."

Part of that motivation might be the company's claim that the tool can radically condense the time-consuming process of converting whiteboard designs into development code and provide instant results.

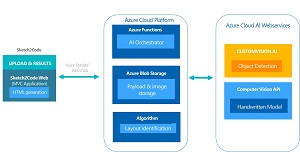

Sketch2Code uses AI computer vision technology -- providing object detection and text recognition -- to scan uploaded images and, leveraging pretrained custom models, translate sketched Web components into HTML snippets. The Custom Vision Service is used to train models and detect drawn HTML objects, whereupon the text-recognition functionality of the service extracts the handwritten text.
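To make that two-step idea concrete, here is a minimal Python sketch of how detected elements and recognized text might be paired into HTML snippets. It is not Microsoft's implementation: the `TEMPLATES` table, the `(x, y, width, height)` box format and the rule of assigning text to the element that contains its center point are all assumptions made for illustration.

```python
from html import escape

# Hypothetical mapping from detected element tags to HTML templates.
TEMPLATES = {
    "button": '<button type="button">{text}</button>',
    "textbox": '<input type="text" placeholder="{text}">',
    "checkbox": '<label><input type="checkbox"> {text}</label>',
    "label": "<span>{text}</span>",
}


def center(box):
    """Return the center point of an (x, y, width, height) box."""
    x, y, w, h = box
    return (x + w / 2, y + h / 2)


def contains(box, point):
    """Return True if the point lies inside the (x, y, width, height) box."""
    x, y, w, h = box
    px, py = point
    return x <= px <= x + w and y <= py <= y + h


def to_snippets(elements, text_lines):
    """Pair each detected element with the text found inside it.

    elements:   list of (tag, box) from an object detector (assumed shape)
    text_lines: list of (text, box) from a text recognizer (assumed shape)
    Returns HTML snippets ordered top to bottom, then left to right.
    """
    snippets = []
    for tag, box in sorted(elements, key=lambda e: (e[1][1], e[1][0])):
        # Assumption: text belongs to an element if its center falls inside it.
        words = [text for text, tbox in text_lines if contains(box, center(tbox))]
        text = escape(" ".join(words), quote=True)
        template = TEMPLATES.get(tag, "<div>{text}</div>")
        snippets.append(template.format(text=text))
    return snippets
```

Given a detected button with the handwritten word "Submit" inside it, `to_snippets` would return a `<button>` snippet carrying that text. Unknown tags fall back to a plain `<div>`.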

"A layout algorithm uses the spatial information from all the bounding boxes of the predicted elements to generate a grid structure that accommodates all," Microsoft said. Finally, an HTML generation engine creates HTML markup code reflecting the result, using all of the gathered data.

Sketch2Code Architecture (source: Microsoft).
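Microsoft has not detailed the layout algorithm in this announcement, but the general idea of turning bounding boxes into a grid can be sketched in a few lines of Python. In the example below, the `Box` class, the row-grouping rule (a box joins a row when its vertical center falls within that row's vertical span) and the CSS class names are all hypothetical, chosen only to illustrate the technique.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Box:
    """A predicted element and its bounding box in pixels (hypothetical shape)."""
    tag: str   # e.g. "button", "textbox", "label"
    x: float   # left edge
    y: float   # top edge
    w: float   # width
    h: float   # height


def group_into_rows(boxes):
    """Group boxes into rows, top to bottom, each row sorted left to right."""
    rows = []
    for box in sorted(boxes, key=lambda b: b.y):
        center_y = box.y + box.h / 2
        for row in rows:
            top = min(b.y for b in row)
            bottom = max(b.y + b.h for b in row)
            # Assumption: same row if the vertical center lies within the row's span.
            if top <= center_y <= bottom:
                row.append(box)
                break
        else:
            rows.append([box])
    return [sorted(row, key=lambda b: b.x) for row in rows]


def render_grid(boxes):
    """Emit a nested row/column skeleton that accommodates every box."""
    lines = ['<div class="grid">']
    for row in group_into_rows(boxes):
        lines.append('  <div class="row">')
        for box in row:
            lines.append(f'    <div class="col" data-element="{escape(box.tag)}"></div>')
        lines.append("  </div>")
    lines.append("</div>")
    return "\n".join(lines)
```

Two elements drawn side by side end up as two columns in one row, while an element drawn well below them starts a new row. A production layout engine would also need to handle nesting, column widths and overlapping boxes, which this sketch ignores.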

"By combining these two pieces of information, we can generate the HTML snippets of the different elements in the design," the experimental tool's site says. "We then can infer the layout of the design from the position of the identified elements and generate the final HTML code accordingly."

The process is aided by several Azure cloud services. Specifically, Microsoft listed the following components that power the tool:

- A Microsoft Custom Vision Model: This model has been trained with images of different handwritten designs, tagged with the most common HTML elements such as buttons, text boxes and images.

- A Microsoft Computer Vision Service: To identify the text written into a design element, a Computer Vision Service is used.

- An Azure Blob Storage: All steps involved in the HTML generation process are stored, including the original image, prediction results and layout grouping information.

- An Azure Function: Serves as the backend entry point that coordinates the generation process by interacting with all the services.

- An Azure Web site: User front-end that enables uploading a new design and seeing the generated HTML results.
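How the components listed above might fit together can be sketched as a single coordinating function, roughly the role the Azure Function plays. This is an illustration only and does not call any Azure SDK: the three callables stand in for the Custom Vision model, the Computer Vision service and the HTML generator, a plain dictionary stands in for Blob Storage, and the artifact names are invented.

```python
import json


def run_pipeline(image_bytes, detect_elements, read_text, build_html, store):
    """Coordinate one generation run and save each intermediate artifact.

    detect_elements, read_text and build_html are caller-supplied stand-ins
    for the vision services and the HTML engine; store is any dict-like
    object standing in for blob storage. All names here are hypothetical.
    """
    # Keep the uploaded sketch so the run can be inspected later.
    store["original.png"] = image_bytes

    # Step 1: find the drawn elements and their bounding boxes.
    elements = detect_elements(image_bytes)
    store["predictions.json"] = json.dumps(elements)

    # Step 2: read the handwritten text in the image.
    text_lines = read_text(image_bytes)
    store["text.json"] = json.dumps(text_lines)

    # Step 3: combine both results into the final markup.
    html = build_html(elements, text_lines)
    store["result.html"] = html
    return html
```

Passing the services in as parameters keeps the coordinator easy to exercise with fakes, for example lambdas that return canned predictions, before wiring it to real endpoints.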

Source code for the project is available on GitHub.

About the Author

David Ramel is an editor and writer at Converge 360.