New Open Source ONNX Runtime Web Does Machine Learning Modeling in Browser

Microsoft introduced a new feature for the open source ONNX Runtime machine learning model accelerator that runs JavaScript-based ML models in browsers.

ONNX Runtime Web (ORT Web) was introduced this month as an addition to the cross-platform ONNX Runtime, which is used to optimize and accelerate ML inferencing and training. It's all part of the ONNX (Open Neural Network Exchange) ecosystem that serves as an open standard for ML interoperability.

Microsoft says ONNX Runtime inference can enable faster customer experiences and lower costs, as it supports models from deep learning frameworks such as PyTorch and TensorFlow/Keras along with classical ML libraries like scikit-learn, LightGBM, XGBoost and more. It's compatible with different hardware, drivers and OSes, providing optimal performance by leveraging hardware accelerators where applicable, alongside graph optimizations and transforms.

The JavaScript-based ORT Web for the ONNX Runtime isn't a brand-new product, however, as it replaces Microsoft's ONNX.js JavaScript library for running ONNX models in browsers and on Node.js, and is said to provide an enhanced user experience and improved performance.

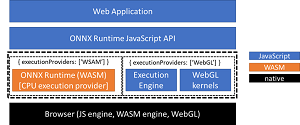

Specifically, the new tool is said to provide a more consistent developer experience between packages for server-side and client-side inferencing, along with improved inference performance and model coverage. Key to all that is a two-pronged back-end approach that accelerates model inference in the browser using both the machine's CPU and its GPU. On the CPU side, a WebAssembly back end lets compiled C++ code run in the browser rather than relying on JavaScript alone, whereas the GPU side leverages WebGL, a popular standard for accessing GPU capabilities.

ORT Web Overview (source: Microsoft).
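
As a rough illustration of the CPU and GPU back ends described above, the following TypeScript sketch shows one way an application might pick between them. `InferenceSession.create` and its `executionProviders` option come from the `onnxruntime-web` package; everything else here (the `chooseExecutionProviders` and `createSession` helpers, the `OrtLike` interface and the idea of passing in a WebGL-availability flag) is hypothetical and was not part of Microsoft's announcement. The sketch also assumes the listed back ends are tried in order.

```typescript
// The two ORT Web back ends discussed in the article.
type Backend = "wasm" | "webgl";

// Hypothetical helper: list back ends in order of preference.
// WebGL (GPU) goes first only when the caller wants it and the browser has it;
// WebAssembly (CPU) is always kept as the last option.
function chooseExecutionProviders(hasWebGL: boolean, preferGpu: boolean): Backend[] {
  if (preferGpu && hasWebGL) {
    return ["webgl", "wasm"];
  }
  return ["wasm"];
}

// Minimal shape of the one library call used here. In a real page this would
// be the module imported from "onnxruntime-web"; it is passed in as a
// parameter so the sketch stays self-contained.
interface OrtLike {
  InferenceSession: {
    create(
      modelUrl: string,
      options: { executionProviders: string[] }
    ): Promise<unknown>;
  };
}

// Hypothetical wrapper: create an inference session for a model URL,
// preferring the GPU back end when WebGL is available.
async function createSession(
  ort: OrtLike,
  modelUrl: string,
  hasWebGL: boolean
): Promise<unknown> {
  const executionProviders = chooseExecutionProviders(hasWebGL, true);
  return ort.InferenceSession.create(modelUrl, { executionProviders });
}
```

In a browser, the `ort` argument would be the imported `onnxruntime-web` module, and the `hasWebGL` flag could be derived from whether a canvas element can supply a WebGL rendering context.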

"Running machine-learning-powered web applications in browsers has drawn a lot of attention from the AI community," Microsoft said in an announcement early this month. "It is challenging to make native AI applications portable to multiple platforms given the variations in programming languages and deployment environments. Web applications can easily enable cross-platform portability with the same implementation through the browser. Additionally, running machine learning models in browsers can accelerate performance by reducing server-client communications and simplify the distribution experience without needing any additional libraries and driver installations."

Microsoft is soliciting developer feedback on the project, which can be provided on the ONNX Runtime GitHub repo. As the company continues to improve the tool's performance and model coverage and to introduce new features, one possible enhancement it is considering is on-device model training.

About the Author

David Ramel is an editor and writer at Converge 360.