News

New Azure AI VMs Immediately Claim TOP500 Supercomputer Rankings

Visual Studio coders who dabble in artificial intelligence projects can now take advantage of new Azure virtual machines (VMs) featuring 80 GB NVIDIA GPUs. Microsoft said the new VMs immediately claimed four spots on the TOP500 list of supercomputers.

In June, when it announced scale-out NVIDIA A100 GPU clusters, the company claimed to have the "fastest public cloud supercomputer."

Building on those instances, Microsoft last week announced the new NDm A100 v4 series VMs, which sport NVIDIA A100 Tensor Core 80 GB GPUs. The company said the series expands Azure's leadership-class AI supercomputing scalability in the public cloud, and it has already claimed four official places on the TOP500 list.

Microsoft positioned the new high-memory NDm A100 v4 series as bringing AI supercomputing power to the masses, giving organizations a way to gain a competitive advantage. The company credits a class-leading design that features:

- In-Network Computing

- 200 GB/s networking and GPUDirect RDMA for each GPU

- An all-new PCIe Gen 4.0-based architecture

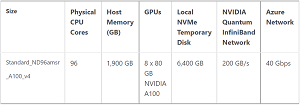

The Specs (source: Microsoft).

"We live in the era of large-scale AI models; the demand for large-scale computing keeps growing," said Sherry Wang, senior program manager, Azure HPC and AI. "The original ND A100 v4 series features NVIDIA A100 Tensor Core GPUs each equipped with 40 GB of HBM2 memory, which the new NDm A100 v4 series doubles to 80 GB, along with a 30 percent increase in GPU memory bandwidth for today’s most data-intensive workloads. RAM available to the virtual machine has also increased to 1,900 GB per VM, to allow customers with large datasets and models a proportional increase in memory capacity to support novel data management techniques, faster checkpointing, and more."

About the Author

David Ramel is an editor and writer at Converge 360.