Microsoft Pushes Open Source 'Semantic Kernel' for AI LLM-Backed Apps

Since recently introducing the open source Semantic Kernel to help developers use AI large language models (LLMs) in their apps, Microsoft has been busy improving it, publishing new guidance on how to use it and touting its capabilities.

Developers can use Semantic Kernel (SK) to create natural language prompts, generate responses, extract information, invoke other prompts or perform other tasks that can be expressed with text.

LLM prompting is an emerging discipline for getting the most out of queries to machine learning models that power advanced AI systems like ChatGPT from Microsoft partner OpenAI. The recently introduced GPT-4 is the latest LLM from OpenAI, which claims it significantly outperforms ChatGPT.

Writing effective LLM prompts, a practice called prompt engineering, is one of the most sought-after skills in IT these days, with job postings for prompt engineers citing salary ranges up to $335,000.

When Microsoft announced Semantic Kernel on March 17, prompting was a key part of the project's description: "Semantic Kernel (SK) is a lightweight SDK that lets you mix conventional programming languages, like C# and Python, with the latest in Large Language Model (LLM) AI 'prompts' with prompt templating, chaining, and planning capabilities."

Better prompting is also one of the four key benefits of the open source project:

- Fast integration: SK is designed to be embedded in any kind of application, making it easy for you to test and get running with LLM AI.

- Extensibility: With SK, you can connect with external data sources and services -- giving your apps the ability to use natural language processing in conjunction with live information.

- Better prompting: SK's templated prompts let you quickly design semantic functions with useful abstractions and machinery to unlock LLM AI's potential.

- Novel-But-Familiar: Native code is always available to you as a first-class partner on your prompt engineering quest. You get the best of both worlds.

In fact, that open source GitHub repo has an entire section devoted to prompt templating, which is done with a bespoke prompt template language.
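To give a feel for what prompt templating involves, here is a minimal Python sketch of variable substitution. It is an illustration only, not SK's template engine: the `render_prompt` helper is hypothetical, and the `{{$input}}` placeholder style is assumed here to resemble the variables described in the repo's templating section.

```python
import re

# Hypothetical helper for illustration; not part of the Semantic Kernel SDK.
# Assumes placeholders written as {{$name}}, optionally padded with spaces.
_VARIABLE = re.compile(r"\{\{\s*\$(\w+)\s*\}\}")


def render_prompt(template: str, variables: dict) -> str:
    """Replace each {{$name}} placeholder with its value from `variables`."""

    def substitute(match):
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no value supplied for template variable '{name}'")
        return variables[name]

    return _VARIABLE.sub(substitute, template)


# A made-up template that asks the model for a one-sentence summary.
SUMMARIZE = "Summarize the following text in one sentence:\n{{$input}}"

prompt = render_prompt(SUMMARIZE, {"input": "Semantic Kernel is a lightweight SDK."})
print(prompt)
```

The rendered string is what would be sent to the model. A real template language typically layers more on top of this, such as calling other functions from inside a template.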

As part of its post-debut push to publicize SK, Microsoft just today (March 30) published a post titled "Semantic Kernel Planner: Improvements with Embeddings and Semantic Memory," which details improvements to the project's Planner skill, allowing users to create and execute plans based on semantic queries. The post explains a recent tweak that made the Planner skill even more versatile.

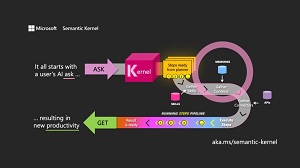

Semantic Kernel (source: Microsoft).

The tweak involved the integration of embeddings into the Planner skill in order to enhance its usability and functionality. Embeddings are vectors or arrays of numbers that represent the meaning and the context of tokens processed by the model. Used to encode and decode input and output texts, embeddings can help an LLM understand the relationships between tokens and generate relevant and coherent texts. They are used for text classification, summarization, translation and generation, including image and code generation.
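As a rough illustration of how vectors can capture relationships in meaning, the Python sketch below compares embeddings with cosine similarity, a common way to measure how closely two vectors point in the same direction. The three-dimensional vectors are made up for the example; real embeddings come from a model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


# Invented vectors, for illustration only; not output from any real model.
embeddings = {
    "cat": [0.9, 0.1, 0.0],
    "kitten": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.2, 0.9],
}

related = cosine_similarity(embeddings["cat"], embeddings["kitten"])
unrelated = cosine_similarity(embeddings["cat"], embeddings["invoice"])
print(f"cat vs. kitten:  {related:.2f}")
print(f"cat vs. invoice: {unrelated:.2f}")
```

With these invented numbers, "cat" scores far closer to "kitten" than to "invoice," which is the kind of signal that lets software pick out related text.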

"As the Semantic Kernel evolves in its Alpha stage, we'll prioritize other methods of using embeddings for plan creation that will be even more powerful," Microsoft said.

Semantic Kernel embeddings were also showcased in an earlier post titled "Semantic Kernel Embeddings and Memories: Explore GitHub Repos with Chat UI," which explains how they can help developers ask questions about or explore a GitHub repo with natural language queries. Along with embeddings, that's done with the help of SK memories (collections of embeddings), which help provide broader context to a prompt (or an ASK in the SK world).

A Semantic Kernel ASK (source: Microsoft).

The post highlights a sample app, GitHub Repo Q&A Bot, which shows how developers can use an SK function to download any GitHub repo, store it in memories and query it with a chat UI.

Microsoft said the sample can be used as a guide for storing and querying items like:

- Large internal procedure manuals

- Educational materials for students

- Corporate contracts

- Product documentation
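The store-and-query pattern behind such a sample can be sketched in a few lines of Python. This is a toy under loud assumptions, not the sample app's code or SK's memory API: `ToyMemory` and `toy_embed` are hypothetical names, the stored texts are made up, and word counts stand in for the embeddings a real model would produce.

```python
import math
import re
from collections import Counter


def toy_embed(text):
    """Stand-in for a real embedding model: word counts as a sparse vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[word] * b[word] for word in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class ToyMemory:
    """Hypothetical in-memory collection of (text, embedding) pairs."""

    def __init__(self):
        self._items = []

    def save(self, text):
        self._items.append((text, toy_embed(text)))

    def search(self, query, top_k=1):
        """Return the `top_k` stored texts most similar to the query."""
        q = toy_embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:top_k]]


# Made-up snippets standing in for files pulled from a repo.
memory = ToyMemory()
memory.save("README: install the SDK with the package manager")
memory.save("LICENSE: this project is released under an open source license")
memory.save("CONTRIBUTING: open a pull request against the main branch")

print(memory.search("which license is the project released under"))
```

In a real app, the best-matching passages would be added to the prompt as context, and the embeddings would come from an LLM service rather than word counts, so matches would reflect meaning instead of shared vocabulary.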

Microsoft's push to publicize SK also involved a post this week titled "How to Deploy Semantic Kernel to Azure in Minutes." That's done with Azure Functions, Microsoft's serverless computing service that lets users run code without managing servers or infrastructure.

The demo uses Visual Studio Code and requires installation of the Azure Tools extension.

"If you have been following the Semantic Kernel GitHub repo, you have probably experimented with some of the examples and seen how powerful and flexible the platform is for adding intelligence to your own apps. You might be eager to take your Semantic Kernel skills to the next level and deploy our SDK to the cloud," Microsoft said.

If the above three posts published in the two weeks since the introduction of Semantic Kernel are any indication, Microsoft plans on taking it to a lot more places than that.

About the Author

David Ramel is an editor and writer at Converge 360.