Microsoft's 'Semantic Kernel' AI SDK Ships as Release Candidate

Microsoft's Semantic Kernel SDK for AI projects, slated for a revamp after the company found "unexpected uses," shipped this week in near-final form as Release Candidate 1 (RC1).

"Our current API is being used in unexpected ways," the team said last month. "As we make changes, we are using an obsolescence strategy with guidance on how to move to the new API. This doesn't work well for unexpected uses on our current APIs, however, so we need help from the community."

The community has apparently chipped in, judging from RC1's announcement post: "Since the interface is getting extremely close to its final v1.0.0 structure, we're excited to release v1.0.0 RC1 of the .NET Semantic Kernel SDK. During the next two weeks we'll be focused on bug fixes and making minor adjustments to finish the shape of the API."

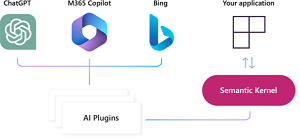

The SDK provides an AI orchestration layer for Microsoft's stack of AI models and Copilot AI assistants, with interaction services for working with underlying foundation models and AI infrastructure. The company explains more in its "What is Semantic Kernel?" documentation: "Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds."

Semantic Kernel in the Microsoft Ecosystem (source: Microsoft).

In the RC1 announcement, Microsoft detailed how it's easier to get started with the SDK via automated OpenAI function calling, which reduces the multiple steps required to set up a function.

The company also described the dev team's efforts to increase the value of the kernel and make it easier to use, "making it the property bag for your entire AI application," with multiple AI services and plugins joining other services including loggers and HTTP handlers. One benefit of those efforts is that it's now easier to use dependency injection with Semantic Kernel.

One member of the dev team explained how it's also now easier to get responses from AI. "To further improve the kernel, we wanted to make sure you could invoke any of your logic directly from it," he said. "You could already invoke a function from it, but you couldn't 1) stream from the kernel, 2) easily run an initial prompt, or 3) use non-string arguments. With V1.0.0 RC1, we've made enhancements to support all three." Those enhancements help devs to:

- Use the simple InvokePromptAsync methods

- Invoke functions directly from the kernel with kernel arguments

- Easily stream directly from the kernel
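
To make those three capabilities concrete, here is a minimal, library-free Python sketch. It is not the Semantic Kernel API: `ToyKernel`, `fake_model`, and every method name below are hypothetical stand-ins, and the "model" simply echoes its prompt. It only illustrates the shape of the idea, which is that a single kernel object can run a prompt, invoke a registered function with typed arguments, and stream a reply.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Any, AsyncIterator, Awaitable, Callable, Dict, List, Tuple


async def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call: it just echoes the prompt."""
    return f"echo: {prompt}"


@dataclass
class ToyKernel:
    """Illustrative only; this is NOT the Semantic Kernel API."""

    functions: Dict[str, Callable[..., Awaitable[Any]]] = field(default_factory=dict)

    async def invoke_prompt_streaming(self, prompt: str) -> AsyncIterator[str]:
        # 1) Stream from the kernel: yield the reply one word at a time.
        reply = await fake_model(prompt)
        for word in reply.split():
            yield word

    async def invoke_prompt(self, prompt: str) -> str:
        # 2) Run an initial prompt with a single call.
        return await fake_model(prompt)

    async def invoke(self, name: str, **arguments: Any) -> Any:
        # 3) Arguments can be any type, not only strings.
        return await self.functions[name](**arguments)


async def total(values: List[int]) -> int:
    """Example registered function that takes a non-string argument."""
    return sum(values)


async def demo() -> Tuple[str, int, List[str]]:
    kernel = ToyKernel(functions={"total": total})
    answer = await kernel.invoke_prompt("hello")
    summed = await kernel.invoke("total", values=[1, 2, 3])
    chunks = [chunk async for chunk in kernel.invoke_prompt_streaming("hi there")]
    return answer, summed, chunks


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

In the real SDK, the equivalent calls go through the kernel's own methods and argument types, so the official documentation is the place to check for actual names and signatures.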

Another team member explained how creating templates has never been so easy or powerful, detailing, for example, how developers can now share prompts via YAML files.

That power is also furthered by the "Handlebars" templating language. "If you want even more power (i.e., loops and conditionals), you can also leverage Handlebars," he said. "Handlebars makes a great addition to support any type of input variable. For example, you can now loop over chat history messages."
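
As a rough illustration of what looping over chat history looks like, the Python sketch below renders a Handlebars-style template that uses the built-in `{{#each}}` block. The `render` function is a hypothetical toy that understands only one flat `{{#each}}` loop and plain `{{field}}` lookups; a real Handlebars engine does far more (nesting, conditionals, escaping), and any helpers specific to Semantic Kernel are not shown here.

```python
import re
from typing import Dict, List

# Handlebars-style template: {{#each ...}} loops over a list, and
# {{name}} inside the loop reads a field of the current item.
TEMPLATE = (
    "{{#each messages}}"
    "{{role}}: {{content}}\n"
    "{{/each}}"
)

EACH = re.compile(r"\{\{#each (\w+)\}\}(.*?)\{\{/each\}\}", re.DOTALL)
FIELD = re.compile(r"\{\{(\w+)\}\}")


def render(template: str, context: Dict[str, List[Dict[str, str]]]) -> str:
    """Toy renderer for a tiny subset of Handlebars (one flat #each loop)."""

    def expand(match: "re.Match[str]") -> str:
        items = context[match.group(1)]  # the list named after #each
        body = match.group(2)            # the text between #each and /each
        # Render the loop body once per item, filling in its fields.
        return "".join(
            FIELD.sub(lambda f: item[f.group(1)], body) for item in items
        )

    return EACH.sub(expand, template)


history = [
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
]

if __name__ == "__main__":
    print(render(TEMPLATE, {"messages": history}), end="")
```

Each message in `history` produces one `role: content` line, so a prompt can carry the whole conversation without the caller flattening it into a string first.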

Among many other changes, the team changed names to align more closely with the rest of the industry and replaced custom implementations with standard .NET implementations.

"Previously, we had classes and interfaces like IAIServiceProvider, HttpHandlerFactory, and retry handlers," the announcement explained. "With our move to align with dependency injection, these implementations are no longer necessary because developers can use existing standard approaches that are already in use within the .NET ecosystem."

With the bits nearly finished, developers can try them out in two updated starter projects:

- Hello world: This shows how to quickly get started using prompt YAML files and streaming.

- Console chat: This shows how to use Semantic Kernel with .NET dependency injection.

While the team said it is basically just fixing bugs and implementing minor new functionality at this point, developers wanting to see what the team is working on for v1.0.0 can examine the V1 burndown table.

About the Author

David Ramel is an editor and writer at Converge 360.