News

For Build Developer Conference, Semantic Kernel AI SDK Aims for 'First-Class Agent Support'

Microsoft is shaping up its Semantic Kernel SDK for AI development for the company's Build developer conference, which might be coming in late May.

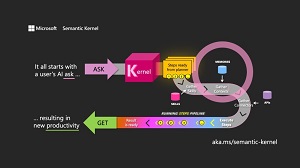

The SDK acts like an AI orchestration layer for Microsoft's stack of AI models and Copilot AI assistants, providing interaction services to work with underlying machine learning foundation models and AI infrastructure. The company explains more in its "What is Semantic Kernel?" documentation: "Semantic Kernel is an open-source SDK that lets you easily combine AI services like OpenAI, Azure OpenAI, and Hugging Face with conventional programming languages like C# and Python. By doing so, you can create AI apps that combine the best of both worlds."

Semantic Kernel (source: Microsoft).

Build, according to recent social media chatter, might be coming in the third week of May. The dev team's effort to shape up the SDK in time for the developer conference is proceeding on a number of fronts, including parity among different language options, connectors, and agents, the latter being one of the latest trends in advanced AI development. As AI advances, developers are taking advantage of the ability to create autonomous AI agents that can be assigned personalized "personas" and can take independent actions on behalf of users, such as calling plugins using planners or calling functions.

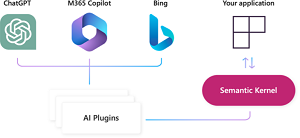

Semantic Kernel in the Microsoft Ecosystem (source: Microsoft).

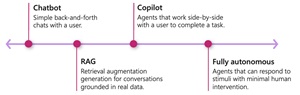

Agents

"We already have an experimental implementation that uses the OpenAI Assistants API (check out John Maeda's SK basics samples), but as part of our final push, we want to fully abstract our agent interface to support agents built with any model," Microsoft's Matthew Bolanos from the SK dev team said in a post today (Feb. 12) outlining the spring roadmap for the open source project.

Semantic Kernel Core Components (source: Microsoft).

Bolanos explained more in an accompanying video, starting with the current experimental tech.

Semantic Kernel Agents (source: Microsoft).

"If you use it, you can already do some of the advanced capabilities that have been popularized by SDKs like AutoGen, where you actually have multiple agents collaborating working together to solve a user's needs," Bolanos explained. "It's really, really powerful stuff. And it points to a vision where as part of building an AI application, you build these teams of AIs of different skill sets, of different domains that can work together, kind of like a diverse set of humans would, right? We have different disciplines like PM, designer, coders -- the same patterns, I expect to happen in the agent space as well. Now our current agent abstraction is very tied to the existing Assistants API. And as part of the work that we want to do to make it non experimental is we want to improve the abstractions so that you could build an agent with any model or any API, whether that's Gemini or Llama, or any of the other connectors and models. We're really excited with what people potentially build with agents. So please share as you build out, as you do your tests, so we can make sure those same features are supported as part of the final attraction that we land on."

Parity Across Python and Java

Microsoft shipped v1.0.1 of the SDK for .NET/C# development last December, describing it as a solid foundation with which to build those AI agents for AI-powered applications. As noted, the team is now seeking to bring other language versions up to par.

That means aiming for either Beta or Release Candidate offerings of the Python and Java libraries by next month and attaining full parity with v1.0 versions by the time of Build, which one social media source indicated might be coming May 21-23.

"As part of V1.0, Python and Java will get many of the improvements that came to the .NET version that made it much easier and more powerful to use," Bolanos said. "This includes automatic function calling, events, YAML prompt files, and Handlebars templates. With the YAML prompt files, you'll be able to create prompt and agent assets in Python and then reshare it with .NET and Java developers."

More Connectors

Here, the team will provide connectors to the most popular models and their deployment types -- including Gemini, Llama-2, Phi-2, Mistral and Claude -- that can be deployed on Hugging Face, Azure AI, Google AI, Bedrock or locally.

As part of this effort, the team is increasing multi-modal support so the AI constructs can work with more than text, including audio, images and video.

Because SK is an open source project, Microsoft depends upon community developers for much of the work, and Bolanos appealed for more involvement. "If you have recommendations to any of the features we have planned for Spring 2024 (or even recommendations for things that aren't on our radar), let us know by filing an issue on GitHub, starting a discussion on GitHub, or starting a conversation on Discord."

About the Author

David Ramel is an editor and writer at Converge 360.