Inside VSTS

Visual Studio's Lab Management Testing Virtualization Platform

Jeff Levinson explores the new Lab Management platform for testing virtualization, which is included in Microsoft Visual Studio Test Professional 2010 and Visual Studio 2010 Ultimate.

- By Jeff Levinson

- 03/18/2010

Lab Management is Microsoft's testing virtualization platform that sits on top of mature technologies such as System Center Virtual Machine Manager (SCVMM) and Hyper-V. It is designed for testing teams, and can be effectively used by developers, to execute code in environments that mimic the actual production environment without requiring new hardware. Using the features of Microsoft Test Manager, which is included as part of Microsoft Visual Studio Test Professional 2010 and Microsoft Visual Studio 2010 Ultimate, Lab Management provides a solid environment for managing your testing.

First, how does Lab Management work?

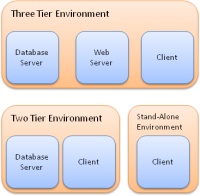

This is the cool part. It works by allowing you to compose reusable environments. What is an environment? An environment is one or more virtual machines that your application can run on. Figure 1 shows a few common environments. These are just some basic configurations, but you can go nuts and add software load balancers, multiple clients, multiple Web servers and clustered databases if you want. In general, I have found the basic environments laid out here to be practical -- they aren't too difficult to set up, and once they are set up they just work.

Figure 1. Lab Management works with a variety of configurations and environments.

These environments are made up of a set of coordinated Hyper-V images that you can reuse. For example, say you have 10 testers working on a two-tier application. You only have to create a single two-tier environment, and all 10 testers can then have their own copy, because the environment is simply duplicated. And you don't have to worry about issues like computer name and identifier conflicts, because Lab Management introduces a technology called "Network Isolation," which handles this seamlessly.
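To make the duplication idea concrete, here is a minimal conceptual sketch in Python. It is not the Lab Management API: the `VirtualMachine` and `Environment` classes, the `clone_for` method and the `WebServer` machine name are all hypothetical, chosen only to show one stored template yielding independent per-tester copies whose machines keep identical computer names.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class VirtualMachine:
    """Hypothetical stand-in for one Hyper-V image in an environment."""
    computer_name: str
    role: str


@dataclass(frozen=True)
class Environment:
    """Hypothetical stand-in for a stored, reusable environment template."""
    name: str
    machines: tuple
    owner: str = "template"

    def clone_for(self, tester: str) -> "Environment":
        # Each copy keeps the same machines and computer names; only the
        # copy's label and owner change. In the real product, Network
        # Isolation is what lets identical names run side by side.
        return replace(self, name=f"{self.name}-{tester}", owner=tester)


two_tier = Environment(
    name="TwoTier",
    machines=(
        VirtualMachine("WebServer", "web"),
        VirtualMachine("DataServer", "database"),
    ),
)

testers = [f"tester{i}" for i in range(1, 11)]
copies = [two_tier.clone_for(t) for t in testers]

print(len(copies))                               # 10
print(copies[0].name)                            # TwoTier-tester1
print(copies[0].machines == copies[9].machines)  # True
```

The point of the sketch is that the template is built once, and every copy is structurally identical to it.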

How do you use Lab Management?

The lab environments can be used in four ways:

- By developers looking to install and execute their code in a clean environment

- As part of the automated build and testing process (extremely valuable and very sweet)

- By testers executing automated tests

- By testers executing manual tests

For this article, I'll talk about how Visual Studio Lab Management is used as part of the automated build and test process. Team Build uses Windows Workflow Foundation 4 in TFS 2010 and includes a Lab Management build template. (Note that MSBuild is still there for more granular control and for some of the underlying build processes.) Using this template, the build works (roughly) as shown in Figure 2.

Figure 2. The build process derived from the Lab Management build template.

The build step is the standard automated build process, which can be run as part of a nightly build. The deploy step requires you to create scripts to copy the binaries to the appropriate systems and then execute your installation. Visual Studio 2010 includes MSDeploy, which, much to my surprise, works quite well for deploying Web applications. Combined with the power of the database tools, I found it easy to deploy an entire application across multiple tiers. Listings 1, 2 and 3 show the three scripts necessary to deploy a two-tier application.

Listing 1 -- Copy binaries to the remote server

set RemotePath=%1

set LocalPath=%2

if not exist %RemotePath% (

echo remote path %RemotePath% doesn't exist

goto Error

)

if exist %LocalPath% (

rmdir /s /q %LocalPath%

)

REM Copy files to the local machine

mkdir %LocalPath%

xcopy %RemotePath% %LocalPath% /s /y

if errorlevel 1 goto Error

@echo Copied the build locally

:Success

echo Deploy succeeded

exit /b 0

:Error

echo Deploy failed

exit /b 1

Listing 2 -- Set up the Web server

REM Create a new web site

%windir%\System32\inetsrv\appcmd add site /name:"BlogEngineWeb" /id:2 /bindings:http://*:8001 /physicalPath:"C:\inetpub\wwwroot\BlogEngineWeb"

REM Deploy the website using MSDeploy

cmd /c %1\_PublishedWebsites\Package\BlogEngineWeb.deploy.cmd /Y %2

iisreset

Listing 3 -- Deploy the database

REM Deploy the logins to the master database

"C:\Program Files (x86)\Microsoft Visual Studio 10.0\VSTSDB\Deploy\vsdbcmd" /a:Deploy /dd+ /dsp:sql /model:%1\SharedDBServer.dbschema /manifest:%1\SharedDBServer.deploymanifest /p:TargetDatabase="master" /cs:"Server= DataServer;uid=SA;pwd=xxxxx"

REM Deploy the application database

"C:\Program Files (x86)\Microsoft Visual Studio 10.0\VSTSDB\Deploy\vsdbcmd" /a:Deploy /dd+ /dsp:sql /model:%1\BlogEngineData.dbschema /manifest:%1\BlogEngineData.deploymanifest /p:TargetDatabase="BlogEngineData" /cs:"Server=DataServer;uid=SA;pwd=xxxx"

For security's sake, you can use integrated authentication instead of the SA login shown here, if you choose. I opted not to because I was lazy, which is probably not appropriate for a production environment!

In the end, the process turned out to be pretty straightforward, although it was a bit hard to figure out. Use these scripts as a guide to get started and tweak from there. Just be aware that there are things missing here -- for example, the deployment script requires that you have a database project and that certain settings be in place for MSDeploy.
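The three listings have to run in order, and a later step makes little sense if an earlier one failed. As a rough sketch of that sequencing (not part of the Lab Management template), the Python driver below runs each script and stops at the first non-zero exit code. The script file names, the paths and the `deploy_steps` helper are hypothetical, and the mapping of arguments to `%1` and `%2` is an assumption based on how the listings read.

```python
import subprocess
from typing import Callable, List, Sequence


def run_steps(steps: Sequence[Sequence[str]],
              runner: Callable[[Sequence[str]], int]) -> List[int]:
    """Run each command in order; stop after the first non-zero exit code."""
    exit_codes = []
    for command in steps:
        code = runner(command)
        exit_codes.append(code)
        if code != 0:
            break  # do not run later steps after a failure
    return exit_codes


def run_with_cmd(command: Sequence[str]) -> int:
    """Runner that executes a batch script through cmd.exe (Windows only)."""
    return subprocess.call(["cmd", "/c", *command])


def deploy_steps(remote_drop: str, local_path: str, deploy_args: str):
    """Hypothetical file names for Listings 1, 2 and 3, in that order."""
    return [
        ["CopyBinaries.cmd", remote_drop, local_path],
        ["SetupWeb.cmd", local_path, deploy_args],
        ["DeployDatabase.cmd", local_path],
    ]


def fake_runner(command: Sequence[str]) -> int:
    # Simulate the Web setup script failing so the database step is skipped.
    return 1 if command[0] == "SetupWeb.cmd" else 0


steps = deploy_steps(r"\\buildserver\drop", r"C:\Deploy", "deploy-args")
print(run_steps(steps, fake_runner))  # [0, 1]
```

On a real test machine you would pass `run_with_cmd` instead of `fake_runner`. Injecting the runner keeps the ordering logic testable without touching IIS or SQL Server.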

Finally, your automated scripts execute and the results are reported back to TFS for later analysis by the test team. If you can master the automated build and test process, everything else is easy.

What infrastructure is required?

Many organizations are standardized on VMware today, and Lab Management 2010 does not support VMware. So if you want to use Lab Management, you have to use Hyper-V and SCVMM. The good news is that, as of this writing, Lab Management includes a license for SCVMM when used for Lab Management only. In addition, because these are test environments, the license for the operating systems in these environments is covered by the MSDN agreement.

Disclaimer: I am not a licensing expert and licensing terms can vary from company to company. Please check with your licensing representative for specifics related to the software you are running.

You do not have to have a very beefy infrastructure. We have found that two machines with four hard drives and 16 GB of RAM each provide the ability to effectively run eight to twelve virtual machines simultaneously. Remember that environments are composed of VMs, so if you have one environment with four VMs you can run three copies of this environment simultaneously. Or if your environment has only one machine in it, you can run twelve copies of it simultaneously.
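The sizing rule above is simple integer division. A minimal sketch, assuming the upper end of the range (twelve VMs) as the available capacity; the function name is illustrative:

```python
def concurrent_copies(vm_capacity: int, vms_per_environment: int) -> int:
    """How many copies of an environment fit into the available VM slots."""
    if vms_per_environment <= 0:
        raise ValueError("an environment needs at least one VM")
    return vm_capacity // vms_per_environment


print(concurrent_copies(12, 4))  # 3
print(concurrent_copies(12, 1))  # 12
print(concurrent_copies(8, 4))   # 2
```

The last line shows the lower end of the range: with capacity for only eight VMs, the same four-VM environment fits twice.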

Now guess how much one of these systems costs. It's around $3,500 per system. What does it cost you to get a single physical server and manage it? Let's take a nice easy number of $1,000 for a single physical machine with one network card, one hard drive and 4 GB of RAM -- and that doesn't include management costs (racking, monitoring, etc.). So even with this simple example, we are talking about a savings of $5,000. Mind you, this is a ridiculously simple example and the actual savings would be a lot higher because of management overhead. In addition, the virtual machines can be snapshotted and rolled back -- something that is difficult to do with a physical machine.
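A quick check of the arithmetic, sketched in Python. It assumes the comparison is between two virtualization hosts and the twelve physical machines they stand in for. That reading is an assumption, though it does reproduce the $5,000 figure.

```python
def hardware_savings(host_cost: int, hosts: int,
                     physical_cost: int, machines_replaced: int) -> int:
    """Purchase-price difference only; management overhead is not modeled."""
    virtual_total = host_cost * hosts
    physical_total = physical_cost * machines_replaced
    return physical_total - virtual_total


# Two $3,500 hosts versus twelve $1,000 physical machines (assumed counts).
print(hardware_savings(3500, 2, 1000, 12))  # 5000
```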

What isn't it appropriate for?

When you have a mix of physical machines and VMs, the management of these environments can become difficult and it can negatively affect the repeatability of certain types of tests. If you have this type of situation you will need to more closely consider the potential impacts of mixing virtual and physical machines. For instance, if every virtual machine has to communicate with the same physical machine, might they be changing the same data, which would affect the outcome of the test?

Some software, due to licensing limitations, cannot be installed on virtual machines. For situations like this, there is really nothing you can do.

Another item that is difficult to work with is actual hardware devices. Let's say your company tests printers that have to be connected to the machine, or software for network cards. This is basically impossible to do with Lab Management. However, you can also use physical environments with Microsoft Test Manager (MTM), so you simply don't need the Lab Management component. You lose the ability to do snapshots, but you can still very easily manage the deployment to physical environments.

So, is it right for you?

Remember that this is my opinion, but if you are in a position to take advantage of Lab Management, then it may be right for you. I say "may" because many organizations don't have a testing organization that can use it. Lab Management does provide benefits for developers, but it shines with testers. For instance, it has the ability to snapshot the environment when there is a bug and link the snapshot to the actual bug that is filed with developers.

But if you have a testing organization that can take advantage of Lab Management, use it. It saves time, money and effort and will increase the efficiency of any testing team. After all, this gives testers the ability to execute multiple sets of tests in different environments at the same time, while they work on something else.

About the Author

Jeff Levinson is the Application Lifecycle Management practice lead for Northwest Cadence, specializing in process and methodology. He is the co-author of "Pro Visual Studio Team System with Database Professionals" (Apress 2007), the author of "Building Client/Server Applications with VB.NET" (Apress 2003) and has written numerous articles. He is an MCAD, MCSD, MCDBA and MCT, and is a Team System MVP. He has a master's degree in Software Engineering from Carnegie Mellon University and is a former Solutions Design and Integration Architect for The Boeing Company. You can reach him at [email protected].