Cross Platform C#

A Developer's Introduction to iOS 11

With new APIs for augmented reality and machine learning -- along with many new and updated features -- the latest iteration of iOS is sure to make Apple mobile developers happy, our resident expert concludes in this hands-on review, complete with code samples.

It’s that time of year. Each year the fall season brings a winding down of summer fun, changing of the leaves, football and a new version of Apple’s iOS. The fall of 2017 is no different. My college football team lost the first weekend -- on national TV -- and Apple has delivered iOS 11.

iOS 11 is the latest version of Apple’s mobile OS. It brings a number of UI changes and comes loaded on the most recent iPhones (the iPhone 8 models and the new iPhone X). It also comes with a set of new APIs that developers will care about. Some of these are:

- ARKit is a framework that allows developers to create augmented reality experiences for the iPhone. ARKit allows for digital objects and information to be blended visually with the natural environment around the user.

- CoreML allows developers to bring a variety of machine learning models into an application. One of the exciting areas of machine learning is vision. Features supported by CoreML with Vision include face tracking, face detection, landmarks, text detection, rectangle detection, barcode detection, object tracking and image registration -- and I’m sure others. Along with Vision, there’s support for natural language processing, which uses machine learning for tasks such as language identification, tokenization, lemmatization, part-of-speech tagging and named entity recognition.

- MapKit enhancements include the clustering of annotated points and the ability to add a compass button, a scale view and a user-tracking button.

- The Photos framework has been updated.

- SiriKit provides new and updated intent domains, which include Lists and Notes, Visual Codes, Payments and Ride Booking.

Architectural Changes: Goodbye 32 Bits

The first set of changes you’ll notice with iOS 11 are the architectural changes. The most notable one is the type of applications supported. Since the iPhone 5s, Apple has supported both 32-bit and 64-bit applications in its OS. With iOS 11, there’s no more support for 32 bits -- only 64 bits. If you’ve been holding onto your beloved iPhone 5, I’m sorry, but you’ll just have to set it down or trade it in. The iPhone 5s is the oldest iPhone to support 64 bits. Given that Xamarin.iOS and Xamarin.Forms support 64 bits, you should be good to go there. The only thing you need to do is go into the Project Options, browse to the iOS Build tab, and change the supported architectures to their 64-bit versions as appropriate.
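As a quick sanity check after changing the build settings, a sketch like the following can confirm at run time that the code is executing as a 64-bit process. The class and method names are made up for illustration; IntPtr.Size is standard .NET and reports the pointer width of the running process.

```csharp
using System;

// Hypothetical helper, not part of Xamarin.iOS.
public static class ArchitectureCheck
{
    // True when pointers are 8 bytes wide, which means the process is 64-bit.
    public static bool IsRunning64Bit()
    {
        return IntPtr.Size == 8;
    }

    public static void Main()
    {
        Console.WriteLine(IsRunning64Bit()
            ? "Running as a 64-bit process"
            : "Running as a 32-bit process: check the supported architectures setting");
    }
}
```

In an app you would more likely log this once at startup than print it to the console.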

UI Changes

Besides the final stage of the 32- to 64-bit transition, Apple has other interesting changes in iOS 11, specifically to the UI. Some of these changes include:

- A user gets more help when long-pressing a bar item via the LargeContentSizeImage property on a UIBarItem.

- The Navigation Bar titles can now be easier to read with the PrefersLargeTitles property of a NavigationBar.

- It’s easier to add a search controller directly to the Navigation Bar. There’s now a SearchController property on the NavigationItem object.

- A new property to handle margins called DirectionalLayoutMargins can be used to set the space between views and subviews.

- A UITableView can now have its header, footer and cells sized automatically based on their content (thank goodness!).

- The App Store has had a fairly comprehensive redesign.
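To make a few of these properties concrete, here is a minimal sketch of a table view controller that opts into them. It assumes a Xamarin.iOS project and a controller hosted inside a UINavigationController; the class name NotesViewController and the margin and row-height values are invented for illustration.

```csharp
using UIKit;

// Hypothetical controller, assumed to be pushed onto a UINavigationController.
public class NotesViewController : UITableViewController
{
    public override void ViewDidLoad()
    {
        base.ViewDidLoad();
        Title = "Notes";

        // Automatic row sizing predates iOS 11, so it sits outside the version check.
        TableView.RowHeight = UITableView.AutomaticDimension;
        TableView.EstimatedRowHeight = 44;

        // The remaining properties only exist on iOS 11 and later.
        if (!UIDevice.CurrentDevice.CheckSystemVersion(11, 0))
            return;

        // Large, easier-to-read navigation bar title.
        if (NavigationController != null)
            NavigationController.NavigationBar.PrefersLargeTitles = true;

        // Search controller hosted directly in the navigation bar.
        // Passing null shows results in this same controller.
        NavigationItem.SearchController = new UISearchController((UIViewController)null);

        // Margins expressed as leading/trailing so they follow the layout direction.
        // Arguments are top, leading, bottom, trailing.
        View.DirectionalLayoutMargins = new NSDirectionalEdgeInsets(8, 16, 8, 16);
    }
}
```

The version check is there because an app that still targets iOS 10 would otherwise fail when it touches the iOS 11-only properties.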

CoreML

Before I get into this, first a personal note: I’m a data guy. I love data. When I first heard of CoreML, I honestly thought, "No, that won’t work. You need lots of data to do machine learning, and so on." Of course, after a few minutes of thinking about the problem set and form factor, it started to make more sense to me: applying CoreML to several well-chosen problem sets was not only a good idea, but a great idea.

Apple’s toolkit for machine learning was introduced in iOS 11, macOS 10.13 and the other Apple software platforms released in September 2017. With CoreML, a developer can add trained machine learning models to her applications.

CoreML allows a developer to bring a trained machine learning model into an application. A model is the result of applying a machine learning algorithm to a set of data. That data is used to train the algorithm, which is shown what the correct answer is for each example. The resulting model then makes predictions based on new input data.
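To make the train-then-predict idea concrete, here is a deliberately tiny sketch in plain C#. It has nothing to do with the CoreML APIs: it fits a straight line to a handful of made-up house prices by least squares and then predicts a price for an input it has not seen. The LinearModel class and the data are assumptions for illustration only.

```csharp
using System;
using System.Linq;

// A one-feature linear model: answer = Slope * input + Intercept.
public class LinearModel
{
    public double Slope { get; private set; }
    public double Intercept { get; private set; }

    // "Training": fit the line to known (input, answer) pairs by least squares.
    public static LinearModel Train(double[] inputs, double[] answers)
    {
        if (inputs.Length != answers.Length || inputs.Length < 2)
            throw new ArgumentException("Need at least two matching samples.");

        double meanX = inputs.Average();
        double meanY = answers.Average();

        double covariance = 0, variance = 0;
        for (int i = 0; i < inputs.Length; i++)
        {
            covariance += (inputs[i] - meanX) * (answers[i] - meanY);
            variance += (inputs[i] - meanX) * (inputs[i] - meanX);
        }
        if (variance == 0)
            throw new ArgumentException("Inputs must not all be identical.");

        double slope = covariance / variance;
        return new LinearModel { Slope = slope, Intercept = meanY - slope * meanX };
    }

    // "Prediction": apply the fitted line to a new input.
    public double Predict(double input)
    {
        return Slope * input + Intercept;
    }
}

public static class HousePriceDemo
{
    public static void Main()
    {
        // Made-up training data: number of bedrooms -> selling price.
        double[] bedrooms = { 1, 2, 3, 4 };
        double[] prices = { 100000, 150000, 200000, 250000 };

        LinearModel model = LinearModel.Train(bedrooms, prices);
        Console.WriteLine(model.Predict(5)); // prints 300000
    }
}
```

A real model has far more inputs and a more capable algorithm, but the shape is the same: the expensive training step happens ahead of time, and the app only ships the fitted result and calls the prediction step.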

What are some good examples of models?

- Is the picture one of a cat, dog or other animal? This might be useful for a veterinarian, zoologist or other professional.

- In golf, what is the financial effect of attempting a particular shot? Given the standings in a tournament, the golfer’s ability and the current weather, should a golfer try to go after a flag that’s on the right side of a green, given there’s a pond just off to the right? What about if the wind is blowing from the right to the left? Or vice versa? Note: This would be a very complicated model -- I’m just using it as an example in which I’d be interested.

- Given a set of information about someone who’s sick, what would be the best course of treatment for that person? This might be a good model to help a doctor with treatment.

- Given a set of house prices for the last five years, the number of bedrooms, the number of bathrooms and the distance from a town’s center, what’s the projected selling price of a house?

Clearly, there are a lot of potential models. The information about how to create a model is beyond the scope of this article. Thankfully, Apple has created a set of models that developers can use and has provided the ability to import models from other sources. Official documentation gives guidance on getting CoreML models. At this time, CoreML supports Vision for image analysis, Foundation for language processing and GameplayKit for evaluating learned decision trees. Vision, language processing and games make a lot of sense. These are significant mobile problems, so adding them to a machine learning framework initially is logical.

Let’s look at Xamarin’s CoreML and Vision example to determine a number in a picture.

The steps to do image processing with CoreML are:

1. Create a Vision CoreML model. The MNISTClassifier model is loaded and then wrapped in a VNCoreMLModel, which makes it available to the Vision tasks. Two Vision requests are then created. The first finds the rectangles in an image; the second classifies the contents of a rectangle with the CoreML model:

    // Load the ML model
    var assetPath = NSBundle.MainBundle.GetUrlForResource("MNISTClassifier", "mlmodelc");
    var mlModel = MLModel.FromUrl(assetPath, out NSError mlErr);
    var vModel = VNCoreMLModel.FromMLModel(mlModel, out NSError vnErr);

    // Initialize Vision requests
    RectangleRequest = new VNDetectRectanglesRequest(HandleRectangles);
    ClassificationRequest = new VNCoreMLRequest(vModel, HandleClassification);

2. Begin Vision processing. The following code starts processing the request:

    // Run the rectangle detector, which upon completion runs the ML classifier.
    var handler = new VNImageRequestHandler(ciImage,
        uiImage.Orientation.ToCGImagePropertyOrientation(), new VNImageOptions());

    DispatchQueue.DefaultGlobalQueue.DispatchAsync(() => {
        handler.Perform(new VNRequest[] { RectangleRequest }, out NSError error);
    });

3. Handle the results of Vision processing:

    void HandleRectangles(VNRequest request, NSError error) {
        var observations = request.GetResults<VNRectangleObservation>();
        // ... omitted error handling ...
        var detectedRectangle = observations[0]; // first rectangle
        // ... omitted cropping and greyscale conversion ...

        // Run the Core ML MNIST classifier -- results arrive in the HandleClassification method
        var handler = new VNImageRequestHandler(correctedImage, new VNImageOptions());
        DispatchQueue.DefaultGlobalQueue.DispatchAsync(() => {
            handler.Perform(new VNRequest[] { ClassificationRequest }, out NSError err);
        });
    }

4. Handle CoreML results. The request parameter that’s passed to this method contains the details of the CoreML request. By calling GetResults, the list of possible return results is obtained. The results are ordered by confidence from the highest to the lowest:

    void HandleClassification(VNRequest request, NSError error) {
        var observations = request.GetResults<VNClassificationObservation>();
        // ... omitted error handling ...
        var best = observations[0]; // first/best classification result

        // render in UI
        DispatchQueue.MainQueue.DispatchAsync(() => {
            ClassificationLabel.Text = $"Classification: {best.Identifier} Confidence: {best.Confidence * 100f:#.00}%";
        });
    }
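The listing above leaves out its error handling. As one possible way to fill that gap, the following plain C# sketch picks the highest-confidence result and formats the label text while coping with an empty result list. The Classification class is a hypothetical stand-in for VNClassificationObservation so the code can run outside an iOS project, and ClassificationFormatter is an invented helper, not part of the Xamarin sample.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;
using System.Linq;

// Hypothetical stand-in for VNClassificationObservation.
public class Classification
{
    public string Identifier { get; }
    public float Confidence { get; } // 0.0 to 1.0

    public Classification(string identifier, float confidence)
    {
        Identifier = identifier;
        Confidence = confidence;
    }
}

public static class ClassificationFormatter
{
    // Builds the label text, or a fallback message when nothing was classified.
    public static string Describe(IReadOnlyList<Classification> results)
    {
        if (results == null || results.Count == 0)
            return "No classification available";

        // Pick the highest confidence explicitly instead of relying on ordering.
        Classification best = results.OrderByDescending(r => r.Confidence).First();

        // Invariant culture keeps the decimal separator the same on every device.
        return string.Format(CultureInfo.InvariantCulture,
            "Classification: {0} Confidence: {1:0.00}%",
            best.Identifier, best.Confidence * 100f);
    }

    public static void Main()
    {
        var results = new List<Classification>
        {
            new Classification("1", 0.25f),
            new Classification("7", 0.75f)
        };
        Console.WriteLine(Describe(results)); // Classification: 7 Confidence: 75.00%
    }
}
```

The sketch uses the 0.00 format rather than #.00 so that a confidence below 1 percent still shows a leading zero.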

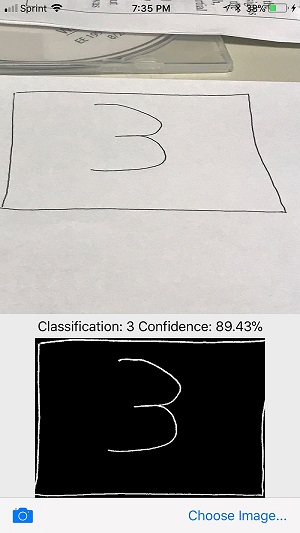

Figure 1 shows the image and the output of an example done with a board in my office.

Figure 1. The Output of the CoreMLVision App from Xamarin.


Note that CoreML is in its infancy. There are going to be many things that it calculates incorrectly early on, and lots of jokes about errors. Over the next few months, I’m convinced that the models and everything else about CoreML will get better.

Wrapping Up

Although iOS is a fairly mature OS, the update from iOS 10 to iOS 11 can be thought of as evolving the product. The new version has lots of little features that can make developers’ lives much happier, and I’ve discussed a few here. Stay tuned, and I might revisit iOS 11 later after more real-world use.

About the Author

Wallace (Wally) B. McClure has authored books on iPhone programming with Mono/Monotouch, Android programming with Mono for Android, application architecture, ADO.NET, SQL Server and AJAX. He's a Microsoft MVP, an ASPInsider and a partner at Scalable Development Inc. He maintains a blog, and can be followed on Twitter.