In-Depth

How to Query JSON Data with SQL Server 2016

- By Dino Esposito

- 01/23/2018

JSON has stolen some of XML's thunder with features such as human and machine readability, a lightweight, compact text structure and support for many software and hardware platforms.

JSON (JavaScript Object Notation) is now the ubiquitous language for moving data among independent and autonomous systems, the primary function of most software these days. JSON is a text-based way to depict the state of an object in order to easily serialize and transfer it across a network from one system to the next -- especially useful in heterogeneous environments.

Because a JSON string equates to a plain text string, SQL Server and any other relational database management system (RDBMS) will let you work with JSON, as they all allow for storing strings, no matter their presentation.

That capability is enhanced in SQL Server 2016, the first-ever version that lets developers query within JSON strings as if the JSON were organized into individual columns. What's more, you can read and save existing tabular data as JSON.

For a structured and comprehensive overview of the JSON functions in SQL Server 2016, read the "JSON Data (SQL Server)" MSDN documentation. Also, the "JSON Support in SQL Server 2016" Redgate Community article provides a more business-oriented view of JSON in SQL Server 2016, along with a scenario-based perspective of the use of JSON data in a relational persistence layer.

The Persistence Layer and JSON Data

Two verbs are key to understanding the purpose of JSON: transmit and serialize. Therefore, JSON is the format in which you lay out the state of a software entity so that it can be transmitted across process spaces with the certainty it'll be well understood on both ends. Great, but here I'm discussing JSON in SQL Server and, hence, in the persistence layer. So, let's start with the base question: When would you save data in SQL Server as JSON?

A relational database table is articulated on a fixed number of columns and each column has its own data type, such as strings of variable or fixed length, dates, numbers, Booleans and the like. JSON is not a native data type. A SQL Server column that contains JSON data from the database perspective is a plain string column. You can write JSON data to a table column as you would write a regular string and you can do that in any versions of SQL Server, as well as in any other RDBMS.

Where do you get the JSON strings you eventually store into a database? There are two main scenarios: First, those strings might come from a Web service or some other form of an external endpoint that transmits data (for example, a connected device or sensor). Second, JSON data might be a convenient way to group together related pieces of information so that they appear as a single data item. This typically happens when you deal with semi-structured data, such as data that represents a business event to store in an event-sourcing scenario or, more simply, in a business context that's inherently event-driven, such as real-time systems for domains such as finance, trading, scoring, monitoring, industrial automation and control, and so on.

In all these cases, your storage can be normalized to a structured form serializing related information variable in length and format in a single data item that would fit in the string column of a relational table.

As mentioned, the JSON content you might persist can come from an external source or can be generated through serialization from instances of C# objects:

foreach (var c in countries)

{

// Serialize the C# object to JSON

var json = JsonConvert.SerializeObject(c)

// Save content to the database

record.JsonColumn = json;

}

You can use Entity Framework (EF), as well, to save JSON data into one column of a database table.

SQL Server 2016 takes this one level further and lets you transform JSON data in table rows. This ability might save a lot of work and CPU cycles off your code as now you can push the raw JSON text to the database without first parsing it to C# objects in the application code and then passing through EF or direct ADO.NET calls. The key to achieve this goal is the new OPENJSON function:

declare @country nvarchar(max) = '{

"id" : 101,

"name": "United States",

"continent": "North America"

}';

INSERT INTO Countries

SELECT * FROM OPENJSON(@country)

WITH (id int,

name nvarchar(100),

continent nvarchar(100))

You can use the function to insert or update regular table rows from plain JSON text. The WITH clause lets you map JSON properties to existing table columns.

Event Sourcing

In my December 2016

MSDN Magazine Cutting Edge column ("

Rewrite a CRUD System with Events and CQRS"), I discussed Event Sourcing as an emerging pattern to store the historical state of the application. Instead of saving the latest-known good state, with Event Sourcing you save every single business event that alters the state and rebuild the latest state replaying the past events.

The crucial aspect of an Event Sourcing implementation is how effectively you can save and retrieve the past events. Every event is different and might have a different schema, depending on the type and information available. At the same time, having a distinct (relational) store for each event type is problematic because events come asynchronously and might affect different entities and different segments of the state. If you keep them in different tables, rebuilding the state might become expensive because of cross-table JOINs. Hence, saving events as objects is the most recommended option and NoSQL stores do the work very well. Is it possible to do Event Sourcing with a relational database instead?

Saving the event as JSON is an option possible on any version of SQL Server, but reading JSON effectively, when large numbers of events are in store, might be unsustainable. With the native JSON features in SQL Server 2016, the landscape changes and using SQL Server in an Event Sourcing scenario becomes realistic. However, how would you query JSON from a database table?

Querying Data from JSON Content

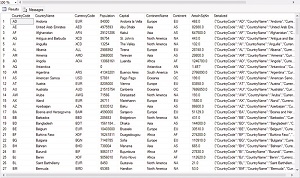

So let's say you managed to have one or more columns of JSON data in a canonical relational table. Therefore, columns with primitive data and columns filled with JSON data live side by side. Unless the new functions of SQL Server 2016 are used, the JSON columns are treated as plain text fields and can be queried only with T-SQL string and text instructions such as LIKE, SUBSTRING and TRIM. For the purpose of the demo, I built a column called Countries -- with a few tabular columns -- and another named Serialized that contains the entire rest of the record serialized as JSON, as shown in

Figure 1.

[Click on image for larger view.]

Figure 1. The Sample Countries Database with a JSON Column

[Click on image for larger view.]

Figure 1. The Sample Countries Database with a JSON Column

The JSON object serialized in the sample table looks like this:

{

"CountryCode":"AD",

"CountryName":"Andorra",

"CurrencyCode":"EUR",

"Population":"84000",

"Capital":"Andorra la Vella",

"ContinentName":"Europe",

"Continent":"EU",

"AreaInSqKm":"468.0",

"Languages":"ca",

"GeonameId":"3041565",

"Cargo":null

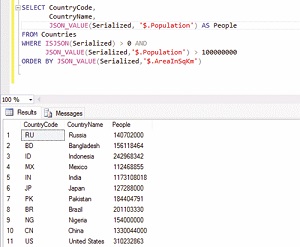

The following T-SQL query shows how to select only the countries that count more than 100 million inhabitants. The query mixes regular table columns and JSON properties:

SELECT CountryCode,

CountryName,

JSON_VALUE(Serialized, '$.Population') AS People

FROM Countries

WHERE ISJSON(Serialized) > 0 AND

JSON_VALUE(Serialized, '$.Population') > 100000000

ORDER BY JSON_VALUE(Serialized, '$.AreaInSqKm')

The JSON_VALUE function takes the name of a JSON column (or a local variable set to a JSON string) and extracts the scalar value following the specified path. As shown in Figure 2, the $ symbol refers to the root of the serialized JSON object.

[Click on image for larger view.]

Figure 2. Results of a JSON Query

[Click on image for larger view.]

Figure 2. Results of a JSON Query

Because the JSON column is configured as a plain NVARCHAR column, you might want to use the ISJSON function to check whether the content of the column is real JSON. The function returns a positive value if the content is JSON.

JSON_VALUE always returns a string of up to 4,000 bytes, regardless of the selected property. If you expect a longer return value, then you should use OPENJSON instead. At any rate, you might want to consider a CAST to get a value of the proper type. Looking back at the previous example, let's say you want the number of people living in a country formatted with commas. (In general, this might not be a good idea because formatting data in the presentation layer gives your code a lot more flexibility.) The SQL FORMAT function expects to receive a number and you receive an error if you pass the direct JSON value. To make it work, you must resort to an explicit CAST:

SELECT CountryCode,

CountryName,

FORMAT(CAST(

JSON_VALUE(Serialized, '$.Population') AS int), 'N0')

AS People

FROM Countries

WHERE ISJSON(Serialized) > 0 AND

JSON_VALUE(Serialized,'$.Population') > 100000000

ORDER BY JSON_VALUE(Serialized, '$.AreaInSqKm')

The JSON_VALUE can only return a single scalar value. If you have an array of a nested object that you want to extract, then you must resort to the JSON_QUERY function.

How effective is it to query over JSON data? Let's do some tests.

Indexing JSON Content

As obvious as it might sound, querying the entire JSON string from the database and then parsing it in memory through a dedicated library such as Newtonsoft JSON, albeit always functional, might not be an effective approach in all cases. Effectiveness mostly depends on the number of records in the database and how long it might really take to get the data you need in the format you need. Probably for a query that your application runs occasionally, in-memory processing of JSON data might still be an option. In general, though, querying through JSON-dedicated functions and letting SQL Server do the parsing internally results in slightly faster code. The difference is even bigger if you add an index on JSON data.

You shouldn't create the index on the JSON column, however, as it would index the JSON value as a single string. You'll hardly be querying for the entire JSON string or a subset of it. More realistically, instead, you'll be querying for the value of a particular property in the serialized JSON object. A more effective approach is creating one or more computed columns based on the value of one or more JSON properties and then indexing those columns. Here's an example in T-SQL:

-- Add a computed column

ALTER TABLE dbo.Countries

ADD JsonPopulation

AS JSON_VALUE(Serialized, '$.Population')

-- Create an index

CREATE INDEX IX_Countries_JsonPopulation

ON dbo.Countries(JsonPopulation)

Again, you should be aware that JSON_VALUE returns NVARCHAR, so unless you add CAST the index will be created on text.Interestingly, JSON parsing is faster than the deserialization of some special types, such as XML and spatial. You can find more information in the MSDN blog post "JSON Parsing – Performance Comparison." In summary, at least JSON parsing is better than fetching properties of other types.

JSON and Entity Framework

As a general remark, the JSON support in SQL Server 2016 is primarily exposed through the T-SQL syntax, as tooling is quite limited now. In particular, EF doesn't currently provide any facilities to query JSON data, except for the SqlQuery method in EF6 and FromSql in EF Core. However, this doesn't mean you can't serialize complex properties of C# classes (say, arrays) into JSON columns. An excellent tutorial for EF Core is "

Entity Framework: Storing Complex Properties as JSON Text in Database."

Wrapping Up

SQL Server 2016 introduces some native JSON capabilities so that you can more effectively query stored JSON data as a canonical rowset. This mostly happens when the JSON data is the serialized version of some semi-structured aggregate of data. Indexes built out of computed columns that reflect that value of one or more JSON properties definitely help improve the performance. JSON data is stored as plain text and isn't considered a special type, such as XML and Spatial.

However, this just enables you to use JSON columns in any SQL Server objects right away. The same can't be said for other complex types such as XML, CLR and Spatial that are still on the waiting list. In this column, I focused on the JSON-to-rowset scenario. However, SQL Server 2016 also fully supports the rowset-to-JSON query scenario when you write a regular T-SQL query and then map results to JSON objects via the FOR JSON clause. For more information on this feature, see "Format Query Results as JSON with FOR JSON (SQL Server)" in the Microsoft documentation.