GitHub Copilot AI Improved, Offered as API: 'A Taste of the Future'

"It will become possible to do more and more sophisticated things with your software just by telling it what to do."

Computer programming is about to change. A lot.

OpenAI has improved the Codex AI system that powers the controversial GitHub Copilot project for VS Code/Visual Studio and is offering its breakthrough natural language coding smarts via an API, claiming it's "a taste of the future."

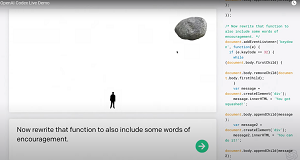

That future involves "programmers" speaking or typing natural language into a console and seeing it instantly transformed into working code, possibly very complicated working code. It works just fine, as the OpenAI team showed in an unrehearsed demo that spun up a fully functioning game (man evades falling boulder) with just normal English language commands. Another demo had Codex look up the current price of Bitcoin and email it to attendees via the Mailchimp API, all done without typing a single line of code.

The OpenAI team didn't know how Codex was going to respond to each spoken command in the demo, but it basically passed each test fine. One amusing anecdote: Codex could come up with its own encouraging text for a game ("You can do it!") and write "Hello World" five times, but when asked to write Hello World with empathy, it promptly complied exactly as instructed, writing: "Hello world with empathy," which prompted some laughter. Apparently some nuances remain uncodeable.

Turning Words into Code (source: OpenAI).

Otherwise, Codex passed all the tests and did what it was asked correctly because it improves upon the previous AI system that powers GitHub Copilot, an AI "pair programmer" that was recently unveiled in preview as a tool for Visual Studio Code and then the Visual Studio IDE.

GitHub Copilot (source: GitHub).

While that previous Codex version could solve 27 percent of benchmark problems, the new model can solve 37 percent. The original GPT-3 model on which Codex is based -- lauded not too long ago for its amazing capabilities -- couldn't solve any. The GitHub Copilot announcement also renewed the existential angst about AI coding replacing professional developers.

Most developers tamped down that angst when GitHub Copilot was introduced, saying Codex wasn't much of a threat, but now they might be changing their minds.

The new advanced capabilities may also draw further attention from organizations such as the Free Software Foundation (FSF), which called GitHub Copilot "unacceptable and unjust," issuing a call for papers to investigate legal, ethical and other implications of the project.

And all of this is kind of by accident, because OpenAI created GPT-3, the model from which Codex descends, as a general-purpose AI language system and found that coding sparked great interest as a use case. Things took off from there, explained co-founder and CTO Greg Brockman in an Aug. 10 video.

"So a year ago we released GPT-3, which is a general-purpose language model," Brockman said. "It could basically do any language task you would ask it. So the thing that was funny for us was to see that the applications that most captured people's imaginations, ones that most inspired people, were the programming applications, because we didn't make that model to be good at coding at all. And so we knew if we put in some effort we could probably make something happen."

Something did happen, and, one day later, that video has already been viewed more than 21,000 times and has generated nearly 190 comments (both numbers climbing fast as I write this). "Exciting to witness a historical advance in AI!" said one comment, while another viewer wondered if Codex could do this command: "Rewrite my whole project and make it 99% faster." Laughable now, that kind of thing could soon come to pass.

"OpenAI Codex is a descendant of GPT-3; its training data contains both natural language and billions of lines of source code from publicly available sources, including code in public GitHub repositories," OpenAI said in an Aug. 10 blog post. "OpenAI Codex is most capable in Python, but it is also proficient in over a dozen languages including JavaScript, Go, Perl, PHP, Ruby, Swift and TypeScript, and even Shell. It has a memory of 14KB for Python code, compared to GPT-3 which has only 4KB -- so it can take into account over 3x as much contextual information while performing any task."
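The "over 3x" figure in that quote can be checked with quick arithmetic. The snippet below is only an illustrative sketch using the two memory sizes OpenAI cites; the variable names are ours, not part of any OpenAI API.

```python
# Context sizes quoted in the OpenAI blog post, in kilobytes.
# Variable names are illustrative and not taken from any OpenAI API.
codex_context_kb = 14  # Codex memory for Python code
gpt3_context_kb = 4    # GPT-3 memory

# How many times more context Codex can take into account.
ratio = codex_context_kb / gpt3_context_kb

print(f"Codex holds {ratio:.1f}x the context of GPT-3")
```

By that measure, 14KB against 4KB works out to 3.5 times the contextual information, consistent with the "over 3x" claim.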

Because OpenAI Codex is a general-purpose programming model, able to be applied to essentially any programming task with varying results, the company has used it for transpilation, explaining code and refactoring code. But OpenAI says it knows it has only scratched the surface in discovering what Codex can do, so it's opening up its tech as an API to let the community experiment and explore what it's capable of.

"We're now making OpenAI Codex available in private beta via our API, and we are aiming to scale up as quickly as we can safely. During the initial period, OpenAI Codex will be offered for free. OpenAI will continue building on the safety groundwork we laid with GPT-3 -- reviewing applications and incrementally scaling them up while working closely with developers to understand the effect of our technologies in the world."

The potential effect of those technologies in the real world is what has many people and organizations such as the FSF up in arms.

Rather than the "Terminator" movie's Skynet-like singularity that will reduce humans to subjects of AI overlords, the FSF is worried about more practical considerations.

"We can see that Copilot's use of freely licensed software has many implications for an incredibly large portion of the free software community," the FSF said. "Developers want to know whether training a neural network on their software can really be considered fair use. Others who may be interested in using Copilot wonder if the code snippets and other elements copied from GitHub-hosted repositories could result in copyright infringement. And even if everything might be legally copacetic, activists wonder if there isn't something fundamentally unfair about a proprietary software company building a service off their work.

"With all these questions, many of them with legal implications that at first glance may have not been previously tested in a court of law, there aren't many simple answers." Thus the call for white papers to explore those implications.

A scholarly paper introducing OpenAI Codex, "Evaluating Large Language Models Trained on Code," acknowledges many of those concerns.

"Codex has the potential to be useful in a range of ways," the paper says. "For example, it could help onboard users to new codebases, reduce context switching for experienced coders, enable non-programmers to write specifications and have Codex draft implementations, and aid in education and exploration. However, Codex also raises significant safety challenges, does not always produce code that is aligned with user intent, and has the potential to be misused."

That aforementioned developer existential angst is also addressed in the paper. In discussing the potential impacts on the developer profession, the paper states:

As with many tools that substitute investments in capital for investments in labor (or increase the productivity of labor) ... more sophisticated future code generation tools could potentially contribute to the displacement of some programmer or engineer roles, and could change the nature of, and power dynamics involved in, programming work. However, they might instead simply make the work of some engineers more efficient, or, if used to produce larger amounts of sloppier code, they could create the illusion of increased efficiency while offloading the time spent writing code to more detailed code reviews and QA testing.

So no one knows the final implications of Codex in the tech world and the world beyond, but the possibilities seem endless.

For example, another demo in the video had Codex working with text in a Microsoft Word app via Word's JavaScript API. "We send whatever request is put here to the API, and it generates actual code in the Microsoft Word API," explained Brockman.

Ilya Sutskever, co-founder and chief scientist at OpenAI, responded: "And what you see here is a taste of the future. As the model gets really good, as the neural network gets really good at turning instructions to correct API calls, it will become possible to do more and more sophisticated things with your software just by telling it what to do."

To help discover those more sophisticated things, developers can apply to join the OpenAI Codex API waitlist.

About the Author

David Ramel is an editor and writer at Converge 360.