Wrangling Codex, Getting Paid for Prompt Engineering and 'New Bing' Epic Fail

Prompt engineering has recently been popularized by the advent of cutting-edge generative AI systems based on the GPT-3 series of large language models (LLMs) created by Microsoft partner OpenAI.

Those include ChatGPT (an advanced chatbot using natural language processing, or NLP), Codex (a GPT-3 descendant optimized for coding by being trained on both natural language and billions of lines of code) and DALL-E 2 (an AI system that can create realistic images and art from a natural language description).

The explosion in advanced AI capabilities has especially shaken up the software development space, leading to more than 400 AI-infused tools in the Visual Studio Code Marketplace.

Codex is the LLM of most interest to Visual Studio Magazine readers, as it powers the GitHub Copilot "AI pair programmer" tool that can be used in Microsoft's flagship Visual Studio IDE and the open-source-based, cross-platform VS Code editor.

Wrangling Codex

To better wrangle Codex and customize it for specific use cases, Microsoft has published an article explaining "How to get Codex to produce the code you want!"

"Prompt engineering is the practice of using prompts to get the output you want," Microsoft explains. "A prompt is a sequence of text like a sentence or a block of code. The practice of using prompts to elicit output originates with people. Just as you can prompt people with things like a topic for writing an essay, amazingly you can use prompts to elicit an AI model to generate target output based on a task that you have in mind."

The article is a companion piece to the company's Prompt Engine GitHub repo that contains an NPM utility library for creating and maintaining prompts for LLMs. That repo says: "LLMs like GPT-3 and Codex have continued to push the bounds of what AI is capable of -- they can capably generate language and code, but are also capable of emergent behavior like question answering, summarization, classification and dialog. One of the best techniques for enabling specific behavior out of LLMs is called prompt engineering -- crafting inputs that coax the model to produce certain kinds of outputs. Few-shot prompting is the discipline of giving examples of inputs and outputs, such that the model has a reference for the type of output you're looking for."
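As a rough illustration of the few-shot idea the repo describes, here is a minimal Python sketch that formats a handful of input/output pairs and leaves the last output blank for the model to fill in. The function name `build_few_shot_prompt`, the `Input:`/`Output:` labels and the sample shell commands are assumptions made for this example; it does not use or mirror the Prompt Engine library's API.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format input/output pairs, then leave the final output open for the model."""
    blocks = [f"Input: {source}\nOutput: {target}" for source, target in examples]
    # The last block has no output, so the model is nudged to continue the pattern.
    blocks.append(f"Input: {query}\nOutput:")
    return "\n\n".join(blocks)


# Hypothetical examples: natural language requests paired with shell commands.
examples = [
    ("list files in the current directory", "ls"),
    ("show the current working directory", "pwd"),
]
prompt = build_few_shot_prompt(examples, "print the first ten lines of log.txt")
print(prompt)
```

The resulting string would be sent to the model as-is; how many examples are needed is something to find by experiment.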

Codex Best Practices

Microsoft lists many Codex best practices, including these examples:

- Start with a comment, data or code. You can experiment using one of the Codex models in our playground (styling instructions as comments when needed). To get Codex to create a useful completion, it's helpful to think about what information a programmer would need to perform a task. This could simply be a clear comment or the data needed to write a useful function, like the names of variables or what class a function handles.

- Specify the language. Codex understands dozens of different programming languages. Many share similar conventions for comments, functions and other programming syntax. By specifying the language and what version in a comment, Codex is better able to provide a completion for what you want. That said, Codex is fairly flexible with style and syntax.

- Specifying libraries will help Codex understand what you want. Codex is aware of a large number of libraries, APIs and modules. By telling Codex which ones to use, either from a comment or importing them into your code, Codex will make suggestions based upon them instead of alternatives.
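Those three practices can be combined in a single prompt. The sketch below, which is only an illustration, builds a Python source prefix that names the language and version in a comment, imports the libraries the completion should use, and ends with a comment describing the task. The helper name `build_python_prompt`, the file name `sales.csv` and the `amount` column are hypothetical.

```python
from typing import Sequence


def build_python_prompt(version: str, libraries: Sequence[str], task: str) -> str:
    """Return a Python source prefix for a code model to complete."""
    # Name the language and version in a leading comment.
    lines = [f"# Language: Python {version}"]
    # Importing the libraries steers completions toward them rather than alternatives.
    lines += [f"import {name}" for name in libraries]
    # Finish with a clear comment that states the task.
    lines += ["", f"# {task}"]
    return "\n".join(lines) + "\n"


prompt = build_python_prompt(
    "3.11",
    ["csv", "statistics"],
    "Read sales.csv and return the mean of the 'amount' column.",
)
print(prompt)
```

The model would be expected to continue from the final comment, typically by writing a function that uses the imported modules.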

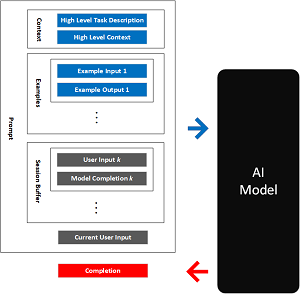

For a given application, Microsoft says, developers can provide Codex with a prompt consisting of:

- High-level task description: Tell the model to use a helpful tone when outputting natural language

- High-level context: Describe background information like API hints and database schema to help the model understand the task

- Examples: Show the model examples of what you want

- User input: Remind the model what the user has said before

Codex Prompt (source: Microsoft).
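One way to picture that four-part structure is as a small data class whose fields are joined into a single prompt string. This is a sketch under stated assumptions, not Microsoft's implementation: the names `PromptParts` and `render`, the `User:`/`Model:` labels, the SQL task and the `orders` schema are all invented for illustration, and earlier user input is represented here as prior question/answer turns.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptParts:
    description: str  # high-level task description
    context: str      # background such as API hints or a database schema
    examples: List[Tuple[str, str]] = field(default_factory=list)  # sample turns
    history: List[Tuple[str, str]] = field(default_factory=list)   # earlier turns


def render(parts: PromptParts, user_input: str) -> str:
    """Join description, context, examples and history, then the new request."""
    sections = [parts.description, parts.context]
    sections += [f"User: {q}\nModel: {a}" for q, a in parts.examples]
    sections += [f"User: {q}\nModel: {a}" for q, a in parts.history]
    # The final turn is left open so the model supplies the answer.
    sections.append(f"User: {user_input}\nModel:")
    return "\n\n".join(sections)


parts = PromptParts(
    description="Translate requests into SQL. Use a helpful tone in any explanation.",
    context="Schema: orders(id, customer_id, total, created_at)",
    examples=[("How many orders are there?", "SELECT COUNT(*) FROM orders;")],
    history=[("What is the largest order total?", "SELECT MAX(total) FROM orders;")],
)
prompt = render(parts, "And the smallest?")
print(prompt)
```

Keeping the parts separate makes it easier to swap examples or trim old history when a prompt grows too long for the model.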

Get Paid!

While all of that is well and good, learning how to provide good LLM prompts might also lead to a lucrative career (until OpenAI creates an LLM to provide prompts for LLMs) in the emerging field of prompt engineering, with high-paying jobs for prompt engineers.

"In prompt engineering, the description of the task is embedded in the input, e.g., as a question instead of it being implicitly given," says Wikipedia. "Prompt engineering typically works by converting one or more tasks to a prompt-based dataset and training a language model with what has been called 'prompt-based learning' or just 'prompt learning'."

For one example of those high-paying jobs, there's an "ML Engineer" job being advertised right now on the Indeed careers site with an annual salary range topping out at $230,000 for someone to prompt, fine-tune and chat with LLMs.

While the $230,000 salary apparently is a top-range outlier (coming from an NLP contract review specialist seeking a "brilliant" engineer), it speaks to the high demand for LLM NLP expertise, exemplified by many other jobs advertised on Indeed and several other such sites.

For example, the description for an "AI Prompt Engineer" with a health-care focus advertised on Indeed says the position will be responsible for the following:

- Design and develop AI prompts using large language models (e.g., GPT-3, ChatGPT) and other solutions as they emerge for healthcare research studies and clinical practice

- Collaborate with researchers and clinicians to understand their needs and design prompts that will effectively gather data.

- Implement machine learning models to analyze collected data.

- Refine general purpose language models for healthcare specific applications.

- Test and evaluate the performance of AI prompts.

- Troubleshoot and resolve technical issues related to AI prompts, and solve complex problems with multi-layered data sets, as well as optimizing existing machine learning libraries and frameworks.

- Assist other team members in generating AI prompts for their specific needs.

- Stay current with the latest developments in AI and healthcare research. Document ML processes and keep abreast of developments in Machine Learning.

- Participate in team meetings and presentations

- Run tests, perform statistical analysis, and interpret test results.

The salary range for that position tops out at $98,100 per year.

But there's an ad for a "Prompt Engineer and Librarian" on another site with an even higher salary range, $175,000 - $335,000.

Asking ChatGPT and the New Bing

ChatGPT, with a knowledge cut-off date of September 2021, was unaware of the "prompt engineering" field, but tried to be helpful by offering up this:

In the context of machine learning, prompts are often used to fine-tune or "prompt" language models to perform specific tasks or generate specific outputs. This can involve designing prompts that elicit desired responses or behaviors from the model, as well as optimizing prompts to achieve better performance or efficiency.

A prompt engineer working with machine learning large language models may be responsible for tasks such as:

- Designing and testing prompts for specific tasks or applications, such as language translation, text completion, or question answering.

- Optimizing prompts to improve model performance, such as by adjusting prompt length, structure, or wording.

- Developing tools or frameworks to automate the prompt generation process, allowing for faster and more efficient prompt design and testing.

- Collaborating with data scientists, machine learning engineers, and other stakeholders to understand requirements and constraints for prompt design and optimization.

- Staying up-to-date with the latest research and developments in the field of machine learning models and prompt engineering, and integrating these into their work as appropriate.

And Microsoft's "new Bing" search engine experience, which borrows the Copilot metaphor, said the following when asked about job descriptions for a prompt engineer:

A prompt engineer is a professional who works on developing and refining artificial intelligence (AI) models using prompt engineering techniques(1). Prompt engineering involves designing and crafting natural language prompts that are used to generate responses from AI models such as language models or chatbots(2). A prompt engineer's job is to identify the AI's errors and hidden capabilities so developers can address them(3)(4).

Some of the tasks that a prompt engineer may perform are:

- Converting one or more tasks to a prompt-based dataset and training a language model with what has been called "prompt-based learning" or just "prompt learning"(2).

- Developing models to improve the prompts that are input into generative models, and models that incorporate and learn from human feedback(5).

- Testing AI chatbots with different types of prose to find out their strengths and weaknesses(3)(4). [Editor: Notice that those two supposedly different resource links point to the same resource]

- Responding in a courteous and prompt manner to all guest questions and/or concerns (if working in a hospitality domain)(6).

The new Bing is running on a new, next-generation OpenAI large language model that is more powerful than ChatGPT and customized specifically for search, according to Microsoft.

About That New Bing Epic Fail

However, like all these LLMs, it still has a few kinks to work out and issues to resolve. Listing two supposedly different resources for a response is one minor example of that. For another example, when asked about the first reference to "prompt engineering" to appear on the internet, Bing pointed to the above-linked Wikipedia entry and a BusinessSolution.org web page titled "Prompt Engineering: The Ultimate Guide 2023 [GPT-3 & ChatGPT]."

Listing those links as resources, Bing said: "According to some web sources, the term 'prompt engineering' was first coined by Microsoft researchers in 2022 in their paper 'Prompt Engineering: A New Paradigm for Natural Language Processing.'"

But when asked to provide a link to that paper, it came up empty.

We also couldn't find it anywhere, including the Wikipedia entry and the BusinessSolution.org web page used as the basis of its response.

We even asked our resident data scientist, Dr. James McCaffrey of Microsoft Research (author of our Data Science Lab articles), who searched internal sources and couldn't find the paper "or anything really close." Seeing as how he works at Microsoft Research and the paper was purportedly published by Microsoft researchers, if he can't find the paper, no one can.

So that new Bing response seems to have been made up out of whole cloth.

Maybe the new Bing needs better prompt engineers.

About the Author

David Ramel is an editor and writer at Converge 360.