In-Depth

Using Copilot AI to Call OpenAI APIs from Visual Studio 2022

Can advanced AI in Visual Studio 2022 turn the sophisticated IDE into a replacement for low-code tools, one that non-coders can use to create business apps?

Low-code tools target "citizen developer" types who can't code much but can use wizards, drag-and-drop and other techniques to create business apps.

Some say advanced AI like GitHub Copilot and GitHub Copilot Chat frees low-code devs from functionality constraints, letting them use more robust development tooling like Visual Studio to create more performant apps.

I proved that notion for one specific use case in "Use AI to Quickly Spin Up a Data-Driven WinForms Desktop App."

Now I'm taking it a step further, exploring the use case of accessing OpenAI API endpoints from Visual Studio 2022 to infuse AI capabilities into business .NET apps.

To do this, you need to pay OpenAI for access to its APIs.

I thought there would be an official, ready-made SDK or library or something from Microsoft to work with OpenAI APIs from within Visual Studio, given that the companies are close partners and Microsoft has invested more than $10 billion in OpenAI. There isn't.

In fact, using Visual Studio doesn't seem to be a thing among OpenAI developers. The OpenAI developer forum has scant mentions of Visual Studio but many of VS Code, thanks to its Python extensions.

Microsoft's "Get started with OpenAI in .NET" guidance from April 2023 explains that OpenAI models like GPT-4 are accessible via REST APIs and libraries. For the latter, the company recommends the Azure OpenAI .NET SDK.

However, that requires applying for access to the Azure OpenAI Service, which I did. But Microsoft seems to vet those applications stringently, asking for a Microsoft contact and so on, so I'm not counting on getting access anytime soon, or at all.

Though I expected to find official, plug-in-ready .NET/OpenAI SDKs or libraries from Microsoft for Visual Studio and found none, I did find several third-party offerings that seek to fill that gap. I tried one, OpenAI.Net, and it looked promising until its example code returned an error from OpenAI that referenced a deprecated model, text-davinci-003.

That reference seems to be baked into the library, as I could find no way to specify a viable model. There may well be some way to do that by digging deep into the repo code, but that's not something a citizen developer should have to worry about.

In fact, many tutorials and much of the other guidance I examined were outdated because things are changing so fast in the AI space (duh!).

Following that deprecation problem with OpenAI.Net, I didn't even bother with other options like OpenAI-API-dotnet, OpenAiNg, Betalgo.OpenAI or OpenAI-DotNet, about which OpenAI says, "Use them at your own risk!" because it doesn't verify their correctness or security.

That left me with Microsoft's second alternative to its recommended, access-controlled Azure option: the REST APIs.

First, I wanted to establish that I could access OpenAI API endpoints. I thought my ChatGPT Plus subscription, which can generate secret API keys, would work. It doesn't.

I learned API access and ChatGPT access are two completely different things, billed separately. So why the ChatGPT Plus secret API keys? Authentication.

Anyway, I had to give more money to OpenAI to test their APIs. So there's your first citizen developer tip: the ChatGPT Plus secret API key doesn't let you access APIs.

OpenAI's Developer Quickstart shows three ways to access its APIs: curl, Python or Node.js. There's no mention of .NET or C# whatsoever (I guess a $10 billion-plus investment only goes so far).

I tried OpenAI's example Node.js code to make an API request, which involved setting up an openai-test.js file and calling it from the command line. I used the exact sample code and exact command and got this error: "Cannot use import statement outside a module."

Then I tried the curl option because OpenAI said "curl is a popular command line tool used by developers to send HTTP requests to API's [sic]. It requires minimal set up time but is less capable than a fully featured programming language like Python or JavaScript."

That sounded great for a citizen developer. It also didn't work.

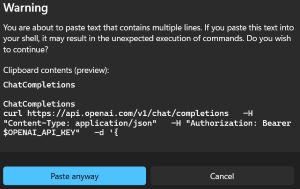

I used the exact code provided by OpenAI and tried to run it from the command line, receiving a pop-up warning that the multi-line input might result in the unexpected execution of commands.

[Click on image for larger view.] Curl Warning for OpenAI's Example Code (source: Ramel).

[Click on image for larger view.] Curl Warning for OpenAI's Example Code (source: Ramel).

It did.

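I condensed the command to one line and got an error message that no API key was provided, even though the curl command referenced it as $OPENAI_API_KEY, the Windows environment variable I had set up per OpenAI's instructions. (That $VARIABLE syntax is Bash-style; the Windows Command Prompt expands %OPENAI_API_KEY% instead, and PowerShell uses $env:OPENAI_API_KEY, which would likely explain the missing key.)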

So I provided the secret key directly in the code, which you aren't supposed to do, resulting in an error message about not using HTTP properly. Again, this was OpenAI's exact example code.

The Windows Command Prompt supposedly treats a ^ character at the end of each line as a line continuation, so a multi-line curl command is read as one line (PowerShell uses a backtick instead), but that didn't work for me when Copilot tried it.

I eventually found that Git Bash, once installed, handled the multi-line curl command admirably:

Git Bash to the Rescue (source: Ramel).

With its Node.js example failing miserably and its curl example not working without installing more software, I didn't even try OpenAI's Python option; I know a tiny bit of C#, but no Python. Clearly C# just isn't a first-class citizen in the OpenAI dev space, which may explain why VS Code and its super-popular Python extensions are used so much more than Visual Studio.

I wonder what more than $10 billion gets you nowadays, if not good support for the investor's flagship programming language?

Anyway, having finally called an OpenAI API endpoint and received chatty responses back, I tested the REST approach in a Visual Studio 2022 console application.

That involved using Microsoft Copilot on the web, along with GitHub Copilot and GitHub Copilot Chat from within the IDE.

First, I explained what I was doing to Microsoft Copilot on the web and asked how to proceed. It provided some Program.cs code to start with, and from then on it was just a matter of playing Whack-an-Error with an AI hammer. In retrospect, it might have been better to ask Copilot Chat in Visual Studio.

The process devolved into repeated brute-force, trial-and-error cycles in which I would run the code, get a new error and use Copilot Chat, with its slash commands and # references, to fix things.

Sometimes Copilot would just run in circles, fixing one error while introducing a new one, then fixing that one by reintroducing the old error, and so on.

I expected Copilot Chat to have thorough knowledge of my codebase. It didn't. Once it said, "I apologize for the confusion. Without seeing your current code, I can only provide a general example."

Why it couldn't "see" my current code is unknown. I was using the # reference mechanism to point Copilot Chat at specific code, so who knows?

On the other hand, the AI surprised me with its ability to peek into other repos and understand their code. I asked Copilot Chat about the deprecated OpenAI model when I tried to use the OpenAI.Net SDK. It said the SDK might not have a direct way to specify the model in the TextCompletion.Get method. I provided the SDK's URL and asked it to examine the SDK to find out, and it said:

I've examined the Newtonsoft.Json SDK at the provided GitHub link. Unfortunately, it appears that this SDK does not currently support specifying the model for text completions. The TextCompletion.Get method does not have a parameter for the model, and there is no other method or property that allows setting the model.

As a workaround, you can use the OpenAI API directly with the HttpClient class provided by .NET. This would give you full control over the API request and allow you to specify the model.

That, of course, is what I ended up doing in two console apps that used HttpClient, one with Newtonsoft.Json and one with System.Text.Json (coincidentally, Microsoft last month published "Migrate from Newtonsoft.Json to System.Text.Json").

The first console app, which used Newtonsoft.Json, had much less Program.cs code than the System.Text.Json version, though that's mostly because it simply prints the raw JSON response rather than deserializing it into typed C# classes:

Newtonsoft.Json App Code

```csharp
using System;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;
using Newtonsoft.Json;

class Program
{
    static async Task Main(string[] args)
    {
        var openAiApiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY"); // Get the API key from environment variables

        try
        {
            string prompt = "How long until we reach mars?";
            string model = "gpt-3.5-turbo"; // Specify the model here
            int maxTokens = 60;

            // Anonymous object that serializes to the Chat Completions request body
            var completionRequest = new
            {
                model = model,
                messages = new[]
                {
                    new { role = "system", content = "You are a helpful assistant." },
                    new { role = "user", content = prompt }
                },
                max_tokens = maxTokens
            };

            var json = JsonConvert.SerializeObject(completionRequest);
            var data = new StringContent(json, Encoding.UTF8, "application/json");

            using var client = new HttpClient();
            client.DefaultRequestHeaders.Add("Authorization", $"Bearer {openAiApiKey}");

            var response = await client.PostAsync("https://api.openai.com/v1/chat/completions", data);

            // Print the raw JSON body (including any error payload) without deserializing it
            var result = await response.Content.ReadAsStringAsync();

            Console.WriteLine("Generated text:");
            Console.WriteLine(result);
        }
        catch (Exception ex)
        {
            Console.WriteLine($"Error: {ex.Message}");
        }
    }
}
```

The System.Text.Json version, by contrast, checks the HTTP status code and maps the response onto C# classes:

System.Text.Json App Code

```csharp
using System;
using System.Linq;
using System.Net.Http;
using System.Net.Http.Json;
using System.Text;
using System.Text.Json;
using System.Text.Json.Serialization;
using System.Threading.Tasks;

public class Program
{
    public static async Task Main(string[] args)
    {
        var openAiApiKey = Environment.GetEnvironmentVariable("OPENAI_API_KEY");

        if (string.IsNullOrEmpty(openAiApiKey))
        {
            Console.WriteLine("API key not found in environment variables.");
            return;
        }

        var model = "gpt-3.5-turbo"; // Replace with your desired model
        var maxTokens = 50; // Replace with your desired max tokens

        using var httpClient = new HttpClient();
        httpClient.DefaultRequestHeaders.Add("Authorization", $"Bearer {openAiApiKey}");

        var chatRequest = new
        {
            model = model,
            messages = new[]
            {
                // The story prompt is sent as a system message here; "user" is the more usual role for a prompt
                new { role = "system", content = "Once upon a time" }
            },
            max_tokens = maxTokens
        };

        var content = new StringContent(JsonSerializer.Serialize(chatRequest), Encoding.UTF8, "application/json");
        var response = await httpClient.PostAsync("https://api.openai.com/v1/chat/completions", content);

        if (response.IsSuccessStatusCode)
        {
            // ReadFromJsonAsync uses web defaults: case-insensitive, camelCase property matching
            var chatResponse = await response.Content.ReadFromJsonAsync<ChatResponse>();
            ProcessChatResponse(chatResponse!); // Use the null-forgiving post-fix to suppress the warning
        }
        else
        {
            Console.WriteLine($"Error: {response.StatusCode}");
        }
    }

    static void ProcessChatResponse(ChatResponse chatResponse)
    {
        if (chatResponse?.Choices != null && chatResponse.Choices.Any())
        {
            var message = chatResponse.Choices[0].Message;

            if (message != null)
            {
                Console.WriteLine("Generated text:");
                Console.WriteLine(message.Content);
            }
            else
            {
                Console.WriteLine("No message content found in the response.");
            }
        }
        else
        {
            Console.WriteLine("No choices found in the response.");
        }
    }
}

public class ChatResponse
{
    public string? Id { get; set; }
    public string? Object { get; set; }
    public long Created { get; set; } // Unix timestamp in seconds
    public string? Model { get; set; }
    public Choice[]? Choices { get; set; }

    // snake_case JSON names need explicit mapping; case-insensitive matching alone won't bridge the underscore
    [JsonPropertyName("system_fingerprint")]
    public string? SystemFingerprint { get; set; }

    [JsonPropertyName("usage")]
    public Usage? TokenUsage { get; set; }

    public class Usage
    {
        [JsonPropertyName("prompt_tokens")]
        public int PromptTokens { get; set; }

        [JsonPropertyName("completion_tokens")]
        public int CompletionTokens { get; set; }

        [JsonPropertyName("total_tokens")]
        public int TotalTokens { get; set; }
    }

    public class Choice
    {
        public int Index { get; set; }
        public Message? Message { get; set; }
        public object? Logprobs { get; set; } // Assuming Logprobs is not used, set it as object

        [JsonPropertyName("finish_reason")]
        public string? FinishReason { get; set; }
    }

    public class Message
    {
        public string? Role { get; set; }
        public string? Content { get; set; }
    }
}
```
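Typed classes are one option. If all you want is the generated text, for example to tidy up the Newtonsoft app's raw output, a lighter approach is to pull `choices[0].message.content` straight out of the JSON with System.Text.Json's `JsonDocument`. The following is a minimal sketch assuming the Chat Completions response shape reflected in the classes above; the `ReplyExtractor.ExtractReply` helper and the sample JSON are hypothetical, written for illustration rather than captured from an actual API call.

```csharp
using System;
using System.Text.Json;

// Hypothetical sample response, trimmed to the fields this sketch reads.
string sampleJson = @"{
  ""object"": ""chat.completion"",
  ""choices"": [
    {
      ""index"": 0,
      ""message"": { ""role"": ""assistant"", ""content"": ""Hello from the model."" },
      ""finish_reason"": ""stop""
    }
  ]
}";

Console.WriteLine(ReplyExtractor.ExtractReply(sampleJson) ?? "(no reply found)"); // prints: Hello from the model.

static class ReplyExtractor
{
    // Returns choices[0].message.content, or null if any step of that path is missing
    // (for example, when the API returns an error payload instead of choices).
    public static string? ExtractReply(string json)
    {
        using JsonDocument doc = JsonDocument.Parse(json);
        JsonElement root = doc.RootElement;

        if (root.ValueKind != JsonValueKind.Object
            || !root.TryGetProperty("choices", out JsonElement choices)
            || choices.ValueKind != JsonValueKind.Array
            || choices.GetArrayLength() == 0)
        {
            return null;
        }

        JsonElement first = choices[0];
        if (first.ValueKind == JsonValueKind.Object
            && first.TryGetProperty("message", out JsonElement message)
            && message.ValueKind == JsonValueKind.Object
            && message.TryGetProperty("content", out JsonElement content)
            && content.ValueKind == JsonValueKind.String)
        {
            return content.GetString();
        }

        return null;
    }
}
```

Because it never binds to C# property names, this approach sidesteps the snake_case mapping issue entirely, at the cost of losing the compile-time shape the classes provide.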

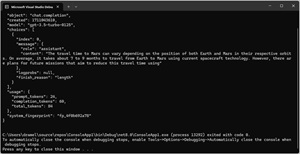

The Newtonsoft app's prompt was "How long until we reach mars?" resulting in this API response:

[Click on image for larger view.] Newtonsoft JSON App (source: Ramel).

[Click on image for larger view.] Newtonsoft JSON App (source: Ramel).

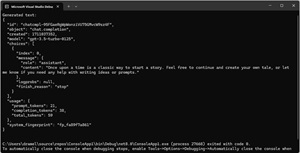

The System.Text.Json app's prompt was "Once upon a time" and resulted in this response:

[Click on image for larger view.] System JSON App (source: Ramel).

[Click on image for larger view.] System JSON App (source: Ramel).

As is common with LLMs, the responses varied from one API call to the next, though they often used similar names and themes. Coincidentally, when I submitted the story prompt to ChatGPT Plus, it just kept churning away until its creation neared novelette length, strangely using the same character name that had appeared in one of the API responses.
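That variation comes from sampling: the Chat Completions endpoint accepts an optional `temperature` parameter (0 to 2) that controls how random the output is, and lowering it should make responses more consistent from call to call, though not necessarily identical. Here's a minimal sketch, not taken from the apps above, that adds `temperature` to the same kind of anonymous request object and serializes it with System.Text.Json. The `RequestBuilder.Build` helper is hypothetical, the 0.2 value is an arbitrary illustration, and the prompt goes in a `user` message rather than the `system` message the System.Text.Json app used.

```csharp
using System;
using System.Text.Json;

// Build a request body with a low temperature and print the JSON that would be POSTed.
Console.WriteLine(RequestBuilder.Build("gpt-3.5-turbo", "Once upon a time", 50, 0.2));

static class RequestBuilder
{
    // Hypothetical helper: serializes a Chat Completions request body.
    // temperature: lower values make sampling less random (the API accepts 0 to 2).
    public static string Build(string model, string prompt, int maxTokens, double temperature)
    {
        var request = new
        {
            model,
            messages = new[]
            {
                new { role = "user", content = prompt }
            },
            max_tokens = maxTokens,
            temperature
        };

        // Default options keep the property names exactly as declared (model, max_tokens, ...).
        return JsonSerializer.Serialize(request);
    }
}
```

The resulting string can be wrapped in `StringContent` and posted exactly as in the console apps above.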

It would take a lot more work to create a full-blown chatbot, perhaps too much work for a "citizen developer," even one armed with AI. That said, my proof-of-concept shows how to take the first step of accessing OpenAI API endpoints from Visual Studio 2022, aided by AI tools.
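Part of that extra work is that each Chat Completions call is stateless: the API doesn't remember earlier exchanges, so a chatbot has to resend the running conversation in the `messages` array on every request. Below is a minimal sketch of that bookkeeping, assuming the same request shape used in the console apps above. The `ChatHistory` and `ChatTurn` types are hypothetical names, and the assistant reply is hard-coded here rather than fetched from the API.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;
using System.Text.Json.Serialization;

var history = new ChatHistory("You are a helpful assistant.");
history.AddUser("How long until we reach mars?");
// In a real app, this would be choices[0].message.content from the previous API response.
history.AddAssistant("(previous assistant reply)");
history.AddUser("How long would the trip itself take?");

// The next request carries all four messages, not just the latest question.
Console.WriteLine(history.ToRequestJson("gpt-3.5-turbo", 60));

// Hypothetical helper that accumulates a conversation for the stateless API.
class ChatHistory
{
    private readonly List<ChatTurn> _messages = new();

    public ChatHistory(string systemPrompt) => _messages.Add(new ChatTurn("system", systemPrompt));

    public int Count => _messages.Count;

    public void AddUser(string text) => _messages.Add(new ChatTurn("user", text));

    public void AddAssistant(string text) => _messages.Add(new ChatTurn("assistant", text));

    // Serializes the whole conversation into a Chat Completions request body.
    public string ToRequestJson(string model, int maxTokens) =>
        JsonSerializer.Serialize(new { model, messages = _messages, max_tokens = maxTokens });
}

// One message; the attributes give the lowercase field names the API expects.
record ChatTurn(
    [property: JsonPropertyName("role")] string Role,
    [property: JsonPropertyName("content")] string Content);
```

Because the whole history is resent each turn, a real chatbot would also need to trim or summarize old messages eventually, since every message counts against the model's token limits.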

The next steps for a business app might be inclusion of text fields and buttons and other doodads in a WinForms or web or .NET MAUI app to make it all pretty and user-friendly, letting users submit text prompts to advanced GPTs and get replies back in turn. But then there would be authentication and security and privacy and compliance and other concerns to worry about.

So, in conclusion, it's possible to access OpenAI API endpoints from Visual Studio 2022, but it's not dead simple for "ordinary business users" or "citizen developers" armed with a smattering of coding knowledge.

With AI advances coming at a stunning pace, that might change soon, making low-code a relic of the past. Or the way of the future, obviating tools like Visual Studio. Or something between the two.

Interesting times indeed.