Agile Advisor

When Going Agile, Feast on Feedback

How to get that all-important feedback needed to make a project successful.

Effective software developers solicit and respond to feedback about software while creating it. Software shaped by feedback is invariably better received, simpler to use and ultimately fares better in app store rankings. Deep insights may be earned simply by showing your work, talking to users or measuring what happens with software when it's actually used.

Feedback is fundamental in yielding well-designed products from creative processes. Agile methods, like Scrum, exploit this by requiring feedback loops frequently throughout product development. Informing decisions with feedback is so necessary to making well-designed software that in my most recent column, I proposed "Soliciting and Responding to Feedback" as one of the three pillars of a new, simpler agility.

The faster feedback loops execute, the quicker we gain insight. Test-Driven Development (TDD) is an engineering feedback loop developers know well. A lesser-known bit of instant feedback, enabled by Visual Studio's IntelliSense, is also powerful: the red squiggle. Finding and fixing those squiggles may be the most frequently encountered code feedback loop in a developer's life: mistyping a line in code, spotting the squiggle and fixing the error is so common most of us don't notice it. In fact, its ease is what makes it so effective.

The Demo

Another popular feedback method in the tech industry is the demo. Demos are so effective that they're baked right into Scrum's Sprint Review. They're also common at Microsoft, where we use them as status updates for management. Every member of a software development team, regardless of role, should be able to demo his or her product with little notice.

Demos give the viewer something to consider beyond static mockups; they show software's behavior. Instead of representing an idea in the form of a drawing, model, slide or specification, a demo shows actual working software. Often, the most effective type of feedback is that which comes from working demos.

A typical demo is straightforward, and the best ones tell a simple story. A demo for a to-do list tool might go like this:

- Debbie Developer is trying to plan her week. Using Acme's To-Do List tool, she can quickly list and easily record all the things she needs to accomplish, like this (make a short to-do list).

- After listing her goals, Debbie can tag them, like this (tag the items).

- Finally, she can see when things are due by sorting via the due date (sort by due date).

- Debbie is ready to dive into her work week.

Whether formal or informal, demos provide an effective, targeted and usually inexpensive method of getting valuable feedback on software. Anyone constructing software should be able to demo it on demand. There's no substitute for practice to ensure things go smoothly.

User Studies

An increasingly popular technique for understanding a user's interpretation of software is a User Study. In a typical Visual Studio User Study, developers are invited in to see mockups of a new idea, play with a recently implemented feature or prototype, or solve a problem. Sometimes the entire development team observes the user via video or a one-way mirror. It's humbling when a capable power user just can't see how to take the next logical step, but each time this happens, it results in an improved product.

I often get backlog-changing insights when users give voice to their thoughts as they work through a problem, when I see them struggle with a user interface, or when someone entirely misinterprets the intent of a given feature. Visual Studio teams host User Studies during envisioning, planning, and construction to iteratively mold software to our users' needs and expectations.

Hosting an effective User Study takes preparation and practice. To increase transparency in the discussions, care must be taken to create a safe and comfortable environment. Interviewers must ask open-ended, non-leading questions, like:

- What do you think should happen next?

- What are you considering now?

- How could this experience be improved?

- Why did you make that choice?

- What did that just do?

Interviewers can, knowingly or unknowingly, bias a subject's thinking and answers by providing leading information or asking closed-ended questions. This is particularly common with interviewers new to User Study techniques. Biased interview results are simply incorrect data, and can be deadly to your product.

It's important to remember that although you get rich and detailed information from User Studies and demos, their reach is fairly limited. Collecting feedback with a larger sample size is where things start to get interesting, and baking feedback features into the product is a great way to start.

Feedback Features

A simple idea that has become increasingly sophisticated over time is providing a feedback mechanism right inside the software. Web sites typically provide e-mail contact forms, perhaps with a structured subject line that helps route your request or feedback to the appropriate recipient.

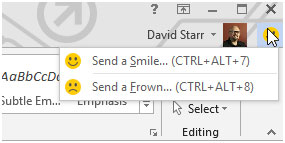

With a bit more consideration, designers can make it not only easy, but enjoyable for customers to voice their ideas, frustrations, and delights. For example, the version of Microsoft Word I'm using to write this article includes the "Send a Smile" feature at the upper right, which connects me directly to the product team (Figure 1).

Figure 1. Microsoft Word's "Send a Smile" feature, which sends feedback to the product team.


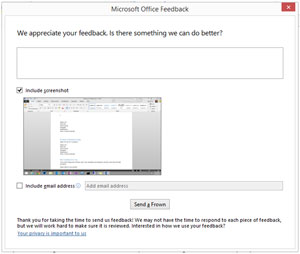

Clicking on the "Send a Frown" button yields a form meant to elicit more detail from the user, even including an optional screenshot, as shown in Figure 2.


Figure 2. The opposite of Sending a Smile, sending a frown comes with an optional complaint form.


Sophisticated feedback features like the ones in Word may even provide a contextual snapshot of what the user was doing at the time the feedback was provided. This could be a call trace, a click stream, a screenshot or some other measured quantity to accompany the freeform feedback the user was happy -- or irritated -- enough to send.

Once it's sent home via a service endpoint or some other means, feedback data can be aggregated, sliced, diced, reported on and visualized. Feedback data can reveal what your users struggle with, what features they find most useful or confusing, and where they spend the most time when using your software.
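As a rough illustration of that kind of slicing, here is a minimal Python sketch that counts "frowns" per feature. The `Feedback` record, its field names and the sample data are all hypothetical; a real feedback pipeline would define its own schema and read from wherever the data lands.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Feedback:
    """One piece of in-product feedback (hypothetical schema)."""
    sentiment: str      # "smile" or "frown"
    feature: str        # area of the product the user was in
    comment: str = ""   # optional freeform text


def frowns_by_feature(items):
    """Count frowns per feature, most-frowned-upon feature first."""
    counts = Counter(f.feature for f in items if f.sentiment == "frown")
    return counts.most_common()


# Made-up sample data, for illustration only.
sample = [
    Feedback("frown", "sorting", "Can't find sort by due date"),
    Feedback("smile", "tagging", "Love the tags"),
    Feedback("frown", "sorting"),
    Feedback("frown", "tagging", "Too many clicks"),
]

report = frowns_by_feature(sample)
print(report)
```

Even a report this simple points a team at the feature that frustrates users most, which is often enough to reorder a backlog.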

With a connection to the software's creator only a click or two away, users often provide more data than they would if they were sending an e-mail or filling out a contact form. This necessitates a mechanism for receiving, managing and acting on the feedback received; without one, the volume can overwhelm recipients and frustrate users, who quickly feel neglected by inaction.

Ignoring the feedback data is no different than not collecting it at all. To best take advantage of this powerful feedback mechanism, ensure your organization is ready to receive the information from users in a seamless, actionable way. If developed and managed well, an in-product feedback feature can be the most valuable capability you add to your software.

Product Telemetry

Empirically "pure" feedback can be gained when measuring the runtime behavior of software in production, then sending that data home for analysis. This type of data is known as telemetry. Questions that can be answered with software telemetry are numerous, and run the gamut from, "What code is leaking memory?" to "What features are most and least popular?" to "What sequence of actions did the user take?"
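To make the idea concrete, the following is a minimal sketch of recording telemetry events in Python. It is not any particular vendor's API: the `TelemetryBuffer` class, the event names and the JSON-lines payload format are assumptions chosen for illustration, and the step that actually sends the payload home is left out.

```python
import json
import time


class TelemetryBuffer:
    """Collects events in memory (hypothetical helper, not a real SDK).

    A real client would send the flushed payload home in batches.
    """

    def __init__(self, clock=time.time):
        self._clock = clock      # injectable so tests can fix the timestamp
        self._events = []

    def track(self, name, **properties):
        """Record one named event with arbitrary properties."""
        self._events.append(
            {"name": name, "ts": self._clock(), "props": properties}
        )

    def flush(self):
        """Return pending events as JSON lines and clear the buffer."""
        payload = "\n".join(json.dumps(e, sort_keys=True) for e in self._events)
        self._events = []
        return payload


telemetry = TelemetryBuffer()
telemetry.track("task_created", tagged=True)
telemetry.track("list_sorted", key="due_date")
print(telemetry.flush())
```

Buffering events and shipping them in batches is one common design choice; it keeps the measurement from slowing down the feature being measured.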

The maturing industry of software analytics is all about using telemetry data to help improve products and experiences. Numerous startups are sprouting up to help developers see their software differently through analysis of data collected when the software is running. Collecting and reporting on telemetry data is now mainstream and has various segments within which developers may focus.

Application Performance Monitoring (APM)

Monitoring and managing software performance and availability through data is the idea behind Application Performance Monitoring. APM provides data about the runtime characteristics of software in production environments, and may help find performance issues or provide feature insights.

Contemporary APM solutions often work without the need to write code. Simply by adding a NuGet package or including some JavaScript on a Web page, production software can report back about its performance and how it behaves at production runtime.

Logging and Analysis

There are more open source and commercial logging frameworks available than pebbles on a beach. Using them to gain insights into running applications is well understood, but new ways of dynamically adding logging support to running applications mean an application may be instrumented or augmented with new capabilities by a just-in-time compiler at runtime.

With the ubiquitous availability of "big data" analysis tools and services, there's no real excuse for failing to collect and inspect data generated from applications in the wild. Using log data and simple reporting tools can make it easy to learn where crashes occur, when memory leaks, and what code is least and most used.

Logging and analyzing feature data can show, for example, that users overwhelmingly choose default values on forms when installing and first launching software. For developers, this means it's important to pay attention to configuring your application's defaults.
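A finding like that can fall out of a very small script. The sketch below assumes a hypothetical log format of space-separated `key=value` pairs, with a `default` key recording whether the user left a form field at its default; the field names and sample lines are made up.

```python
def default_acceptance_rate(lines):
    """Fraction of logged form fields that were left at their default value."""
    total = accepted = 0
    for line in lines:
        # Parse "key=value" pairs, ignoring any token without an "=".
        fields = dict(
            part.split("=", 1) for part in line.split() if "=" in part
        )
        if "default" not in fields:
            continue
        total += 1
        accepted += fields["default"] == "true"
    return accepted / total if total else 0.0


# Made-up sample log, for illustration only.
sample_log = [
    "field=install_dir default=true",
    "field=language default=true",
    "field=theme default=false",
    "field=telemetry default=true",
]

print(default_acceptance_rate(sample_log))
```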

Feature Experimentation

A simple example of live feature experimentation is A/B testing. In this automated feedback system, two or more feature implementations are compared through experimental means. An experiment on a Web site might issue one menu layout to 50 percent of site users, and a different menu layout to the other 50 percent.
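One common way to split users, sketched here in Python, is to hash a stable user identifier so each user always lands in the same group. The function name, the experiment label and the user ID format are assumptions for illustration; experimentation platforms each have their own assignment schemes.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("A", "B")):
    """Deterministically map a user to a variant using a stable hash.

    Including the experiment name in the hash means the same user can
    land in different groups for different experiments.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


# The same user sees the same menu layout on every visit.
print(assign_variant("user-42", "menu-layout"))
```

Hashing avoids storing an assignment table, and with two variants it yields a split that is close to 50/50 over a large population.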

I recently spoke to a developer who used this technique to learn which format his Web site's users preferred for listing product catalog search results. There was a clear winner, because users of the preferred search listings made more purchases. He learned that simple changes to layout resulted in more revenue.

Observing the actions of the different experiment populations will often show a preference for one option over another, even on seemingly minor UX variations. Although there is a cost to structuring and executing software experiments, the insights you earn may revolutionize your business.
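Comparing the populations can start with something as plain as a conversion rate per variant. The sketch below uses made-up observations and a hypothetical `(variant, purchased)` record shape; a real experiment would also apply a statistical significance test before declaring a winner.

```python
def conversion_rates(observations):
    """Map each variant to its share of visits that ended in a purchase.

    observations: iterable of (variant, purchased) pairs.
    """
    visits, purchases = {}, {}
    for variant, purchased in observations:
        visits[variant] = visits.get(variant, 0) + 1
        purchases[variant] = purchases.get(variant, 0) + int(purchased)
    return {v: purchases[v] / visits[v] for v in visits}


# Made-up sample data, for illustration only.
observations = [
    ("A", False), ("A", True), ("A", False), ("A", False),
    ("B", True), ("B", False), ("B", True), ("B", False),
]

rates = conversion_rates(observations)
print(rates)
```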

Arguably the most accurate and empirically correct feedback one gets is observing what actually happens when software is running in production. What's more important, after all: the knowledge that code is well factored, or the discovery that it's never used?

How Does It Play With Mom?

If you create software, keep a demo handy. Be able to show your wares at a moment's notice, and learn how to tell the story when you do so. Actively listen to people's responses, and practice guiding the conversation where you need it to be.

Try an informal User Study. Recruit a co-worker or three to complete a task using your software. Practice being a good interviewer and your User Studies will bear even more fruit.

Watch your Mom try to use your fledgling app; if it's simple and intuitive enough for her, chances are you're on the right path.

Measuring software's behavior and usage in production is a core design discipline. Feedback features in your website or app are just the beginning. Automated instrumentation solutions are increasingly common, and any one of them provides more data than you have with nothing.

Whether asking, "What do you think?" or measuring what people actually do, numerous techniques exist to get feedback and measured insights about software. Try some. Acting on what you learn is crucial in realizing true business agility.

About the Author

David Starr is a Senior Program Manager at Microsoft, where he helps make tools for agile teams using Visual Studio. He has also been Chief Craftsman for Scrum.org, a five-time Visual Studio ALM MVP, a Pluralsight technical instructor, and an agile coach.