News

Keyboardless Coding? GitHub Copilot 'AI Pair Programmer' Gets Voice Commands

Got a good microphone? As predicted here many months ago, the GitHub Copilot "AI pair programmer" coding assistant has introduced voice commands, though only as an experiment for now.

" 'Hey, GitHub!' enables voice-based interaction with GitHub Copilot, enabling the benefits of an AI pair programmer while reducing the need for a keyboard," Microsoft-owned GitHub said last week in a recap of the company's GitHub Universe 2022 event.

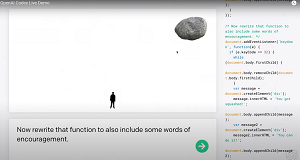

GitHub Copilot (source: GitHub).

This was predicted here in May during Microsoft's Build developer conference in the Visual Studio Magazine article, "Microsoft Build Conference Heralds Era of AI-Assisted Software Development." That article said: "Heads are still exploding as Copilot, starting with relatively simple IntelliSense-like code completion -- even whole-line code completion -- has been improved to the point where normal-language typed commands (voice is surely coming) can create entire projects such as simple games."

Turning Words into Code (source: OpenAI).

GitHub Copilot shook up the software development space last year when it debuted with cutting-edge AI natural language processing tech that goes way beyond IntelliSense -- and even Microsoft's improvement on that, IntelliCode. Copilot can be used in both the Visual Studio IDE and the Visual Studio Code editor, but the new voice functionality only works in the latter at this early stage.

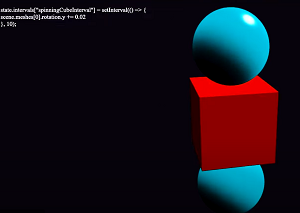

GitHub Copilot's capabilities are made possible by Codex, a machine learning model created by AI research and development company (and Microsoft partner) OpenAI that can translate natural language commands into code in more than a dozen programming languages. A May 25 video shows a programmer using natural language commands with Codex to quickly build 3D scenes. Codex translates the commands into Babylon.js code (Babylon.js is a 3D rendering engine that runs in the browser), with commands like "add teal spheres above and below the cube" and then "make the cube spin."

Using Codex Natural Language to Build 3D Scenes (source: Microsoft).

GitHub has continually improved the product through a long preview and many updates, and the voice command experiment is the latest of these.

'Hey GitHub' in Action (source: Microsoft).

In addition to generating code for line completion, voice commands can be used for navigation ("go to line 34"), for controlling the IDE ("run the program") and for code summarization. For example, a developer can ask GitHub Copilot to explain lines 3-10 and get a summary of what that code does.

"If GitHub Copilot is our pair programmer, why can't we talk to it?" GitHub said last week. "That's exactly what the GitHub Next team is working towards. 'Hey, GitHub!' enables voice-based interaction with GitHub Copilot and more. With the power of your voice, we're excited about the potential to bring the benefits of GitHub Copilot to even more developers, including developers who have difficulty typing using their hands. 'Hey, GitHub!' only reduces the need for a keyboard when coding within VS Code for now, but we hope to expand its capabilities through further research and testing."

To take part in the experiment, developers can apply to join the waitlist.

About the Author

David Ramel is an editor and writer at Converge 360.